Ruby on Medicine: Counting Word Frequency in a File

Key Takeaways

- Ruby can be effectively used to count the frequency of words in a file, which can be helpful in analyzing large text files and identifying common themes, potential mistakes, and misspellings.

- Ruby hashes, representing a collection of key-value pairs, are a crucial tool for this task. In the context of word frequency, the ‘key’ is the word and the ‘value’ is the number of occurrences of the word in the file.

- The Ruby script developed in the tutorial reads a file, converts all words to lowercase, scans for words between 3 and 16 letters long, counts their frequency, and writes the results to a new file, sorted alphabetically.

Welcome to a new article in SitePoint’s Ruby on Medicine series, where I show how the Ruby programming language can be applied to tasks related to the medical domain.

Newbie, intermediate, and advanced Ruby programmers can benefit from this series, as can health and medical researchers and practitioners who want to learn the Ruby programming language and apply it to their domain.

In this article, I’m going to show you how we can use Ruby to count how often each word occurs in a file. Counting word frequencies comes in handy with large text files, such as the OMIM® – Online Mendelian Inheritance in Man® file, which we worked with in the last article. Going through the list of words and their frequencies gives us a better sense of what the document is about. It can also help pinpoint mistakes and misspellings, especially when we have a dictionary to compare against. For instance, when a word from the dictionary is not listed in your output, that may indicate the word was misspelled, or that abbreviations were used instead of the word itself.

In this article, the file we will be working with is the OMIM® – Online Mendelian Inheritance in Man® file. Let’s get started.

Get the OMIM® File

I talked about how to obtain the OMIM® file in my last article: Ruby on Medicine: Counting Real Words with Ruby, but I will repeat it here, just in case you don’t want to go back and forth between articles.

The OMIM® file can be obtained by following these steps:

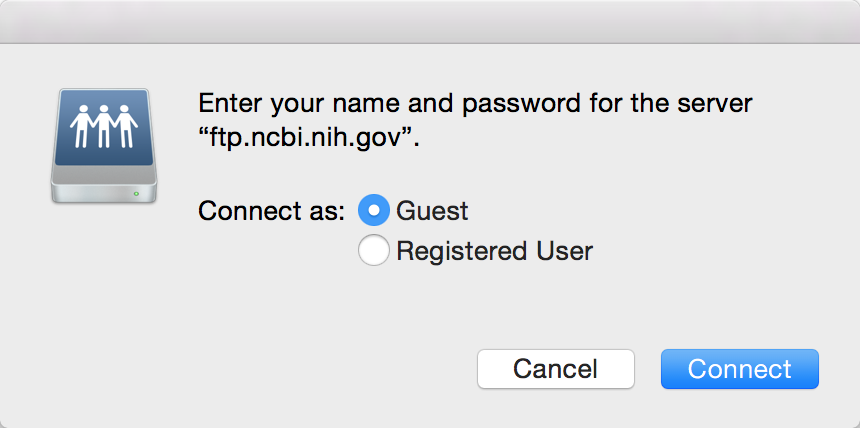

Go to the anonymous FTP address ftp://ftp.ncbi.nih.gov. A dialog box similar to the following should appear:

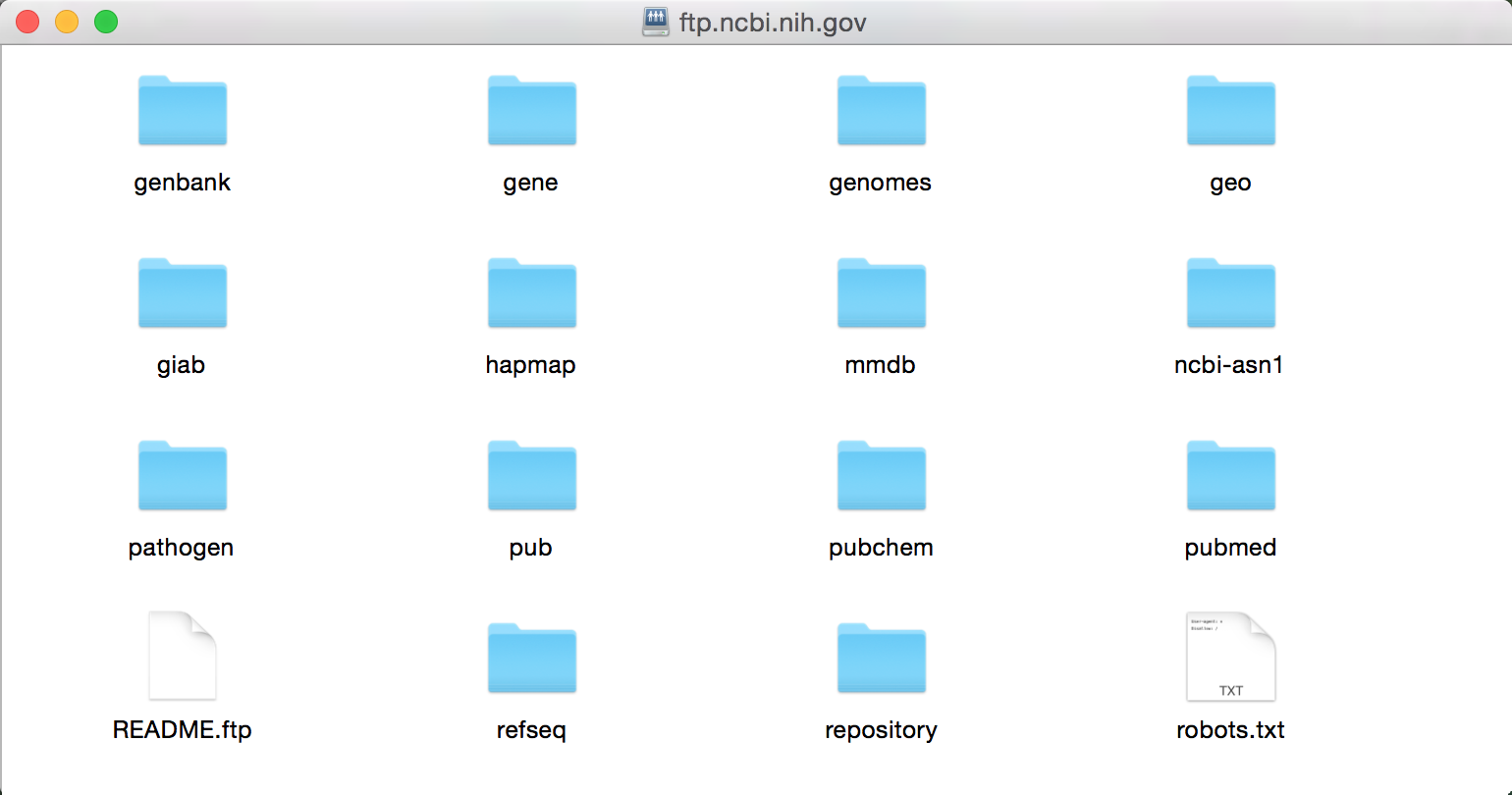

Choose Guest beside Connect as:, and then click the Connect button. You should then see the server’s directory listing.

The text file we need is the omim.txt.Z file (66.3 MB), which can be found in the /repository/OMIM/ARCHIVE directory.

Decompress the file (a .Z file can be decompressed with uncompress or gunzip) to get omim.txt (151.2 MB).

Hashes

Since we will be checking the words in a file, and counting the frequency of occurrence of each word, hashes will be very helpful.

But, what are Ruby hashes?

Hashes represent a collection of key-value pairs. In our case, each pair would look as follows:

"word" => frequency

where word is a word in the file, and frequency is the number of times that word occurs in the file.

A hash is thus much like an array, except that instead of integer indices it uses keys, which can be objects of any type.

Creating a hash in Ruby is very simple. The following creates an empty hash:

Hash.new

If you want to create a hash with a default value, you can do the following:

Hash.new(0)

The above hash has a default value of 0. This means that if you try to access a key that is not present in the hash, the default value is returned instead. To illustrate, both puts calls in the following script print 0:

frequency = Hash.new(0)

puts "#{frequency[0]}"

puts "#{frequency[653]}"

For more information about Ruby hashes, you can read this article and the docs.

Counting the Number of Occurrences

In this section, we will be building the Ruby program that will check the frequency of occurrence of each word in the file, and store the results in an output file.

The first thing we want to do is create a new hash, and assign it the default value 0:

frequency = Hash.new(0)

We want to store the results in an output file, which we can open with File.open() in w (write) mode:

output_file = File.open('wordfrequency.txt', 'w')

The input to the operation is the OMIM® file, which in our case is omim.txt. We open it for reading as follows:

input_file = File.open('omim.txt', 'r')
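Files opened with File.open as above stay open until close is called on them. A common Ruby idiom, sketched below, is to pass a block to File.open instead; the file is then closed automatically when the block finishes, even if an error is raised inside it. The file name sample.txt is hypothetical, and the sketch creates the file first so it can run on its own.

```ruby
# Write a small sample file (sample.txt is a hypothetical name used only for this sketch)
File.open('sample.txt', 'w') do |file|
  file.puts 'Gene gene PROTEIN'
end # the file is closed here automatically

# Read it back; File.open returns the block's value and closes the file afterwards
text = File.open('sample.txt', 'r') { |file| file.read }
puts text # prints Gene gene PROTEIN

# Clean up the sample file
File.delete('sample.txt')
```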

As we are looking through the file for words, the method we need is String#scan(pattern). The Ruby documentation says the following about this method:

scan iterates through *str*, matching the pattern (which may be a Regexp or a String). For each match, a result is generated and either added to the result array or passed to the block. If the pattern contains no groups, each individual result consists of the matched string, $&. If the pattern contains groups, each individual result is itself an array containing one entry per group.
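The three cases in that description (no groups, a group, and a block) can be seen side by side in this short sketch on a made-up sentence:

```ruby
text = "BRCA1 and BRCA2 are genes"

# Without groups: each result is the whole matched string
p text.scan(/BRCA\d/)   # prints ["BRCA1", "BRCA2"]

# With a group: each result is an array holding one entry per group
p text.scan(/BRCA(\d)/) # prints [["1"], ["2"]]

# With a block: each match is passed to the block instead of being collected
text.scan(/BRCA\d/) { |match| puts "found #{match}" }
```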

When we talk about pattern matching, in this case matching the words in the file, regular expressions (regex) come in handy. I have talked about regular expressions in another article: Ruby on Medicine: Hunting For The Gene Sequence.

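The main regex feature we need in this tutorial is \b, the word boundary. It matches a position (not a character) between a word character and a non-word character, or at the start or end of the string next to a word character, which lets us match whole words.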

The following examples show how the word boundary works:

\bhat\b

This regex matches hat in red hat.

If we use only one boundary, as follows:

\bhat

This matches hat at the beginning of a word, such as in hatrat.

And to match hat at the end of a word, such as in rathat, we can use this regex:

hat\b
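To compare the three patterns side by side, this sketch splits a made-up string into words and uses grep to keep the words that each pattern matches:

```ruby
text = "red hat hatrat rathat that"
words = text.split

# \bhat\b: "hat" as a whole word
p words.grep(/\bhat\b/) # prints ["hat"]

# \bhat: "hat" at the start of a word
p words.grep(/\bhat/)   # prints ["hat", "hatrat"]

# hat\b: "hat" at the end of a word
p words.grep(/hat\b/)   # prints ["hat", "rathat", "that"]
```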

Putting it all together, here is the script that counts how often each word occurs in the file:

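frequency = Hash.new(0)
input_file = File.open('omim.txt', 'r')
output_file = File.open('wordfrequency.txt', 'w')
# Lowercase the whole text, then count every word that is 3 to 16 letters long
input_file.read.downcase.scan(/\b[a-z]{3,16}\b/) { |word| frequency[word] += 1 }
# Write one "word => count" line per word, in alphabetical order
frequency.keys.sort.each { |key| output_file.print key, ' => ', frequency[key], "\n" }
# Close both files so the output is fully written to disk
input_file.close
output_file.close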

Before I show you the output, I just want to quickly clarify some points in the above script.

The downcase method returns a copy of the string with all uppercase letters replaced by their lowercase counterparts. We use it here so that words match regardless of case; for example, Gene and gene are counted as the same word.

The regular expression /\b[a-z]{3,16}\b/ matches whole words that are between 3 and 16 letters long. This skips short words of little interest, such as if, or, and on. Words longer than 16 letters may be gene sequences, which we do not want to count as words.

Finally, sort orders the keys (i.e., the words) alphabetically before they are written to the output file.
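As a quick check of what the pattern keeps and drops, here it is applied to a made-up sentence: two-letter words and a 20-letter run of DNA letters are both skipped, and punctuation is never part of a match.

```ruby
text = "If the BRCA gene is mutated, ACGTACGTACGTACGTACGT may appear on the report."

# Same pattern as the script: whole words of 3 to 16 lowercase letters
words = text.downcase.scan(/\b[a-z]{3,16}\b/)
p words # prints ["the", "brca", "gene", "mutated", "may", "appear", "the", "report"]
```

The 20-letter run is not matched at all (not even in part), because the pattern needs a word boundary on both sides and there is none inside the run.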

Output

Running the script above on the OMIM® file generates the following output file, which lists each word and its frequency of occurrence: wordfrequency.txt (2.37 MB)

In the list, you may notice some words with very low frequencies. In a large text file like OMIM®, what would that indicate? Are they meaningless words? Misspelled words? What do you think?
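One way to start investigating is to pull out the words that occur only once. The sketch below uses a small, hypothetical frequency hash in place of the real OMIM® results.

```ruby
# Hypothetical counts standing in for the real results
frequency = { "gene" => 120, "protien" => 1, "protein" => 85, "autosomal" => 40, "heterozygus" => 1 }

# Words seen exactly once are candidates for misspellings or rare terms
rare_words = frequency.select { |_word, count| count == 1 }.keys.sort
p rare_words # prints ["heterozygus", "protien"]
```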

The last two articles in this series covered how to use regular expressions to count specific words and all words, respectively. My hope is that these two examples give you tools to apply this technique in your own domain.

Frequently Asked Questions (FAQs) about Ruby on Medicine: Counting Word Frequency in a File

How does Ruby handle word frequency in a file?

A common approach combines several methods. The first step is to read the file, for example with File.read. The text is then split into an array of words using split, and inject builds a hash in which each word is a key and its frequency is the value. Finally, sort_by sorts the word-count pairs by frequency (returning an array of pairs), and reverse puts the most frequent words first. The script in this article takes a slightly different route: it uses scan with a regular expression to pick out the words, and sorts the results alphabetically.
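A minimal sketch of the split, inject, sort_by, and reverse approach described in this answer, run on a made-up string rather than a file:

```ruby
text = "the gene the protein the gene"

# Split on whitespace, then build a word => count hash with inject
frequency = text.split.inject(Hash.new(0)) do |counts, word|
  counts[word] += 1
  counts # inject needs the accumulator returned from every iteration
end

# sort_by returns an array of [word, count] pairs; reverse puts the highest counts first
sorted = frequency.sort_by { |_word, count| count }.reverse
p sorted # prints [["the", 3], ["gene", 2], ["protein", 1]]
```

Note that sort_by is not guaranteed to be stable, so words with equal counts may come out in any order.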

Can I use Ruby to analyze text files other than medical documents?

Absolutely. While the article focuses on medical documents, the Ruby code provided can be used to analyze any text file: it reads a file, breaks it down into individual words, and counts the frequency of each word.

How can I modify the Ruby code to ignore certain words when counting frequency?

To ignore certain words when counting frequency, you can add a condition inside the block that counts the words (the scan block in the script above, or the inject block in the approach described earlier). The condition checks whether the current word is in a list of words to ignore; if it is, the word is skipped without being added to the hash.
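A sketch of this idea applied to the article’s scan-based counting. The stop-word list (STOP_WORDS) is hypothetical; in practice you would supply your own.

```ruby
require 'set'

# Hypothetical list of words to leave out of the counts
STOP_WORDS = Set["the", "and", "with"]

text = "The gene and the protein interact with the gene"
frequency = Hash.new(0)
text.downcase.scan(/\b[a-z]{3,16}\b/) do |word|
  next if STOP_WORDS.include?(word) # skip ignored words without counting them
  frequency[word] += 1
end
# frequency now holds: gene => 2, protein => 1, interact => 1
```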

What if I want to count the frequency of phrases, not just individual words?

Counting the frequency of phrases involves a slightly more complex process. Instead of splitting the text into individual words, you would need to split it into phrases. This could be done by defining a phrase as a certain number of words, or by using punctuation or other markers to determine where one phrase ends and another begins.
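A minimal sketch of the first option, assuming a phrase is simply two consecutive words (a bigram). It uses each_cons to slide a two-word window over the word list.

```ruby
text = "Autosomal dominant inheritance and autosomal dominant disease"

# Treat a "phrase" as two consecutive words; change 2 to count longer phrases
words = text.downcase.scan(/[a-z]+/)
phrase_frequency = Hash.new(0)
words.each_cons(2) { |pair| phrase_frequency[pair.join(' ')] += 1 }

puts phrase_frequency["autosomal dominant"] # prints 2
```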

How can I use this Ruby code in a larger project?

This Ruby code can be incorporated into a larger project by defining it as a method and calling that method when needed. The method could take a file path as an argument, read the file, count the word frequency, and return the sorted hash. This would allow you to analyze text files as part of a larger Ruby application.
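One way this could look, as a sketch: word_frequency is a hypothetical method name, and the min_length and max_length keyword arguments are illustrative additions. Because Hash#sort returns an array, the method returns [word, count] pairs in alphabetical order rather than a hash.

```ruby
# Hypothetical helper wrapping the article's logic
def word_frequency(path, min_length: 3, max_length: 16)
  frequency = Hash.new(0)
  pattern = /\b[a-z]{#{min_length},#{max_length}}\b/
  File.read(path).downcase.scan(pattern) { |word| frequency[word] += 1 }
  frequency.sort # array of [word, count] pairs, sorted by word
end

# Usage with a small hypothetical sample file
File.write('sample.txt', 'Gene GENE protein is')
result = word_frequency('sample.txt')
p result # prints [["gene", 2], ["protein", 1]]
File.delete('sample.txt')
```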

Can I use this code to analyze web pages or other online text?

Yes, with some modifications. Instead of reading a file, you would need to fetch the web page or online text. This could be done using a library like ‘open-uri’. Once you have the text, you can use the same methods to split it into words, count the frequency, and sort the results.

How can I visualize the results of the word frequency analysis?

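There are several ways to visualize the results of the word frequency analysis. One simple way is to print the sorted results to the console. For a more visual representation, you could export the counts (for example, as CSV) and create a bar chart or word cloud with a charting tool. Note that matplotlib is a Python library, so it would be used from Python rather than directly from Ruby.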
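A simple text-only sketch of the console approach, using hypothetical counts: it sorts by count, keeps the top three words, and prints a bar of # characters for each.

```ruby
# Hypothetical counts standing in for real results
frequency = { "gene" => 12, "protein" => 7, "mutation" => 4, "allele" => 9 }

# Sort by descending count and keep the three most frequent words
top = frequency.sort_by { |_word, count| -count }.first(3)

# One line per word: padded word, a bar of # characters, then the count
top.each do |word, count|
  puts format('%-10s %s %d', word, '#' * count, count)
end
```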

How can I handle different cases and punctuation when counting word frequency?

To handle different cases, you can convert all words to lower case using the ‘downcase’ method before counting their frequency. To handle punctuation, you can use the ‘gsub’ method to remove punctuation characters from the words.
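A sketch of the downcase-plus-gsub approach on a made-up string. Punctuation is replaced with a space rather than removed outright, so that text like gene,protein does not fuse into a single word; one side effect is that hyphenated terms such as x-linked are split into separate words.

```ruby
text = "Gene, gene; GENE! (protein) gene,protein"

# Lowercase, turn anything that is not a letter, digit, or whitespace into a space, then split
words = text.downcase.gsub(/[^a-z0-9\s]/, ' ').split
p words # prints ["gene", "gene", "gene", "protein", "gene", "protein"]

# Count the cleaned words as before
frequency = Hash.new(0)
words.each { |word| frequency[word] += 1 }
puts frequency['gene'] # prints 4
```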

Can I use this code to analyze non-English text files?

Yes, with some changes. The regular expression used in this article, /\b[a-z]{3,16}\b/, only matches unaccented ASCII letters, so words containing letters such as é or ü will not be matched whole. You may also need to handle other language-specific features; for example, in languages with compound words, you may need to split words differently.
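As a sketch of one possible change (not the article’s method), the Unicode property \p{L}, which matches a letter in any script, can replace [a-z]. The 3-to-16 length check is then done separately, because \p{L}{3,16} on its own could match just part of a longer word. This assumes UTF-8 text and Ruby 2.4 or later, where downcase handles non-ASCII letters.

```ruby
text = "Über die Ursache der Krankheit: Gen über Gen"

# Match runs of letters in any script, then keep only words of 3 to 16 letters
words = text.downcase.scan(/\p{L}+/).select { |word| word.length.between?(3, 16) }
p words
```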

How can I improve the performance of the word frequency analysis?

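There are several ways to improve the performance of the word frequency analysis. One is to process the file line by line (for example, with File.foreach) instead of reading the entire file into memory at once. Another is to use parallel processing to analyze large files more quickly. Alternative data structures, such as a trie, may also reduce memory use when many words share prefixes, although a Ruby hash already provides fast lookups.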
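A sketch of the line-by-line approach, using a small hypothetical sample file. Because the pattern only matches letters and a word cannot span a newline, counting line by line should give the same counts as reading the whole file at once, while keeping memory use low.

```ruby
# Hypothetical sample file so the sketch runs on its own
File.write('sample.txt', "Gene expression\ngene PROTEIN gene\n")

frequency = Hash.new(0)
# File.foreach yields one line at a time, so the whole file is never held in memory
File.foreach('sample.txt') do |line|
  line.downcase.scan(/\b[a-z]{3,16}\b/) { |word| frequency[word] += 1 }
end
File.delete('sample.txt')

puts frequency['gene'] # prints 3
```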

Doctor and author focussed on leveraging machine/deep learning and image processing in medical image analysis.