As has been widely reported and discussed, Google has registered a trademark for “TrustRank.”

Behind the trademark, we find a research paper from the usual suspects at the Stanford Digital Libraries project. This paper discusses a method of weeding out spam from search results by using a “seed set” of trusted web sites.

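TrustRank, as described in the paper, uses a method similar to the PageRank algorithm to estimate how trustworthy a given web site is. Trust starts at the seed set and flows outward along links. Pages that the trusted seeds link to, directly or through a short chain of links, inherit some of that trust, while spam pages that no trusted site links to end up with little or none.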
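To make the idea concrete, here is a minimal Python sketch of TrustRank as a biased PageRank. The surfer who gets bored restarts only on a seed page instead of on a random page. This is a simplified reading of the idea, not the paper's exact formulation (which also covers how the seeds are chosen) and certainly not Google's code. The function name `trust_rank`, the example domains, and the defaults (`d=0.85`, 100 iterations) are assumptions chosen for illustration.

```python
def trust_rank(links, seeds, d=0.85, iterations=100):
    """Biased PageRank: bored surfers restart on a trusted seed page.

    links: dict mapping each page to the list of pages it links to.
    seeds: non-empty set of pages (assumed to appear in links) that a
        human editor has judged trustworthy.
    d: probability of following a link; 1 - d is the chance of restarting.
    """
    pages = set(links) | {q for targets in links.values() for q in targets}
    # Restart distribution: uniform over the seed set, zero everywhere else.
    restart = {p: (1.0 / len(seeds) if p in seeds else 0.0) for p in pages}
    trust = dict(restart)  # all trust starts on the seeds
    for _ in range(iterations):
        new = {p: (1 - d) * restart[p] for p in pages}
        for p in pages:
            targets = links.get(p, [])
            if targets:
                # Split this page's trust evenly across its outbound links.
                for q in targets:
                    new[q] += d * trust[p] / len(targets)
            else:
                # Dangling page: send its trust back to the seed set.
                for s in seeds:
                    new[s] += d * trust[p] / len(seeds)
        trust = new
    return trust


# Hypothetical example graph.
web = {
    "seed.example": ["news.example"],
    "news.example": ["blog.example"],
    "blog.example": ["seed.example"],
    "spam1.example": ["spam2.example"],
    "spam2.example": ["spam1.example", "news.example"],
}
scores = trust_rank(web, seeds={"seed.example"})
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page:15} {score:.3f}")
```

The two spam pages link to each other and to a legitimate page, but because no trusted page links to them, they finish with a trust score of zero.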

There is always interest in the SEM community when a search engine files for a patent or trademark, acquires a company with interesting technology, or when an individual remotely associated with the search engine publishes a research paper.

It’s important to retain a sense of perspective about these things, because all of the major search engines pay a lot of people to do research and invent stuff. A patent application, or even a granted patent, does not automatically mean that the search engine will implement the invention in its primary search results.

TrustRank appears to be a bit different, because trademarks actually have to be used in order to be maintained. I’ve heard the argument made that Google must plan to use TrustRank if they’ve registered a trademark. Does this mean that Google will implement something like the TrustRank algorithm described in this research paper? Possibly, or they may just be playing it safe while they decide what to do.

In any case, innovations like TrustRank are going to play a strong role in the future of web search. Whether search engines use a manually edited “seed list” of “good” web sites, user feedback, or other means, the search engines all have a strong incentive to reduce the amount of spam in their search results.

Since everyone else is speculating about how Google might implement TrustRank, I’ll toss out one idea. It may be a bad idea, of course. I’ll let those who actually have to deal with the practical implementation decide…

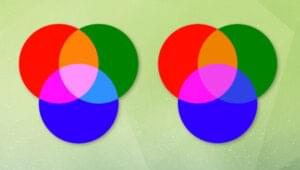

As discussed in the SitePoint SEM Kit (you can read about it in the sample chapter), the PageRank algorithm models a “random web surfer” who follows links at random but occasionally gets bored with his random clicking and starts over at a new page. PageRank captures this with a “damping factor”: the probability that the surfer keeps clicking links. The chance that our random surfer will ‘get bored’ is one minus the damping factor.
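For reference, a commonly used normalized form of the PageRank formula is shown below. Here $d$ is the damping factor (the original PageRank paper reports usually setting it to 0.85), $N$ is the total number of pages, $B(p)$ is the set of pages linking to $p$, and $L(q)$ is the number of outbound links on page $q$. The $(1-d)/N$ term is the share each page receives from surfers who get bored and jump to a random page.

```latex
PR(p) = \frac{1 - d}{N} + d \sum_{q \in B(p)} \frac{PR(q)}{L(q)}
```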

One possible way to implement TrustRank without throwing away PageRank would be to vary the damping factor page by page, based on the “trust” we have in each web page. For a page we have little trust in, we would adjust its damping factor so that our random surfer is more likely to ‘get bored’ on that page and jump elsewhere instead of following its links. Less trusted pages would then pass less PageRank along to the rest of the web. This would not penalize the sites they link to; it would simply reduce the benefit of such links, including internal links within the site itself.
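A minimal Python sketch of this per-page damping idea follows. It is purely hypothetical: the function name, the example pages, the trust values, and the rule that each page's damping factor is `d_max * trust` are all assumptions. Bored surfers restart on a uniformly random page, as in plain PageRank.

```python
def pagerank_with_trust(links, trust, d_max=0.85, iterations=100):
    """PageRank where each page gets its own damping factor.

    links: dict mapping each page to the list of pages it links to.
    trust: dict mapping pages to a trust value in [0, 1]; pages not
        listed are treated as fully trusted (1.0).
    Hypothetical rule: a page's damping factor is d_max * trust, so the
    surfer is more likely to get bored on a page we distrust.
    """
    pages = sorted(set(links) | {q for t in links.values() for q in t})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: 0.0 for p in pages}
        bored = 0.0  # PageRank carried by surfers who give up on a page
        for p in pages:
            targets = links.get(p, [])
            # Dangling pages (no links) always send the surfer elsewhere.
            d_p = d_max * trust.get(p, 1.0) if targets else 0.0
            bored += (1 - d_p) * rank[p]
            for q in targets:
                new[q] += d_p * rank[p] / len(targets)
        # Bored surfers restart on a page chosen uniformly at random.
        for p in pages:
            new[p] += bored / n
        rank = new
    return rank


# Hypothetical example: a shady site with an internal link to its own page2.
web = {
    "trusted.example": ["target.example"],
    "target.example": ["trusted.example"],
    "shady.example": ["target.example", "shady.example/page2"],
    "shady.example/page2": ["shady.example"],
}
plain = pagerank_with_trust(web, trust={})
scaled = pagerank_with_trust(
    web, trust={"shady.example": 0.2, "shady.example/page2": 0.2}
)
for page in sorted(web):
    print(f"{page:20} plain={plain[page]:.3f} scaled={scaled[page]:.3f}")
```

Lowering trust on the shady site cuts the PageRank that `shady.example/page2` receives through its internal link. Because the scores still sum to 1, the PageRank the distrusted pages no longer pass along is redistributed through the random restart, so other pages' scores can rise slightly; a real design would have to decide where that mass should go.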

What will Google actually do? Your guess is as good as mine, unless you’re one of the folks who believe they actually implement every process that they patent.

Stay tuned and watch this space for more fun and games with patents, algorithms, research papers, and other puzzling evidence.