In this article, we’re going to deploy an Airflow application in a Conda environment, then secure it by placing it behind Nginx and requesting an SSL certificate from Let’s Encrypt.

Airflow is a popular tool for defining, scheduling, and monitoring complex workflows. We can create Directed Acyclic Graphs (DAGs) to automate tasks across our work platforms, and because Airflow is open source, it has a community that supports it and improves it continuously.

This is a sponsored article by Vultr. Vultr is the world’s largest privately-held cloud computing platform. A favorite with developers, Vultr has served over 1.5 million customers across 185 countries with flexible, scalable, global Cloud Compute, Cloud GPU, Bare Metal, and Cloud Storage solutions. Learn more about Vultr.

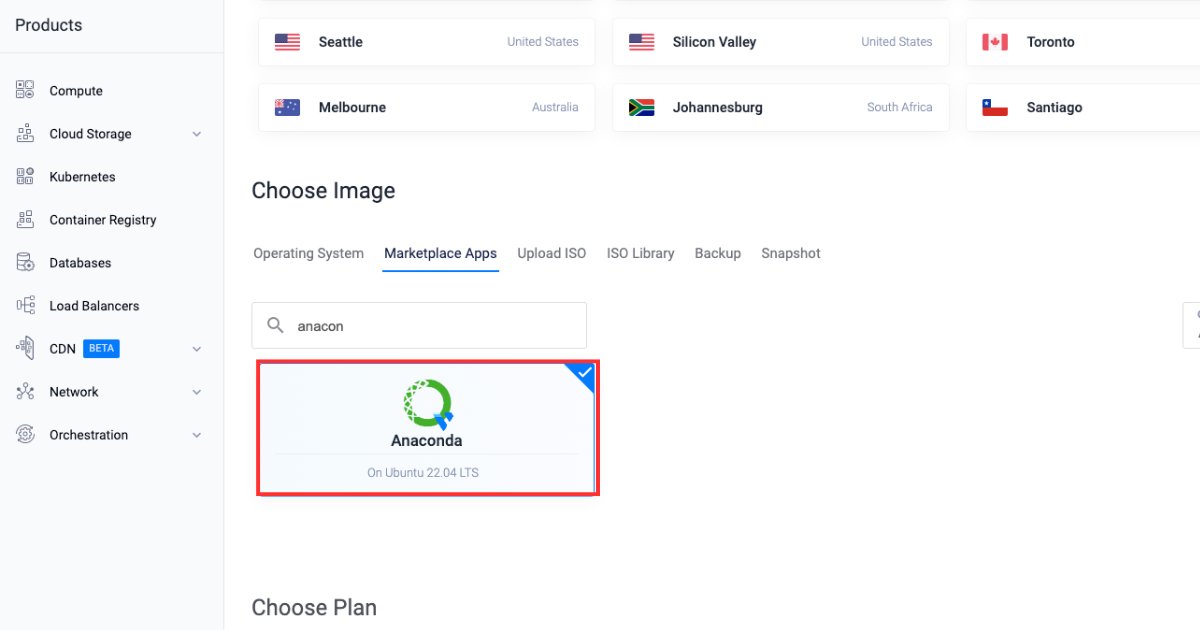

Deploying a Server on Vultr

Let’s start by deploying a Vultr server with the Anaconda marketplace application.

-

Sign up and log in to the Vultr Customer Portal.

-

Navigate to the Products page.

-

Select Compute from the side menu.

-

Click Deploy Server.

-

Select Cloud Compute as the server type.

-

Choose a Location.

-

Select Anaconda amongst marketplace applications.

-

Choose a Plan.

-

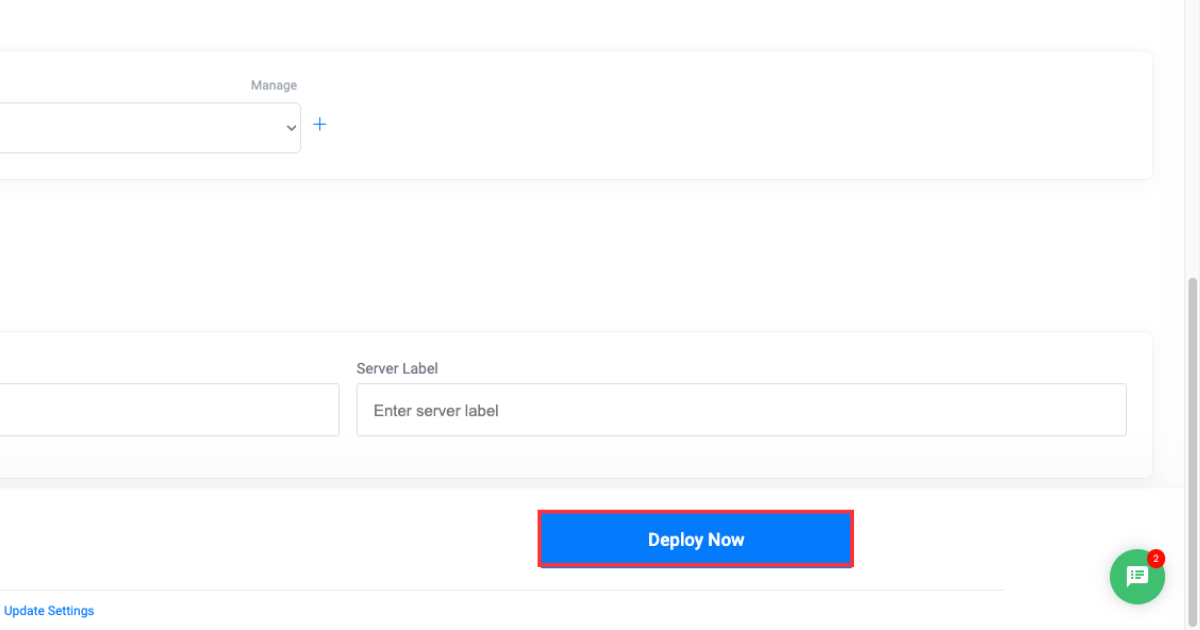

Select any more features as required in the “Additional Features” section.

-

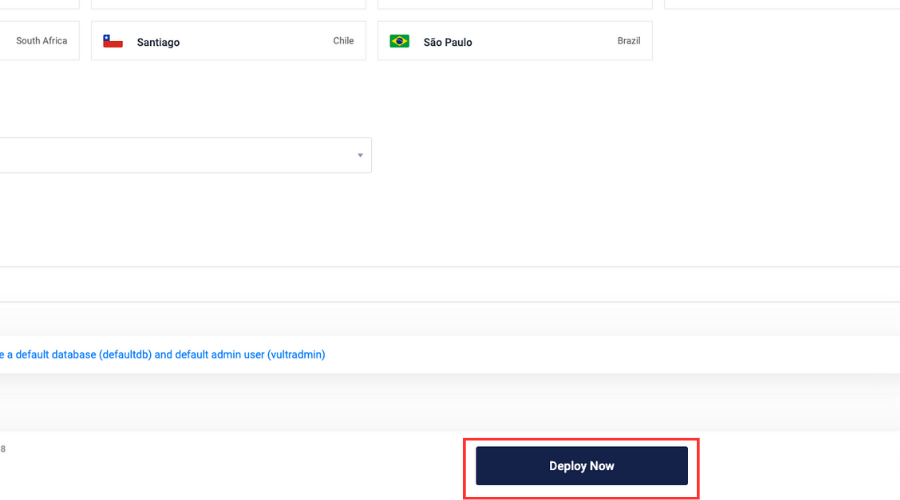

Click the Deploy Now button.

Creating a Vultr Managed Database

After deploying a Vultr server, we’ll deploy a Vultr-managed PostgreSQL database. We’ll also create two new databases in our database instance, which we’ll connect to our Airflow application later in this article.

-

Open the Vultr Customer Portal.

-

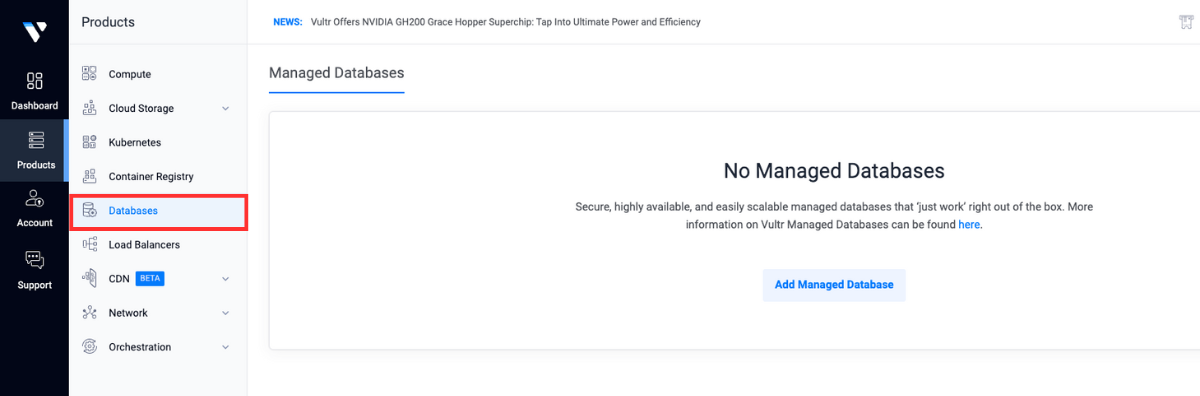

Click the Products menu group and navigate to Databases to create a PostgreSQL managed database.

-

Click Add Managed Databases.

-

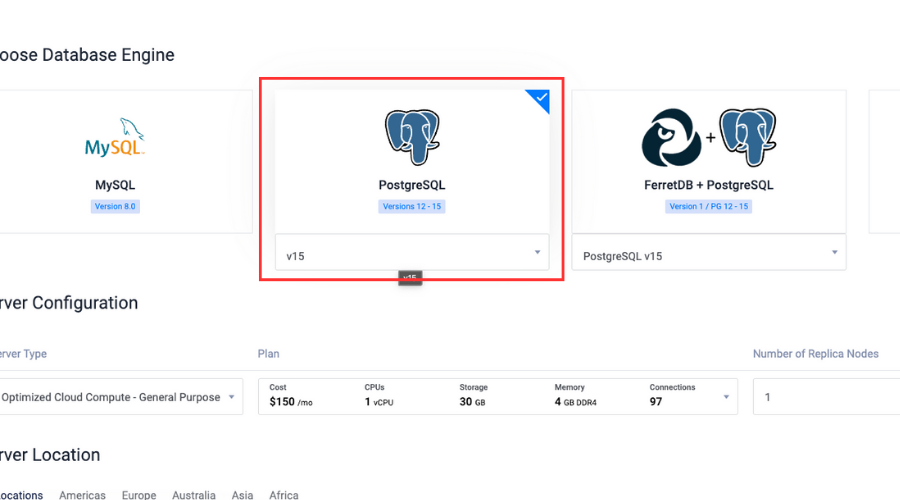

Select PostgreSQL with the latest version as the database engine.

-

Select Server Configuration and Server Location.

-

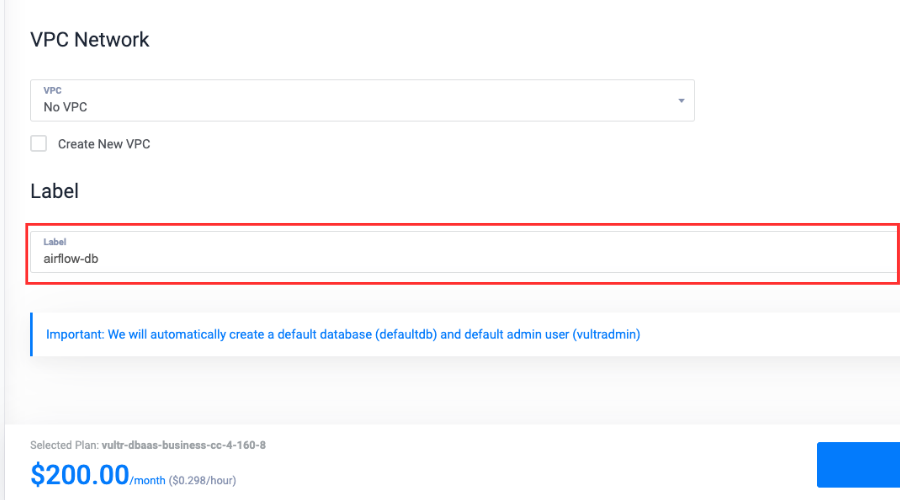

Write a Label for the service.

-

Click Deploy Now.

-

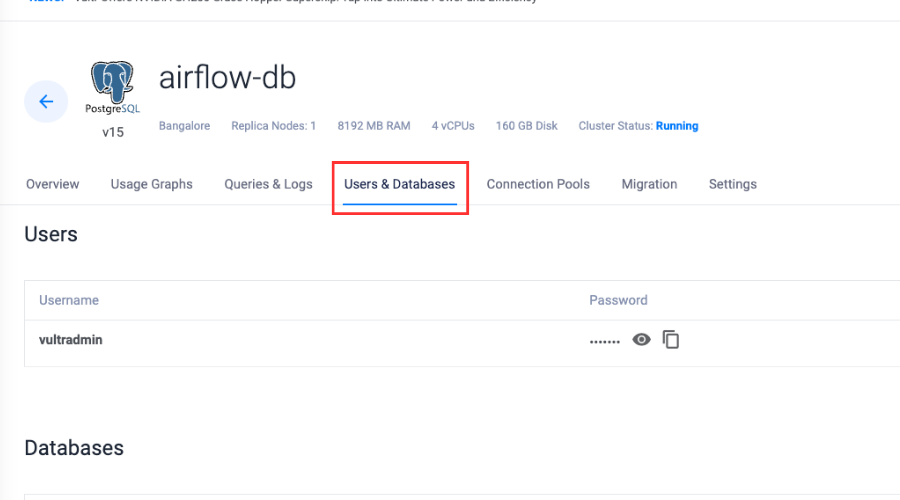

After the database is deployed, select Users & Databases.

-

Click Add New Database.

-

Type in a name, click Add Database and name it

airflow-pgsql. -

Repeat steps 9 and 10 to add another database in the same managed database and name it

airflow-celery.

Getting Started with Conda and Airflow

Now that we’ve created a Vultr-managed PostgreSQL instance, we’ll use the Vultr server to create a Conda environment and install the required dependencies.

-

Check for the Conda version:

$ conda --ver -

Create a Conda environment:

$ conda create -n airflow python=3.8 -

Activate the environment:

$ conda activate airflow -

Install Redis server:

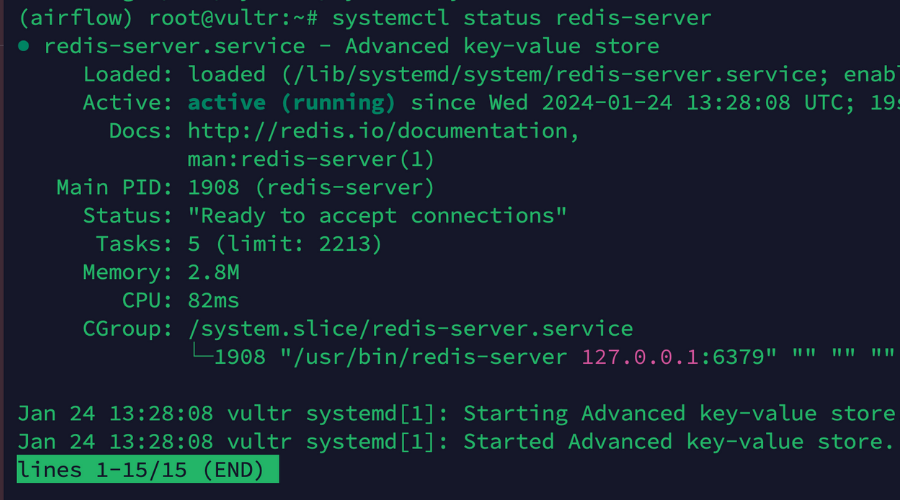

(airflow) $ apt install -y redis-server -

Enable the Redis server:

(airflow) $ sudo systemctl enable redis-server -

Check the status:

(airflow) $ sudo systemctl status redis-server

-

Install the Python package manager:

(airflow) $ conda install pip -

Install the required dependencies:

(airflow) $ pip install psycopg2-binary virtualenv redis -

Install Airflow in the Conda environment:

(airflow) $ pip install "apache-airflow[celery]==2.8.1" --constraint "https://raw.githubusercontent.com/apache/airflow/constraints-2.8.1/constraints-3.8.txt"

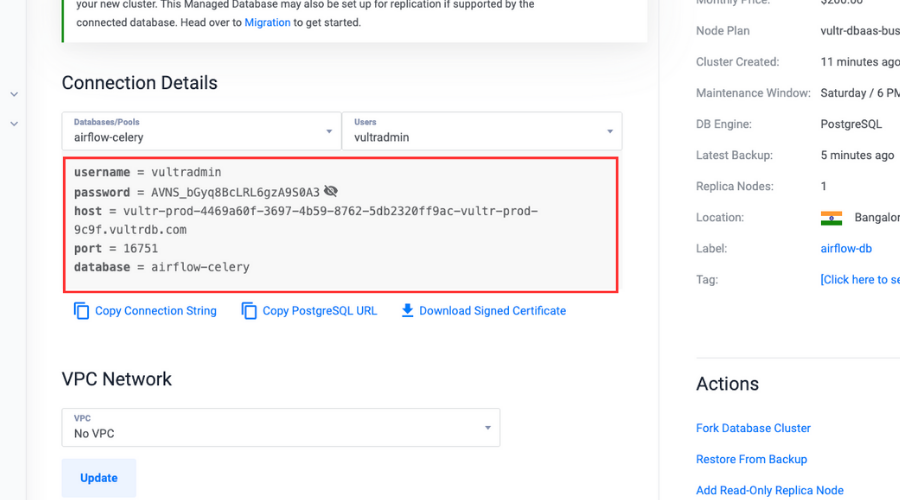
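The constraints URL in the last step encodes both the Airflow version and the Python major.minor version, so the two must match the environment we created. The sketch below shows one way to derive the URL instead of typing it by hand. The helper names `build_constraint_url` and `current_python_version` are hypothetical, introduced only for illustration; they are not part of Airflow, pip, or Conda.

```shell
# Sketch: derive the Airflow constraints URL from the two version numbers.
# build_constraint_url is a hypothetical helper, not an Airflow or pip command.
build_constraint_url() {
    # $1 = Airflow version (e.g. 2.8.1), $2 = Python major.minor (e.g. 3.8)
    printf 'https://raw.githubusercontent.com/apache/airflow/constraints-%s/constraints-%s.txt\n' "$1" "$2"
}

# Print the major.minor version of the Python interpreter on PATH,
# which is the Conda environment's interpreter once it is activated.
current_python_version() {
    python -c 'import sys; print("{}.{}".format(*sys.version_info[:2]))'
}

# Example use (prints the pip command instead of running it):
# echo pip install "apache-airflow[celery]==2.8.1" \
#     --constraint "$(build_constraint_url 2.8.1 "$(current_python_version)")"
```

Deriving the Python part from the active interpreter avoids a mismatch if the environment was created with a version other than 3.8.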

Connecting Airflow with Vultr Managed Database

With the environment prepared, let’s connect our Airflow application to the two databases we created earlier in our database instance, and make the necessary changes to the Airflow configuration to make our application production-ready.

-

Set environment variable for database connection:

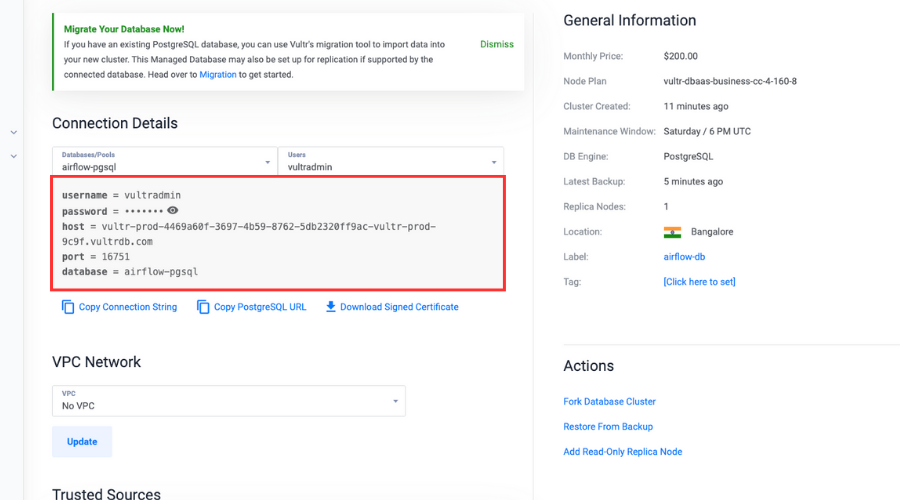

(airflow) $ export AIRFLOW__DATABASE__SQL_ALCHEMY_CONN="postgresql://user:password@hostname:port/db_name"Make sure to replace the

user,password,hostname, andportwith the actual values in the connection details section by selecting theairflow-pgsqldatabase. Replace thedb_namewithairflow-pgsql.

-

Initialize the metadata database.

We must initialize a metadata database for Airflow to create necessary tables and schema that stores information like DAGs and information related to our workflows:

(airflow) $ airflow db init -

Open the Airflow configuration file:

(airflow) $ sudo nano ~/airflow/airflow.cfg -

Scroll down and change the

executor:executor = CeleryExecutor -

Link the Vultr-managed PostgreSQL database, and change the value of

sql_alchemy_conn:sql_alchemy_conn = "postgresql://user:password@hostname:port/db_name"Make sure to replace the

user,password,hostname, and port with the actual values in the connection details section by selecting theairflow-pgsqldatabase. Replace thedb_namewithairflow-pgsql. -

Scroll down and change the worker and trigger log ports:

worker_log_server_port = 8794 trigger_log_server_port = 8795 -

Change the

broker_url:broker_url = redis://localhost:6379/0 -

Remove the

#and change theresult_backend:result_backend = db+postgresql://user:password@hostname:port/db_nameMake sure to replace the

user,password,hostname, andportwith the actual values in the connection details section by selecting theairflow-celerydatabase. Replace thedb_namewithairflow-celery.

-

Save and exit the file.

-

Create an Airflow user:

(airflow) $ airflow users create \n --username admin \n --firstname Peter \n --lastname Parker \n --role Admin \n --email spiderman@superhero.orgMake sure to replace all the variable values with the actual values.

Enter a password when prompted to set it for the user while accessing the dashboard.
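The settings edited in airflow.cfg above can also be expressed as environment variables, because Airflow reads any variable named `AIRFLOW__{SECTION}__{KEY}` and gives it precedence over the file. The following is a minimal sketch of that approach, not a replacement for the steps above. The `pg_url` helper is hypothetical, and every credential value, including the port, is a placeholder to be swapped for the values from the connection details section.

```shell
# Sketch: build both PostgreSQL URLs from one set of placeholder credentials.
# pg_url is a hypothetical helper: pg_url USER PASSWORD HOST PORT DB_NAME
pg_url() {
    printf 'postgresql://%s:%s@%s:%s/%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Placeholders only; replace with the values from the connection details section.
DB_USER="user"
DB_PASSWORD="password"
DB_HOST="hostname"
DB_PORT="5432"

# Same settings as the airflow.cfg edits, in environment-variable form.
export AIRFLOW__CORE__EXECUTOR="CeleryExecutor"
export AIRFLOW__DATABASE__SQL_ALCHEMY_CONN="$(pg_url "$DB_USER" "$DB_PASSWORD" "$DB_HOST" "$DB_PORT" airflow-pgsql)"
export AIRFLOW__CELERY__BROKER_URL="redis://localhost:6379/0"
export AIRFLOW__CELERY__RESULT_BACKEND="db+$(pg_url "$DB_USER" "$DB_PASSWORD" "$DB_HOST" "$DB_PORT" airflow-celery)"
```

Two caveats apply to this sketch. Variables exported in an interactive shell are not inherited by services started through systemd, so the airflow.cfg edits are still needed for background services. A password containing characters such as `@` or `/` would also need to be URL-encoded before being placed in the connection string.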

Daemonizing the Airflow Application

Now let’s daemonize our Airflow application so that it runs in the background and continues to run independently even when we close the terminal and log out.

These steps will also help us to create a persistent service for the Airflow webserver, scheduler, and celery workers.

-

View the

airflowpath:(airflow) $ which airflowCopy and paste the path into the clipboard.

-

Create an Airflow webserver service file:

(airflow) $ sudo nano /etc/systemd/system/airflow-webserver.service -

Paste the service configurations in the file.

airflow webserveris responsible for providing a web-based user interface that will allow us to interact and manage our workflows. These configurations will make a background running service for our Airflow webserver:[Unit] Description="Airflow Webserver" After=network.target [Service] User=example_user Group=example_user ExecStart=/home/example_user/.local/bin/airflow webserver [Install] WantedBy=multi-user.targetMake sure to replace

UserandGroupwith your actual non-root sudo user account details, and replace theExecStartpath with the actual Airflow path including the executable binary we copied earlier in the clipboard. -

Save and close the file.

-

Enable the

airflow-webserverservice, so that the webserver automatically starts up during the system boot process:(airflow) $ systemctl enable airflow-webserver -

Start the service:

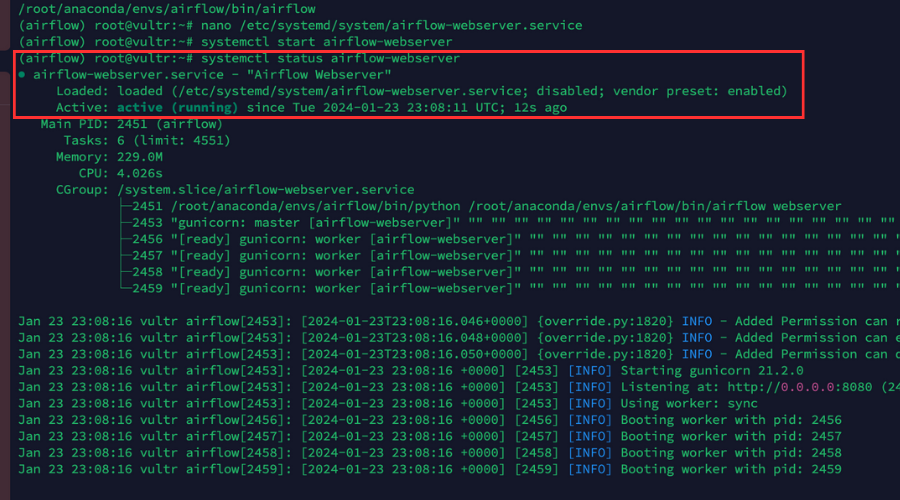

(airflow) $ sudo systemctl start airflow-webserver -

Make sure that the service is up and running:

(airflow) $ sudo systemctl status airflow-webserverOur output should appear like the one pictured below.

-

Create an Airflow Celery service file:

(airflow) $ sudo nano /etc/systemd/system/airflow-celery.service -

Paste the service configurations in the file.

airflow celery workerstarts a Celery worker. Celery is a distributed task queue that will allow us to distribute and execute tasks across multiple workers. The workers connect to our Redis server to receive and execute tasks:[Unit] Description="Airflow Celery" After=network.target [Service] User=example_user Group=example_user ExecStart=/home/example_user/.local/bin/airflow celery worker [Install] WantedBy=multi-user.targetMake sure to replace

UserandGroupwith your actual non-root sudo user account details, and replace theExecStartpath with the actual Airflow path including the executable binary we copied earlier in the clipboard. -

Save and close the file.

-

Enable the

airflow-celeryservice:(airflow) $ sudo systemctl enable airflow-celery -

Start the service:

(airflow) $ sudo systemctl start airflow-celery -

Make sure that the service is up and running:

(airflow) $ sudo systemctl status airflow-celery -

Create an Airflow scheduler service file:

(airflow) $ sudo nano /etc/systemd/system/airflow-scheduler.service -

Paste the service configurations in the file.

airflow scheduleris responsible for scheduling and triggering the DAGs and the tasks defined in them. It also checks the status of DAGs and tasks periodically:[Unit] Description="Airflow Scheduler" After=network.target [Service] User=example_user Group=example_user ExecStart=/home/example_user/.local/bin/airflow scheduler [Install] WantedBy=multi-user.targetMake sure to replace

UserandGroupwith your actual non-root sudo user account details, and replace theExecStartpath with the actual Airflow path including the executable binary we copied earlier in the clipboard. -

Save and close the file.

-

Enable the

airflow-schedulerservice:(airflow) $ sudo systemctl enable airflow-scheduler -

Start the service:

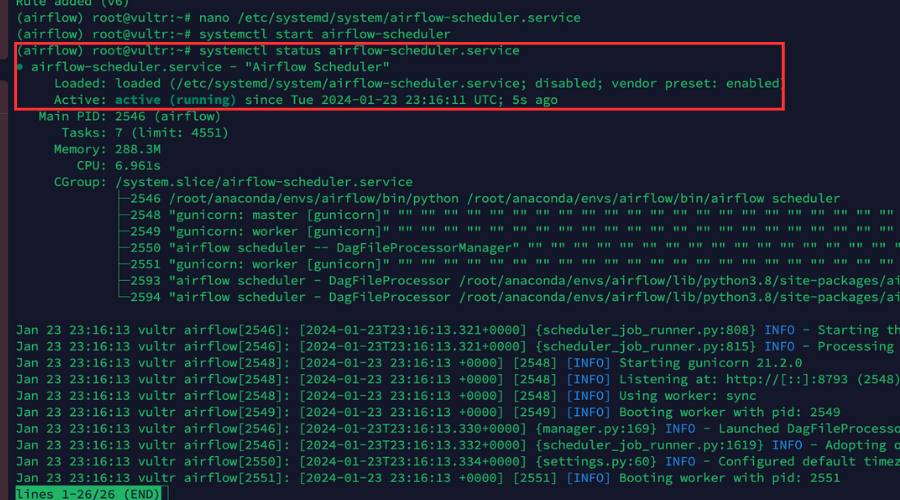

(airflow) $ sudo systemctl start airflow-scheduler -

Make sure that the service is up and running:

(airflow) $ sudo systemctl status airflow-schedulerOur output should appear like that pictured below.
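The three unit files above differ only in their description and in the Airflow subcommand they run, so they can be generated from one template. The sketch below assumes that layout. `render_airflow_unit` is a hypothetical helper written for this illustration, and `example_user` and the binary path are the same placeholders used in the steps above.

```shell
# Sketch: print a systemd unit for one Airflow component to stdout.
# render_airflow_unit is a hypothetical helper, not an Airflow or systemd command.
#   $1 = Airflow subcommand ("webserver", "scheduler", or "celery worker")
#   $2 = non-root service user (also used as the group)
#   $3 = full path printed by `which airflow`
render_airflow_unit() {
    cat <<EOF
[Unit]
Description="Airflow $1"
After=network.target

[Service]
User=$2
Group=$2
ExecStart=$3 $1

[Install]
WantedBy=multi-user.target
EOF
}

# Example use (writes the scheduler unit, then makes systemd re-read unit files):
# render_airflow_unit scheduler example_user /home/example_user/.local/bin/airflow \
#     | sudo tee /etc/systemd/system/airflow-scheduler.service
# sudo systemctl daemon-reload
```

Because the function only prints to stdout, the result can be reviewed before it is written to /etc/systemd/system.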

Setting up Nginx as a Reverse Proxy

We’ve created persistent services for the Airflow application, so now we’ll set up Nginx as a reverse proxy to enhance our application’s security and scalability following the steps outlined below.

-

Log in to the Vultr Customer Portal.

-

Navigate to the Products page.

-

From the side menu, expand the Network drop down, and select DNS.

-

Click the Add Domain button in the center.

-

Follow the setup procedure to add your domain name by selecting the IP address of your server.

-

Set the following hostnames as your domain’s primary and secondary nameservers with your domain registrar:

- ns1.vultr.com

- ns2.vultr.com

-

Install Nginx:

(airflow) $ apt install nginx -

Make sure to check if the Nginx server is up and running:

(airflow) $ sudo systemctl status nginx -

Create a new Nginx virtual host configuration file in the

sites-availabledirectory:(airflow) $ sudo nano /etc/nginx/sites-available/airflow.conf -

Add the configurations to the file.

These configurations will direct the traffic on our application from the actual domain to the backend server at

http://127.0.0.1:8080using a proxy pass:server { listen 80; listen [::]:80; server_name airflow.example.com; location / { proxy_pass http://127.0.0.1:8080; } }Make sure to replace

airflow.example.comwith the actual domain we added in the Vultr dashboard. -

Save and close the file.

-

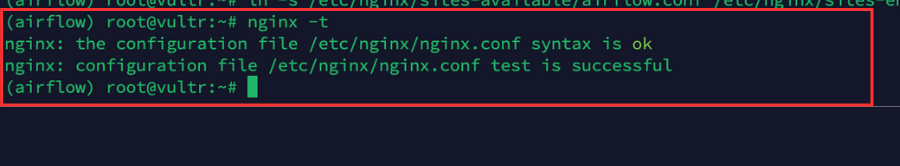

Link the configuration file to the

sites-enableddirectory to activate the configuration file:(airflow) $ sudo ln -s /etc/nginx/sites-available/airflow.conf /etc/nginx/sites-enabled/ -

Make sure to check the configuration for errors:

(airflow) $ sudo nginx -tOur output should appear like that pictured below.

-

Restart Nginx to apply changes:

(airflow) $ sudo systemctl reload nginx -

Allow the HTTP port

80through the firewall for all the incoming connections:(airflow) $ sudo ufw allow 80/tcp -

Allow the HTTPS port

443through the firewall for all incoming connections:(airflow) $ sudo ufw allow 443/tcp -

Reload firewall rules to save changes:

(airflow) $ sudo ufw reload
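The virtual host file above has only two values that change between deployments: the domain and the backend address. As a rough sketch, the same server block can be rendered from those two inputs. `render_nginx_site` is a hypothetical helper for illustration only, and the default backend is the http://127.0.0.1:8080 address used in the steps above.

```shell
# Sketch: print the reverse-proxy server block for a given domain to stdout.
# render_nginx_site is a hypothetical helper, not an Nginx command.
#   $1 = domain name, $2 = backend URL (defaults to the Airflow webserver address)
render_nginx_site() {
    cat <<EOF
server {
    listen 80;
    listen [::]:80;

    server_name $1;

    location / {
        proxy_pass ${2:-http://127.0.0.1:8080};
    }
}
EOF
}

# Example use (writes the file, then validates the configuration):
# render_nginx_site airflow.example.com | sudo tee /etc/nginx/sites-available/airflow.conf
# sudo nginx -t
```

The block is printed rather than written directly, so it can be inspected first and checked with nginx -t after it is saved.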

Applying Let’s Encrypt SSL Certificates to the Airflow Application

The last step is to apply a Let’s Encrypt SSL certificate to our Airflow application, so that traffic between browsers and the application, including login credentials, is encrypted in transit.

-

Using Snap, install the Certbot Let’s Encrypt client:

(airflow) $ snap install --classic certbot -

Get a new SSL certificate for our domain:

(airflow) $ certbot --nginx -d airflow.example.comMake sure to replace

airflow.example.comwith our actual domain name.

And when prompted enter an email address and press Y to accept the Let’s Encrypt terms. -

Test that the SSL certificate auto-renews upon expiry.

Auto-renewal makes sure our SSL certificates are up to date, reducing the risk of certificate expiry and maintaining the security of our application:

(airflow) $ certbot renew --dry-run -

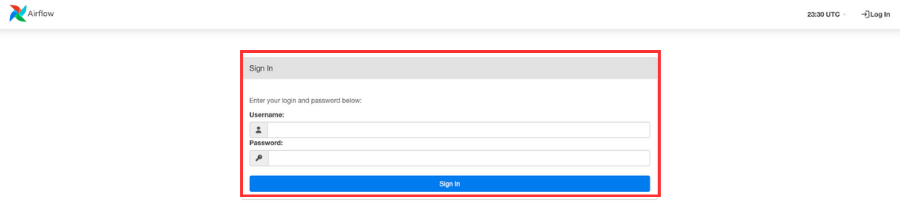

Use a web browser to open our Airflow application:

https://airflow.example.com.When prompted, enter the username and password we created earlier.

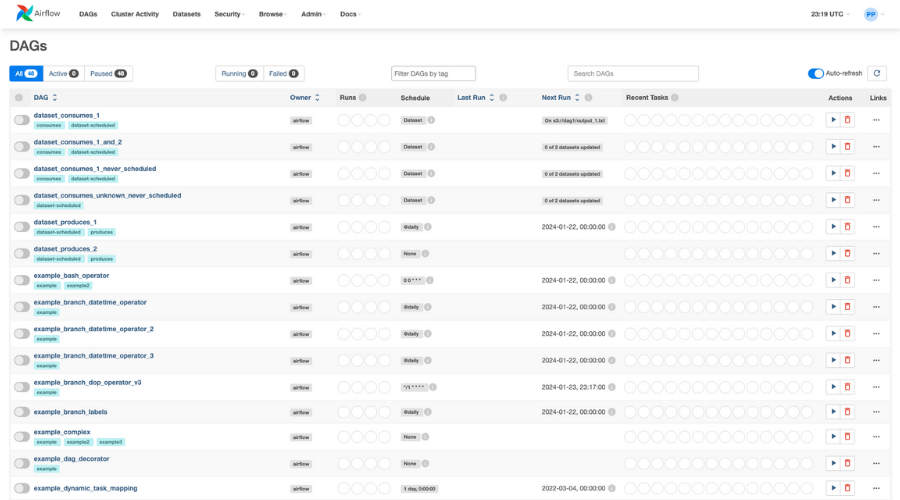

Upon accessing the dashboard, all the DAGs will be visible that are provided by default.

Conclusion

In this article, we demonstrated how to create Conda environments, deploy a production-ready Airflow application, and improve the performance and security of an application.
