This is the first article in an occasional series on interesting database technologies outside the (No)SQL mainstream. I will introduce you to the core concepts of these DBMS, share some thoughts on them and tell you where to find more information. Most of this is not intended for immediate use in your next project: rather, I want to provide inspiration and communicate interesting new takes on the problems in this field. But if, someday, one of those underdogs becomes the status quo, you can tell everyone that you knew it before it was cool … All jokes aside, I hope you’ll enjoy these. Let’s get started.

Overview

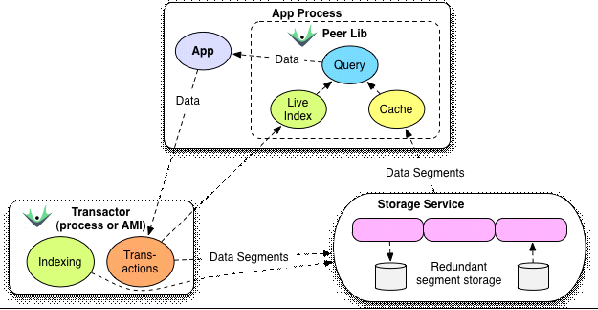

Datomic is the latest brainchild of Rich Hickey, the creator of Clojure. It was released earlier this year and is essentially a new type of DBMS that incorporates his ideas about how today’s databases should work. It’s an elastically scalable, fact-based, time-sensitive database with support for ACID transactions and JOINs. Here are the core aspects this interesting piece of technology revolves around:

- A novel architecture: Peers, Apps and the Transactor.

- A fact-based data-model.

- A powerful, declarative query language, “Datalog”.

1. A novel architecture

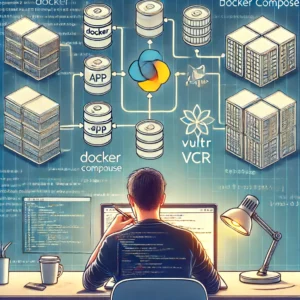

The single most revolutionary thing about Datomic is probably its architecture. Datomic puts the brain of your app back into the client. In a traditional setup, the server handles everything from queries and transactions to actually storing the data. With increasing load, more servers are added and the dataset is sharded across them. As most of today’s NoSQL databases show, this method works very well, but it comes at the cost of some “brain”, as Mr. Hickey argues: the loss of consistency and/or query power is a well-known tradeoff for scale. To get distributed storage together with a powerful query language and consistent transactions, Datomic leverages existing scalable databases as simple distributed storage services. All the complex data processing is handled by the application itself, almost as in a native desktop application (if you can remember one of those). This brings us to the first cornerstone of the Datomic infrastructure:

The Peer Application

A peer is created by embedding the Datomic library into your client code. From then on, every instance of your application will be able to:

- communicate with the Transactor and storage services

- run Datalog queries, access data and handle caching of the working set
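To make “handle caching of the working set” a bit more concrete, here is a minimal Java sketch of a peer-side cache. It is not Datomic’s implementation: `StorageService`, `PeerCache` and the string-valued segments are hypothetical, and the sketch assumes that stored segments are immutable (in line with the immutable facts described below), so cached entries never need invalidation and the cache only has to decide what to evict.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for a storage service (SQL, DynamoDB, ...): it maps a
// segment key to that segment's immutable contents.
interface StorageService {
    String fetchSegment(String key);
}

// Sketch of a peer's working-set cache. Assumption: stored segments are
// immutable, so a cached copy can never go stale and no invalidation logic is
// needed; the only policy is which segment to evict when the cache is full.
final class PeerCache {
    private final StorageService storage;
    private final Map<String, String> workingSet;
    private int storageReads = 0;

    PeerCache(StorageService storage, int capacity) {
        this.storage = storage;
        // accessOrder = true makes LinkedHashMap iterate least-recently-used first.
        this.workingSet = new LinkedHashMap<String, String>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, String> eldest) {
                return size() > capacity; // evict the LRU segment once over capacity
            }
        };
    }

    String read(String key) {
        String segment = workingSet.get(key);
        if (segment == null) {
            storageReads++; // cache miss: one round trip to the storage service
            segment = storage.fetchSegment(key);
            workingSet.put(key, segment);
        }
        return segment;
    }

    int storageReads() {
        return storageReads;
    }
}
```

A least-recently-used policy is just one plausible choice; the point is that the working set a peer can keep locally is bounded by the cache capacity, which is the limitation raised in the criticism section below.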

The Transactor

The Transactor will:

- handle ACID transactions

- synchronously write to redundant storage

- communicate changes to Peers

- index your dataset in the background
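The key idea behind the Transactor is that every write goes through a single process, which gives all transactions a total order. The following Java sketch shows that idea in miniature. `TransactorSketch`, `TxOp` and `LoggedDatom` are hypothetical names, an in-memory list stands in for redundant storage, peers are plain callbacks, and background indexing is left out entirely.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

// One assertion submitted by a client: entity, attribute, value.
record TxOp(long entity, String attribute, Object value) {}

// What ends up in storage: the same fact stamped with its transaction id.
record LoggedDatom(long entity, String attribute, Object value, long tx) {}

// Hypothetical sketch of the Transactor's role, not Datomic's implementation:
// all writes run on one thread, so transactions are totally ordered.
final class TransactorSketch implements AutoCloseable {
    private final ExecutorService writer = Executors.newSingleThreadExecutor();
    // Stand-in for redundant storage; addAll on this list is atomic.
    private final List<LoggedDatom> storage = new CopyOnWriteArrayList<>();
    private final List<Consumer<List<LoggedDatom>>> peers = new CopyOnWriteArrayList<>();
    private long nextTx = 1; // only touched on the writer thread

    void subscribe(Consumer<List<LoggedDatom>> peer) {
        peers.add(peer);
    }

    CompletableFuture<Long> transact(List<TxOp> ops) {
        return CompletableFuture.supplyAsync(() -> {
            long tx = nextTx++;
            List<LoggedDatom> novelty = ops.stream()
                    .map(op -> new LoggedDatom(op.entity(), op.attribute(), op.value(), tx))
                    .toList();
            storage.addAll(novelty); // the whole transaction lands in one step
            for (Consumer<List<LoggedDatom>> peer : peers) {
                peer.accept(novelty); // push the novelty to every subscribed peer
            }
            return tx;
        }, writer);
    }

    List<LoggedDatom> storage() {
        return List.copyOf(storage);
    }

    @Override
    public void close() {
        writer.shutdown();
    }
}
```

Serializing writes on one thread makes ordering and isolation straightforward, and it is also why the Transactor appears as a single point of failure and potential bottleneck in the criticism below.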

Storage services

These services handle the distributed storage of data. Some possibilities:

- Transactor-local storage (free, useful for playing with Datomic on a single machine)

- SQL databases (requires Datomic Pro)

- DynamoDB (requires Datomic Pro)

- Infinispan Memory Cluster (requires Datomic Pro)

(Original image taken from here.)


2. A fact-based data-model

Datomic doesn’t model data as documents, objects or rows in a table. Instead, data is represented as immutable facts called “Datoms”. They are made up of four pieces:

- Entity

- Attribute

- Value

- Transaction timestamp

This model has some clear advantages:

- Support for sparse, irregular or hierarchical data

- Native support for multi-valued attributes

- No enforced schema

- No need to store data history separately, as time is an integral part of Datomic

It also has some drawbacks:

- Not suited for large, dynamic data

- Flexible schemas tend to encourage rash data modelling

- Attribute conflicts
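The datom model is simple enough to sketch in a few lines of Java. The record below has exactly the four pieces listed above; `Datom` and `FactStore` are hypothetical names rather than Datomic’s API, and the attribute names follow the `:age` and `:bought` examples used in the query section. The sketch shows two of the listed advantages (multi-valued attributes and sparse data) as well as immutability: adding a fact produces a new value instead of modifying the old one.

```java
import java.util.ArrayList;
import java.util.List;

// The four pieces of a datom listed above; tx identifies the transaction.
// (Sketch only: real Datomic datoms also record whether a fact was asserted
// or retracted.)
record Datom(long entity, String attribute, Object value, long tx) {}

// A database value as an immutable collection of facts.
final class FactStore {
    private final List<Datom> datoms;

    FactStore(List<Datom> datoms) {
        this.datoms = List.copyOf(datoms);
    }

    // All values of one attribute for one entity. Multi-valued attributes need
    // no extra table: the entity simply has several datoms with that attribute.
    // Sparse data needs no NULLs: a missing fact just yields an empty list.
    List<Object> values(long entity, String attribute) {
        return datoms.stream()
                .filter(d -> d.entity() == entity && d.attribute().equals(attribute))
                .map(Datom::value)
                .toList();
    }

    // Adding a fact yields a new store; existing datoms are never modified.
    FactStore plus(Datom datom) {
        List<Datom> next = new ArrayList<>(datoms);
        next.add(datom);
        return new FactStore(next);
    }
}
```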

3. Datalog, the finder of lost facts

Datomic queries are made up of `:find`, `:in` and `:where` clauses, plus a set of rules to apply to facts. The query processor then finds all matching facts in the database, taking implicit information into account.

Rules are “fact templates” that every fact in the database is matched against. An explicit rule could be something like `[?entity :age 42]`. Implicit rules look like variable bindings, such as `[?entity :age ?a]`, and can be combined with LISP/Clojure-like expressions such as `[(< ?a 30)]`.

A rule set to match customers older than 40 who bought product `?p` would look like this:

`[?customer :age ?a] [(> ?a 40)] [?customer :bought ?p]`

The rule sets are then embedded into the basic query skeleton `[:find <variables> :in <inputs> :where <rules>]`. `<variables>` is just the set of variables you want included in your results, and `<inputs>` names the database (`$`) and any parameters you pass in. We are only interested in the customer, not in his age, so our customer query looks like this:

`[:find ?customer :in $ ?p :where [?customer :age ?a] [(> ?a 40)] [?customer :bought ?p]]`

We then run it against a database value `db`, passing the product as the second input: `Peer.q(query, db, p)`.

This syntax will probably take some getting used to (unless you’re familiar with Clojure), but I find it very readable and, as the Datomic Rationale promises, “meaning is evident”.

Querying the past
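Why does a historical or hypothetical query need no changes? A query always runs against a database value, and a past or speculative database is just another value. The following Java sketch illustrates that idea under stated assumptions: `HistDatom`, `DbValue` and `CustomerQuery` are hypothetical names, not Datomic’s API; each datom additionally carries an assert/retract flag; the log is ordered by transaction id; and ages are stored as integers. The hand-written query method stands in for the customer query from the Datalog section and never mentions time.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Assumption: besides entity, attribute, value and transaction, each datom
// records whether it asserts (added = true) or retracts (added = false) a fact.
record HistDatom(long e, String a, Object v, long tx, boolean added) {}

// An immutable database value over a log of datoms ordered by transaction id.
final class DbValue {
    private final List<HistDatom> log;
    private final long basisT; // newest transaction visible through this value

    DbValue(List<HistDatom> log) {
        this(log, Long.MAX_VALUE);
    }

    private DbValue(List<HistDatom> log, long basisT) {
        this.log = List.copyOf(log);
        this.basisT = basisT;
    }

    // Same log, but facts from transactions after t are hidden.
    DbValue asOf(long t) {
        return new DbValue(log, Math.min(t, basisT));
    }

    // Speculative value: the visible datoms plus txData, held in memory only.
    DbValue with(List<HistDatom> txData) {
        List<HistDatom> all = new ArrayList<>(visible());
        all.addAll(txData);
        return new DbValue(all);
    }

    // Facts that were asserted and not retracted later, as seen by this value.
    List<HistDatom> currentFacts() {
        Map<List<Object>, HistDatom> live = new LinkedHashMap<>();
        for (HistDatom d : visible()) {
            List<Object> key = List.of(d.e(), d.a(), d.v());
            if (d.added()) {
                live.put(key, d);
            } else {
                live.remove(key);
            }
        }
        return List.copyOf(live.values());
    }

    private List<HistDatom> visible() {
        return log.stream().filter(d -> d.tx() <= basisT).toList();
    }
}

final class CustomerQuery {
    // Hand-written stand-in for the Datalog query: customers older than 40
    // who bought product p. What it sees depends only on the value passed in.
    static Set<Long> olderThan40Bought(DbValue db, Object p) {
        List<HistDatom> facts = db.currentFacts();
        Set<Long> result = new TreeSet<>();
        for (HistDatom age : facts) {
            if (age.a().equals(":age") && (Integer) age.v() > 40
                    && facts.stream().anyMatch(b -> b.e() == age.e()
                            && b.a().equals(":bought")
                            && b.v().equals(p))) {
                result.add(age.e());
            }
        }
        return result;
    }
}
```

Note that `with` only builds a new value in memory: the original `db` is unchanged, so a what-if query cannot affect anyone else’s view of the data.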

To run your queries against the history of facts, no change in the query string is required: `Peer.q(query, db.asOf(<time>))`. You can also simulate your query on hypothetical new data, a kind of predictive query about the future: `Peer.q(query, db.with(<data>))`. For more information on the query syntax, please refer to the very good documentation and this video.

Use cases

Datomic is certainly not here to kill every other DBMS, but it’s an interesting match for some applications. The first one that came to mind was analytics:

- Facts are immutable, and analytics systems usually don’t require strong consistency, so non-ACID writes should be fast.

- Facts are time-sensitive, which is quite interesting for analytics.

Criticism

Revolutionary ideas like the ones Datomic is based on should always be appreciated, but also analysed from multiple angles. The technology is too young for a final judgement, but some early criticism includes:

- Separation of data and processing. All required data has to be moved to the client application before it can be processed or queried. This might become a problem with larger datasets. The size of the local cache will also inevitably limit how far the working set can grow. Once this upper bound is passed, round trips to the server backend will be necessary again, with even bigger performance penalties. The Datomic team expects its approach to “work for most common use cases”, but this can’t be verified at this early stage.

- The Transactor component is a single point of failure and a potential bottleneck.

- Sharding might not be necessary for the read-only infrastructure, but the Transactor will need some kind of sharding mechanism once it has to deal with heavy loads.

- The Datomic Pro pricing is quite restrictive, and considering that Datomic Free is no good for any kind of production use, building even experimental projects will be pretty hard for the average developer.

Conclusion

I personally think that many of the concepts and ideas behind Datomic, especially making time a first-class citizen, are great and have a lot of potential. But I can’t see myself using it in the near future, because I’d like to verify some of the team’s performance claims for myself, a desire not in keeping with my finances relative to a Pro license. That said, some of Datomic’s advanced features, like full-text search, multiple data sources (besides the distributed storage service) and the option to use the system for local data processing only, could prove useful. Please share any thoughts you might have in the comments!

Dive deeper

- The Datomic Rationale

- A few great videos on Datomic

- Back to the Future with Datomic

- Datomic: Initial Analysis (comparing Datomic to other DBMS)

Frequently Asked Questions (FAQs) about Datomic

What is the difference between Datomic On-Prem and Datomic Cloud?

Datomic On-Prem and Datomic Cloud are two different deployment models for the Datomic database system. Datomic On-Prem is designed to be installed and run on your own servers or in a data center of your choice. It provides you with full control over your data and infrastructure. On the other hand, Datomic Cloud is a fully managed service that runs on AWS (Amazon Web Services). It provides automatic scaling, backups, and failover, reducing the operational overhead.

How is Datomic priced?

Datomic has different pricing models for its On-Prem and Cloud versions. For Datomic On-Prem, you pay a one-time license fee based on the number of peers and transactors. For Datomic Cloud, you pay based on the AWS resources consumed, which can vary depending on your usage and the specific AWS services you use.

Is there a free version of Datomic?

Yes, Datomic offers a free version known as Datomic Free. It’s a fully functional version of Datomic that’s limited to a single transactor and two peers. It’s ideal for development, testing, and small production deployments.

What are the system requirements for Datomic?

Datomic can run on any system that supports Java 8 or later. For Datomic On-Prem, you’ll need a server or servers to host the transactor and peers. For Datomic Cloud, you’ll need an AWS account and access to the necessary AWS services.

How does Datomic handle data consistency?

Datomic provides strong consistency through its use of an ACID-compliant transaction model. All transactions are serialized through a single transactor, ensuring that all reads and writes are consistent across the entire database.

Can I migrate my data from Datomic On-Prem to Datomic Cloud?

Yes, Datomic provides tools and documentation to help you migrate your data from Datomic On-Prem to Datomic Cloud. The process involves exporting your data from the On-Prem version and importing it into the Cloud version.

What kind of support is available for Datomic?

Datomic offers a range of support options, including documentation, tutorials, and a community forum. For customers with a paid license, Datomic also offers direct support from its team of experts.

How does Datomic handle backups and data recovery?

Datomic has built-in support for backups and data recovery. For Datomic On-Prem, you can configure automatic backups to a storage location of your choice. For Datomic Cloud, backups are automatically stored in AWS S3.

What programming languages can I use with Datomic?

Datomic is designed to work with the Clojure programming language, but it also provides APIs for Java and other JVM languages. Additionally, you can use Datomic’s REST API to interact with the database from any language that can make HTTP requests.

How does Datomic handle scaling?

Datomic’s architecture lets it scale reads horizontally by adding more peers. For Datomic Cloud, scaling is handled automatically by AWS. For Datomic On-Prem, you can add more peers to handle increased read load. Writes, however, are always serialized through a single active transactor, so an additional transactor runs only as a standby for failover rather than to increase write throughput.

Ricky Onsman

Ricky Onsman is a freelance web designer, developer, editor and writer. With a background in information and content services, he built his first website in 1994 for a disability information service and has been messing about on the Web ever since.