7 Tips for Creating an App to Help Mental Illness

Have you ever thought of creating an app to aid those affected by mental illness? Mobile phone apps are a relatively new tool in mental health treatment. They can be used by mental health practitioners, individuals interested in mental well-being, people being treated for an addiction, and those with chronic, debilitating psychiatric conditions. Various studies suggest that people with mental health conditions are interested in using apps in their treatment, which increases the likelihood of adoption.

But what makes a good mental health app, and how can you ensure you are making something worthwhile? In this article I’ll look at the detail of the mental health sector, explore some interesting apps and innovations, and discuss the challenges for developers. I’ll cover these seven tips:

- Define and engage with your intended audience

- Be interactive, move beyond text and make the app part of something bigger

- Be credible

- Never underestimate the impact of stigma

- Understand security risks

- Risks might not be what you think

- Be progressive

Key Takeaways

- Understand and engage with your intended audience: Different mental health conditions require different app functionalities. For example, an app for depression might serve as an educational tool, a diagnostic tool, a treatment tool, an early predictor of relapses, or an online therapy platform.

- Make the app interactive and a part of something bigger: Features such as tactile interactions, tracking, counseling sessions, peer networks, and gaming elements can make the app more engaging and useful.

- Ensure credibility: Collaborate with mental health professionals, use evidence-based practices, and be transparent about the science behind the app.

- Be aware of stigma: Make sure the app can be used discreetly and its branding is not overtly associated with mental illness.

- Be clear about security risks: User information must be secure, and users should be informed about who their information is shared with. Ensure compliance with privacy and confidentiality laws of the location you are working in.

1. Define and Engage with Your Intended Audience

Before you get excited about creating the technical aspects of your app, take a step back and consider who your audience is. This will help you determine the functionality your app requires. A person with schizophrenia who wants to reduce their hallucinations will have different needs from a mental health professional. Once you’ve distinguished between health professionals and health consumers, don’t assume either group is homogeneous.

A ‘depression’ app for example might:

- Educate and inform people who think they may have depression (with the end result being contact with a medical professional).

- Assist friends or family of someone with depression.

- Be part of a diagnostic tool in conjunction with a mental health professional.

- Be part of a treatment tool (for example integrating therapeutic strategies and a mood journal).

- Be an early predictor of relapses into depression for someone who is already receiving treatment or has received treatment in the past.

- Provide online talk therapy as an alternative to face to face counseling.

These are all different and need a different focus!

The experiences of different health consumers vary depending on a range of factors including age, symptoms and diagnosis. The creators of Mindshift, an app for young people in British Columbia experiencing anxiety, found that young people wanted an app that used little data, contained minimal text and was designed discreetly to avoid disclosing usage to inquisitive peers.

This differs from the experience of older people with dementia, who may need an app with larger text, reminders and location tracking.

Short-term memory loss can be a symptom of schizophrenia, so even apps that display a question on one page and the response options on the next could pose added challenges. To remedy this, Ben-Zeev created an app that does not change screens or require scrolling; users move forward through prompts, which reduces the scope for confusion.

The best way to learn about these differing requirements is to ask. Reading articles on the Internet isn’t enough; go to where the people who will use your app are. In creating Mindshift, B’stro held focus groups to find out what young people experiencing anxiety needed in an app.

This research should include interaction not only with mental health consumers but also with the health care industry. The addiction treatment space has had success in alcohol recovery by creating customizable apps for patients, which include localized information and personalized support. A great demonstration of this is A-Chess, a multipurpose relapse prevention app used in addiction recovery. Its success is in its detail: it can be tailored by the health professional to the specific needs and triggers of the client.

A demonstration video of A-Chess is worth a quick look to see the app in action, especially the integration of pre-determined trigger times and locations, a mood assessment, an offer of coping strategies, an offer of a video call with an on-call counsellor, a video that plays if the user declines, and follow-up phone calls.

To create a meaningful app, it’s vital to engage with specialist clinicians in your health subject areas and with the people who will be your end users. This might mean partnering with a university research department, engaging with national mental health associations, contacting mental health consumer advocates, running focus groups and researching what others are doing.

2. Be Interactive, Make the App Part of Something Bigger

A text-based app can be a useful tool for those seeking educational resources for treatment. But it’s not exciting if it does less than a desktop-based program, when the portability, discretion and immediacy of an app offer so many advantages.

Tactility is one example where an app can come into its own. Let Panic Go is designed for people who experience panic attacks. When people feel a panic attack coming, they can launch Let Panic Go and tap the screen in sync with each breath to determine how fast they’re breathing. For some people, this repetitive action is enough to slow down their rate of breathing. If it’s not, the app will suggest a slower pace that the user can try to match. Once breathing is under control, the app offers other assistance to help the user through the attack.
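The tap-to-breathe idea can be sketched in a few lines. This is only an illustration of the general technique, not how Let Panic Go is actually implemented: the function names, the 20% slowdown step and the target of 6 breaths per minute are all assumptions made for the example, not clinical guidance.

```python
from statistics import mean


def breaths_per_minute(tap_times):
    """Estimate breathing rate from tap timestamps (in seconds), one tap per breath."""
    if len(tap_times) < 2:
        raise ValueError("need at least two taps to measure a rate")
    # Time between consecutive taps approximates the length of one breath.
    intervals = [later - earlier for earlier, later in zip(tap_times, tap_times[1:])]
    return 60.0 / mean(intervals)


def suggest_pace(current_bpm, target_bpm=6.0, step=0.8):
    """Suggest a slightly slower pace, never dropping below the (hypothetical) target."""
    return max(target_bpm, current_bpm * step)


# A user tapping every two seconds is breathing about 30 times a minute.
rate = breaths_per_minute([0.0, 2.0, 4.0, 6.0])
next_pace = suggest_pace(rate)
```

Stepping the pace down gradually, rather than jumping straight to the target, is a design choice: it gives the user a pace they can plausibly match on the next attempt.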

Tracking is another example. In the A-Chess app, users are able to enter a map of places associated with previous drug use and the phone triggers a series of interactions when they travel near these places. Many mental health apps incorporate counseling sessions with professionals. The delivery of these varies and can include private chat rooms and face to face therapy. Others offer a peer network for support including private messages, online meetings and discussion groups.
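Location-based triggers of this kind usually come down to a distance check against a list of saved places. The sketch below assumes the places are stored as latitude/longitude pairs and uses a hypothetical 200 metre radius; it is not A-Chess code, and a real mobile app would more likely rely on the platform's own geofencing service than on polling coordinates.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def places_nearby(position, risky_places, radius_m=200):
    """Return the names of saved places within radius_m of the current position."""
    lat, lon = position
    return [
        name
        for name, (place_lat, place_lon) in risky_places.items()
        if haversine_m(lat, lon, place_lat, place_lon) <= radius_m
    ]


# Hypothetical saved place and two positions, one close and one far away.
saved = {"old bar": (52.5200, 13.4050)}
close = places_nearby((52.5205, 13.4050), saved)
far = places_nearby((52.5300, 13.4050), saved)
```

When `places_nearby` returns a non-empty list, the app could start its series of interactions, such as a mood check or an offer of coping strategies.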

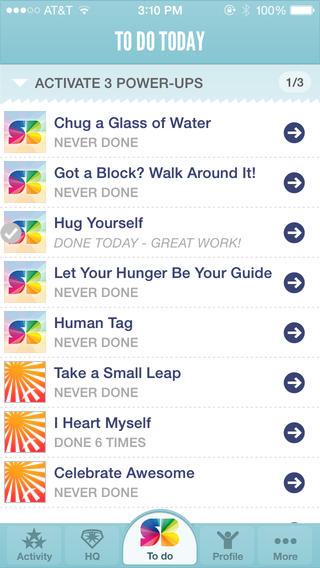

Any of you who are gamers may already know the virtues of gaming as a mental health tool. Super Better is an app-based game that helps the user build social, mental, and emotional resilience in the face of any illness, injury, or health goal. The user is required to complete a number of daily tasks or games to build their esteem and emotional well-being.

The use of monitoring sensors in apps is influencing research into the treatment of mental health conditions, particularly those that require constant monitoring of symptoms and treatment to avoid relapse. An interesting example of this is FOCUS, which is currently being developed at Dartmouth College as a schizophrenia management tool. The app uses techniques such as sleep modeling and predictive sensor data in relapse prediction, measuring device lock time, ambient lighting and ambient audio to predict sleep time. Sensors can measure changes in sleep patterns and increased isolation. This can be used to create a “lapse signature” unique to the patient, helping with clinical treatment and intervention.
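To make the idea of sensor-based sleep modeling concrete, here is a deliberately simplified sketch. It is not the FOCUS algorithm: it uses only device lock time (ignoring ambient light and audio), treats the longest continuous lock interval as a rough proxy for sleep, and flags nights that sit far from the user's own baseline. The function names and the threshold of two standard deviations are assumptions for illustration.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def estimate_sleep_hours(lock_intervals):
    """Use the longest continuous screen-lock interval as a rough proxy for sleep."""
    longest = max((end - start for start, end in lock_intervals), default=timedelta(0))
    return longest.total_seconds() / 3600


def deviates_from_baseline(history_hours, tonight_hours, threshold=2.0):
    """Flag a night that differs from the personal mean by more than `threshold` standard deviations."""
    if len(history_hours) < 2:
        return False  # not enough history to define a baseline
    mu, sigma = mean(history_hours), stdev(history_hours)
    if sigma == 0:
        return tonight_hours != mu
    return abs(tonight_hours - mu) / sigma > threshold


# Hypothetical lock log: a short afternoon lock and a long overnight one.
locks = [
    (datetime(2014, 10, 22, 13, 0), datetime(2014, 10, 22, 13, 20)),
    (datetime(2014, 10, 22, 23, 0), datetime(2014, 10, 23, 6, 30)),
]
tonight = estimate_sleep_hours(locks)
```

Comparing each night against the individual's own history, rather than a population norm, reflects the point that a signature of this kind is unique to the patient.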

MOBILYZE uses phone sensor values such as GPS, ambient light and recent calls to estimate a user’s mood and monitor their well-being, then provides positive reinforcement for engaging in positive activities.

PRIORI measures variance in a user’s speech patterns to predict manic and depressive states in people with bipolar disorder.

If you are using text-based resources, make them useful. A sleep or mood journal that can be printed, or read in real time by a mental health professional (who may see a patient only once a month), is far more meaningful than a list of positive affirmations.

3. Be Credible

It’s difficult to define credibility. It would be ideal if all mental health apps were designed in collaboration with mental health professionals and launched after research-based trials. The reality is that if this were to happen, the apps would lag far behind the technology, and researchers are still struggling to determine a definitive best-practice guide for mental health apps.

For example, a study released this year analyzing 243 depression apps available during 2013 revealed that many fail to incorporate evidence-based practices, health behavior theory, or clinical expertise into their design. The study excluded almost as many apps again because they did not report their organizational affiliation or content sources.

Other research highlights how difficult it is to assess apps clinically, because the results depend on the search terms and procedures used, the retail stores and research databases searched, and the criteria used to determine app quality.

In the UK, the National Health Service (NHS) is hoping to introduce a new Kitemark scheme for apps, under which apps will be accredited with the NHS logo. In the US this is taken a step further with iPrescribeApps, a platform that enables physicians to access apps curated by the physicians of iMedicalApps using evidence-based criteria and expert opinion.

Apps could be more transparent. I like the way Super Better incorporates science into its game-style app. As a user plays, they find science icons hidden throughout missions. Clicking these icons reveals the science of Super Better, including links to the research articles that the game is based on.

4. Never Underestimate the Impact of Stigma

Despite progress, people with mental health conditions still face stigma from peers, health professionals, insurance companies and potential employers. Consider how your app can be used discreetly in a public place, with an option to use it without sound. The branding of the app should be clear enough for someone in need to recognize its purpose, but indiscernible to others who may see the phone. This may be one of the few times when you need to be conservative with your designs.

Perhaps one of the most ‘how could they?’ examples was Samaritans Radar, an app launched by the UK mental health charity in 2014. Radar was a free web application that used an algorithm to look for specific keywords and phrases within a tweet that could be connected to suicidal intentions. Once users signed up, they received an alert when one of the people they followed tweeted certain trigger words. This happened without the knowledge or consent of the people who had written the original tweets. It was described by some as ‘a gift to bullies and trolls’. Experts further expressed concern about allowing the untrained public to monitor social media accounts using algorithms that may or may not be validated, which could unwittingly and inaccurately label millions of people as having mental health disorders. Because it used OAuth to operate as the application user, rather than as a generic “@samaritans” user, it was able to access protected accounts. Before the app was pulled, more than 3,000 people had activated Samaritans Radar, and over 1.64 million Twitter accounts were tracked.

5. Be Clear About Security Risks

Mental health is an area where privacy and confidentiality are paramount. If a mobile app is used incorrectly or insecurely, user information is at risk of exposure. A user needs clear and accurate information about whom the information they submit is shared with. It may be shared with their primary mental health professional, but is their medical journal shared with a pharmaceutical company? What about their health insurer? Are there future consequences? I can imagine a scenario where an individual puts in a workplace claim for depression and the workplace insurer is able to show that they downloaded the app. This is not as unlikely as it sounds given the incidence of workplaces monitoring social media whilst employees are on sick leave. Privacy and confidentiality laws differ between countries and states, so be aware of what is required in the location where you are working.
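One way to keep sharing explicit is to record, per category of data, exactly which recipients the user has approved, and to refuse everything else by default. The sketch below is an assumption about how such a record might look; the class, the category names and the recipient names are all hypothetical, and it does not replace legal advice on the privacy rules that apply to you.

```python
from dataclasses import dataclass, field


@dataclass
class SharingPolicy:
    """Tracks which recipients a user has approved for each category of data."""

    approved: dict = field(default_factory=dict)

    def grant(self, category, recipient):
        self.approved.setdefault(category, set()).add(recipient)

    def revoke(self, category, recipient):
        self.approved.get(category, set()).discard(recipient)

    def can_share(self, category, recipient):
        # Default deny: anything the user has not explicitly approved is refused.
        return recipient in self.approved.get(category, set())

    def disclosure(self):
        """Plain-language lines the app can show so the user sees who receives what."""
        return [
            f"{category}: shared with {', '.join(sorted(recipients))}"
            for category, recipients in sorted(self.approved.items())
            if recipients
        ]


policy = SharingPolicy()
policy.grant("mood_journal", "primary_clinician")
allowed = policy.can_share("mood_journal", "primary_clinician")
refused = policy.can_share("mood_journal", "health_insurer")
```

Generating the disclosure text from the same record that gates the sharing means the screen the user reads cannot drift out of step with what the app actually does.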

It has been suggested that a lack of connectivity between apps makes preserving security difficult. One way to address this is to use standards-based coding and terms in your app, such as DICOM, HL7, SNOMED and ICD-10, to increase the safety of shared information. An understanding of encryption is imperative: for example, you need to know whether data is encrypted when it enters the app, while it is stored in the app, and when it is sent from the app.

6. Risks Might Not Be What You Think

Perhaps the biggest risk to progress is that of time and effort. Mental health professionals have no real incentive or reimbursement for encouraging patients to use mental health apps unless they are part of a funded research project. I can’t help thinking about how much extra time is required to read mood journals and sleep diaries. One risk is that without ideal sensitivity and specificity, the algorithms for notifying mental health professionals could produce more false-positive notifications, leading to increased workload and costs. Health professionals are busy. I’m sure I’m not the only person who has gone to the doctor and had a busy professional use Google search rather than a medical resource. Health apps prescribed by doctors may be classified as medical devices and need regulatory compliance. This could take a long time, and the costs of regulation could be a disincentive for developers and for consumers, who would presumably bear the cost.
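The effect of imperfect sensitivity and specificity on workload is easy to underestimate, so a small worked calculation may help. The figures here (1,000 patients, 5% genuinely at risk, 90% sensitivity and 90% specificity) are invented for illustration and do not come from any study.

```python
def alert_breakdown(patients, prevalence, sensitivity, specificity):
    """Split the alerts sent to clinicians into true and false positives."""
    at_risk = patients * prevalence
    not_at_risk = patients - at_risk
    true_alerts = at_risk * sensitivity             # at-risk patients correctly flagged
    false_alerts = not_at_risk * (1 - specificity)  # well patients wrongly flagged
    total = true_alerts + false_alerts
    # Share of alerts that are genuine (the positive predictive value).
    ppv = true_alerts / total if total else 0.0
    return {"true_alerts": true_alerts, "false_alerts": false_alerts, "ppv": ppv}


# Hypothetical caseload: roughly 45 genuine alerts against roughly 95 false ones.
result = alert_breakdown(patients=1000, prevalence=0.05, sensitivity=0.9, specificity=0.9)
```

Under these assumptions only about a third of the alerts are genuine, so a clinician would spend most of their follow-up time on patients who did not need it.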

7. Be Progressive

Mental health apps need to remain relevant for as long as they are available. In building a mental health app you need to consider how it progresses throughout the span of its use. What trajectory does the app have, and is it customisable? How does it remain relevant when a user becomes well? How does it help maintain good mental health after periods of progress by the user? I don’t think there has been any research into the longer-term effect of mental health apps in the commercial market. It would definitely be interesting for developers to know.

This article aims to give you ‘food for thought’ about mental health apps. There is a big future in mental health apps, especially with 1 in 4 people diagnosed with mental illness in their lifetime, and with cost and lack of access to local mental health professionals remaining a significant barrier to treatment for many people.

Frequently Asked Questions on Creating Apps for Mental Illness

What are the key features to consider when developing a mental health app?

When developing a mental health app, it’s crucial to consider features that will provide the most benefit to users. These may include mood tracking, which allows users to log their emotions and identify patterns; therapeutic exercises, such as guided meditations or cognitive behavioral therapy techniques; and community features, such as forums or chat rooms where users can connect with others who are experiencing similar struggles. Additionally, privacy and security features are paramount, as users will be sharing sensitive personal information.

How can I ensure the privacy and security of users’ data in a mental health app?

Ensuring the privacy and security of users’ data is a critical aspect of mental health app development. This can be achieved through encryption, secure servers, and strict data access controls. It’s also important to be transparent with users about how their data is being used and stored, and to provide them with options to control their own data.

How can I make my mental health app user-friendly?

User-friendliness is key in mental health app development. This can be achieved by creating a simple, intuitive interface; providing clear instructions and guidance; and ensuring the app is accessible to users with different abilities and needs. Regular user testing and feedback can also help identify areas for improvement.

How can I promote engagement and regular use of my mental health app?

Promoting engagement and regular use can be achieved through a variety of strategies. These may include gamification elements, such as rewards or achievements; personalized content and recommendations; and regular updates and new features to keep the app fresh and interesting. It’s also important to provide support and resources for users, such as educational materials or links to professional help.

What are the potential challenges in mental health app development?

Mental health app development can present several challenges. These may include ensuring the app is evidence-based and clinically effective; navigating the regulatory landscape for health apps; and addressing the stigma and misconceptions around mental health. It’s also important to consider the potential for misuse or harm, and to have safeguards in place to prevent this.

How can I ensure my mental health app is evidence-based and clinically effective?

Ensuring your app is evidence-based and clinically effective can be achieved by collaborating with mental health professionals during the development process; conducting rigorous testing and research; and staying up-to-date with the latest scientific findings and best practices in mental health care.

How can I navigate the regulatory landscape for mental health apps?

Navigating the regulatory landscape for mental health apps can be complex, but it’s crucial to ensure your app is compliant with all relevant laws and regulations. This may involve consulting with legal experts, obtaining necessary certifications or approvals, and staying informed about changes in the regulatory environment.

How can I address the stigma and misconceptions around mental health in my app?

Addressing stigma and misconceptions can be achieved through educational content, positive messaging, and a respectful and empathetic tone. It’s also important to provide accurate and reliable information, and to challenge harmful stereotypes and myths.

How can I prevent misuse or harm in my mental health app?

Preventing misuse or harm can be achieved through safeguards such as user guidelines, reporting mechanisms, and moderation of community features. It’s also important to provide clear disclaimers and warnings, and to direct users to professional help when necessary.

How can I measure the success of my mental health app?

Measuring the success of your app can be achieved through a variety of metrics, such as user engagement and retention rates; user feedback and reviews; and clinical outcomes or improvements in users’ mental health. It’s also important to consider the impact of your app on broader societal issues, such as reducing stigma and improving access to mental health care.

Cate Lawrence is a Berlin based writer and blogger who spends her spare time cooking and teaching her cat to chase a red dot.

Published in App Development, Design, Design & UX, Mobile, Mobile UX, Mobile Web Development, Patterns & Practices and UX on October 23, 2014.