Are FTP Programs Secure?

Key Takeaways

- FTP programs like FileZilla can obscure passwords, but if credentials are stored, they are easily accessed from configuration files, compromising security.

- FTP inherently lacks security, transmitting data and credentials in clear text, making it susceptible to interception and misuse.

- Secure alternatives such as FTPS and SFTP offer encryption of data during transfer, providing a higher level of security compared to traditional FTP.

- SSH keys enhance security by enabling authentication without transmitting sensitive details, effectively protecting against interception.

- Continuous delivery tools can mitigate the risks of human error that come with manual file transfers, while secure protocols like SFTP and FTPS protect the transfers themselves.

Do you deploy or transfer files using FTP? Given the age of the protocol and its enduring popularity among hosting companies, it’s fair to say you might.

But are you aware of the security issues this may open up for you and your business? Let’s consider the situation in-depth.

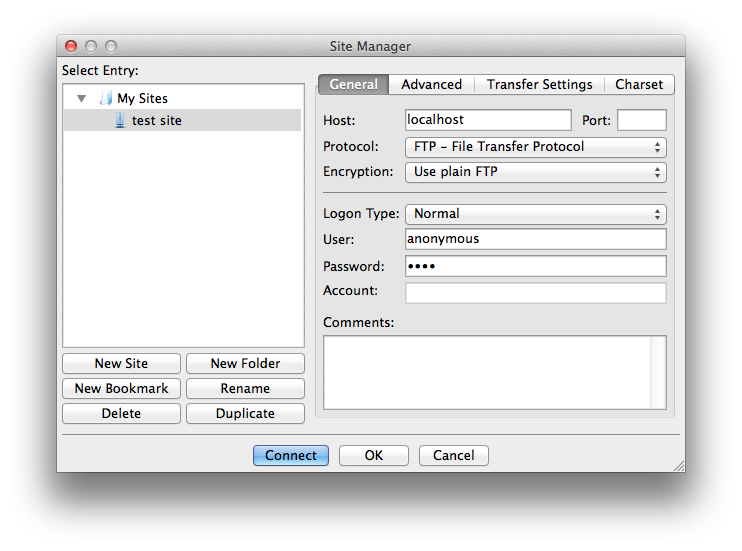

Programs such as FileZilla, Cyberduck, Transmit, or Captain FTP can be secure. They may implement measures such as obscuring passwords from the view of those around you. But if you’re transferring data with FTP, these measures are effectively negated.

I’ll cut to the chase; the reason I’m writing this is because of an interesting discussion on SitePoint back in August. The discussion focused quite heavily on FileZilla, making a range of assertions as to how insecure it is (or isn’t).

A key aspect of the debate centered around whether you should store your passwords with FileZilla. One of the comments linked to a quite descriptive article which showed that, despite obscuring your credentials when using the software, if you save your credentials, they’re quite easy to retrieve.

If you’ve not read the article, FileZilla stores connection details in a simple XML file, an example of which you can see below.

```xml
<?xml version="1.0" encoding="UTF-8" standalone="yes" ?>
<FileZilla3>
    <Servers>
        <Server>
            <Host>localhost</Host>
            <Port>21</Port>
            <Protocol>0</Protocol>
            <Type>0</Type>
            <User>anonymous</User>
            <Pass>user</Pass>
            <Logontype>1</Logontype>
            <TimezoneOffset>0</TimezoneOffset>
            <PasvMode>MODE_DEFAULT</PasvMode>
            <MaximumMultipleConnections>0</MaximumMultipleConnections>
            <EncodingType>Auto</EncodingType>
            <BypassProxy>0</BypassProxy>
            <Name>test site</Name>
            <Comments />
            <LocalDir />
            <RemoteDir />
            <SyncBrowsing>0</SyncBrowsing>test site
        </Server>
    </Servers>
</FileZilla3>
```

You can see that it’s storing a lot of information about the connection, so that you don’t need to remember it. But notice that it stores your password in the clear, too.

Sure, when you use the program, it obscures the password, as shown in the screenshots above, so that it can’t be read over your shoulder.

But there’s little point when you can just lift it from the computer, should you have access. To be fair, in the latest version of FileZilla, storing passwords is disallowed by default.

What About Encrypted Configuration Files?

People suggested that at the very least, the configuration files should be encrypted or be set up in such a way as to ask for a master password before access was granted, much like 1Password and KeePassX do.

Louis Lazaris then linked to a discussion on Stack Exchange, which attempted to counter the position. Here’s the core of the post:

You see, encrypting the credentials requires an encryption key which needs to be stored somewhere. If a malware is running on your user account, they have as much access to what you (or any other application running at the same level) have. Meaning they will also have access to the encryption keys or the keys encrypting the encryption keys and so on.

I believe the above assertion doesn’t fully appreciate the design considerations of programs such as the two listed above. Applications which are specifically designed to be a secure vault for passwords, and other secure information, would likely not be as easy to crack as this answer implies.

For example, a recent blog post from 1Password lists a number of the key mechanisms employed in the fight against crackers.

These include 128- and 256-bit symmetric keys, SHA-512 hashing, and PBKDF2 key derivation, along with a range of other features that protect the data files, all while keeping the apps simple and easy to use.

So to suggest that a well-designed encrypted vault is no more secure than a plain configuration file is incorrect, especially given all of these techniques.
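To make the master-password idea concrete: the key protecting a vault needn’t be stored anywhere if it’s re-derived from the master password each time the vault is unlocked. Here’s a minimal Python sketch of that idea using PBKDF2 from the standard hashlib module; the iteration count, salt size, and the stored "verifier" are illustrative assumptions, not 1Password’s or KeePassX’s actual file format.

```python
import hashlib
import hmac
import os
from typing import Dict, Optional

# Illustrative parameters only (assumptions, not any product's real settings).
ITERATIONS = 210_000
SALT_BYTES = 16
KEY_BYTES = 32  # 256-bit key


def derive_key(master_password: str, salt: bytes) -> bytes:
    """Stretch the master password into a key with PBKDF2-HMAC-SHA512."""
    return hashlib.pbkdf2_hmac("sha512", master_password.encode("utf-8"),
                               salt, ITERATIONS, dklen=KEY_BYTES)


def create_vault_header(master_password: str) -> Dict[str, bytes]:
    """Return what would be written to disk: a random salt and a verifier, never the key."""
    salt = os.urandom(SALT_BYTES)
    verifier = hashlib.sha256(derive_key(master_password, salt)).digest()
    return {"salt": salt, "verifier": verifier}


def unlock(master_password: str, header: Dict[str, bytes]) -> Optional[bytes]:
    """Re-derive the key; return it only if the master password is correct."""
    key = derive_key(master_password, header["salt"])
    # Constant-time comparison avoids leaking how many bytes matched.
    if hmac.compare_digest(hashlib.sha256(key).digest(), header["verifier"]):
        return key  # this key would then decrypt the stored credentials
    return None
```

Only the salt and verifier touch the disk, so a copied configuration file alone no longer gives up the key, and every password guess costs the attacker a full PBKDF2 run. It doesn’t stop malware that captures the master password as you type it, which is the scenario the Stack Exchange answer has in mind.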

It’s FTP, Not Your App!

But the argument over whether credentials should or shouldn’t be saved is moot, because using FTP in the first place overlooks a key point: your credentials and data are sent in the clear. Don’t believe me? Have a read of Why is FTP insecure, on the Deccanhosts blog.

If you weren’t aware of it, anyone with a simple packet sniffer, such as Wireshark, on the network path can retrieve not only the username and password used, but also any other credentials stored in the files being sent, along with the algorithms, database structures, and anything else stored there.

Given that it has long been common practice to store this information in .ini and other configuration files, I’d suggest that a large amount of widely downloaded software, such as WordPress and Joomla, is built in just this way.
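To see what "in the clear" means in practice, here’s a small, self-contained Python sketch. It logs in with the standard library’s ftplib client against a throwaway stand-in server on the loopback interface (the server and function names are hypothetical, written only for this demonstration) and records the raw bytes the client sends, which is exactly what a sniffer such as Wireshark would capture on a real network.

```python
import ftplib
import socket
import threading


def fake_ftp_server(listener: socket.socket, captured: list) -> None:
    """Minimal stand-in server: records every raw line the client sends."""
    conn, _ = listener.accept()
    with conn, conn.makefile("rb") as reader:
        conn.sendall(b"220 Demo server ready\r\n")
        for raw in reader:
            captured.append(raw)  # exactly what a sniffer on the path would see
            command = raw.split(b" ", 1)[0].strip().upper()
            if command == b"USER":
                conn.sendall(b"331 Password required\r\n")
            elif command == b"PASS":
                conn.sendall(b"230 Logged in\r\n")
            elif command == b"QUIT":
                conn.sendall(b"221 Goodbye\r\n")
                break
            else:
                conn.sendall(b"502 Command not implemented\r\n")


def capture_ftp_login(user: str, password: str) -> bytes:
    """Log in with Python's ftplib and return the bytes that crossed the wire."""
    captured: list = []
    with socket.create_server(("127.0.0.1", 0)) as listener:
        port = listener.getsockname()[1]
        server = threading.Thread(target=fake_ftp_server, args=(listener, captured))
        server.start()
        ftp = ftplib.FTP()
        ftp.connect("127.0.0.1", port, timeout=5)
        ftp.login(user, password)
        ftp.quit()
        server.join()
    return b"".join(captured)


if __name__ == "__main__":
    print(capture_ftp_login("deploy", "s3cret"))
```

Running it prints the USER, PASS, and QUIT commands exactly as sent, with the password readable as plain text.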

FTP was never designed with security in mind; it was designed as a public service. Inherent in this design were a series of further assumptions, which also didn’t take security into consideration. Enrico Zimuel, senior software engineer at Zend, even goes so far as to say: Never use FTP – ever!

Yes, security features came later, but they were bolted on, not built in. The protocol has no protection against brute-force attacks, and while SSH tunneling is possible, it’s difficult, because you need to protect both the command and data channels. As a result, your options are limited, and implementing them isn’t always trivial.

Are you a Webmaster? Do you enable a chroot jail for your FTP users? If you’re not familiar with the term chroot, it’s a way of limiting user movement and access. From the directory they log into, they can descend into any sub-directory but can’t move outside of it.
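In a real deployment, the jail is enforced by the operating system’s chroot facility or by the FTP or SSH daemon’s own settings. The sketch below only illustrates the path-mapping idea in Python, the kind of check a server might apply in software; the function name and paths are hypothetical, and it deliberately ignores symbolic links, which a real implementation must also handle.

```python
import posixpath


def jailed_path(jail_root: str, cwd: str, requested: str) -> str:
    """Map a client-supplied path to a real path that stays under jail_root.

    The user sees jail_root as "/", so climbing above it with ".." just stops at "/".
    Simplified sketch: symbolic links inside the jail are not resolved.
    """
    # Anchor at "/" so even a relative cwd can't produce a leading "..".
    virtual = posixpath.normpath(posixpath.join("/", cwd, requested))
    # normpath discards ".." components that would climb above the root.
    return posixpath.join(jail_root, virtual.lstrip("/"))
```

A request for `../../etc/passwd` from the user’s top-level directory resolves to `etc/passwd` inside the jail, not the system’s password file.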

Alternatives to FTP

It’s not all doom and gloom, though. A number of the FTP programs around today, especially the ones referenced earlier, also support more secure derivatives of, and alternatives to, FTP. Let’s have a look at them.

FTPS and SFTP

FTPS (FTP Secure) is to FTP roughly what HTTPS is to HTTP: it runs FTP over SSL (Secure Sockets Layer) or its successor, TLS (Transport Layer Security). The user credentials and data are no longer sent in the clear; instead, they’re encrypted before they’re transmitted.

Client software also has the flexibility, if it’s allowed by the server, to encrypt only parts of the communication, not all of it. This might seem counterintuitive based on the discussion so far.

But if the files being transferred are already encrypted, or if no information of a sensitive nature is being transferred, then it’s likely ok not to incur the overhead that encryption requires.

However, switching to FTPS does come at a cost (and a price). Using FTPS involves generating either a self-signed SSL certificate, or purchasing one from a trusted certificate authority. So better security is available, but there’s a greater amount of effort and cost involved.

But before you shy away, ask yourself how much your information is worth to your business. That might convince you to persevere.
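For FTPS, Python’s standard ftplib module includes an `FTP_TLS` class. The sketch below, assuming a server that supports explicit FTPS (AUTH TLS) on port 21, shows both choices discussed above: protecting the data channel with PROT P, or leaving it in the clear with PROT C. The function name and its parameters are illustrative.

```python
import ftplib
import ssl
from typing import Optional


def open_ftps(host: str, user: str, password: str, *,
              protect_data: bool = True,
              context: Optional[ssl.SSLContext] = None) -> ftplib.FTP_TLS:
    """Open an explicit FTPS session (FTP with AUTH TLS) using only the stdlib."""
    # The default context verifies the server's certificate and hostname.
    ctx = context if context is not None else ssl.create_default_context()
    ftps = ftplib.FTP_TLS(context=ctx, timeout=30)
    ftps.connect(host, 21)
    # login() sends AUTH TLS first, so USER and PASS cross an encrypted control channel.
    ftps.login(user, password)
    if protect_data:
        ftps.prot_p()  # PROT P: encrypt file contents and directory listings as well
    else:
        ftps.prot_c()  # PROT C: leave the data channel in the clear (non-sensitive files only)
    return ftps
```

For example, calling `open_ftps("ftp.example.com", "deploy", password)` and then `ftps.storbinary("STOR index.html", f)`, with `f` opened in binary mode, would upload a file over the encrypted channel (host, user, and file names are hypothetical). If the server uses a self-signed certificate, you could pass `context=ssl.create_default_context(cafile="server-cert.pem")`, again with a hypothetical file name, so that specific certificate is trusted instead of switching verification off.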

Now let’s look at SFTP. SFTP, or SSH File Transfer Protocol, works differently from FTPS. Despite the name, it isn’t FTP at all: it’s a separate protocol, designed as an extension of SSH 2.0, that runs as a subsystem inside an SSH connection. Commands, credentials, and file data all travel through the same encrypted SSH channel, so there’s no clear-text control or data connection to intercept.
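Because SFTP rides on SSH, you don’t need an FTP server at all; the OpenSSH `sftp` client can be scripted directly. In this minimal Python sketch, `-b -` tells `sftp` to read batch commands from standard input, and `-i` selects a private key; batch mode needs non-interactive authentication, such as the SSH keys discussed below. The function names, host, user, key, and paths are hypothetical, and paths are assumed not to contain double quotes.

```python
import subprocess
from typing import List, Tuple


def sftp_upload_command(host: str, user: str, local_path: str,
                        remote_path: str, identity_file: str) -> Tuple[List[str], str]:
    """Build an OpenSSH sftp invocation plus the batch script to feed it."""
    # Batch commands use sftp's own syntax; double quotes protect paths with spaces.
    batch = f'put "{local_path}" "{remote_path}"\nbye\n'
    command = ["sftp", "-i", identity_file, "-b", "-", f"{user}@{host}"]
    return command, batch


def sftp_upload(host: str, user: str, local_path: str,
                remote_path: str, identity_file: str) -> None:
    command, batch = sftp_upload_command(host, user, local_path, remote_path, identity_file)
    # In batch mode sftp stops at the first failing command and exits non-zero,
    # so check=True turns a failed upload into a CalledProcessError.
    subprocess.run(command, input=batch, text=True, check=True)
```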

SSH, SCP and Other Login Shells

If you’re going to move away from FTP, why take half measures? Why use FTP at all? If you’ve installed SFTP, you’ve installed the SSH tools; these give you access to a wide array of functionality.

Starting at the top with SSH itself: it gives users full access to the remote system, letting them do far more than FTP ever would, or could. The connection is secure, and data can be copied from one system to another quite easily.

If you’re a bit of a command-line guru, you can even use a tool such as rsync over SSH.

In a simple use case, it can be used to recursively copy all files from a local directory to a directory on a remote machine. The first time it’s run, all files are copied over.

The second and subsequent times, it checks for file differences, transferring only the differences, newer files, and optionally removing files and directories on the remote machine no longer present locally.
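A deployment along those lines might look like the following Python sketch, which only builds the rsync command. The flags are standard rsync options (`--archive` copies recursively while preserving permissions and timestamps, `--compress` compresses data in transit, `--delete` removes remote files that no longer exist locally, `--dry-run` previews the changes, and `-e ssh` runs the transfer over SSH); the function name, host, and paths are hypothetical.

```python
from typing import List


def rsync_deploy_command(local_dir: str, user: str, host: str, remote_dir: str,
                         delete_extraneous: bool = False,
                         dry_run: bool = False) -> List[str]:
    """Build an rsync-over-SSH command that mirrors local_dir into remote_dir."""
    # A trailing slash means "copy the directory's contents", not the directory itself.
    source = local_dir.rstrip("/") + "/"
    command = ["rsync", "--archive", "--compress", "-e", "ssh"]
    if delete_extraneous:
        command.append("--delete")  # remove remote files no longer present locally
    if dry_run:
        command.append("--dry-run")  # report what would change without changing it
    command += [source, f"{user}@{host}:{remote_dir}"]
    return command
```

Passing the result to `subprocess.run(command, check=True)` would perform the transfer. Running with `dry_run=True` first is a cheap way to see exactly what `--delete` would remove before it does.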

The problem is that granting this kind of access is itself a security issue waiting to happen. But the effects can be mitigated. OpenSSH allows for a number of configuration choices, such as disallowing root access, limiting the users who can log in remotely, and chroot’ing users to specific directories.
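Those restrictions live in OpenSSH’s server configuration file, sshd_config. The sample below uses real OpenSSH directives (`PermitRootLogin`, `AllowUsers`, and a `Match User` block with `ChrootDirectory` and `ForceCommand internal-sftp`), and the Python function is a deliberately simplified, hypothetical audit that checks whether those three measures are present. It isn’t a full sshd_config parser: it ignores the `Keyword=value` form and quoting, and treats only settings before the first `Match` block as global.

```python
from typing import Dict

# Sample sshd_config fragment using real OpenSSH directives; the user name is hypothetical.
EXAMPLE_SSHD_CONFIG = """\
PermitRootLogin no
AllowUsers deploy
Subsystem sftp internal-sftp

Match User deploy
    ChrootDirectory /srv/sftp/%u
    ForceCommand internal-sftp
"""


def audit_sshd_config(text: str) -> Dict[str, bool]:
    """Check for the three restrictions above (simplified parser, see lead-in)."""
    global_settings: Dict[str, str] = {}
    chroot_configured = False
    in_match_block = False
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and indentation
        if not line:
            continue
        parts = line.split(None, 1)
        keyword = parts[0].lower()  # sshd_config keywords are case-insensitive
        value = parts[1].strip() if len(parts) > 1 else ""
        if keyword == "match":
            in_match_block = True  # everything after this is conditional
        elif keyword == "chrootdirectory" and value.lower() != "none":
            chroot_configured = True  # usually set per user or group in a Match block
        elif not in_match_block:
            # For most keywords OpenSSH uses the first value it reads, so keep that one.
            global_settings.setdefault(keyword, value)
    return {
        "root_login_disabled": global_settings.get("permitrootlogin", "").lower() == "no",
        "logins_limited": "allowusers" in global_settings or "allowgroups" in global_settings,
        "chroot_configured": chroot_configured,
    }


if __name__ == "__main__":
    print(audit_sshd_config(EXAMPLE_SSHD_CONFIG))
```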

Perhaps users don’t need to be on the remote machine in the first place or don’t need many privileges while they’re there. If that’s the case, and it likely is, you can pick from a number of shells, designed to accommodate these situations.

Two of the best are scponly and rssh. Scponly only allows a user to copy files to a remote machine.

The user can’t login, move around, look at, or change files. What’s great is that it still works with rsync (and other tools). rssh goes a bit further, allowing access to SCP, SFTP, rdist, rsync and CVS.

To implement it, a systems administrator need only change the user’s shell, with their tool of choice, and then edit /etc/rssh.conf, listing the allowed protocols. Here’s an example configuration:

```
allowscp
allowsftp
```

This configuration allows users to use only SCP and SFTP.

SSH Keys

Next, let’s consider SSH keys. The process takes a bit of an explanation, but I’ll try and keep it quick and concise, paraphrasing heavily from this answer on Stack Exchange:

First, the server’s public key is used to construct a secure SSH channel: it authenticates the server while the two sides negotiate a symmetric key that protects the rest of the session, providing confidentiality and integrity protection. After the channel is functional and secure, authentication of the user takes place.

The server next creates a random value, encrypts it with the public key of the user and sends it to them. If the user is who they’re supposed to be, they can decrypt the challenge and send it back to the server, who then confirms the identity of the user. It is the classic challenge-response model. (Strictly, that encrypt-and-decrypt exchange describes the original SSH-1 protocol; in SSH-2, the client instead signs data tied to the session with its private key, and the server verifies the signature with the user’s public key. The principle is the same.)

The key benefit of this is that the private key never leaves the client, and no password is ever sent. If someone intercepts the SSH traffic and is able to decrypt it (using a compromised server private key, or because you accepted a wrong public key when connecting to the server), your private details will never fall into the hands of the attacker.
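The challenge-response idea can be shown with a toy example. This Python sketch uses textbook RSA with tiny, well-known demonstration primes (61 and 53), so it is insecure and for illustration only; it follows the signature-based variant used by SSH-2, in which the client signs a per-session value with its private key and the server checks the signature using only the public key. Real SSH keys use large RSA keys with proper padding, or schemes such as Ed25519, and the function names here are hypothetical.

```python
import hashlib
import secrets

# Textbook RSA with tiny primes (p=61, q=53). Insecure and unpadded: illustration only.
PUBLIC_N, PUBLIC_E = 3233, 17   # the public key the server holds (cf. authorized_keys)
PRIVATE_D = 2753                # the private key; it never leaves the client


def _digest(session_id: bytes) -> int:
    # Hash the per-session value and reduce it into the RSA modulus range.
    return int.from_bytes(hashlib.sha256(session_id).digest(), "big") % PUBLIC_N


def client_sign(session_id: bytes) -> int:
    """Client proves possession of the private key by signing the session value."""
    return pow(_digest(session_id), PRIVATE_D, PUBLIC_N)


def server_verify(session_id: bytes, signature: int) -> bool:
    """Server checks the signature with the public key alone."""
    return pow(signature, PUBLIC_E, PUBLIC_N) == _digest(session_id)


if __name__ == "__main__":
    session_id = secrets.token_bytes(32)  # unique per connection, so signatures can't be replayed
    signature = client_sign(session_id)   # only this number crosses the (encrypted) channel
    print(server_verify(session_id, signature))
```

Only the signature crosses the network; the private exponent stays on the client, which is exactly the benefit described above.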

When used with SCP or SFTP, SSH keys further reduce the effort required to use them, while increasing security. SSH keys can require a passphrase to unlock the private key, and this may seem to make them more difficult to use.

But there are tools around, such as ssh-agent and the keychain integrations built into many desktop environments, which can link this to your user session when you log in to your computer. When set up correctly, the passphrase is supplied automatically for you, so you have the full benefit of the system.

What About Continuous Delivery?

Perhaps you’ve not heard the term before, but it’s been floating around for some time now. We’ve written about it on SitePoint before, as recently as last week. Martin Fowler defines Continuous Delivery as:

A software development discipline where you build software in such a way that the software can be released to production at any time.

There are many ways to implement it, but services such as Codeship and Beanstalk go a long way toward taking the pain out of it.

Here’s a rough outline of how they work. You set up your software project, including your testing code and deployment scripts, and store it all under version control. I’ll assume you’re using an online service, such as GitHub or Bitbucket.

When you push a commit or release from your code branch to either of these services, the continuous delivery service runs your application’s tests. If the tests pass, it deploys your application, whether to a test or production environment.

The rollout happens automatically, and you’re notified afterwards whether the deployment succeeded or failed.

If it succeeded, then you can continue on with the next feature or bug fix. If something went wrong, you can check it out to find the cause of the issue. Have a look at the short video below, showing the deployment of a test repository in action with Codeship.

What did you have to do? Push a commit to the GitHub repository, and that’s it! You don’t need to remember to run scripts, where they are, or what options and switches to pass to them (especially not late on a Friday evening, when you’d rather be anywhere but at work).

I appreciate that’s rather simplistic and doesn’t cover all the options and nuances, but you get the idea.
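Stripped of the hosted-service conveniences, the flow described above boils down to a few lines of logic. The following is a minimal, hypothetical Python sketch (the function names and commands are illustrative, not Codeship’s or Beanstalk’s API): run the tests, deploy only if they pass, and report the outcome either way.

```python
import subprocess
from typing import Callable, Sequence


def run_command(command: Sequence[str]) -> int:
    """Run a command and return its exit status (0 means success)."""
    return subprocess.run(list(command)).returncode


def deploy_pipeline(test_command: Sequence[str],
                    deploy_command: Sequence[str],
                    notify: Callable[[str], None],
                    run: Callable[[Sequence[str]], int] = run_command) -> bool:
    """Test, then deploy only if the tests pass, then report the outcome."""
    if run(test_command) != 0:
        notify("Tests failed: nothing was deployed.")
        return False
    if run(deploy_command) != 0:
        notify("Deployment failed.")
        return False
    notify("Deployment succeeded.")
    return True
```

For instance, `deploy_pipeline(["vendor/bin/phpunit"], ["./deploy.sh"], print)` would run a PHP test suite and then a deployment script; both commands are placeholders for whatever your project uses. The `run` parameter exists so the logic can be exercised without executing anything.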

The Problem of Human Error

Let’s finish up by moving away from the basic security concerns of using FTP, to the effectiveness of doing so on a day-to-day basis. Let’s say, for example, that you’re developing a website, say an e-commerce shop, and your deployment process makes use of FTP, specifically FileZilla.

There are a number of inherent issues here, relating to human error:

- Will all the files be uploaded to the right locations?

- Will the files retain or obtain the required permissions?

- Will one or two files be forgotten?

- Is there a development name that needs to be changed in production?

- Are there post-deployment scripts that need to be run?

All of these are valid concerns, but all are easily mitigated when using continuous delivery tools. If it’s late, if the pressure’s on, or if the person involved is either moving on from the company or keen to get away on holiday, manually transferring files over FTP is asking for trouble.

Ok, manually transferring files, period, is asking for trouble. Human error is just too difficult to remove.
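Tooling can answer questions such as "were all the files uploaded?" mechanically. As a hypothetical illustration in Python, the sketch below builds a manifest of file hashes for a directory and compares a local build with what’s live; how the remote manifest is produced (say, by running the same function on the server over SSH) is left as an assumption.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file under root (as a relative POSIX path) to its SHA-256 digest."""
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def deployment_gaps(local: Dict[str, str], remote: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare the built files with the deployed ones."""
    return {
        # Built but never uploaded: the "forgotten file".
        "missing": sorted(local.keys() - remote.keys()),
        # Uploaded, but the live copy differs from the build.
        "outdated": sorted(p for p in local.keys() & remote.keys() if local[p] != remote[p]),
        # Live but not in the build: leftovers such as old debug scripts.
        "unexpected": sorted(remote.keys() - local.keys()),
    }
```

A check like this, run automatically after every deployment, catches the forgotten file long before a customer does.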

Quick Apology to FileZilla

I don’t want to seem like I’m picking on FileZilla. It’s a really good application and one I’ve made good use of over a number of years. And there have been techniques used to attempt to make it more secure.

The key point I have is with FTP itself, not necessarily with FileZilla alone.

Wrapping Up

So this has been my take on the FTP security debate. My recommendation — just don’t use it; what’s more, when managing deployments, keep security in mind. After all, it’s your data.

But what are your thoughts? Do you still use FTP? Are you considering moving away? Share your experiences in the comments and what solutions you’ve tried so we can all work towards a solution that’s practical and easy to use.

Further Reading and Resources

- Zend\Crypt\Password

- Password (in)security (slide deck)

- Why is FTP insecure

- Wireshark (network protocol analyzer)

- The continuing evolution of security in 1Password 4

- Beware: FileZilla Doesn’t Protect Your Passwords

- Linux Restricted Shells: rssh and scponly

Frequently Asked Questions about FTP Programs Security

What are the main security risks associated with FTP programs?

FTP programs, while useful for file transfers, come with several security risks. The primary concern is that FTP does not encrypt data, meaning that all information transferred, including sensitive data like usernames and passwords, is sent in plain text. This makes it easy for cybercriminals to intercept and misuse this information. Additionally, FTP is vulnerable to attacks such as brute force attacks, packet capture, and spoofing attacks. These risks can lead to data breaches, unauthorized access, and other serious security issues.

How can I mitigate the risks of using FTP programs?

There are several ways to mitigate the risks associated with FTP programs. One of the most effective methods is to use secure versions of FTP, such as SFTP or FTPS. These protocols encrypt data during transfer, making it much harder for cybercriminals to intercept. Additionally, using strong, unique passwords and regularly updating them can help protect against brute force attacks. It’s also important to keep your FTP program up to date, as updates often include security patches.

What is the difference between FTP, SFTP, and FTPS?

FTP, or File Transfer Protocol, is a standard network protocol used for transferring files from one host to another over the internet. However, it does not provide any encryption. SFTP, or SSH File Transfer Protocol, is a separate protocol that runs over SSH (Secure Shell), so commands and data are encrypted in transit; despite the name, it is not FTP with encryption added. FTPS, or FTP Secure, is FTP itself extended with SSL (Secure Sockets Layer) or TLS (Transport Layer Security) encryption.

Is FTP still used today?

Yes, FTP is still widely used today, especially in business environments for transferring large files or batches of files. However, due to its security vulnerabilities, many organizations are transitioning to more secure alternatives like SFTP or FTPS.

How can I tell if my FTP program is secure?

To determine if your FTP program is secure, you should check if it uses encryption during file transfers. Secure versions of FTP, like SFTP and FTPS, encrypt data during transfer. You can usually find this information in the program’s documentation or settings. Additionally, a secure FTP program should offer features like strong password enforcement, two-factor authentication, and regular updates.

Can I use a VPN with an FTP program to enhance security?

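Yes, with a caveat. A VPN (Virtual Private Network) encrypts traffic between your device and the VPN endpoint, including your FTP traffic, which helps protect it from interception on untrusted networks such as public Wi-Fi. However, unless the FTP server sits on the same private network as the VPN endpoint, the FTP traffic still travels in the clear between the endpoint and the server, so SFTP or FTPS remains the more complete fix.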

What are some secure alternatives to FTP?

Some secure alternatives to FTP include SFTP, FTPS, and SCP (Secure Copy Protocol). These protocols all use encryption to protect data during transfer. Additionally, cloud-based file transfer services often provide strong security measures, including encryption, two-factor authentication, and access controls.

What is a brute force attack and how can it affect FTP?

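A brute force attack is a type of cyber attack where an attacker tries to gain access to a system by systematically guessing passwords. The FTP protocol itself has no built-in defense against repeated login attempts, so unless the server adds rate limiting or lockouts, an attacker can keep guessing indefinitely. (Plain-text transmission is a separate weakness: an attacker who can sniff the traffic doesn’t need to guess at all.) If an attacker successfully guesses the password, they can gain unauthorized access to the system and potentially steal or manipulate data.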
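Many FTP servers can add that missing protection themselves, and tools such as fail2ban can ban addresses based on failed logins recorded in a server’s logs. As a hypothetical Python sketch of the underlying idea, here’s a throttle that locks out an address after too many failures within a time window; the class name and default limits are illustrative.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class LoginThrottle:
    """Lock out a client address after too many failed logins in a time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.window = window_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.failures: Dict[str, Deque[float]] = defaultdict(deque)

    def _prune(self, address: str) -> Deque[float]:
        attempts = self.failures[address]
        cutoff = self.clock() - self.window
        while attempts and attempts[0] <= cutoff:
            attempts.popleft()  # forget failures older than the window
        return attempts

    def is_locked_out(self, address: str) -> bool:
        return len(self._prune(address)) >= self.max_failures

    def record_failure(self, address: str) -> None:
        self._prune(address).append(self.clock())

    def record_success(self, address: str) -> None:
        self.failures.pop(address, None)
```

A server would call `is_locked_out` before checking a password and refuse the attempt outright if it returns `True`.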

How can I protect my FTP program from spoofing attacks?

Spoofing attacks, where an attacker pretends to be a legitimate user, server, or device, can be a serious threat to FTP programs. To protect against these attacks, use secure versions of FTP that use encryption, like SFTP or FTPS, and make sure your client verifies the server’s host key (SFTP) or certificate (FTPS) rather than accepting whatever it’s offered. Additionally, using strong, unique passwords and regularly updating them makes it harder for an attacker to impersonate a legitimate user.

What is packet capture and how does it affect FTP?

Packet capture, also known as packet sniffing, is a method used by cybercriminals to intercept and analyze data packets as they are transmitted over a network. Because FTP does not encrypt data, it is particularly vulnerable to packet capture. An attacker can use this method to steal sensitive information, like usernames and passwords. To protect against packet capture, you should use a secure version of FTP that uses encryption, like SFTP or FTPS.

Matthew Setter is a software developer, specialising in reliable, tested, and secure PHP code. He’s also the author of Mezzio Essentials (https://mezzioessentials.com), a comprehensive introduction to developing applications with PHP's Mezzio Framework.