Face Detection on the Web with Face-api.js

Web browsers get more powerful by the day. Websites and web applications are also increasing in complexity. Operations that required a supercomputer some decades ago now run on a smartphone. One of those operations is face detection.

The ability to detect and analyze a face is super useful, as it enables us to add clever features. Think of automatically blurring faces (like Google Maps does), panning and scaling a webcam feed to focus on people (like Microsoft Teams), validating a passport, adding silly filters (like Instagram and Snapchat), and much more. But before we can do all that, we first need to find the face!

Face-api.js is a library that enables developers to use face detection in their apps without requiring a background in machine learning.

The code for this tutorial is available on GitHub.

Key Takeaways

- Face-api.js is a library that enables developers to use face detection in their web applications without requiring a background in machine learning. It can detect faces, estimate various things about them, and even recognize who’s in the picture.

- The library uses TensorFlow.js, a popular JavaScript machine learning library, to create, train, and use neural networks in the browser. However, face-api.js wraps all of this in an intuitive API, making it easy to use.

- Face-api.js is a great tool for hobby projects, experiments, and even for an MVP. However, it can take a toll on performance, and developers may have to trade accuracy for smaller downloads and better performance.

- While face-api.js is a powerful tool, it’s important to note that artificial intelligence excels at amplifying biases. Therefore, developers should be thoughtful about using this technology and test thoroughly with a diverse testing group.

Face Detection with Machine Learning

Detecting objects, like a face, is quite complex. Think about it: perhaps we could write a program that scans pixels to find the eyes, nose, and mouth. It can be done, but making it totally reliable is practically unachievable, given the many factors to account for: lighting conditions, facial hair, the vast variety of shapes and colors, makeup, angles, face masks, and so much more.

Neural networks, however, excel at these kinds of problems and can be generalized to account for most (if not all) conditions. We can create, train, and use neural networks in the browser with TensorFlow.js, a popular JavaScript machine learning library. However, even if we use an off-the-shelf, pre-trained model, we’d still get a little bit into the nitty-gritty of supplying the information to TensorFlow and interpreting the output. If you’re interested in the technical details of machine learning, check out “A Primer on Machine Learning with Python”.

Enter face-api.js. It wraps all of this in an intuitive API. We can pass an img, canvas, or video DOM element, and the library will return one result or a set of results. Face-api.js can not only detect faces but also estimate various things about them, as listed below.

- Face detection: get the boundaries of one or multiple faces. This is useful for determining where and how big the faces are in a picture.

- Face landmark detection: get the position and shape of the eyebrows, eyes, nose, mouth and lips, and chin. This can be used to determine facing direction or to project graphics on specific regions, like a mustache between the nose and lips.

- Face recognition: determine who’s in the picture.

- Face expression detection: get the expression from a face. Note that your mileage may vary across cultures.

- Age and gender detection: get the age and gender from a face. Note that “gender” classification labels a face as feminine or masculine, which doesn’t necessarily reveal the person’s gender.
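As a small illustration of using landmarks to determine facing direction, the head’s roll (its in-plane tilt) can be estimated from the line connecting the two eye centers. This is a minimal sketch: rollDegrees is a hypothetical helper, not part of face-api.js, and it assumes the eye centers are plain { x, y } objects in image coordinates, where y grows downward. It says nothing about turning left/right or nodding up/down, which would need more landmarks.

```javascript
// Hypothetical helper (not part of face-api.js): estimate head roll from two eye centers.
// Points are { x, y } in image coordinates, where y increases downward, so a positive
// angle means the second eye sits lower than the first (the head looks tilted clockwise).
// Pass the eye that appears on the left of the image first.
function rollDegrees(firstEye, secondEye) {
  const dx = secondEye.x - firstEye.x;
  const dy = secondEye.y - firstEye.y;
  // Math.atan2 returns radians in (-PI, PI]; convert to degrees
  return (Math.atan2(dy, dx) * 180) / Math.PI;
}

console.log(rollDegrees({ x: 100, y: 120 }, { x: 160, y: 120 })); // 0 (level eyes)
console.log(rollDegrees({ x: 0, y: 0 }, { x: 10, y: 10 })); // approximately 45
```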

Before you use any of this beyond experiments, please note that artificial intelligence excels at amplifying biases. Gender classification works well for cisgender people, but it can’t detect the gender of my nonbinary friends. It will identify white people most of the time but frequently fails to detect people of color.

Be very thoughtful about using this technology and test thoroughly with a diverse testing group.
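One way to make “test thoroughly” measurable is to record, for each test image, which group of testers it belongs to and whether a face was detected, then compare detection rates across groups; a large gap between groups is a red flag. The sketch below does not call face-api.js itself, and the { group, detected } result shape and the function name detectionRateByGroup are hypothetical.

```javascript
// Hypothetical test-harness helper: share of test images in which a face was
// detected, per group. Each result is assumed to look like { group, detected }.
function detectionRateByGroup(results) {
  const totals = new Map();
  for (const { group, detected } of results) {
    const entry = totals.get(group) || { hits: 0, count: 0 };
    entry.count += 1;
    if (detected) entry.hits += 1;
    totals.set(group, entry);
  }

  const rates = {};
  for (const [group, { hits, count }] of totals) {
    rates[group] = hits / count;
  }
  return rates;
}

// Made-up results for two groups, labeled A and B
const rates = detectionRateByGroup([
  { group: 'A', detected: true },
  { group: 'A', detected: true },
  { group: 'B', detected: true },
  { group: 'B', detected: false },
]);
console.log(rates); // { A: 1, B: 0.5 }
```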

Installation

We can install face-api.js via npm:

```bash
npm install face-api.js
```

However, to skip setting up build tools, I’ll include the UMD bundle via unpkg:

```javascript
/* globals faceapi */
// Inside a <script type="module">: the UMD bundle defines the global `faceapi`
import 'https://unpkg.com/face-api.js@0.22.2/dist/face-api.min.js';
```

After that, we’ll need to download the correct pre-trained model(s) from the library’s repository. Decide what we want to know about faces, and use the Available Models section to determine which models are required. Some features work with multiple models. In that case, we have to choose between bandwidth/performance and accuracy. Compare the file sizes of the various available models and choose whichever you think is best for your project.

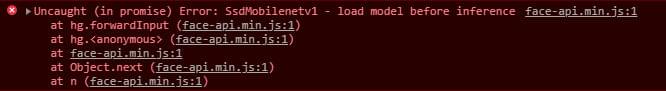

Unsure which models you need for your use? You can return to this step later. When we use the API without loading the required models, an error will be thrown, stating which model the library expects.
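If you already know which features you need, the choice can be written down as a small lookup. The sketch below is hypothetical: netsFor and its feature labels ('landmarks', 'recognition', 'expressions', 'ageGender') are made up for illustration, while the returned strings are meant to match the faceapi.nets entries in face-api.js 0.22 (double-check them against the Available Models section). It assumes recognition also needs a landmark model, because face-api.js computes face descriptors on landmark-aligned faces.

```javascript
// Hypothetical planning helper: map needed features to faceapi.nets entry names.
// Names assume face-api.js 0.22; verify against the README before relying on this.
function netsFor(features, { preferSmall = false } = {}) {
  const nets = new Set();

  // Every feature starts with a face detector
  nets.add(preferSmall ? 'tinyFaceDetector' : 'ssdMobilenetv1');

  // Recognition works on landmark-aligned faces, so it needs landmarks too
  if (features.includes('landmarks') || features.includes('recognition')) {
    nets.add(preferSmall ? 'faceLandmark68TinyNet' : 'faceLandmark68Net');
  }
  if (features.includes('recognition')) nets.add('faceRecognitionNet');
  if (features.includes('expressions')) nets.add('faceExpressionNet');
  if (features.includes('ageGender')) nets.add('ageGenderNet');

  return [...nets];
}

console.log(netsFor(['landmarks'], { preferSmall: true }));
// [ 'tinyFaceDetector', 'faceLandmark68TinyNet' ]
```

In the browser, each returned name could then be loaded with faceapi.nets[name].loadFromUri('/models'), assuming the weights are served from /models as in the examples that follow.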

We’re now ready to use the face-api.js API.

Examples

Let’s build some stuff!

For the examples below, I’ll load a random image from Unsplash Source with this function:

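```javascript
function loadRandomImage() {
  const image = new Image();
  // Request the image with CORS so its pixels can be read (e.g. by face-api.js)
  // without a security error; crossOrigin expects a string such as 'anonymous'
  image.crossOrigin = 'anonymous';

  return new Promise((resolve, reject) => {
    image.addEventListener('error', (error) => reject(error));
    image.addEventListener('load', () => resolve(image));
    image.src = 'https://source.unsplash.com/512x512/?face,friends';
  });
}
```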

Cropping a picture

You can find the code for this demo in the accompanying GitHub repo.

First, we have to choose and load the model. To crop an image, we only need to know the boundary box of a face, so face detection is enough. We can use two models to do that: SSD Mobilenet v1 model (just under 6MB) and the Tiny Face Detector model (under 200KB). Let’s say accuracy is less important because users also have the option to crop manually. Additionally, let’s assume visitors use this feature on a slow internet connection. Because our focus is on bandwidth and performance, we’ll choose the smaller Tiny Face Detector model.
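To get a feel for what a slow connection means here, a back-of-the-envelope estimate divides the model size in bits by the connection speed. This sketch assumes decimal units (1 MB = 1,000,000 bytes), a hypothetical 1 Mbit/s connection, and the approximate upper bounds mentioned above; it ignores latency, protocol overhead, and caching.

```javascript
// Rough transfer time: size in bits divided by bandwidth in bits per second.
// Ignores latency, TCP slow start, HTTP overhead, and browser caching.
function downloadSeconds(sizeBytes, megabitsPerSecond) {
  const bits = sizeBytes * 8;
  return bits / (megabitsPerSecond * 1_000_000);
}

const SSD_MOBILENET_BYTES = 6_000_000; // "just under 6MB"
const TINY_FACE_DETECTOR_BYTES = 200_000; // "under 200KB"

console.log(downloadSeconds(SSD_MOBILENET_BYTES, 1)); // 48
console.log(downloadSeconds(TINY_FACE_DETECTOR_BYTES, 1)); // 1.6
```

On such a connection, that is the difference between waiting almost a minute and waiting under two seconds before detection can even start.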

After downloading the model, we can load it:

```javascript
await faceapi.nets.tinyFaceDetector.loadFromUri('/models');
```

We can now load an image and pass it to face-api.js. faceapi.detectAllFaces uses the SSD Mobilenet v1 model by default, so we’ll have to explicitly pass new faceapi.TinyFaceDetectorOptions() to force it to use the Tiny Face Detector model.

```javascript
const image = await loadRandomImage();
const faces = await faceapi.detectAllFaces(image, new faceapi.TinyFaceDetectorOptions());
```

The variable faces now contains an array of results. Each result has a box and a score property. The score indicates how confident the neural net is that the result is indeed a face. The box property contains an object with the coordinates of the face. We could select the first result (or use faceapi.detectSingleFace()), but if the user submits a group photo, we want every face to appear in the cropped picture. To do that, we can compute a custom boundary box:

```javascript
const box = {
  // Start each boundary at the opposite infinity, so the first face always replaces it
  bottom: -Infinity,
  left: Infinity,
  right: -Infinity,
  top: Infinity,

  // Given the boundaries, we can compute width and height
  get height() {
    return this.bottom - this.top;
  },

  get width() {
    return this.right - this.left;
  },
};

// Grow the box so it contains every detected face
for (const face of faces) {
  box.bottom = Math.max(box.bottom, face.box.bottom);
  box.left = Math.min(box.left, face.box.left);
  box.right = Math.max(box.right, face.box.right);
  box.top = Math.min(box.top, face.box.top);
}
```
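A group photo may also produce low-confidence detections, such as a face-like pattern in the background, which would stretch the combined box. The detector options already accept a threshold (scoreThreshold for TinyFaceDetectorOptions, minConfidence for SsdMobilenetv1Options), so a manual filter like the hypothetical keepConfident below is mainly useful for applying a stricter cutoff to one feature without re-running detection. It assumes results shaped like face-api.js detections, { score, box }.

```javascript
// Hypothetical helper: keep only detections whose score meets a minimum.
// Assumes each detection looks like { score, box }, as face-api.js results do.
function keepConfident(detections, minScore = 0.5) {
  return detections.filter((detection) => detection.score >= minScore);
}

// Made-up detections: one confident face, one doubtful one
const detections = [
  { score: 0.92, box: { top: 10, left: 20, bottom: 110, right: 100 } },
  { score: 0.31, box: { top: 300, left: 400, bottom: 320, right: 420 } },
];
console.log(keepConfident(detections, 0.5).length); // 1
```

In the demo, the loop could iterate over keepConfident(faces, 0.6) instead of faces; the threshold is an arbitrary choice to tune against your own images.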

Finally, we can create a canvas and show the result:

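```javascript
const canvas = document.createElement('canvas');
const context = canvas.getContext('2d');

// Size the canvas to the combined face box
canvas.height = box.height;
canvas.width = box.width;

// Copy the face region of the source image onto the whole canvas
context.drawImage(
  image,
  box.left, // source x
  box.top, // source y
  box.width, // source width
  box.height, // source height
  0, // destination x
  0, // destination y
  canvas.width,
  canvas.height
);

// Show the result on the page
document.body.append(canvas);
```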
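The crop above has two rough edges: if no face is detected, the box keeps its infinite boundaries, and the crop hugs the faces tightly. The sketch below is one way to handle both under stated assumptions: cropRect is a hypothetical helper that returns null when there are no faces, grows the union of all boxes by a margin (an arbitrary 20% by default), and clamps the result to the image so the margin never reaches past its edges. It expects boxes with top, left, bottom, and right, like face.box in the demo.

```javascript
// Hypothetical helper: crop rectangle around all faces, with a margin,
// clamped to the image. Returns null when there are no faces.
function cropRect(boxes, imageWidth, imageHeight, margin = 0.2) {
  if (boxes.length === 0) return null;

  let top = Infinity;
  let left = Infinity;
  let bottom = -Infinity;
  let right = -Infinity;
  for (const box of boxes) {
    top = Math.min(top, box.top);
    left = Math.min(left, box.left);
    bottom = Math.max(bottom, box.bottom);
    right = Math.max(right, box.right);
  }

  // Grow each side by a fraction of the union's size, then clamp to whole pixels inside the image
  const padX = (right - left) * margin;
  const padY = (bottom - top) * margin;
  left = Math.max(0, Math.floor(left - padX));
  top = Math.max(0, Math.floor(top - padY));
  right = Math.min(imageWidth, Math.ceil(right + padX));
  bottom = Math.min(imageHeight, Math.ceil(bottom + padY));

  return { x: left, y: top, width: right - left, height: bottom - top };
}

console.log(cropRect([], 512, 512)); // null
console.log(
  cropRect(
    [
      { top: 100, left: 50, bottom: 200, right: 150 },
      { top: 120, left: 300, bottom: 220, right: 400 },
    ],
    512,
    512,
    0.1
  )
); // { x: 15, y: 88, width: 420, height: 144 }
```

In the demo, cropRect(faces.map((face) => face.box), image.width, image.height) would supply the four source arguments of drawImage, and its width and height would size the canvas.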

Placing Emojis

You can find the code for this demo in the accompanying GitHub repo.

Why not have a little bit of fun? We can make a filter that puts a mouth emoji (👄) on all eyes. To find the eye landmarks, we need another model. This time, we care about accuracy, so we use the SSD Mobilenet v1 and 68 Point Face Landmark Detection models.

Again, we need to load the models and image first:

```javascript
await faceapi.nets.faceLandmark68Net.loadFromUri('/models');
await faceapi.nets.ssdMobilenetv1.loadFromUri('/models');

const image = await loadRandomImage();
```

To get the landmarks, we must append the withFaceLandmarks() function call to detectAllFaces() to get the landmark data:

```javascript
const faces = await faceapi
  .detectAllFaces(image)
  .withFaceLandmarks();
```

Like last time, faces contains a list of results. In addition to where the face is, each result also contains a raw list of points for the landmarks. To get the right landmarks per feature, we need to slice the list of points. Because the number of points is fixed, I chose to hardcode the indices:

```javascript
for (const face of faces) {
  const features = {
    jaw: face.landmarks.positions.slice(0, 17),
    eyebrowLeft: face.landmarks.positions.slice(17, 22),
    eyebrowRight: face.landmarks.positions.slice(22, 27),
    noseBridge: face.landmarks.positions.slice(27, 31),
    nose: face.landmarks.positions.slice(31, 36),
    eyeLeft: face.landmarks.positions.slice(36, 42),
    eyeRight: face.landmarks.positions.slice(42, 48),
    lipOuter: face.landmarks.positions.slice(48, 60),
    lipInner: face.landmarks.positions.slice(60),
  };

  // ...
}
```
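Hardcoded indices work because the 68-point layout is fixed, but a name-to-range table keeps them in one place and makes a length check easy. A minimal sketch follows; LANDMARKS_68 and splitLandmarks68 are hypothetical names, and the ranges are the same ones used in the loop above. For a single feature, face-api.js’s FaceLandmarks68 class also offers getters such as getLeftEye() and getMouth().

```javascript
// Index ranges of the 68-point landmark layout, as [start, end) pairs
// (the same semantics as Array.prototype.slice).
const LANDMARKS_68 = {
  jaw: [0, 17],
  eyebrowLeft: [17, 22],
  eyebrowRight: [22, 27],
  noseBridge: [27, 31],
  nose: [31, 36],
  eyeLeft: [36, 42],
  eyeRight: [42, 48],
  lipOuter: [48, 60],
  lipInner: [60, 68],
};

// Hypothetical helper: split a flat list of 68 points into named features.
// Throws on the wrong number of points instead of silently returning bad slices.
function splitLandmarks68(positions) {
  if (positions.length !== 68) {
    throw new Error(`Expected 68 landmark points, got ${positions.length}`);
  }
  const features = {};
  for (const [name, [start, end]] of Object.entries(LANDMARKS_68)) {
    features[name] = positions.slice(start, end);
  }
  return features;
}

// Synthetic points for illustration; in the demo you would pass face.landmarks.positions
const fakePositions = Array.from({ length: 68 }, (_, i) => ({ x: i, y: i }));
console.log(splitLandmarks68(fakePositions).eyeLeft.length); // 6
```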

Now we can finally have a little bit of fun. There are so many options, but let’s cover the eyes with the mouth emoji (👄).

First we have to determine where to place the emoji and how big it should be drawn. To do that, let’s write a helper function that creates a box from an arbitrary set of points. The box holds all the information we need:

```javascript
function getBoxFromPoints(points) {
  const box = {
    bottom: -Infinity,
    left: Infinity,
    right: -Infinity,
    top: Infinity,

    get center() {
      return {
        x: this.left + this.width / 2,
        y: this.top + this.height / 2,
      };
    },

    get height() {
      return this.bottom - this.top;
    },

    get width() {
      return this.right - this.left;
    },
  };

  for (const point of points) {
    box.left = Math.min(box.left, point.x);
    box.right = Math.max(box.right, point.x);
    box.bottom = Math.max(box.bottom, point.y);
    box.top = Math.min(box.top, point.y);
  }

  return box;
}
```
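Because getBoxFromPoints only does arithmetic, it can be checked outside the browser. The sketch below is a condensed, standalone variant (boxFromPoints is a hypothetical name) that computes the same bounds and center with plain values instead of getters, run on six made-up points roughly outlining an eye.

```javascript
// Condensed variant of getBoxFromPoints: same bounds, plain values instead of getters.
// Like the original, an empty list yields infinite bounds (Math.min() is Infinity).
function boxFromPoints(points) {
  const xs = points.map((point) => point.x);
  const ys = points.map((point) => point.y);
  const left = Math.min(...xs);
  const right = Math.max(...xs);
  const top = Math.min(...ys);
  const bottom = Math.max(...ys);
  return {
    left,
    right,
    top,
    bottom,
    width: right - left,
    height: bottom - top,
    center: { x: left + (right - left) / 2, y: top + (bottom - top) / 2 },
  };
}

// Made-up points roughly outlining an eye
const eye = [
  { x: 100, y: 50 }, { x: 110, y: 45 }, { x: 120, y: 45 },
  { x: 130, y: 50 }, { x: 120, y: 55 }, { x: 110, y: 55 },
];
console.log(boxFromPoints(eye).center); // { x: 115, y: 50 }
console.log(boxFromPoints(eye).height); // 10
```

With the demo’s magic numbers, this eye would get a 60px emoji (6 × 10).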

Now we can start drawing emojis over the picture. Because we have to do this for both eyes, we can put features.eyeLeft and features.eyeRight in an array and iterate over them to execute the same code for each eye. All that remains is to draw the emojis on the canvas!

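```javascript
// Runs inside the `for (const face of faces)` loop above, where `features` is defined.
// `context` is assumed to be the 2D context of a canvas with the image already drawn on it.
for (const eye of [features.eyeLeft, features.eyeRight]) {
  const eyeBox = getBoxFromPoints(eye);
  const fontSize = 6 * eyeBox.height;

  // Canvas ignores line-height in the font shorthand, so the size alone is enough
  context.font = `${fontSize}px serif`;
  context.textAlign = 'center';
  context.textBaseline = 'bottom';
  context.fillStyle = '#000';

  context.fillText('👄', eyeBox.center.x, eyeBox.center.y + 0.6 * fontSize);
}
```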

Note that I used some magic numbers to tweak the font size and the exact text position. Because emojis are Unicode characters and typography on the Web is weird (to me, at least), I just tweaked the numbers until the emojis looked about right. A more robust alternative would be to use an image as an overlay.
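The image-overlay alternative can be sketched as plain geometry: scale the overlay to a multiple of the eye box’s width, keep its aspect ratio, and center it on the eye. The name overlayRect, the default scale of 2.5, and the { left, top, width, height } input shape are assumptions; the returned values are meant for the destination arguments of context.drawImage(overlay, dx, dy, width, height).

```javascript
// Hypothetical helper: where to draw an overlay image so it is centered on a
// feature box and scaled to `scale` times the box width, preserving aspect ratio.
function overlayRect(featureBox, overlayWidth, overlayHeight, scale = 2.5) {
  const width = featureBox.width * scale;
  const height = width * (overlayHeight / overlayWidth);
  const centerX = featureBox.left + featureBox.width / 2;
  const centerY = featureBox.top + featureBox.height / 2;
  return { dx: centerX - width / 2, dy: centerY - height / 2, width, height };
}

// A 30 by 10 eye box at (100, 45) and a 64 by 32 overlay image, scaled 2x
console.log(overlayRect({ left: 100, top: 45, width: 30, height: 10 }, 64, 32, 2));
// { dx: 85, dy: 35, width: 60, height: 30 }
```

In the demo, eyeBox already has left, top, width, and height, so it could be passed directly along with a preloaded overlay image’s naturalWidth and naturalHeight. Unlike font metrics, these numbers do not depend on how a browser renders a particular emoji.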

Conclusion

Face-api.js is a great library that makes face detection and recognition really accessible. Familiarity with machine learning and neural networks isn’t required. I love tools that are enabling, and this is definitely one of them.

In my experience, face recognition on the Web takes a toll on performance, and we’ll have to trade accuracy for bandwidth and performance. The smaller models are definitely less accurate and would miss a face under some of the conditions I mentioned before, like poor lighting or faces covered with a mask.

Microsoft Azure, Google Cloud, and probably other providers offer face detection in the cloud. Because there are no big models to download, cloud-based detection avoids heavy page loads, tends to be more accurate because the models are improved frequently, and may even be faster thanks to optimized hardware. If you need high accuracy, you may want to look into a plan that you’re comfortable with.

I definitely recommend playing with face-api.js for hobby projects, experiments, and maybe for an MVP.

Frequently Asked Questions (FAQs) about Face API.js

What is Face API.js and how does it work?

Face API.js is a JavaScript library that uses TensorFlow.js to perform face detection, face recognition, and facial landmark detection in the browser. It works by using machine learning models to detect and recognize faces in images or real-time video streams. The library provides several APIs that allow developers to perform tasks such as detecting all faces in an image, recognizing the faces of specific individuals, and identifying facial features like the eyes, nose, and mouth.

How can I install and use Face API.js in my project?

To install Face API.js, you can use npm or yarn. Once installed, you can import the library into your project and start using its APIs. The library provides a comprehensive set of examples and tutorials that can help you get started.

Can Face API.js be used for real-time face detection and recognition?

Yes, Face API.js can be used for real-time face detection and recognition. The library provides APIs that can process video streams and detect or recognize faces in real-time. This makes it suitable for applications such as surveillance, video conferencing, and interactive installations.

What are the system requirements for using Face API.js?

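Face API.js runs on TensorFlow.js, so it works best in a modern browser with WebGL support, which lets TensorFlow.js run the models on the GPU. Without WebGL, TensorFlow.js falls back to a much slower CPU backend. Because face detection and recognition are computationally intensive, the hardware you need depends on the use case: the models you choose, the input size, how often you run detection (for example, on every video frame), and the number of faces that need to be processed.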

How accurate is Face API.js in detecting and recognizing faces?

The accuracy of Face API.js depends on several factors, including the model you choose, the quality of the input images or video, the lighting conditions, and the pose of the faces. The smaller models, such as the Tiny Face Detector, trade accuracy for size and speed. And as noted earlier, accuracy can differ between groups of people, so measure it on images that represent your actual users rather than assuming it will be high in most conditions.

Can Face API.js detect and recognize faces in different lighting conditions and poses?

Yes, Face API.js can detect and recognize faces in a variety of lighting conditions and poses. However, like all machine learning models, its performance can be affected by extreme lighting conditions or unusual poses.

Can Face API.js be used in commercial projects?

Yes, Face API.js is open source (released under the MIT license) and can be used in both personal and commercial projects. However, it’s always a good idea to check the license terms before using any open-source library in a commercial project.

How can I improve the performance of Face API.js in my application?

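There are several ways to improve the performance of Face API.js. Load only the models you need, and prefer the smaller ones (such as the Tiny Face Detector) when their accuracy is good enough. Reduce the input resolution: for the Tiny Face Detector, a smaller inputSize in TinyFaceDetectorOptions is faster but less likely to find small faces. For video, run detection less often than on every frame. Finally, tune the detector options, such as scoreThreshold (TinyFaceDetectorOptions) or minConfidence and maxResults (SsdMobilenetv1Options), to control which results are returned.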
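The face-api.js README describes the Tiny Face Detector’s inputSize as the size at which the image is processed, which must be divisible by 32. If you derive it from something else, such as the video’s dimensions, a small helper can snap it to a valid value. The name toTinyInputSize and the 128 to 608 pixel bounds are assumptions for illustration.

```javascript
// Hypothetical helper: snap a desired processing size to the nearest multiple of 32,
// clamped to an assumed range of 128 to 608 pixels (both multiples of 32).
function toTinyInputSize(desired, min = 128, max = 608) {
  const snapped = Math.round(desired / 32) * 32;
  return Math.min(max, Math.max(min, snapped));
}

console.log(toTinyInputSize(300)); // 288
console.log(toTinyInputSize(1000)); // 608
console.log(toTinyInputSize(50)); // 128
```

The result could then be passed as new faceapi.TinyFaceDetectorOptions({ inputSize: toTinyInputSize(300) }); smaller values trade detection of small faces for speed.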

Can Face API.js recognize faces in images or video that contain multiple people?

Yes, Face API.js can detect and recognize multiple faces in the same image or video. detectAllFaces() returns an array of results, each with its own bounding box plus any additional data you request, such as landmarks, expressions, or face descriptors for recognition.
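When an image contains several faces but a feature needs just one, one common heuristic is to pick the largest bounding box, on the assumption that it belongs to the person closest to the camera. This is a minimal sketch: largestFace is a hypothetical helper, and it assumes results shaped like { score, box } where box exposes width and height, as face-api.js boxes do.

```javascript
// Hypothetical helper: return the detection with the largest box area,
// or undefined when the list is empty.
function largestFace(detections) {
  let best;
  for (const detection of detections) {
    const area = detection.box.width * detection.box.height;
    if (best === undefined || area > best.area) {
      best = { detection, area };
    }
  }
  return best && best.detection;
}

// Made-up detections: a small confident face and a larger, less confident one
const faces = [
  { score: 0.9, box: { width: 40, height: 50 } },
  { score: 0.8, box: { width: 120, height: 140 } },
];
console.log(largestFace(faces).score); // 0.8
```

Note that the largest face is not necessarily the most confident one, so you may want to combine this with a score threshold.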

Can Face API.js be used with other JavaScript libraries or frameworks?

Yes, Face API.js can be used with any JavaScript library or framework. It’s designed to be flexible and easy to integrate into existing projects.

Tim Severien is an enthusiastic front-end developer from the Netherlands, passionate about JavaScript and Sass. When not writing code, he writes articles for SitePoint or for Tim’s blog.