5 A/B Testing Tools for Making Data-driven Design Decisions

Key Takeaways

- A/B testing is increasingly important in a competitive online marketplace, and can greatly affect a website’s success by optimizing user experience and increasing conversions.

- Five top A/B testing tools are Optimizely, Google Optimize, Unbounce, AB Tasty, and Crazy Egg, each offering unique features and targeting different user needs.

- While some tools require a developer for more complex tests, most offer easy-to-use visual editors for creating experiments, making A/B testing accessible to a wider range of users.

- Regardless of the chosen tool, it’s crucial to establish baseline performance using Google Analytics before making any changes, and to view each incremental improvement as a step towards maximizing conversions.

A/B testing is becoming more and more common as teams realize how important it is for a website’s success. The Web is a huge, competitive marketplace with very few (if any) untapped markets, meaning that being successful by offering something unique is rare. Much more common is that you’re competing for the business of your customers with several other websites, so attempting to convert every visitor into a customer or upselling/cross-selling your services better could make all the difference to your bottom line.

Because of this, the market for A/B testing tools and CRO (conversion rate optimization) tools is growing quickly. But choosing one can be quite a time-consuming challenge, so in this article I’ll compare the best A/B testing tools to help you decide which is most suitable for you or your team. If you want to get up to speed with A/B testing and CRO, check out our recent Introduction to A/B Testing article.

TL;DR: A/B testing is about experimenting with visual and content changes to see which results in more conversions. It often follows usability testing, as a way of testing a fix for a flaw in the user experience that was identified using metrics like bounce rate in an analytics tool like Google Analytics. Thanks to the depth and quality of the A/B testing tools available now, it’s accessible to designers as well as marketers and developers.

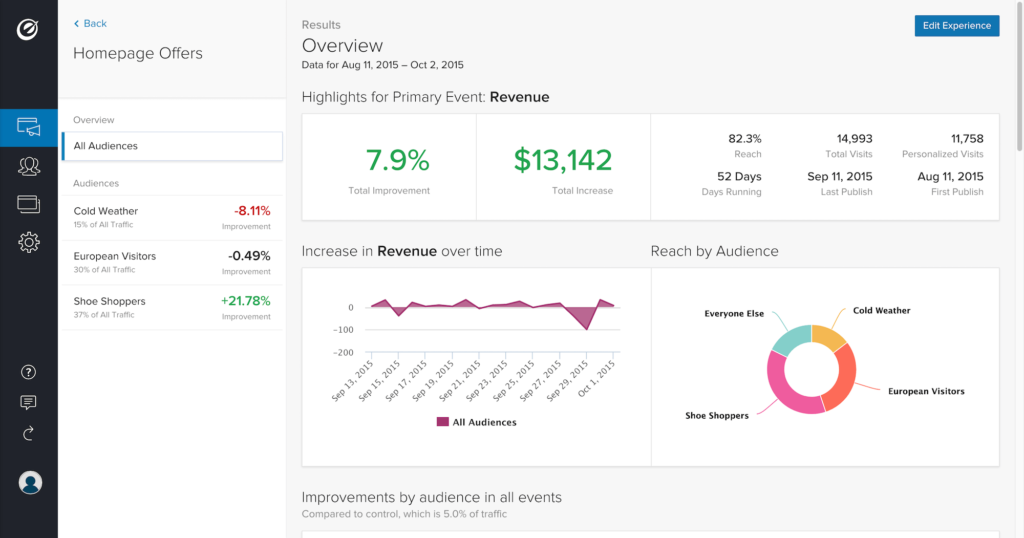

1. Optimizely

- Summary: the leading A/B testing tool in 2017

- Price: contact sales team

- Who it’s for: designers, marketers and developers collaborating

Optimizely is one of the leading — if not the leading — A/B testing and CRO tools on the market today. It offers analytics tools to suit users of all levels, and a multitude of A/B testing tools. (You could think of it as the Google Analytics of A/B testing, with a much simpler user interface.)

Consider this scenario: You have an ecommerce store built with Magento. You’re aware that in certain cases it may benefit stores to add a one-step checkout solution instead of the standard multi-page checkout, but you’re not sure if your store fits that use case. You need to test both options and compare the results with/without the one-step checkout experience. You know that running two versions of the checkout simultaneously requires changes to the code, which is a complex matter.

With Optimizely, you can send a set percentage of your users to a totally separate checkout experience to collect conversion data. If the experiment yields negative results, you delete the experiment and the original checkout web page still exists and works fine. No harm done.
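
To make the idea of sending a share of users to a separate experience more concrete, here is a minimal sketch of deterministic traffic splitting. It is not Optimizely's API or implementation: the function name `assign_variation`, the experiment key and the 10% share are all hypothetical, and hashing the user ID is just one common way to keep each user in the same variation across visits.

```python
import hashlib


def assign_variation(user_id: str, experiment: str, treatment_share: float = 0.1) -> str:
    """Deterministically assign a user to 'control' or 'treatment'.

    Hypothetical helper for illustration; not part of any vendor SDK.
    """
    # Hash the experiment name together with the user ID so the same user
    # always lands in the same variation for a given experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


if __name__ == "__main__":
    # Example: roughly 10% of users would see the one-step checkout.
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variation(uid, "one-step-checkout"))
```

Deleting the experiment then amounts to removing the branch that checks for `"treatment"`, which leaves the original checkout untouched.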

Optimizely has all your bases covered. Its Web Experimentation tool offers an easy-to-use visual editor for creating A/B tests without a developer, the ability to target specific user types and segments, and support for running experiments on any device.

Although you can run A/B tests without a developer, your variations can be more targeted (for example, your variations can go beyond color, layout and content changes) if you have the skills and/or resources to develop custom experiments with code. By integrating your A/B tests into your code, you can serve different logic and test major changes before pushing them live.

Also, if your product extends beyond the web, Optimizely works with iOS, tvOS and Android apps. Optimizely’s Full Stack integrations make it possible to integrate A/B tests into virtually any codebase, including Python, Java, Ruby, Node, PHP, C#, Swift and Android.

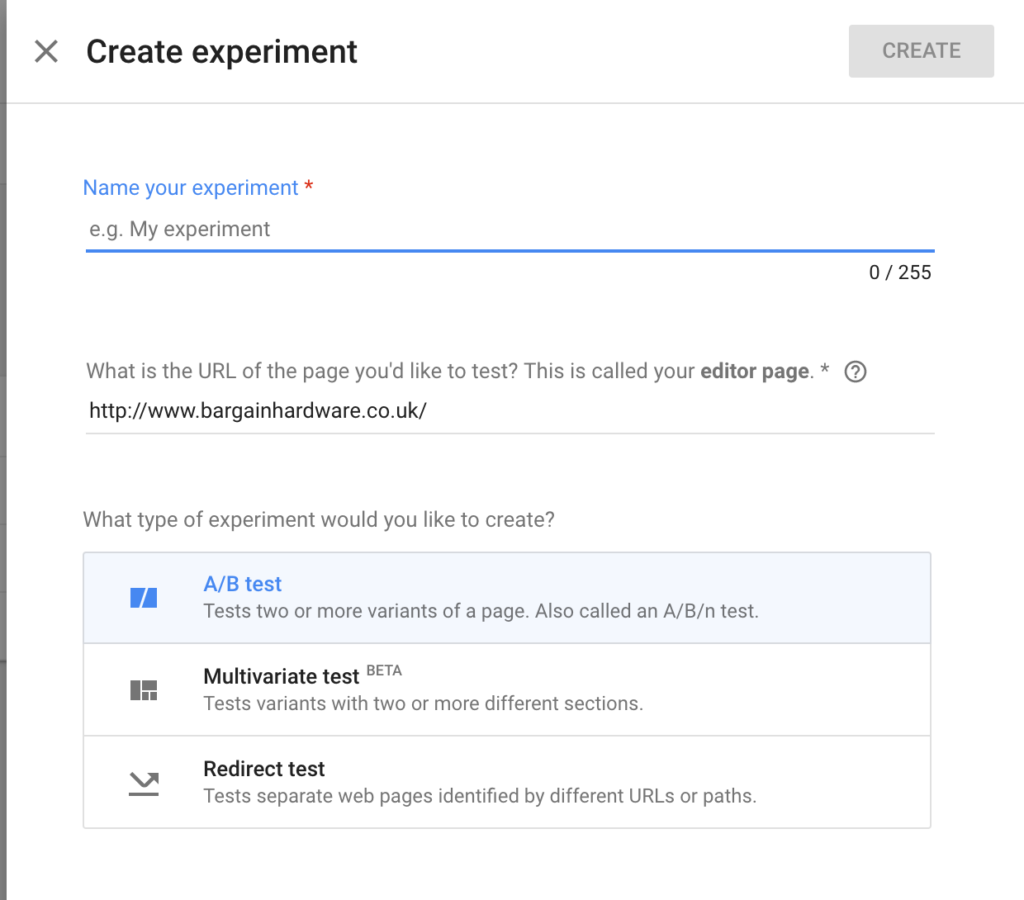

2. Google Optimize

- Summary: A/B testing that seamlessly integrates with Google Analytics

- Price: free

- Who it’s for: anyone, being the easiest to learn of the bunch

Google Optimize is a free, easy-to-use tool that integrates directly with your Google Analytics Events and Goals to make A/B testing quick and easy! It’s ideal for traditional A/B testing, focusing on comparing different CTA (call to action) elements, colors and content.

Developers aren’t required for implementing Google Optimize, since it’s as simple as adding a line of JavaScript to your website and then customizing your layout with the visual editor. With this you can change the content, layout, colors, classes and HTML of any element within your page.

It’s not as sophisticated as Optimizely, since it doesn’t allow you to create custom experiments with code/developers, but it’s free. It’s great for those starting out with A/B testing.

For each Google Optimize experiment, you’ll need to specify which Google Analytics Goals or Events will be the baseline for your A/B tests. For example, if you were A/B testing a product page, you could use an “Add To Basket” event that you’ve defined in Google Analytics to evaluate which of your variations converts the best. The Google Analytics report then gives you a clear indication of which variation converts best. It’s ideal for those on a low budget!
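
The comparison described above boils down to simple arithmetic on event counts. The sketch below is not Google Optimize or Google Analytics code. It assumes you have already exported the visitor and "Add To Basket" counts for each variation, and the class name, function names and figures are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VariationStats:
    name: str
    visitors: int
    conversions: int  # e.g. number of "Add To Basket" events

    @property
    def conversion_rate(self) -> float:
        # Guard against division by zero for variations with no traffic yet.
        return self.conversions / self.visitors if self.visitors else 0.0


def best_variation(variations: List[VariationStats]) -> VariationStats:
    """Return the variation with the highest conversion rate."""
    return max(variations, key=lambda v: v.conversion_rate)


def relative_lift(control: VariationStats, variant: VariationStats) -> float:
    """Relative improvement of the variant over the control (0.15 means +15%)."""
    if control.conversion_rate == 0:
        raise ValueError("control has no conversions; lift is undefined")
    return (variant.conversion_rate - control.conversion_rate) / control.conversion_rate


if __name__ == "__main__":
    # Illustrative numbers only, not real data.
    original = VariationStats("original", visitors=5000, conversions=400)
    variant = VariationStats("green button", visitors=5000, conversions=460)
    winner = best_variation([original, variant])
    print(f"{winner.name}: {winner.conversion_rate:.1%}")
    print(f"relative lift: {relative_lift(original, variant):.1%}")
```

A higher rate on its own doesn't prove the variant is better; the difference still needs to be checked for statistical significance.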

Just don’t get carried away, as Google famously once did, by testing 41 different shades of blue to see which converted best!

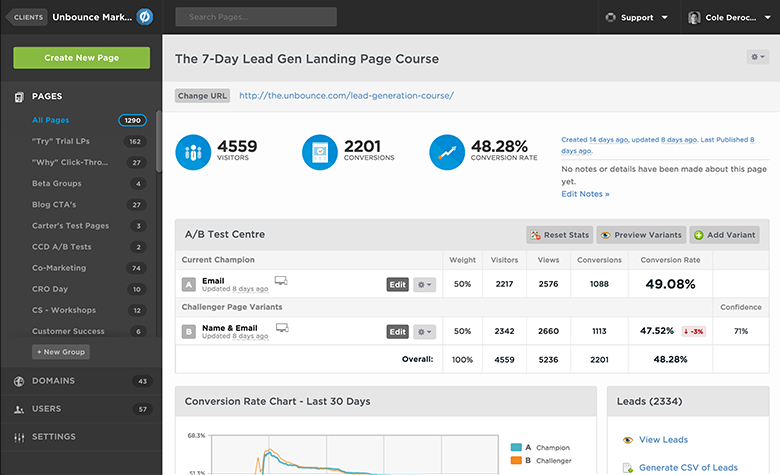

3. Unbounce

- Summary: A/B testing and conversion tools for landing pages

- Price: From $79/month

- Who it’s for: marketers looking to boost conversions on landing pages

Unbounce focuses on landing pages and convertible tools. Convertible tools use triggers, scenario-based overlays and sticky bars to A/B test offers and messages to learn when, where and why your visitors convert. An example? If a user tries to leave your site, they’re shown a discount code in a modal or a sticky header, and a test will determine which is more effective.

Landing pages can be an amazing way to validate your ideas, build excitement around a new product, and/or re-engage dormant customers. The problem with them is that they can result in false negatives. If you get very few conversions, you may feel like your idea is invalidated or demand for the new product doesn’t exist, when in reality users were just unimpressed and/or unconvinced by the landing page. Unbounce helps you to determine what your landing page is missing.

You can choose from over 100 responsive templates designed for many markets, goals and scenarios, and then customize them with your own content using the drag-and-drop UI. You can also integrate Unbounce with your own design, making it a terrific solution for designers and marketers who need to collaborate. Unbounce also works with Zapier and Mailchimp, so data can be transferred across the other apps and tools that marketers use.

It’s basically Optimizely for landing pages.

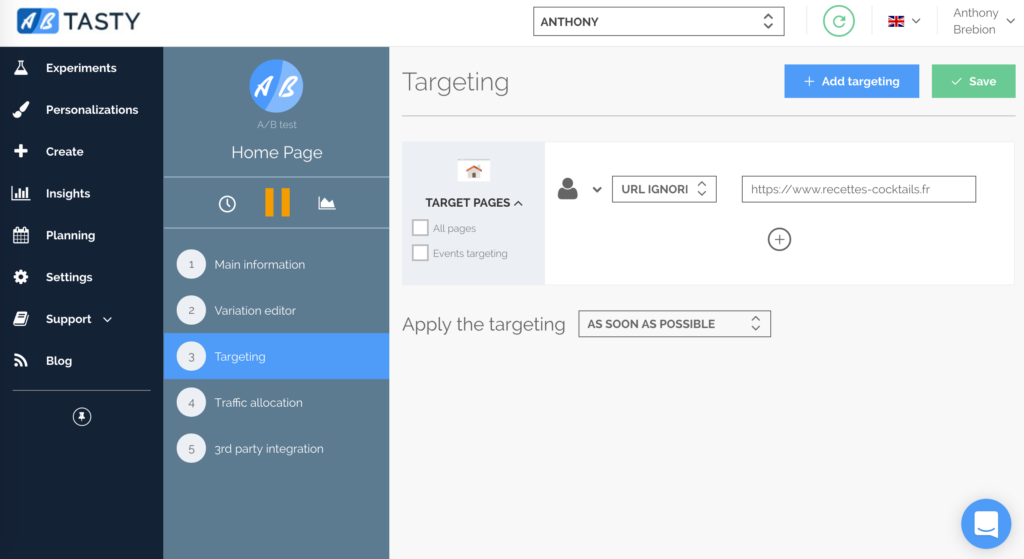

4. AB Tasty

- Summary: A/B testing with CRO features like Exit Intent Detection

- Price: $249+/month

- Who it’s for: designers, marketers, developers and stakeholders

AB Tasty is known for its deeper customizations. Rather than sending X visitors to Test A and Y visitors to Test B, AB Tasty allows you to target visitors based on many different factors, such as user data, user behavior, geolocation, or even the weather in their current location.
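
Conceptually, this kind of targeting is a set of conditions that a visitor must satisfy before they enter an experiment. The following is a rough sketch of that idea only. It is not AB Tasty's API, and the visitor fields (`country`, `weather`, `visits`) and the rule list are hypothetical.

```python
from typing import Any, Callable, Dict, List

Visitor = Dict[str, Any]
Rule = Callable[[Visitor], bool]


def matches_all(visitor: Visitor, rules: List[Rule]) -> bool:
    """A visitor qualifies for the experiment only if every rule passes."""
    return all(rule(visitor) for rule in rules)


# Hypothetical segment: returning visitors in the UK while it is raining.
rainy_uk_returning: List[Rule] = [
    lambda v: v.get("country") == "GB",      # geolocation
    lambda v: v.get("weather") == "rain",    # weather at the visitor's location
    lambda v: v.get("visits", 0) >= 2,       # behavior: has visited before
]

if __name__ == "__main__":
    visitor = {"country": "GB", "weather": "rain", "visits": 3}
    if matches_all(visitor, rainy_uk_returning):
        print("show the targeted variation")
    else:
        print("show the default page")
```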

The visual editor is also easy to use, offering a widget library to add completely new elements to your variations rather than awkwardly customizing existing ones — allowing you to focus more on the targeting and results than tweaking the design. Think of it as rapid iteration, but for A/B testing. It’s very useful for early-stage websites finding their way.

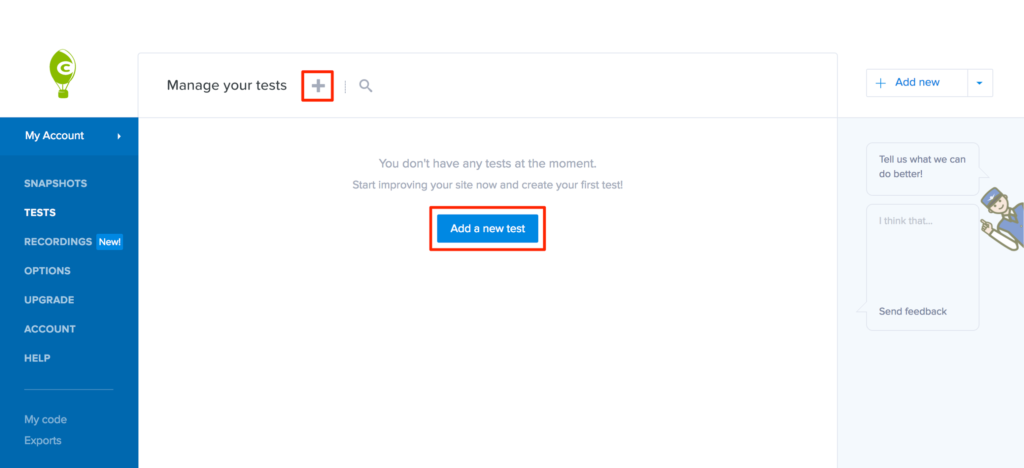

5. Crazy Egg

- Summary: A/B testing combined with heatmaps and screen recordings

- Price: $29+/month

- Who it’s for: anyone, but with a slight focus on designers

Crazy Egg is somewhat new to the A/B testing market, having initially built its audience on heatmap tools and screen recordings. However, the addition of A/B testing tools to a usability testing app makes total sense. If usability testing highlights the problem, then A/B testing can help you narrow down the solution. Also, Crazy Egg’s pricing is very attractive!

Like most other services, Crazy Egg offers a visual editor for creating experiments, making the tool relatively accessible to anyone. It really focuses on making the creation of A/B tests extremely quick and easy, boasting that tests can be created and live within minutes.

In short, Crazy Egg offers you the what, the where, the why, and the ability to test a solution.

Conclusion

With these tools, you can A/B test and begin to improve conversions across practically any scenario and website. You don’t need to be a developer to take advantage of A/B testing tools, but if you do have a developer, you can create really powerful, custom experiments easily.

Whichever tool you use, be sure to establish your baseline performance using Google Analytics to know your conversion rates before you make any changes, and wear each 0.1% improvement as a badge of honor in your quest to maximize conversions! Good luck!

Frequently Asked Questions about A/B Testing Tools

What is the importance of A/B testing in digital marketing?

A/B testing is a crucial component of digital marketing, as it allows marketers to compare two versions of a web page, email, or other marketing asset to determine which performs better. It’s a way to test changes to your page against the current design and determine which one produces better results, and so to validate any new design or change to an element on your web page or mobile app.

How do I choose the right A/B testing tool for my business?

Choosing the right A/B testing tool depends on your business needs and goals. Consider factors such as ease of use, integration capabilities, reporting features, and pricing. Some tools may offer advanced features like multivariate testing or heat mapping, while others might excel in simplicity and user-friendliness. It’s essential to evaluate your needs and test out different tools before making a decision.

Can A/B testing improve my website’s conversion rate?

Yes, A/B testing can significantly improve your website’s conversion rate. By testing different elements of your website, you can identify what works best for your audience and make data-driven decisions. This could lead to improved user experience, increased engagement, and ultimately, higher conversion rates.

What elements of my website should I test with A/B testing tools?

Almost any element of your website that affects visitor behavior can be A/B tested. This includes, but is not limited to, headlines, subheadlines, paragraph text, testimonials, call to action text, call to action buttons, links, images, content near the fold, social proof, media mentions, and awards.

How long should I run an A/B test?

The duration of an A/B test can vary based on several factors, including your website’s traffic, the conversion rates of your original page, and the number of variations you’re testing. As a rule of thumb, decide up front how many visitors you need, then run the test until you reach that number and have a statistically significant result, which typically takes at least two weeks.
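
As a rough illustration of how traffic, baseline conversion rate and number of variations feed into test duration, the sketch below uses the standard normal-approximation sample size formula for comparing two proportions. The function names, the 5% significance level and the 80% power are assumptions chosen for the example, and the tools above may calculate this differently.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(
    baseline_rate: float,
    min_detectable_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate visitors needed per variation (two-sided test).

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    min_detectable_lift: smallest relative improvement worth detecting,
        e.g. 0.2 for a 20% relative lift.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_days(n_per_variation: int, variations: int, daily_visitors: int) -> int:
    """Days needed if traffic is split evenly across all variations."""
    return math.ceil(n_per_variation * variations / daily_visitors)


if __name__ == "__main__":
    n = sample_size_per_variation(baseline_rate=0.05, min_detectable_lift=0.2)
    print("visitors per variation:", n)
    print("days at 1,000 visitors/day:", estimated_days(n, variations=2, daily_visitors=1000))
```

Smaller lifts and lower baseline rates both push the required sample size up sharply, which is why low-traffic sites often need longer tests or bolder changes.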

Can I perform A/B testing on a low-traffic website?

Yes, you can perform A/B testing on a low-traffic website, but it may take longer to get statistically significant results. You may also need to make larger, more noticeable changes to see a significant impact on your conversion rates.

What is multivariate testing, and how does it differ from A/B testing?

Multivariate testing is a method used to test multiple variables on a webpage simultaneously. Unlike A/B testing, which only tests one variable at a time, multivariate testing can help you understand how different elements interact with each other and affect user behavior.
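
To see why multivariate tests need more traffic, it helps to count the combinations involved. This small sketch enumerates every combination of a set of page elements. The element names and values are made up for illustration.

```python
from itertools import product
from typing import Dict, List


def multivariate_combinations(factors: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Return every combination of the given element variants."""
    names = list(factors)
    return [
        dict(zip(names, combo))
        for combo in product(*(factors[name] for name in names))
    ]


if __name__ == "__main__":
    # Hypothetical elements under test: 2 headlines x 3 button colors.
    combos = multivariate_combinations(
        {
            "headline": ["Save time", "Save money"],
            "cta_color": ["green", "orange", "blue"],
        }
    )
    for combo in combos:
        print(combo)
    print(len(combos), "variations to split traffic across")
```

Each extra element multiplies the number of variations, so the traffic available to each one shrinks accordingly.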

Are there any risks associated with A/B testing?

While A/B testing is generally beneficial, there are potential risks if not done correctly. These include testing too many changes at once, making decisions based on inconclusive results, or running tests for too short a period. It’s crucial to approach A/B testing strategically and make data-driven decisions.

How do I analyze the results of an A/B test?

A/B testing tools typically provide an analysis of the test results, showing which version of your page performed better and the statistical significance of the results. Look for changes in key metrics like conversion rates, bounce rates, and time spent on page.
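
If you want to sanity-check the significance figure a tool reports, a two-proportion z-test is one common approach. The sketch below is an assumption about how such a check could be done by hand, not a description of how any particular tool calculates its results, and the counts in the example are illustrative.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; no variation in the data")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Illustrative counts: 400/5000 conversions for A, 460/5000 for B.
    z, p = two_proportion_z_test(400, 5000, 460, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("significant at 5%" if p < 0.05 else "not significant at 5%")
```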

Can A/B testing affect my site’s SEO?

If done correctly, A/B testing should not negatively impact your site’s SEO. Google supports A/B testing and has provided guidelines on how to ensure your tests don’t affect your search ranking. However, it’s important to avoid running tests for longer than necessary and to use canonical tags to prevent duplicate content issues.

Jamie has over 7 years’ experience working for international brands as a full stack developer and e-commerce/conversion optimization expert. Outside of work he likes to hike and is trying to learn new languages.