HTMLInputElement interface with a capture attribute. A basic example would be: <input type="file" capture>.

The capture attribute requests that the device's media capture tool (camera, microphone, etc.) be used to capture media on the spot.

Here is a simple declarative example to illustrate its use. The following HTML form uses capture alongside the accept attribute, which hints at the preferred MIME type of the media the user should capture.

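<input type="file" accept="image/*" capture>

This works for capturing a single file, but it gives the page no access to the live stream. For anything more, such as rendering the camera feed in a <canvas> element and applying some WebGL filters to it, you need a programmatic API.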

And thus, we have Media Capture and Streams.

Media Capture and Streams is a set of JavaScript APIs that allow local media (audio and video) to be requested from a platform. In other words, they provide access to the user’s local audio and video input/output devices.

More specifically, we have the MediaStream API, which provides the means to control where multimedia stream data is consumed, and provides some control over the devices that produce the media. Additionally, the MediaStream API exposes information about the devices that are able to capture and render media.
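The device information mentioned above is exposed through navigator.mediaDevices.enumerateDevices(), which resolves to a list of MediaDeviceInfo objects whose kind is "audioinput", "audiooutput" or "videoinput". The sketch below groups such a list by kind. The helper name groupDevicesByKind is hypothetical, and it takes the list as a plain array so the logic can be tried outside a browser. Keep in mind that browsers typically return empty device labels until the user has granted media permission.

```javascript
// Hypothetical helper: group MediaDeviceInfo-like objects by their `kind`.
// In a browser, the list comes from navigator.mediaDevices.enumerateDevices().
function groupDevicesByKind(devices) {
  const groups = { audioinput: [], audiooutput: [], videoinput: [] };
  for (const device of devices) {
    // Skip any kind this sketch does not know about.
    if (Object.prototype.hasOwnProperty.call(groups, device.kind)) {
      // Labels are usually empty strings until permission has been granted.
      groups[device.kind].push(device.label || "(label hidden until permission is granted)");
    }
  }
  return groups;
}

// Browser-only usage; skipped where navigator.mediaDevices is unavailable (e.g. Node).
if (typeof navigator !== "undefined" && navigator.mediaDevices &&
    typeof navigator.mediaDevices.enumerateDevices === "function") {
  navigator.mediaDevices.enumerateDevices()
    .then(function (devices) { console.log(groupDevicesByKind(devices)); })
    .catch(function (err) { console.log(err.name + ": " + err.message); });
}
```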

Why is it important? Here’s a history lesson for future generations that might take this for granted. Media (audio/video) capture has been the “Nirvana” of web development for some time. Historically, we had to rely on browser plugins (Flash or Silverlight) to achieve this. Then came HTML5 to the rescue. HTML5 brought powerful features that allow native access to device hardware, from Geolocation (GPS) to WebGL (GPU) and much more. These features, which are now baked into the browser, expose high-level JavaScript APIs that sit on top of the device’s hardware capabilities.

So, the reason to use it becomes obvious.

One of the most important methods in this API is getUserMedia(), the gateway into that set of APIs. getUserMedia() provides the means to access the user’s local camera/microphone stream.

Feature detection is the best way to check for its support, either directly with if (navigator.getUserMedia) or using Modernizr with if (Modernizr.getusermedia).
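Feature detection can also tell the newer promise-based navigator.mediaDevices.getUserMedia() apart from the older callback form used in this article and its vendor-prefixed variants (webkitGetUserMedia, mozGetUserMedia). Here is a minimal sketch; the function name detectGetUserMedia and its return values are hypothetical, and it takes the navigator object as a parameter so it can be exercised outside a browser.

```javascript
// Hypothetical helper: report which getUserMedia flavour a navigator-like object offers.
// Returns "mediaDevices", "standard-legacy", "prefixed" or null.
function detectGetUserMedia(nav) {
  if (!nav) return null;
  // Modern, promise-based API.
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === "function") {
    return "mediaDevices";
  }
  // Older callback-based API, unprefixed.
  if (typeof nav.getUserMedia === "function") return "standard-legacy";
  // Older callback-based API behind a vendor prefix.
  if (typeof nav.webkitGetUserMedia === "function" ||
      typeof nav.mozGetUserMedia === "function") {
    return "prefixed";
  }
  return null;
}

if (typeof navigator !== "undefined") {
  console.log("getUserMedia support: " + detectGetUserMedia(navigator));
}
```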

The basic syntax is:

navigator.getUserMedia(constraints, successCallback, errorCallback);

In this callback-based form, getUserMedia() does not return the stream; it is delivered to successCallback instead. The constraints parameter is a MediaStreamConstraints object with two Boolean members: video and audio. These describe the media types that the LocalMediaStream object should contain. Either or both must be specified for the constraints argument to be valid. The LocalMediaStream object is the MediaStream object that getUserMedia() passes to the success callback. It has all the properties and events of the MediaStream object, plus the stop method.

Setting the constraints for both audio and video would look like the following: { video: true, audio: true }
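The rule that at least one of video and audio must be requested can be checked before calling getUserMedia. The sketch below is hypothetical (buildConstraints is not part of any API); current browsers enforce the same rule themselves by rejecting the request with a TypeError when neither is requested. It also shows that, in the current API, video may be an object of finer-grained constraints instead of a plain Boolean.

```javascript
// Hypothetical helper: build a MediaStreamConstraints-style object and apply the
// "at least one of video or audio" rule before the browser is asked.
function buildConstraints(options = {}) {
  const video = options.video === undefined ? false : options.video;
  const audio = options.audio === undefined ? false : options.audio;
  if (!video && !audio) {
    throw new TypeError("Request at least one of video or audio");
  }
  return { video: video, audio: audio };
}

// Both media types, as in the article.
const both = buildConstraints({ video: true, audio: true });

// Video only, with a preferred resolution (an object instead of a Boolean).
const hdVideo = buildConstraints({ video: { width: { ideal: 1280 }, height: { ideal: 720 } } });

console.log(JSON.stringify(both)); // {"video":true,"audio":true}
console.log(JSON.stringify(hdVideo));
```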

The successCallback function is called on success with the LocalMediaStream object that contains the media stream. You can assign that object to the appropriate element and work with it, as the basic example below shows.
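Code written against the early draft API assigned the stream to a video element with window.URL.createObjectURL(). Browsers have been removing support for passing a MediaStream to createObjectURL and instead expect the stream to be assigned to the element's srcObject property. Here is a hedged sketch that prefers srcObject and only falls back to the old approach when srcObject is missing; attachStream is a hypothetical name, and the element and URL objects are passed in so the logic can run outside a browser.

```javascript
// Hypothetical helper: attach a MediaStream to a <video>-like element.
// Prefers the modern srcObject property; falls back to an object URL only for
// very old browsers that lack srcObject.
function attachStream(videoElement, stream, urlApi) {
  if ("srcObject" in videoElement) {
    videoElement.srcObject = stream;
    return "srcObject";
  }
  videoElement.src = urlApi.createObjectURL(stream);
  return "objectURL";
}

// Browser usage (skipped outside a browser); assumes a <video autoplay> element.
if (typeof document !== "undefined" && typeof navigator !== "undefined" &&
    navigator.mediaDevices) {
  navigator.mediaDevices.getUserMedia({ video: true, audio: false })
    .then(function (stream) {
      const video = document.querySelector("video");
      if (video) attachStream(video, stream, window.URL);
    })
    .catch(function (err) { console.log(err.name + ": " + err.message); });
}
```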

The errorCallback is invoked when an error arises; it is called with one of the following error codes: “permission_denied”, “not_supported_error” or “mandatory_unsatisfied_error”.
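Those string codes come from an early draft of the specification. In current browsers the error is a DOMException, and its name property identifies the problem: for example NotAllowedError when permission is denied, NotFoundError when no matching device exists, NotReadableError when the hardware cannot be opened, and OverconstrainedError when the constraints cannot be met. The sketch below maps both the draft codes and these names to user-facing messages; describeMediaError and the message texts are hypothetical.

```javascript
// Hypothetical lookup table: draft string codes and current DOMException names
// mapped to user-facing messages.
const MEDIA_ERROR_MESSAGES = {
  NotAllowedError: "Camera/microphone access was denied.",
  permission_denied: "Camera/microphone access was denied.",
  NotFoundError: "No camera or microphone matching the request was found.",
  NotReadableError: "The device is in use or could not be started.",
  OverconstrainedError: "No device satisfies the requested constraints.",
  mandatory_unsatisfied_error: "No device satisfies the requested constraints.",
  not_supported_error: "This browser does not support the requested media.",
};

// Accepts either a DOMException-like object ({ name }) or a draft string code.
function describeMediaError(err) {
  const key = typeof err === "string" ? err : (err && err.name);
  return Object.prototype.hasOwnProperty.call(MEDIA_ERROR_MESSAGES, key)
    ? MEDIA_ERROR_MESSAGES[key]
    : "Unexpected media error: " + key;
}
```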

A basic example would be:

<!DOCTYPE html>

<a href="Default.html">Default.html</a>

<head>

<meta charset="utf-8"/>

<title></title>

<script type="text/javascript">

if (navigator.getUserMedia) {

navigator.getUserMedia(

// constraints

{

video: true,

audio: true

},

// successCallback

function (localMediaStream) {

var video = document.querySelector('video');

video.src = window.URL.createObjectURL(localMediaStream);

// do whatever you want with the video

video.play();

},

// errorCallback

function (err) {

console.log("The following error occured: " + err);

});

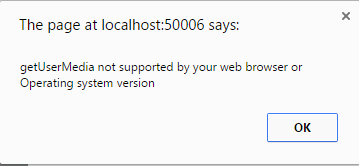

} else {

alert("getUserMedia not supported by your web browser or Operating system version");

}

</script>

</head>

<body>

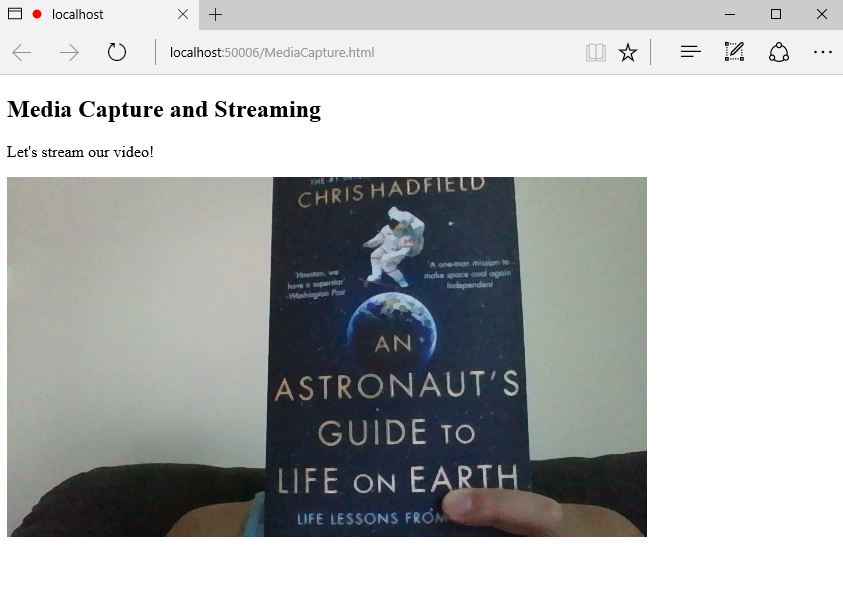

<h2>Media Capture and Streaming</h2>

<p>Let's stream our video!</p>

<video autoplay></video>

</body>

</html>


From here on, you can get creative. Using getUserMedia, you can record, play, save and load streaming media. Then, if you want, you can apply some visualizations, effects and filters to stream data.
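As one example of a filter on stream data, a video frame can be copied into a <canvas> with drawImage(), read back with getImageData(), modified pixel by pixel and written back with putImageData(). The sketch below shows only the pixel step: a grayscale conversion over an RGBA byte array using the common Rec. 601 luma weights (0.299, 0.587, 0.114). The function name toGrayscale is hypothetical, and the browser wiring is left in comments because it needs a real <video> and <canvas>.

```javascript
// Hypothetical helper: convert RGBA pixel data (as found in ImageData.data) to
// grayscale in place. Alpha (every 4th byte) is left unchanged.
function toGrayscale(data) {
  for (let i = 0; i < data.length; i += 4) {
    const luma = 0.299 * data[i] + 0.587 * data[i + 1] + 0.114 * data[i + 2];
    const value = Math.round(luma);
    data[i] = value;     // red
    data[i + 1] = value; // green
    data[i + 2] = value; // blue
  }
  return data;
}

// Browser wiring (assumes a playing <video> and a same-sized <canvas>):
// const ctx = canvas.getContext("2d");
// function renderFrame() {
//   ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
//   const frame = ctx.getImageData(0, 0, canvas.width, canvas.height);
//   toGrayscale(frame.data);
//   ctx.putImageData(frame, 0, 0);
//   requestAnimationFrame(renderFrame);
// }
// requestAnimationFrame(renderFrame);
```

Working on the byte array directly keeps the filter independent of where the frame came from; a WebGL shader would do the same per-pixel math on the GPU for larger frames.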

In terms of browser compatibility, the getUserMedia API is supported on the major modern browsers: Microsoft Edge, Chrome 21+, Opera 18+ and Firefox 17+. Surprisingly, Chrome did not recognize the standard getUserMedia function. Below is a screenshot from Chrome when I ran the site there.

To resolve this, I had to add the webkit vendor prefix. I ended up adding all the vendor prefixes to be on the safe side.


Here is the appended script, placed just before the if (navigator.getUserMedia) check:

navigator.getUserMedia = (navigator.getUserMedia ||
                          navigator.webkitGetUserMedia ||
                          navigator.mozGetUserMedia);
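Since this article was written, the standard has moved to the promise-based navigator.mediaDevices.getUserMedia(), and the callback form of navigator.getUserMedia is deprecated. A common pattern is to prefer the promise-based method and wrap the older, possibly prefixed, callback API in a Promise only as a fallback. The sketch below follows that pattern; getUserMediaCompat is a hypothetical name, and the navigator object is passed in so the logic can be tested outside a browser.

```javascript
// Hypothetical helper: promise-based getUserMedia with a fallback to the older
// callback API (unprefixed or vendor-prefixed).
function getUserMediaCompat(nav, constraints) {
  // Preferred: the standard promise-based API.
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === "function") {
    return nav.mediaDevices.getUserMedia(constraints);
  }
  // Fallback: older callback-based API.
  const legacy = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  if (typeof legacy !== "function") {
    return Promise.reject(new Error("getUserMedia is not supported in this browser"));
  }
  return new Promise(function (resolve, reject) {
    // The legacy functions must be called with navigator as `this`.
    legacy.call(nav, constraints, resolve, reject);
  });
}

// Browser usage:
// getUserMediaCompat(navigator, { video: true, audio: true })
//   .then(function (stream) { document.querySelector("video").srcObject = stream; })
//   .catch(function (err) { console.log("The following error occurred: " + err); });
```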

More Hands-on with Web Development

This article is part of the web development series from Microsoft evangelists and engineers on practical JavaScript learning, open source projects, and interoperability best practices, including the Microsoft Edge browser and the new EdgeHTML rendering engine. We encourage you to test across browsers and devices, including Microsoft Edge, the default browser for Windows 10, with free tools on dev.microsoftedge.com:

- Scan your site for out-of-date libraries, layout issues, and accessibility

- Download free virtual machines for Mac, Linux, and Windows

- Check Web Platform status across browsers including the Microsoft Edge roadmap

- Remotely test for Microsoft Edge on your own device

- Interoperability best practices (series):

- How to avoid Browser Detection

- Using CSS Prefix best practices

- Keeping your JS frameworks & libs updated

- Building plug-in free web experiences

- Coding Lab on GitHub: Cross-browser testing and best practices

- Woah, I can test Edge & IE on a Mac & Linux! (from Rey Bango)

- Advancing JavaScript without Breaking the Web (from Christian Heilmann)

- Unleash 3D rendering with WebGL (from David Catuhe)

- Hosted web apps and web platform innovations (from Kiril Seksenov)

- vorlon.JS (cross-device remote JavaScript testing)

- manifoldJS (deploy cross-platform hosted web apps)

- babylonJS (3D graphics made easy)

- Visual Studio Code (lightweight code-editor for Mac, Linux, or Windows)

- Visual Studio Dev Essentials (free, subscription-based training and cloud benefits)

- Code with node.JS with trial on Azure Cloud

Frequently Asked Questions about Media Capture and Streams

What is the Media Capture and Streams API?

The Media Capture and Streams API, also known as the MediaStream API, is a web technology that allows web applications to access a user’s camera and microphone. This API is used to capture audio, video, and other types of data in real-time. It is a powerful tool for developers who want to create interactive web applications that require real-time media capture, such as video chat applications, audio recording tools, and more.

How does the Media Capture and Streams API work?

The Media Capture and Streams API works by providing a set of JavaScript interfaces that allow web applications to access and manipulate media streams. The API provides methods for capturing media from different sources, such as the user’s camera or microphone, and for manipulating these streams, for example, by adding effects or combining multiple streams.

What is the ‘capture’ attribute in HTML?

The ‘capture’ attribute in HTML is used with the input element to specify that the user’s camera or microphone should be used for capturing media. This attribute can be used to create a more streamlined user experience by directly opening the camera or microphone interface when the user interacts with the input element.

How can I use the Media Capture and Streams API in my web application?

To use the Media Capture and Streams API in your web application, you first need to request access to the user’s camera or microphone using the navigator.mediaDevices.getUserMedia() method. This method returns a Promise that resolves to a MediaStream object, which can then be used to capture media.

What are the potential security issues with the Media Capture and Streams API?

The Media Capture and Streams API has potential security implications as it allows web applications to access sensitive hardware such as the user’s camera and microphone. To mitigate these risks, browsers require that the API is used in a secure context, typically a page served over HTTPS. Additionally, user permission is required before access to the camera or microphone is granted.

Can I use the Media Capture and Streams API on all browsers?

The Media Capture and Streams API is widely supported across modern browsers, including Chrome, Firefox, Safari, and Edge. However, it may not be available on older browsers or some mobile browsers. It’s always a good idea to check the specific browser compatibility before implementing this API in your web application.

How can I handle errors when using the Media Capture and Streams API?

When using the Media Capture and Streams API, errors can be handled using the Promise rejection mechanism. If an error occurs while trying to access the user’s camera or microphone, the Promise returned by navigator.mediaDevices.getUserMedia() is rejected, typically with a DOMException whose name (for example NotAllowedError or NotFoundError) identifies the cause.

Can I capture media from other sources besides the camera and microphone?

Yes, the Media Capture and Streams API also allows you to capture media from other sources, such as screen capture. This can be done using the navigator.mediaDevices.getDisplayMedia() method, which works similarly to getUserMedia() but captures the contents of the user’s screen instead of the camera or microphone.
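Here is a hedged sketch of screen capture with getDisplayMedia(). It checks that the method exists, requests video only (capturing screen audio is not supported everywhere), and listens for the video track's ended event, which fires when the user stops sharing through the browser's own controls. startScreenCapture and its onEnded callback are hypothetical names; mediaDevices is passed in so the logic can be tested with a stand-in object.

```javascript
// Hypothetical helper: start a screen capture and report when the user stops it.
function startScreenCapture(mediaDevices, onEnded) {
  if (!mediaDevices || typeof mediaDevices.getDisplayMedia !== "function") {
    return Promise.reject(new Error("Screen capture is not supported in this browser"));
  }
  return mediaDevices.getDisplayMedia({ video: true, audio: false }).then(function (stream) {
    const [videoTrack] = stream.getVideoTracks();
    if (videoTrack) {
      // "ended" fires when sharing stops from the browser UI (not on track.stop()).
      videoTrack.addEventListener("ended", onEnded);
    }
    return stream;
  });
}

// Browser usage (getDisplayMedia normally has to be called from a user gesture):
// button.addEventListener("click", function () {
//   startScreenCapture(navigator.mediaDevices, function () { console.log("Sharing stopped"); })
//     .then(function (stream) { video.srcObject = stream; })
//     .catch(function (err) { console.log(err.name + ": " + err.message); });
// });
```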

Can I manipulate the captured media streams?

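Yes, the Media Capture and Streams API provides several ways to manipulate media streams. For example, the MediaStreamTrack interface lets you inspect a track with getSettings() and getCapabilities(), adjust characteristics such as resolution or frame rate with applyConstraints(), and mute or release a track through its enabled property and stop() method. Some browsers also accept camera constraints such as brightness and contrast.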
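Two of the simplest track operations are muting a track by setting its enabled property to false, which keeps the device open but outputs silence or black frames, and releasing the device with stop(). In current browsers, stopping the individual tracks takes the place of the stop() method that the draft LocalMediaStream object had. A hedged sketch follows; the helper names are hypothetical, and the stream only needs getTracks(), so a stand-in object works for testing.

```javascript
// Hypothetical helpers for working with the tracks of a MediaStream.

// Mute or unmute every track of one kind ("audio" or "video") without releasing it.
// Returns how many tracks were changed.
function setTracksEnabled(stream, kind, enabled) {
  const tracks = stream.getTracks().filter(function (t) { return t.kind === kind; });
  tracks.forEach(function (t) { t.enabled = enabled; });
  return tracks.length;
}

// Release the camera/microphone by stopping every track.
function stopAllTracks(stream) {
  stream.getTracks().forEach(function (t) { t.stop(); });
}

// Browser usage, once a stream has been obtained:
// setTracksEnabled(stream, "audio", false); // mute the microphone
// stopAllTracks(stream);                    // release the camera and microphone
```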

What are some practical applications of the Media Capture and Streams API?

The Media Capture and Streams API has a wide range of practical applications. It can be used to create video chat applications, live streaming platforms, audio recording tools, interactive games, and more. The possibilities are virtually endless, making this API a powerful tool for web developers.

Rami Sarieddine

Rami Sarieddine is a Technical Evangelist at Microsoft. He is also a published author on web technologies; his latest title is on JavaScript Promises. He is a regular speaker and trainer at Microsoft developer events, and was awarded Microsoft Most Valuable Professional (MVP) status on IIS/NET and C#. Prior to Microsoft, Rami worked as a Software Engineer & Analyst across several industries and had been developing for the web for a good seven years. When not working, you will catch him running marathons somewhere.