Introduction to Kubernetes: How to Deploy a Node.js Docker App

Key Takeaways

- Kubernetes, an open-source system for automating deployment, scaling, and management of containerized applications, provides powerful abstractions that decouple application operations from underlying infrastructure operations.

- Kubernetes operates on a client/server architecture, with the server running on the cluster that deploys the application. The basic unit that Kubernetes deals with is a pod, which is a group of containers.

- Deploying a Node.js application on Google Container Engine (GKE, since renamed Google Kubernetes Engine) using Kubernetes involves creating a Docker image of the application, creating a cluster with instances, uploading the Docker image to Google Container Registry, creating a deployment spec file, and exposing the service to the internet.

- Scaling a service in Kubernetes involves editing the deployment.yml file and changing the number of replicas. It is also possible to set up autoscaling, although this is beyond the scope of the tutorial.

While container technology has existed for years, Docker really took it mainstream. A lot of companies and developers now use containers to ship their apps. Docker provides an easy-to-use interface for working with containers.

However, for any non-trivial application, you will not be deploying “one container”, but rather a group of containers on multiple hosts. In this article, we’ll take a look at Kubernetes, an open-source system for automating deployment, scaling, and management of containerized applications.

Prerequisites: This article assumes some familiarity with Docker. If you need a refresher, check out Understanding Docker, Containers and Safer Software Delivery.

What Problem Does Kubernetes Solve?

With Docker, you have simple commands like docker run and docker stop to start and stop a container. These commands operate on a single container, however, and there is no docker deploy command to push new images to a group of hosts.

Many tools have appeared in recent times to solve this problem of “container orchestration”; popular ones being Mesos, Docker Swarm (now part of the Docker engine), Nomad, and Kubernetes. All of them come with their pros and cons but, arguably, Kubernetes has the most mileage at this point.

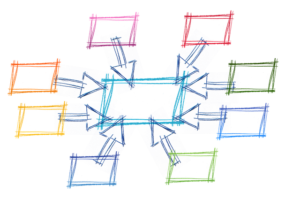

Kubernetes (also referred to as ‘k8s’) provides powerful abstractions that completely decouple application operations such as deployments and scaling from underlying infrastructure operations. So, with Kubernetes, you do not work with individual hosts or virtual machines on which to run your code. Instead, Kubernetes sees the underlying infrastructure as a sea of compute on which to put containers.

Kubernetes Concepts

Kubernetes has a client/server architecture. The Kubernetes server runs on your cluster (a group of hosts) on which you will deploy your application. You typically interact with the cluster using a client, such as the kubectl CLI.

Pods

A pod is the basic unit that Kubernetes deals with: a group of one or more containers. If there are two or more containers that always need to work together, and should be on the same machine, make them a pod. A pod is a useful abstraction, and there was even a proposal to make pods a first-class Docker object.
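
To make the idea of a multi-container pod a little more concrete, here is a minimal sketch that writes a pod manifest with two containers to a file. Everything in it is an assumption made for illustration: the file name two-container-pod.yml, the pod name web-with-sidecar, and the nginx and busybox images are not part of this tutorial's application.

```shell
# Write a hypothetical two-container pod manifest.
# All names and images below are illustrative, not taken from the tutorial app.
cat > two-container-pod.yml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar
  labels:
    app: web
spec:
  containers:
    - name: web        # main container
      image: nginx
      ports:
        - containerPort: 80
    - name: sidecar    # helper container, always scheduled on the same node
      image: busybox
      command: ["sh", "-c", "while true; do sleep 3600; done"]
EOF
```

Both containers are scheduled together and share the pod's network, which is the point of grouping them. You could submit the file with kubectl create -f two-container-pod.yml once you have a cluster.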

Node

A node is a physical or virtual machine, running Kubernetes, onto which pods can be scheduled.

Label

A label is a key/value pair that is used to identify a resource. You could label all your pods serving production traffic with “role=production”, for example.

Selector

Selectors let you search/filter resources by labels. Following on from the previous example, to get all production pods your selector would be “role=production”.
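
As a rough sketch of how labels and selectors fit together on the command line, the helpers below wrap kubectl label and kubectl get pods --selector. The function names are hypothetical, and the role=production label is simply the example used above. Nothing runs until you call the functions against a real cluster.

```shell
# Hypothetical helpers; the function names are illustrative.

# Attach the label role=production to the pod whose name is passed as $1.
label_as_production() {
  kubectl label pods "$1" role=production
}

# List only the pods whose labels match the selector role=production.
production_pods() {
  kubectl get pods --selector role=production
}
```

For example, label_as_production my-pod followed by production_pods should list my-pod, assuming a pod with that name exists.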

Service

A service defines a set of pods (typically selected by a “selector”) and a means by which to access them, such as a single stable IP address and corresponding DNS name.

Deploy a Node.js App on GKE using Kubernetes

Now that we are aware of the basic Kubernetes concepts, let’s see it in action by deploying a Node.js application on Google Container Engine (referred to as GKE, and since renamed Google Kubernetes Engine). You’ll need a Google Cloud Platform account for this (Google provides a free trial with $300 credit).

1. Install Google Cloud SDK and Kubernetes Client

kubectl is the command line interface for running commands against Kubernetes clusters. You can install it as a part of Google Cloud SDK. After Google Cloud SDK installs, run the following command to install kubectl:

$ gcloud components install kubectl

Alternatively, run brew install kubectl if you are on a Mac. To verify the installation, run kubectl version.

You’ll also need to set up the Google Cloud SDK with credentials for your Google Cloud account. Just run gcloud init and follow the instructions.

2. Create a GCP project

All Google Cloud Platform resources are created under a project, so create one from the web UI.

Set the default project ID while working with CLI by running:

$ gcloud config set project {PROJECT_ID}

3. Create a Docker Image of your application

Here is the application that we’ll be working with: express-hello-world. You can see in the Dockerfile that we are using an existing Node.js image from Docker Hub. Now, we’ll build our application image by running:

$ docker build -t hello-world-image .

Run the app locally by running:

$ docker run --name hello-world -p 3000:3000 hello-world-image

If you visit localhost:3000 you should get the response.
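
The repository's Dockerfile is not reproduced in this article. Purely as an illustration of what a Dockerfile for a small Express app often looks like, here is a sketch that writes one to Dockerfile.example, so that it can't overwrite the real file. The base image tag, the /app working directory, and the assumption that package.json defines a start script are all guesses, not the contents of express-hello-world.

```shell
# Write a hypothetical Dockerfile for a small Express app.
# Assumptions: package.json has a "start" script and the app listens on port 3000.
cat > Dockerfile.example <<'EOF'
# Start from an existing Node.js image on Docker Hub
FROM node:lts-alpine
WORKDIR /app
# Install dependencies first so this layer is cached between builds
COPY package*.json ./
RUN npm install
# Copy the application source
COPY . .
EXPOSE 3000
CMD ["npm", "start"]
EOF
```

If you want to try it, docker build -f Dockerfile.example -t hello-world-image . builds an image from this file instead of the default Dockerfile.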

4. Create a cluster

Now we’ll create a cluster with three instances (virtual machines), on which we’ll deploy our application. You can do it from the fairly intuitive web UI by going to the Container Engine page, or by running this command:

$ gcloud container clusters create {NAME} --zone {ZONE}

Let’s create a cluster called hello-world-cluster in us-east1-b by running:

$ gcloud container clusters create hello-world-cluster --zone us-east1-b --machine-type f1-micro

This starts a cluster with three nodes. We are using f1-micro as machine type because it is the smallest available, to ensure minimal costs.

Connect your kubectl client to your cluster by running:

$ gcloud container clusters get-credentials hello-world-cluster --zone us-east1-b
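
Once the credentials are in place, one way to confirm that kubectl is talking to the new cluster is to count its nodes. The helper below is a small sketch, and the function name node_count is made up for this example.

```shell
# Hypothetical helper: print how many nodes the current cluster reports.
node_count() {
  # --no-headers drops the column header so that every line is one node.
  kubectl get nodes --no-headers | wc -l | tr -d ' '
}
```

With the cluster created above, node_count should print 3.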

So, now we have a Docker image and a cluster. We want to deploy that image to our cluster and start the containers, which will serve the requests.

5. Upload Docker Image to Google Container Registry

Google Container Registry is a cloud registry where you can push your images, and these images automatically become available to your Container Engine cluster. To push an image, you have to build it with a proper name.

To build the container image of this application and tag it for uploading, run the following command:

$ docker build -t gcr.io/{PROJECT_ID}/hello-world-image:v1 .

v1 is the tag of the image.

The next step is to upload the image we just built:

$ gcloud docker -- push gcr.io/{PROJECT_ID}/hello-world-image:v1
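
Since the same image name appears in both commands, it can help to build it once in a variable. The following is a sketch that reuses the two commands above. The variable names, the build_and_push function, and the my-project-id fallback are assumptions, so substitute your own project ID.

```shell
# PROJECT_ID falls back to a placeholder; replace it with your own project ID.
PROJECT_ID="${PROJECT_ID:-my-project-id}"
IMAGE_NAME="hello-world-image"
IMAGE_TAG="v1"

# Registry path in the form gcr.io/{PROJECT_ID}/{name}:{tag}
IMAGE="gcr.io/${PROJECT_ID}/${IMAGE_NAME}:${IMAGE_TAG}"
echo "$IMAGE"

# Hypothetical helper: build the image, and push it only if the build succeeds.
build_and_push() {
  docker build -t "$IMAGE" . &&
    gcloud docker -- push "$IMAGE"
}
```

Calling build_and_push from the application directory runs both steps with a consistent name.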

6. First Deployment

Now we have a cluster and an image in the cloud. Let’s deploy that image on our cluster with Kubernetes. We’ll do that by creating a deployment spec file. Deployments are a Kubernetes resource, and all Kubernetes resources can be declaratively defined by a spec file. This spec file dictates the desired state of that resource, and Kubernetes figures out how to go from the current state to the desired state.

Let’s create one for our first deployment:

deployment.yml

apiVersion: apps/v1 # apps/v1beta1 was removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: hello-world-deployment
spec:
  replicas: 2
  selector: # required in apps/v1; must match the pod template labels
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels: # labels to select/identify the pods
        app: hello-world
    spec: # pod spec
      containers:
        - name: hello-world
          image: gcr.io/{PROJECT_ID}/hello-world-image:v1 # image we pushed
          ports:
            - containerPort: 3000

This spec file says: start two pods where each pod is defined by the given pod spec. Each pod should have one container containing the hello-world-image:v1 we pushed.

Now, run:

$ kubectl create -f deployment.yml --save-config

You can see your deployment status by running kubectl get deployments. To view the pods created by the deployment, run kubectl get pods. You should see the running pods:

$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
hello-world-deployment-629197995-ndmrf   1/1     Running   0          27s
hello-world-deployment-629197995-tlx41   1/1     Running   0          27s

Note that we have two pods running because we set the replicas to 2 in the deployment.yml file.

To make sure that the server started, check logs by running:

$ kubectl logs {pod-name} # kubectl logs hello-world-deployment-629197995-ndmrf
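
If you would rather not copy pod names by hand, the sketch below waits for the deployment to finish rolling out and then fetches logs by label instead of by pod name. The function names are hypothetical. The deployment name and the app=hello-world label come from deployment.yml above.

```shell
# Hypothetical helpers built on the names used in deployment.yml.

# Block until the deployment's pods are rolled out, or report why they are not.
wait_for_rollout() {
  kubectl rollout status deployment/hello-world-deployment
}

# Fetch logs from the pods carrying the label app=hello-world.
app_logs() {
  kubectl logs --selector app=hello-world
}
```

Running wait_for_rollout && app_logs gives a quick check that the server started in each pod.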

7. Expose the Service to the Internet

To expose the service to the Internet, you have to put your VMs behind a load balancer. To do that, we create a Kubernetes Service:

$ kubectl expose deployment hello-world-deployment --type="LoadBalancer"

Behind the scenes, it creates a service object (a service is a Kubernetes resource, like a Deployment) and also creates a Google Cloud load balancer.
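
For comparison, a service can also be defined declaratively, like the deployment. The manifest below is a sketch of what a roughly equivalent Service might look like, not the exact object that kubectl expose generates. The file name service.yml is an assumption, and the selector and port are taken from deployment.yml.

```shell
# Write a Service manifest that is roughly equivalent to the expose command.
# Assumption: pods carry the label app=hello-world and listen on port 3000.
cat > service.yml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hello-world-deployment
spec:
  type: LoadBalancer      # ask the cloud provider for an external load balancer
  selector:
    app: hello-world      # route traffic to pods with this label
  ports:
    - port: 3000          # port exposed by the service
      targetPort: 3000    # port the container listens on
EOF
```

You would use kubectl apply -f service.yml instead of kubectl expose, not in addition to it, since both create a service with the same name.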

Run kubectl get services to see the public IP of your service. The console output should look like this:

NAME                     CLUSTER-IP       EXTERNAL-IP      PORT(S)          AGE
hello-world-deployment   10.103.254.137   35.185.127.224   3000:30877/TCP   9m
kubernetes               10.103.240.1     <none>           443/TCP          17d

Visit http://<EXTERNAL-IP>:<PORT> to access the service. You can also buy a custom domain name and make it point to this IP.
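
The external IP can also be read programmatically instead of from the table. Here is a sketch using kubectl's jsonpath output. The function names are hypothetical, and it assumes the load balancer reports an IP address rather than a hostname and that the service listens on port 3000, as in the output above.

```shell
# Hypothetical helpers for finding the public address of the service.

# Print the external IP assigned to the load balancer (empty while pending).
service_ip() {
  kubectl get service hello-world-deployment \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Build the URL to visit, using port 3000 from the service definition.
service_url() {
  echo "http://$(service_ip):3000"
}
```

For example, curl "$(service_url)" requests the app once the external IP has been assigned.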

8. Scaling Your Service

Let’s say your service starts getting more traffic and you need to spin up more instances of your application. To scale up, edit your deployment.yml file, change the number of replicas to, say, 3, and run kubectl apply -f deployment.yml. Kubernetes will then bring the deployment up to three pods. It’s also possible to set up autoscaling, but that’s beyond the scope of this tutorial.
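
If you want to script the edit rather than open the file by hand, something along these lines should work. The set_replicas function is a hypothetical helper, and it assumes the file contains a single replicas: line like the one in deployment.yml.

```shell
# Hypothetical helper: set_replicas FILE COUNT
# Rewrites the "replicas:" line of FILE in place, keeping its indentation.
set_replicas() {
  file="$1"
  count="$2"
  # Write to a temporary file first; in-place sed flags differ between platforms.
  sed "s/^\( *replicas:\) *[0-9][0-9]*/\1 ${count}/" "$file" > "${file}.tmp" &&
    mv "${file}.tmp" "$file"
}
```

Usage would be set_replicas deployment.yml 3 followed by kubectl apply -f deployment.yml.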

9. Clean Up

Don’t forget to clean up the resources once you are done, otherwise, they’ll keep on eating away your Google credits!

$ kubectl delete service/hello-world-deployment

$ kubectl delete deployment/hello-world-deployment

$ gcloud container clusters delete hello-world-cluster --zone us-east1-b

Wrapping Up

We’ve covered a lot of ground in this tutorial but, as far as Kubernetes is concerned, this is barely scratching the surface. There’s a lot more you can do, like scaling your services to more pods with one command, or mounting secrets on pods for things like AWS credentials. However, this should be enough to get you started. Head over to kubernetes.io to learn more!

This article was peer reviewed by Graham Cox. Thanks to all of SitePoint’s peer reviewers for making SitePoint content the best it can be!

Frequently Asked Questions (FAQs) about Deploying Node.js Docker App with Kubernetes

What are the prerequisites for deploying a Node.js Docker app with Kubernetes?

Before you start deploying a Node.js Docker app with Kubernetes, you need to have a few things set up. First, you need to have Docker installed on your system. Docker is a platform that allows you to automate the deployment, scaling, and management of applications. Second, you need to have a Kubernetes cluster set up. Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. Lastly, you need to have a Node.js application that you want to deploy. Node.js is a JavaScript runtime built on Chrome’s V8 JavaScript engine.

How do I create a Docker image for my Node.js application?

Creating a Docker image for your Node.js application involves writing a Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build users can create an automated build that executes several command-line instructions in succession.

How do I deploy my Docker image to Kubernetes?

Once you have your Docker image, you can deploy it to Kubernetes using the kubectl command-line interface. You first need to create a deployment configuration. This configuration specifies the Docker image to use, the number of replicas to run, and a host of other options. Once you have your configuration, you can use the kubectl apply command to create the deployment on your Kubernetes cluster.

How can I manage my deployed application?

Kubernetes provides several tools to help you manage your deployed application. You can use the kubectl command-line interface to check the status of your deployment, scale your application, or update your application. Additionally, Kubernetes provides a dashboard that gives you a graphical interface to view the state of your cluster and manage your applications.

How can I scale my application with Kubernetes?

Kubernetes makes it easy to scale your application. You can use the kubectl scale command to increase or decrease the number of replicas of your application. Kubernetes will automatically start new pods or terminate existing ones to match the desired number of replicas.
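
As a minimal sketch of that command applied to the deployment from this tutorial (the scale_to function name is made up for the example):

```shell
# Hypothetical helper: scale the tutorial's deployment to the given replica count.
scale_to() {
  kubectl scale deployment hello-world-deployment --replicas="$1"
}
```

Calling scale_to 3 asks Kubernetes for three pods. Note that a later kubectl apply -f deployment.yml would reset the count to whatever the file says.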

How can I update my application with Kubernetes?

Updating your application with Kubernetes is as simple as changing the Docker image in your deployment configuration and applying the changes with the kubectl apply command. Kubernetes will automatically roll out the changes, ensuring that your application remains available during the update.
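
An imperative alternative to editing the file is kubectl set image, which changes the image of a named container in place. The sketch below assumes the container is called hello-world, as in deployment.yml. The update_image function is hypothetical, and so is any newer tag you pass to it.

```shell
# Hypothetical helper: update_image IMAGE
# Points the hello-world container at a new image, then waits for the rollout.
update_image() {
  kubectl set image deployment/hello-world-deployment "hello-world=$1" &&
    kubectl rollout status deployment/hello-world-deployment
}
```

For instance, update_image gcr.io/{PROJECT_ID}/hello-world-image:v2 would roll out a v2 image, assuming you have built and pushed one.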

How can I monitor my application with Kubernetes?

Kubernetes provides several tools for monitoring your application. You can use the kubectl logs command to view the logs of your application. Additionally, Kubernetes integrates with several monitoring tools like Prometheus and Grafana that provide more detailed metrics and visualizations.

How can I troubleshoot issues with my application?

Kubernetes provides several tools to help you troubleshoot issues with your application. You can use the kubectl describe command to get detailed information about your deployment. Additionally, you can use the kubectl logs command to view the logs of your application.

How can I secure my application with Kubernetes?

Kubernetes provides several features to help secure your application. You can use Kubernetes Secrets to manage sensitive information like passwords and API keys. Additionally, you can use Kubernetes Network Policies to control the network access to your application.
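
As a small illustration of the Secrets feature, the sketch below creates a generic secret from a literal value. The secret name hello-world-secrets, the API_KEY key, and the function name are all assumptions for the example.

```shell
# Hypothetical helper: create_api_secret VALUE
# Stores VALUE under the key API_KEY in a secret named hello-world-secrets.
create_api_secret() {
  kubectl create secret generic hello-world-secrets \
    --from-literal=API_KEY="$1"
}
```

Keep in mind that values passed on the command line can end up in your shell history, so for real credentials you may prefer to load them from a file.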

How can I clean up my deployment?

Cleaning up your deployment involves deleting the Kubernetes objects you created. You can use the kubectl delete command to delete your deployment, service, and any other objects you created.

Jatin is an existing software dev, and aspiring stand up comic. Previously he has worked for Amazon. For a long time, he thought that .js referred to his initials.