In my last article, we talked about ways to really nail down your usability goals before you even think about testing.

Once your goals are clear, you’re ready to hone your test planning to meet those specific goals. There are many tests to choose from, and many types of people to recruit, so narrowing your focus really helps get you closer to the result you’re looking for.

Depending on your hypothesis and what you want to learn, sometimes your usability test might not even require testing the actual product.

Source: Usability Testing

Let’s take a look at the different categories of usability tests, how to find the appropriate testing audience, and how to make it digestible with a simple usability plan.

Types of Test

Deciding which style of test to administer is a pivotal decision in the entire process of usability testing, so don’t take it lightly. On the bright side, the more concrete your usability goals are, the more smoothly the selection process will go.

But no matter what type of test you choose, you should always start with a pilot test. Many people like to gloss over this, but sacrificing a little extra time for a pilot test almost always pays off.

What’s a Pilot Test?

Photo: The Library of Congress

Don’t worry, you won’t need to make friends with aviators.

Pilot testing is a test run of your greater user test. In A Practical Guide to Usability Testing, Joseph S. Dumas and Janice C. Redish call pilot tests a “dress rehearsal for the usability test to follow.”

You will conduct the test and collect the data in the same way you would a real test, but the difference is that you won’t analyze or include this data. You are, quite literally, testing your test.

This may seem like a waste of time, and you will likely be tempted to jump headlong into the actual tests, but pilot tests are highly recommended. In most cases, something will go wrong with your first test. Whether it is a technical problem, human error, or a situational occurrence, it’s rare that a first test session goes well, or even adequately.

The idea is that these tests should be as scientific and precise as possible. If you want the most reliable data, run a pilot test or two until you understand all the variables thoroughly and have ironed out all the kinks.

The Types of Tests

I’ve written a Usability Testing Guide that delves more deeply into the specifics of each type of user testing method, if you’re interested. But for this article, we’ll give you an overview so you know what the landscape looks like.

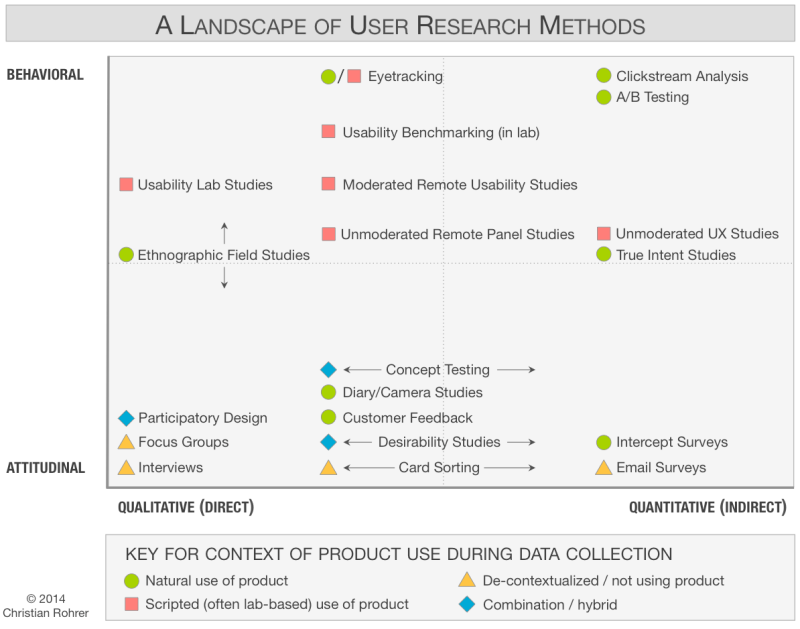

Source: Nielsen Norman Group

Christian Rohrer, McAfee’s Chief Design Officer, explains the distinctions between the types of tests in an article for the Nielsen Norman Group. While Christian uses a complex three-dimensional framework to explain their differences, for simplicity’s sake we’re going to focus on his division based on how the product is used.

- Scripted use of the product: These tests focus on specific usage aspects. The degree of scripting varies, with more scripting generating more controlled data.

- Decontextualized use of the product: Tests that don’t use the product (at least in the actual testing phase) are designed for a broader understanding of topics like UX, or for generating ideas.

- Natural (and near-natural) use of the product: These tests seek to understand common usage behaviors and trends with the product, prioritizing real user data at the cost of control. This tests fundamental assumptions of how a product is being used.

- Hybrid: Hybrid tests are creative and non-traditional tests. Geared towards understanding the users’ mentality, these tests vary in what they can accomplish.

The Types of Tasks

Each type of test is divided into tasks, the execution of which will affect the validity and overall usefulness of the data collected. While each test will have its own properties for the type of tasks, Tingting Zhao, Usability Specialist for Ubuntu, points out some distinctions to keep in mind when designing tasks.

When creating tasks for participants of usability tests, there are two choices you need to make. The first choice is whether to phrase your tasks directly or with a scenario.

- Direct Tasks: A direct task is “purely instructional.” These are instructions such as “Find a turkey recipe on the Food Network,” or “Learn about wiener dogs on the blog.” Direct tasks are generally more technical in nature, and could detract from the user’s experience of the product as a whole.

- Scenario Tasks: Scenario tasks phrase the instructions as a real-life example: “You’re going to a high school reunion this weekend. You want to find a nice outfit on the Macy’s website.” Scenario tasks are more common than direct tasks because they help the user forget that they’re taking a test; however, care should be put into making the scenarios as realistic as possible.

Source: Zezz

The second distinction to make when creating tasks is between closed and open-ended tasks.

- Closed: A closed task is one with clearly defined success or failure. These are used for testing specific factors like success rate or time. For example, in our Yelp redesign exercise, we gave participants the following task: “Your friend is having a birthday this weekend. Find a fun venue that can seat up to 15 people.”

- Open-ended: An open-ended task is one where the user can complete it several ways. These are more subjective and most useful when trying to determine how your user behaves spontaneously, or how they prefer to interact with your product. For example: “You heard your coworkers talking about UXPin.com. You’re interested in learning what it is and how it works.”

Finding the Right Usability Test Audience

With all this talk of data and research, it can be easy to forget that the core component of these tests is the actual people. To think of your participants as merely test subjects is a mistake; they are all individuals with their own personalities and their own way of doing things. Deciding what type of people you want to provide your data is a major factor, even if you ultimately decide you want them to be random.

Your Target Users

Unless you’re designing a ‘Beatles-like’ product, one that almost everyone can enjoy, it’s best to narrow down your target audience to the users most likely to use your product.

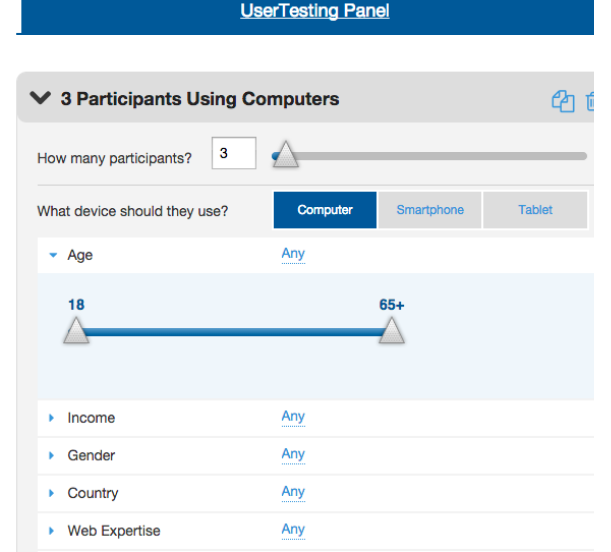

Source: UserTesting Dashboard

Knowing your target audience is not really a topic for usability testing; in theory, this is something you should have already decided in your Product Definition phase.

However, depending on the complexity of your tasks, you may need more than one user group. For example, when conducting user testing for our Yelp redesign, we realized we needed two groups of people: those with Yelp accounts, and those without. Once we knew the overall groups, we then decided that both groups needed to have users who were located in the US, used Yelp at most 1-2x a week, and browsed mostly on their desktops.

When focusing in on your test group, it’s also important not to obsess over demographics. The biggest differentiator will likely be whether users have prior experience or are knowledgeable about their domain or industry, not gender, age, or geography. Once you know whom you’re looking for, it’s time to get out there and find them. If you find you have more than one target group, that’s okay; just remember to test each group independently of the others, which will make your data more telling.
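The Yelp screening criteria described above can be sketched as a small filter. This is only an illustration: the `Candidate` fields, the sample people, and the reading of “at most 1-2x a week” as “no more than twice a week” are assumptions made for the example, not part of the original study.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical screener answers; field names are illustrative.
    name: str
    country: str
    has_yelp_account: bool
    yelp_visits_per_week: int
    primary_device: str  # "desktop", "mobile", or "tablet"


def passes_screener(c: Candidate) -> bool:
    """Shared criteria: US-based, uses Yelp at most twice a week
    (assumed reading of "1-2x"), and browses mostly on desktop."""
    return (
        c.country == "US"
        and c.yelp_visits_per_week <= 2
        and c.primary_device == "desktop"
    )


def split_into_groups(candidates):
    """Return (account holders, non-account holders) among qualifying candidates."""
    qualified = [c for c in candidates if passes_screener(c)]
    with_account = [c for c in qualified if c.has_yelp_account]
    without_account = [c for c in qualified if not c.has_yelp_account]
    return with_account, without_account


# Made-up candidates, purely for demonstration.
candidates = [
    Candidate("Ana", "US", True, 1, "desktop"),
    Candidate("Ben", "US", False, 0, "desktop"),
    Candidate("Cy", "US", True, 5, "desktop"),   # uses Yelp too often
    Candidate("Di", "CA", False, 1, "desktop"),  # outside the US
]
with_account, without_account = split_into_groups(candidates)
print([c.name for c in with_account])     # ['Ana']
print([c.name for c in without_account])  # ['Ben']
```

Keeping the two groups in separate lists makes it easier to run and analyze each one independently.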

Recruiting Users

Knowing who you want for the test is only half the battle; you still need to get them to come (or agree to let you come to them). Luckily, Jeff Sauro, founder of Measuring Usability LLC, lists seven of the most effective methods and usability tools for recruiting people for usability tests. Below, we’ll briefly describe each method (we’re big fans of UserTesting and hallway testing).

- Existing Users: By definition, these are your target users. Try self-promoting on your website, or work with your customer service department to locate interested users. Even if you’re researching a new product, if your company has produced similar products in the past, there’s a chance both target the same type of person.

- UserTesting (http://www.usertesting.com/): A website designed specifically for this, UserTesting lets you select users by age, gender, location, and even more customizable options. The site delivers audio and video of users actually testing your site or app.

- Mechanical Turk (https://www.mturk.com/mturk/welcome): Amazon’s crowdsourcing network is the cheaper version of UserTesting, but keep in mind that you get what you pay for. The upside, of course, is that if your testing is simple, you can recruit a ton of people for a very low cost.

- Craigslist (http://www.craigslist.org/): While somewhat random, Craigslist has long been a reliable option for getting people together. Keep in mind that if you’re looking for high-income users or users with highly specialized skills, you likely won’t reach them here.

- Panel Agencies: If you’re looking for numbers for an unmoderated test, a panel agency might be the way to go. With vast databases organized by demographics, you can reach your targets for between $15 and $55 per response. Try Op4G, Toluna, or Research Now.

- Market Research Recruiter: This is the option if you’re looking for professionals with a very specific set of skills, like hardware engineers, CFOs, etc. However, these can also be expensive, costing hundreds per participant. If you’re still interested, try Plaza Research (don’t let the outdated site fool you).

- Hallway Testing: “Hallway” testing is a term that means random, as in whoever is walking down the hallway at the moment you’re conducting the test. These may be co-workers, friends, family, or people on the street. While these test subjects may be the easiest to recruit, remember that the farther you get from your target audience, the less helpful the data. DigitalGov provides a live example and a list of tips.

Like all other factors, how you choose to find your participants will depend on your specific needs. Keep in mind who you’re looking for and why, but don’t neglect how many. Qualitative tests can be run with as few as 5 people, while quantitative tests require at least 20 people for statistical significance. For a full list of user recruiting tips, check out Jakob Nielsen’s list of 234 tips and tricks for recruiting people for usability tests.
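To get a rough feel for what those participant counts mean for a budget, here is a back-of-the-envelope sketch. It assumes you recruit through a panel agency at the $15 to $55 per response quoted above; the function name is hypothetical, and other recruiting methods will be priced differently.

```python
QUALITATIVE_MIN = 5    # "as few as 5 people"
QUANTITATIVE_MIN = 20  # "at least 20 people"


def panel_cost_range(participants, low_per_response=15, high_per_response=55):
    """Return the (minimum, maximum) panel cost in dollars."""
    if participants < 1:
        raise ValueError("participants must be at least 1")
    return participants * low_per_response, participants * high_per_response


for label, n in [("qualitative", QUALITATIVE_MIN), ("quantitative", QUANTITATIVE_MIN)]:
    low, high = panel_cost_range(n)
    print(f"{label}: {n} participants, ${low} to ${high}")
# qualitative: 5 participants, $75 to $275
# quantitative: 20 participants, $300 to $1100
```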

If you’re conducting later-stage beta testing, you can recruit beta testers from within your existing user base, as long as it’s large enough. If, however, you need to recruit them elsewhere, Udemy explains the best ways to find them.

Usability Test Plan

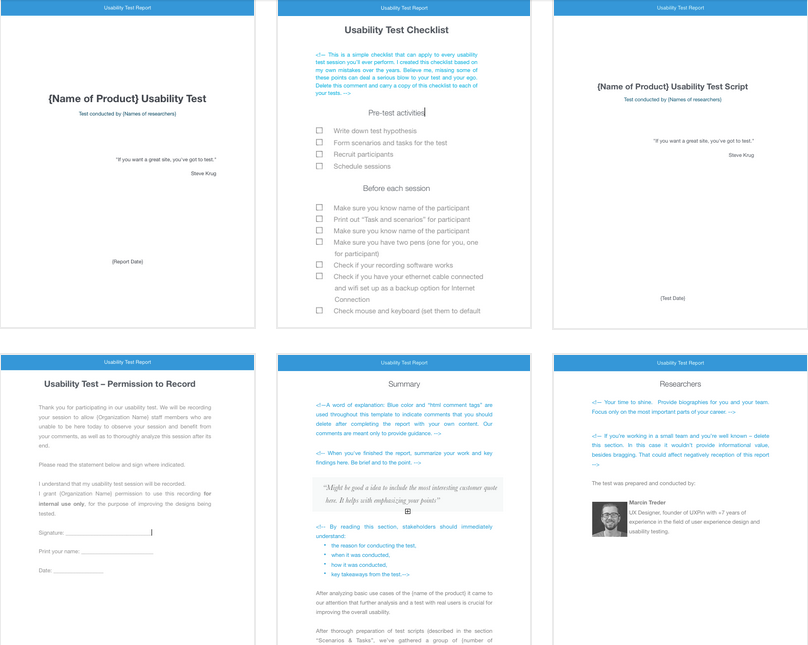

You’re almost ready to dive into your testing, but before you do, there’s just one last thing: a one-page usability checklist. This succinct outline will tell stakeholders everything they need to know about the test, but without boring them with all the details.

Source: Usability Testing Kit

Tomer Sharon, Author and UX Researcher at Google Search, provides a simple outline for your synopsis:

- Title: What you’re studying and the type of test.

- Author and Stakeholders: Everyone involved in conducting the test.

- Date: Don’t forget to update this every time.

- Background: A brief history of the study, under five lines.

- Goals: Try to sum it up with one sentence, but if you have multiple goals, use a short bulleted list.

- Research Questions: Make it clear these are the questions you hope to answer with the study, not the questions you’ll be asking the participants.

- Methodology: Since we’re outside of an academic environment, a simple what, where, and for how long will suffice.

- Participants: The specific characteristics of the people you’re looking for, and why.

- Schedule: Include the three important dates: when recruitment starts, when the study takes place, and when the results will be ready.

- Script Placeholder: Until the full-study script is available, a simple “TBD” is fine.

With the usability checklist in hand, all the key players will be on the same page, so to speak. We’ve provided a free usability testing kit (which includes a testing report) so that you can incorporate these points.
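If you keep your plans in a shared repository or a script, the outline above could be modeled along these lines. This is a minimal sketch, not a format Tomer Sharon prescribes: the class name, field names, schedule keys, and sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields

# Hypothetical keys for the three important dates.
REQUIRED_DATES = ("recruitment_starts", "study_takes_place", "results_ready")


@dataclass
class UsabilityTestPlan:
    title: str
    author_and_stakeholders: list
    date: str
    background: str
    goals: list
    research_questions: list
    methodology: str
    participants: str
    schedule: dict
    script: str = "TBD"  # placeholder until the full script exists

    def problems(self):
        """List anything that keeps the plan from matching the outline."""
        issues = [f"{f.name} is empty" for f in fields(self) if not getattr(self, f.name)]
        if len(self.background.splitlines()) >= 5:
            issues.append("background should be under five lines")
        for key in REQUIRED_DATES:
            if key not in self.schedule:
                issues.append(f"schedule is missing {key}")
        return issues


# Made-up plan, purely for demonstration.
plan = UsabilityTestPlan(
    title="Yelp redesign: scripted task test",
    author_and_stakeholders=["UX researcher", "Product manager"],
    date="(today's date)",
    background="We are redesigning search and want to see where users struggle.",
    goals=["Find the biggest obstacles to choosing a venue"],
    research_questions=["Can users find a venue that seats 15 people?"],
    methodology="Remote sessions, 30 minutes each",
    participants="US desktop users, with and without Yelp accounts",
    schedule={"recruitment_starts": "week 1", "study_takes_place": "week 2"},
)
print(plan.problems())  # ['schedule is missing results_ready']
```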

The Takeaway

What we’ve covered today should give you a rock-solid foundation for knowing how to pick both your tests and your participants.

However, if you’re looking for even more detail, grab a copy of my free 109-page Guide to Usability Testing. This includes best practices from companies like Apple, Buffer, DirecTV, and others.

If you’re looking for some help in creating your usability test plan, try The Guide to UX Design Process & Documentation.

Finally, we can’t stress enough the importance of these pre-planning phases. The type of test and users you go with will have the biggest impact on your results, and going with the wrong choices will greatly reduce the accuracy of your data.

Having a solid plan can make all the difference, and ensure that you meet your own personal needs.

Frequently Asked Questions on Choosing Usability Test Participants

What factors should I consider when selecting participants for usability testing?

When selecting participants for usability testing, it’s crucial to consider factors such as the target audience of the product, the participants’ familiarity with similar products, and their availability for testing. The participants should ideally represent a cross-section of your product’s user base, including both experienced and novice users. It’s also important to consider the participants’ technical proficiency, as this can significantly impact the results of the usability test.

How many participants do I need for a usability test?

The number of participants needed for a usability test can vary depending on the complexity of the product and the scope of the test. However, research suggests that testing with five users generally allows for the identification of about 85% of usability problems. It’s important to remember that the goal of usability testing is not to identify every single issue, but to uncover trends and major issues that could impact user experience.
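The “about 85%” figure is usually traced to a model by Jakob Nielsen and Tom Landauer, in which the share of problems found with n users is 1 - (1 - L)^n, where L is the chance that a single user exposes a given problem. The sketch below assumes their reported average of L = 0.31; the function name is hypothetical, and your own product’s rate may well differ.

```python
def share_of_problems_found(users, discovery_rate=0.31):
    """Estimated share of usability problems found with `users` testers.

    Uses 1 - (1 - L)^n with L = discovery_rate, the assumed chance that
    one user runs into any given problem.
    """
    if users < 0:
        raise ValueError("users cannot be negative")
    return 1 - (1 - discovery_rate) ** users


for n in (1, 3, 5, 10):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%}")
```

With these assumptions, five users come out at roughly 84%, in line with the figure above, and each additional user adds less than the one before.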

How can I avoid bias in usability testing?

To avoid bias in usability testing, it’s important to ensure that the test is conducted in a neutral and controlled environment. The test facilitator should avoid leading questions and should not influence the participants’ actions or responses in any way. It’s also crucial to select a diverse group of participants to ensure a wide range of perspectives.

What methods can I use for usability testing?

There are several methods you can use for usability testing, including moderated in-person testing, remote testing, and unmoderated testing. The choice of method depends on factors such as the nature of the product, the objectives of the test, and the resources available.

How do I interpret the results of a usability test?

Interpreting the results of a usability test involves analyzing the data collected during the test to identify trends and patterns. This can include analyzing the time taken to complete tasks, the number of errors made, and the participants’ subjective feedback. It’s important to consider these results in the context of the product’s goals and the needs of its users.
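As a starting point for that analysis, the measures mentioned above can be computed in a few lines. This is a sketch under assumed conditions: the session records and their field names are invented for the example, and it covers a single closed task.

```python
from statistics import mean, median

# Made-up session records for one closed task, purely for demonstration.
sessions = [
    {"participant": "P1", "completed": True, "seconds": 42, "errors": 0},
    {"participant": "P2", "completed": True, "seconds": 65, "errors": 1},
    {"participant": "P3", "completed": False, "seconds": 120, "errors": 4},
    {"participant": "P4", "completed": True, "seconds": 58, "errors": 2},
    {"participant": "P5", "completed": True, "seconds": 51, "errors": 0},
]


def summarize(sessions):
    """Return success rate, median time on task, and mean error count."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    # Time on task is only meaningful for participants who finished.
    times = [s["seconds"] for s in sessions if s["completed"]]
    return {
        "success_rate": len(times) / len(sessions),
        "median_seconds": median(times) if times else None,
        "mean_errors": mean([s["errors"] for s in sessions]),
    }


print(summarize(sessions))
# {'success_rate': 0.8, 'median_seconds': 54.5, 'mean_errors': 1.4}
```

The median is used for time on task because a single very slow session would otherwise skew the average; numbers like these still need to be read alongside the participants’ subjective feedback.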

How can I ensure the reliability of my usability test results?

To ensure the reliability of your usability test results, it’s important to conduct the test in a controlled environment and to use a consistent testing protocol. It’s also crucial to select a representative sample of participants and to conduct multiple rounds of testing if possible.

What is the role of a facilitator in a usability test?

The facilitator plays a crucial role in a usability test. They guide the participant through the test, ensuring that they understand the tasks they are supposed to perform. The facilitator also observes and records the participant’s actions and reactions during the test.

How can I recruit participants for a usability test?

Recruiting participants for a usability test can be done through various methods, including online advertisements, social media, and recruitment agencies. It’s important to clearly communicate the purpose of the test and the expectations for participants.

What should I include in a usability test script?

A usability test script should include a brief introduction explaining the purpose of the test, a description of the tasks the participant will be asked to perform, and a set of questions to gather feedback. The script should be clear, concise, and free of technical jargon.

How can I improve the effectiveness of my usability tests?

To improve the effectiveness of your usability tests, it’s important to clearly define the objectives of the test, select a representative sample of participants, and use a consistent testing protocol. It’s also crucial to thoroughly analyze the test results and to use this analysis to inform improvements to the product.

Jerry Cao is a UX content strategist at UXPin – the wireframing and prototyping app. His Interaction Design Best Practices: Volume 1 ebook contains visual case studies of IxD from top companies like Google, Yahoo, AirBnB, and 30 others.