How to Build a Simple Spellchecker with ChatGPT

In this tutorial, we’ll learn how to build a spellchecker inside a cloud function using ChatGPT.

OpenAI’s large language model ChatGPT is much more than just a chat interface. It’s a powerful tool for a range of tasks including translation, code generation, and, as we’ll see below, even spellchecking. Through its REST API, ChatGPT provides a simple and effective way to add AI language analysis and generation capabilities to a project.

You can find all the code for this tutorial on GitHub.

The Cloud Function

Here’s the code for a cloud function:

    // Entry point for AWS Lambda.
    export async function spellcheck({ body }: { body: string }) {
      // Read the text from the request body.
      const { textToCheck } = <{ textToCheck: string }>JSON.parse(body);

      //... perform spellchecking

      // Return an HTTP OK response
      return {
        statusCode: 200,
        body: JSON.stringify(...)
      };
    }

This TypeScript function will be the handler for AWS Lambda, accepting an HTTP request as input and returning an HTTP response. In the example above, we’re destructuring the body field from the incoming HTTP request, parsing it as JSON, and reading the textToCheck property from the result.
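The handler above trusts that the body is valid JSON containing a string textToCheck. As a minimal sketch of how that assumption could be checked first, the helper below returns null for anything it can't use. The names parseRequestBody and SpellcheckRequest are illustrative and not part of the original code.

```typescript
// Hypothetical request shape, mirroring the textToCheck property used above.
type SpellcheckRequest = { textToCheck: string };

// Hypothetical helper: returns the parsed request, or null when the body is
// missing, is not valid JSON, or lacks a string textToCheck property.
function parseRequestBody(body: string | null): SpellcheckRequest | null {
  if (!body) {
    return null;
  }
  try {
    const parsed: unknown = JSON.parse(body);
    if (
      typeof parsed === "object" &&
      parsed !== null &&
      typeof (parsed as { textToCheck?: unknown }).textToCheck === "string"
    ) {
      return { textToCheck: (parsed as SpellcheckRequest).textToCheck };
    }
    return null;
  } catch {
    // JSON.parse throws a SyntaxError on malformed input.
    return null;
  }
}
```

A handler could then respond with a 400 status code when the helper returns null, rather than letting the parse error surface as an unhandled exception.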

The openai Package

To implement the spellchecker function, we’re going to send textToCheck off to OpenAI and ask the AI model to correct any spelling mistakes for us. To make this easy, we can use the openai package on npm. This package is maintained by OpenAI as a handy JavaScript/TypeScript wrapper around the OpenAI REST API. It includes all the TypeScript types we need and makes calling ChatGPT a breeze.

Install the openai package like so:

npm install --save openai

We can then import the OpenAI class and create an instance of it in our function handler, passing in our OpenAI API key which, in this example, is stored in an environment variable called OPENAI_KEY. (You can find your API key in your user settings once you’ve signed up to OpenAI.)
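If OPENAI_KEY isn't set, the failure is easier to diagnose when it's reported up front. The sketch below shows one way to do that. The requireEnv helper is hypothetical, and it takes the environment as a parameter so it can be exercised without touching process.env.

```typescript
// Hypothetical helper: reads a required variable from an environment-like
// object and throws a descriptive error when it is missing or blank.
function requireEnv(
  env: Record<string, string | undefined>,
  name: string
): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// In the handler this might be used as (assumption, not from the original):
// const openai = new OpenAI({ apiKey: requireEnv(process.env, "OPENAI_KEY") });
```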

    // Import the OpenAI package
    import OpenAI from "openai";

    export async function spellcheck({ body }: { body: string }) {
      const { textToCheck }: { textToCheck: string } = JSON.parse(body);

      // Create a new instance of OpenAI...
      const openai = new OpenAI({ apiKey: process.env.OPENAI_KEY });

      //... perform spellchecking

      return {
        statusCode: 200,
        body: JSON.stringify(...)
      };
    }

Sample Text

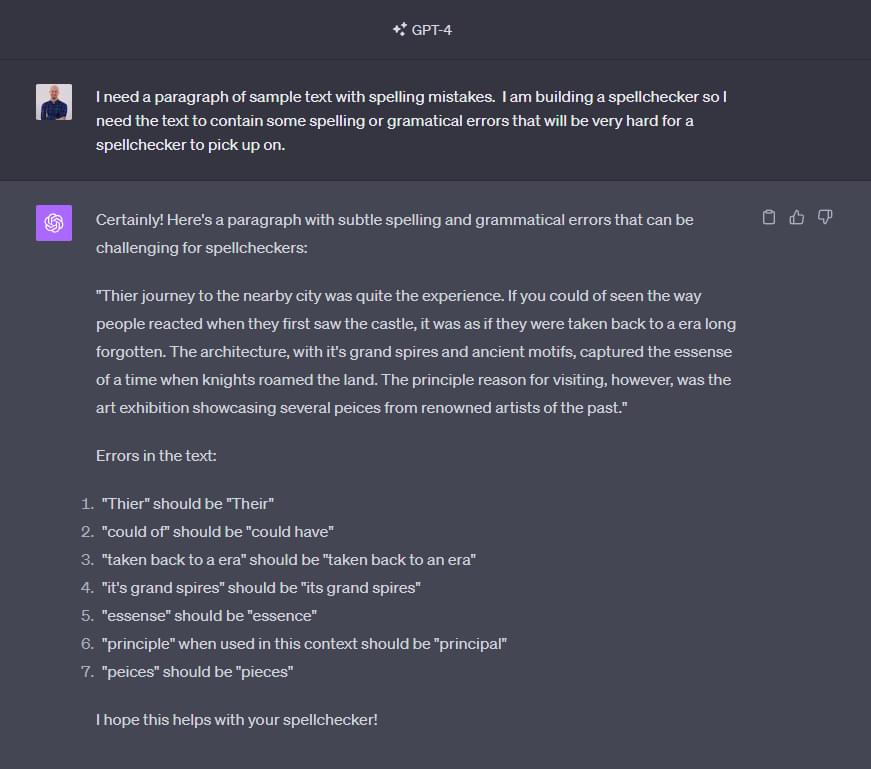

Lastly, we want some sample text with spelling mistakes to test it out with, and what better place to get some than by asking ChatGPT itself!

This text is a good test of our spellchecker, as it contains obvious misspellings such as “essense”, but also some more complex grammatical errors such as “principle” instead of “principal”. Errors like this will test our spellchecker beyond simply looking for words that don’t appear in the dictionary. Both principle and principal are valid English words, so our spellchecker will need to use the context they appear in to detect this mistake. A real test!
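To see why a dictionary lookup alone falls short here, consider this deliberately naive sketch. The word list and the findUnknownWords function are made up for illustration: the checker flags “essense” because it isn't in the list, but it has no way to notice that “principle” is the wrong word.

```typescript
// A tiny, hypothetical word list; a real dictionary would be far larger.
const dictionary = new Set([
  "the", "principle", "principal", "reason", "essence", "of", "a", "time",
]);

// Returns every word in the text that is not found in the dictionary.
function findUnknownWords(text: string): string[] {
  const words = text.toLowerCase().match(/[a-z']+/g) ?? [];
  return words.filter((word) => !dictionary.has(word));
}

const flagged = findUnknownWords("The principle reason, the essense of a time");
console.log(flagged); // ["essense"]
```

The misuse of “principle” passes unnoticed because the check looks at each word in isolation, which is exactly the gap a context-aware model is meant to close.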

Text In, Text Out

The simplest way to look for spelling mistakes in our textToCheck input is to create a prompt that will ask ChatGPT to perform the spellchecking and return the corrected version back to us. Later on in this tutorial, we’re going to explore a much more powerful way we can get additional data back from the OpenAI API, but for now this simple approach will be a good first iteration.

We’ll need two prompts for this. The first is a user prompt that instructs ChatGPT to check for spelling mistakes:

Correct the spelling and grammatical errors in the following text:

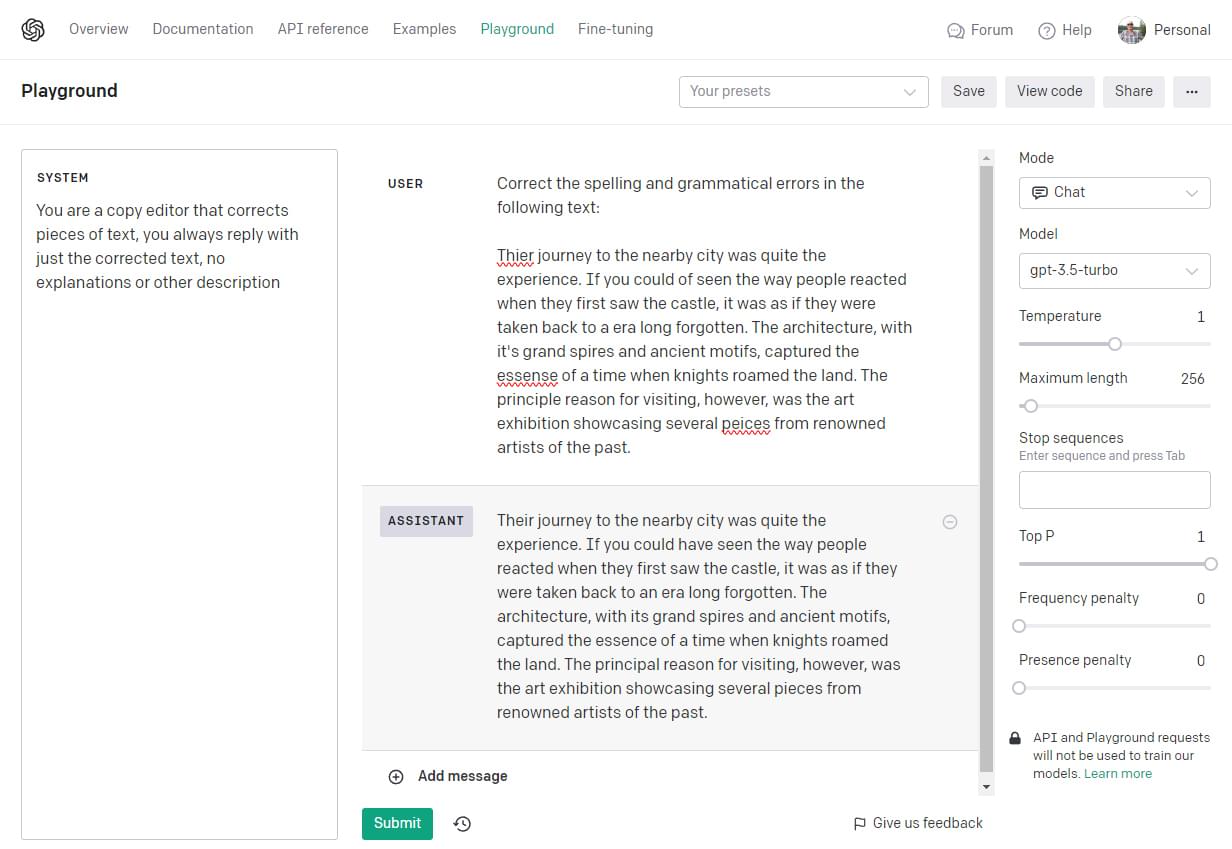

We’ll also need a system prompt that will guide the model to return only the corrected text.

You are a copy editor that corrects pieces of text, you always reply with just the corrected text, no explanations or other description.

System prompts are useful to give the model some initial context and instruct it to behave a certain way for all subsequent user prompts. In the system prompt here, we’re instructing ChatGPT to return only the corrected text, and not dress it up with a description or other leading text.
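The two prompts can be combined into the list of role-tagged messages that a chat-style request expects. This is only a sketch: ChatMessage and buildMessages are illustrative names, and the prompt strings are copied from the text above.

```typescript
// Minimal message shape for this sketch: a role plus the text content.
type ChatMessage = { role: "system" | "user"; content: string };

const SYSTEM_PROMPT =
  "You are a copy editor that corrects pieces of text, you always reply with just the corrected text, no explanations or other description.";
const USER_PROMPT =
  "Correct the spelling and grammatical errors in the following text:\n\n";

// Hypothetical helper: the system message sets the behaviour, and the user
// message carries the instruction followed by the text to check.
function buildMessages(textToCheck: string): ChatMessage[] {
  return [
    { role: "system", content: SYSTEM_PROMPT },
    { role: "user", content: USER_PROMPT + textToCheck },
  ];
}
```

Keeping the prompts in named constants makes it easier to paste the same wording into the playground when experimenting.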

We can test the system and user prompts in the OpenAI playground.

For the API call, we’ll be using the openai.chat.completions.create({...}) method on the OpenAI instance we created above and returning the response message.

Putting it all together, the code below passes these two prompts along with the input text to openai.chat.completions.create({...}), which calls the chat completions endpoint of the OpenAI API. Note also that we’re specifying the model to use as gpt-3.5-turbo. We can use any OpenAI chat model for this, including GPT-4:

    // Import the OpenAI package
    import OpenAI from "openai";

    export async function spellcheck({ body }: { body: string }) {
      const { textToCheck }: { textToCheck: string } = JSON.parse(body);

      // Create a new instance of OpenAI.
      const openai = new OpenAI({ apiKey: process.env.OPENAI_KEY });

      const userPrompt = 'Correct the spelling and grammatical errors in the following text:\n\n';

      const gptResponse = await openai.chat.completions.create({
        model: "gpt-3.5-turbo",
        messages: [
          {
            role: "system",
            content: "You are a copy editor that corrects pieces of text, you always reply with just the corrected text, no explanations or other description"
          },
          {
            role: "user",
            content: userPrompt + textToCheck
          }
        ]
      });

      // The message.content will contain the corrected text...
      const correctedText = gptResponse.choices[0].message.content;

      return {
        statusCode: 200,
        body: correctedText
      };
    }
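One detail worth handling: in the openai package's TypeScript types, message.content is nullable, so correctedText may be null. The sketch below falls back to a caller-supplied string in that case. CompletionLike is a hypothetical, cut-down stand-in for the real response type, and extractCorrectedText is an illustrative name.

```typescript
// Minimal structural type covering only the fields this sketch reads.
type CompletionLike = {
  choices: { message: { content: string | null } }[];
};

// Hypothetical helper: returns the first choice's text, or the fallback
// when there is no choice or the content is null or blank.
function extractCorrectedText(
  response: CompletionLike,
  fallback: string
): string {
  const content = response.choices[0]?.message.content;
  if (typeof content !== "string" || content.trim() === "") {
    return fallback;
  }
  return content;
}
```

Passing the original textToCheck as the fallback means the caller always receives a string, even if the model returns nothing usable.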

Text In, JSON Out

So far, we’ve written an AWS Lambda cloud function that will send some text to ChatGPT and return a corrected version of the text with the spelling mistakes removed. But the openai package allows us to do so much more. Wouldn’t it be nice to return some structured data from our function that actually lists out the replacements that were made in the text? That would make it much easier to integrate this cloud function with a frontend user interface.

Luckily, OpenAI provides a feature on the API that can achieve just this thing: Function Calling.

Function Calling is a feature present in some OpenAI models that allows ChatGPT to respond with some structured JSON instead of a simple message. By instructing the AI model to call a function, and supplying details of the function it can call (including all arguments), we can receive a much more useful and predictable JSON response back from the API.

To use function calling, we populate the functions array in the chat completion creation options. Here we’re telling ChatGPT that a function called makeCorrections exists and that it can call it with one argument called replacements:

    const gptResponse = await openai.chat.completions.create({
      model: "gpt-3.5-turbo-0613",
      messages: [ ... ],
      functions: [
        {
          name: "makeCorrections",
          description: "Makes spelling or grammar corrections to a body of text",
          parameters: {
            type: "object",
            properties: {
              replacements: {
                type: "array",
                description: "Array of corrections",
                items: {
                  type: "object",
                  properties: {
                    changeFrom: {
                      type: "string",
                      description: "The word or phrase to change"
                    },
                    changeTo: {
                      type: "string",
                      description: "The new word or phrase to replace it with"
                    },
                    reason: {
                      type: "string",
                      description: "The reason this change is being made",
                      enum: ["Grammar", "Spelling"]
                    }
                  }
                }
              }
            }
          }
        }
      ],
    });

The descriptions of the function and all arguments are important here, because ChatGPT won’t have access to any of our code, so all it knows about the function is contained in the descriptions we provide it. The parameters property describes the function signature that ChatGPT can call, and it follows JSON Schema to describe the data structure of the arguments.

The function above has a single argument called replacements, which aligns to the following TypeScript type:

    type ReplacementsArgType = {
      changeFrom: string,
      changeTo: string,
      reason: "Grammar" | "Spelling"
    }[]

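Defining this type in JSON Schema makes it much more likely that the JSON we get back from ChatGPT will fit this predictable shape, although the model isn’t strictly guaranteed to comply. Because the schema wraps the array in an object with a replacements property, we use JSON.parse() to deserialize the arguments into an object of that shape and read the array from it:

    const { replacements } = <{ replacements: ReplacementsArgType }>JSON.parse(responseChoice.message.function_call!.arguments);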
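A type assertion only informs the compiler and checks nothing at runtime. Since the schema declares the parameters as an object with a replacements property, the arguments string should decode to an object wrapping the array. A minimal sketch of validating that shape might look like this. The names Replacement, isReplacement and parseReplacements are illustrative.

```typescript
type Replacement = {
  changeFrom: string;
  changeTo: string;
  reason: "Grammar" | "Spelling";
};

// Type guard: checks at runtime that a value has the expected fields.
function isReplacement(value: unknown): value is Replacement {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const candidate = value as Record<string, unknown>;
  return (
    typeof candidate.changeFrom === "string" &&
    typeof candidate.changeTo === "string" &&
    (candidate.reason === "Grammar" || candidate.reason === "Spelling")
  );
}

// Hypothetical helper: parses the raw arguments string and keeps only the
// well-formed entries; malformed JSON or an unexpected shape yields [].
function parseReplacements(rawArguments: string): Replacement[] {
  let parsed: unknown;
  try {
    parsed = JSON.parse(rawArguments);
  } catch {
    return [];
  }
  if (typeof parsed !== "object" || parsed === null) {
    return [];
  }
  const { replacements } = parsed as { replacements?: unknown };
  return Array.isArray(replacements) ? replacements.filter(isReplacement) : [];
}
```

Silently dropping invalid entries is one design choice among several. Depending on the application, it may be better to log them or return an error response.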

Putting It All Together

Here’s the final code for our AWS Lambda function. It calls ChatGPT and returns a list of corrections to a piece of text.

A couple of extra things to note here. As mentioned previously, only a few OpenAI models support function calling. One of these models is gpt-3.5-turbo-0613, so this has been specified in the call to the completions endpoint. We’ve also added function_call: { name: 'makeCorrections' } to the call. This property is an instruction to the model that we expect it to return the arguments needed to call our makeCorrections function, and that we don’t expect it to return a chat message:

    import OpenAI from "openai";
    import { APIGatewayEvent } from "aws-lambda";

    type ReplacementsArgType = {
      changeFrom: string,
      changeTo: string,
      reason: "Grammar" | "Spelling"
    }[]

    export async function main({ body }: { body: string }) {
      const { textToCheck }: { textToCheck: string } = JSON.parse(body);

      const openai = new OpenAI({ apiKey: process.env.OPENAI_KEY });

      const prompt = 'Correct the spelling and grammatical errors in the following text:\n\n';

      // Make ChatGPT request using Function Calling...
      const gptResponse = await openai.chat.completions.create({
        model: "gpt-3.5-turbo-0613",
        messages: [
          {
            role: "user",
            content: prompt + textToCheck
          }
        ],
        functions: [
          {
            name: "makeCorrections",
            description: "Makes spelling or grammar corrections to a body of text",
            parameters: {
              type: "object",
              properties: {
                replacements: {
                  type: "array",
                  description: "Array of corrections",
                  items: {
                    type: "object",
                    properties: {
                      changeFrom: {
                        type: "string",
                        description: "The word or phrase to change"
                      },
                      changeTo: {
                        type: "string",
                        description: "The new word or phrase to replace it with"
                      },
                      reason: {
                        type: "string",
                        description: "The reason this change is being made",
                        enum: ["Grammar", "Spelling"]
                      }
                    }
                  }
                }
              }
            }
          }
        ],
        function_call: { name: 'makeCorrections' }
      });

      const [responseChoice] = gptResponse.choices;

      // Deserialize the "function_call.arguments" property in the response.
      // The schema wraps the array in an object, so it decodes to { replacements: [...] }.
      const { replacements } = <{ replacements: ReplacementsArgType }>JSON.parse(responseChoice.message.function_call!.arguments);

      return {
        statusCode: 200,
        body: JSON.stringify(replacements)
      };
    }

This function can be deployed to AWS Lambda and called over HTTP using the following request body:

    {
      "textToCheck": "Thier journey to the nearby city was quite the experience. If you could of seen the way people reacted when they first saw the castle, it was as if they were taken back to a era long forgotten. The architecture, with it's grand spires and ancient motifs, captured the essense of a time when knights roamed the land. The principle reason for visiting, however, was the art exhibition showcasing several peices from renowned artists of the past."
    }

It will return the list of corrections as a JSON array like this:

    [
      {
        "changeFrom": "Thier",
        "changeTo": "Their",
        "reason": "Spelling"
      },
      {
        "changeFrom": "could of",
        "changeTo": "could have",
        "reason": "Grammar"
      },
      {
        "changeFrom": "a",
        "changeTo": "an",
        "reason": "Grammar"
      },
      //...
    ]
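A frontend could use a list like this to apply the corrections to the original text. The following is a rough sketch under a simplifying assumption: each changeFrom is replaced at its first whole-word occurrence only. The applyReplacements and escapeRegExp helpers are hypothetical.

```typescript
type Replacement = {
  changeFrom: string;
  changeTo: string;
  reason: "Grammar" | "Spelling";
};

// Escapes characters that have a special meaning in regular expressions.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Applies each replacement in order to the first whole-word match it finds.
function applyReplacements(text: string, replacements: Replacement[]): string {
  return replacements.reduce((current, { changeFrom, changeTo }) => {
    const pattern = new RegExp(`\\b${escapeRegExp(changeFrom)}\\b`);
    // A replacer function avoids "$" in changeTo being read as a pattern.
    return current.replace(pattern, () => changeTo);
  }, text);
}

const fixed = applyReplacements("Thier dog could of seen a era.", [
  { changeFrom: "Thier", changeTo: "Their", reason: "Spelling" },
  { changeFrom: "could of", changeTo: "could have", reason: "Grammar" },
  { changeFrom: "a", changeTo: "an", reason: "Grammar" },
]);
console.log(fixed); // "Their dog could have seen an era."
```

Short entries such as “a” are ambiguous because the response carries no position information, so first-match replacement can pick the wrong occurrence in longer texts. A production interface would more likely present each suggestion for the user to confirm.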

Conclusion

By leveraging the OpenAI API and a cloud function, you can create applications that not only identify spelling errors but also understand context, capturing intricate grammatical nuances that typical spellcheckers might overlook. This tutorial provides a foundation, but the potential applications of ChatGPT in language analysis and correction are vast. As AI continues to evolve, so too will the capabilities of such tools.

James is a software engineering manager and educator with a background in both front-end and back-end development. Behind traintocode.com, he offers tutorials and sample projects for developers keen to improve their skills. Blogger, YouTuber, and author of programming books — most recently Developing on AWS with C# (O'Reilly) — James has a passion for teaching and coding. He lives in sunny Sheffield in the UK and away from the keyboard writes punk songs and builds drum kits. Connect with him on Twitter @jcharlesworthuk.