A/B Testing: Here’s How You Could Be Doing It Wrong

Key Takeaways

- A/B testing is often done incorrectly: UserTesting.com found that 90% of its internal A/B tests failed, and VWO found that only 14% of tests produced a statistically significant winning result, which points to the need for a more strategic approach to testing.

- Rote testing, or mindlessly applying A/B testing strategies found in case studies and blogs to your unique website, is unlikely to yield significant improvements in conversion rates.

- Shotgun testing, a high-velocity approach with a poor win rate and low average impact, can lead to wasted resources and minimal impact on key business metrics.

- To achieve significant results from A/B testing, one should test big, test often, have a large sample size, avoid calling tests too early, and be open to retesting if results seem too good to be true.

David Ogilvy, one of the founding fathers of modern advertising, once said “Never stop testing, and your advertising will never stop improving.” Back in 2008, Bill Gates remarked that “we should use the A/B testing methodology a lot more than we do.”

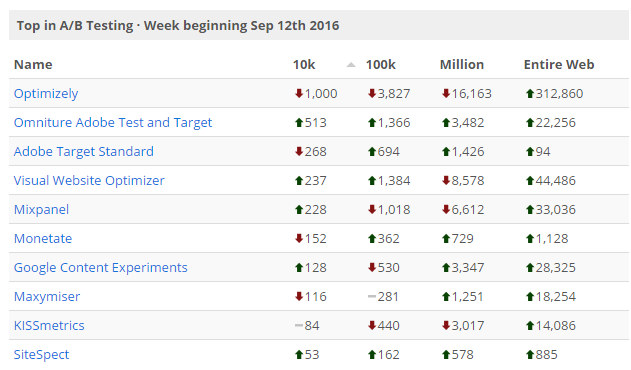

In the modern web world, thanks to a variety of user-friendly software tools, split testing and conversion optimization are becoming more commonplace and accessible. With over 330,000 active websites using A/B split testing tool Optimizely, and over 78,000 active websites using Visual Website Optimizer, it’s no secret that split testing is gaining in popularity. Of the leading online retailers and businesses, nearly 12,000 have either one or the other of these popular services running.

Despite the proliferation of A/B testing software so easy your grandmother could launch her first A/B test, UserTesting.com found that 90% of their internal A/B tests failed. A similar study by VWO found that only 1 in every 7 A/B tests (14%) produced a statistically significant winning result. Taken together, that means that typically only about one test in seven to ten will have any significant impact on conversion rates, and even then the average improvement on the most important metrics (online purchases, revenue, leads) is usually low.

Is the push toward embracing A/B testing just a bunch of hype with no substance? We don’t think so. Chances are you’re just doing it wrong. Here’s what we’ve observed in the last 5 years of having done A/B testing for more global brands (and smaller companies) than you can shake a (very scientific) stick at.

Rote Testing

In school, rote learning was when your teachers decided it would be easier to simply get you to memorize the answers or mnemonics so you could pass the test.

The A/B testing equivalent of this is peeking at A/B testing case studies and blogs and mindlessly trying their ideas yourself, on your own website, which has its own unique audience.

If you have a brief search around for A/B testing case studies and blogs, you’ll often come across overly simplistic ideas (either explicitly or implied):

- ‘Your call to action has to be ABOVE the fold!’

- ‘Having a video converts better!’

- ‘Long copy beats short copy!’

- ‘Short copy beats long copy!’

- ‘Single-page check-out beats multi-step check-out!’

- ‘Multi-step check-out outperforms single-page check-out!’

- ‘Fewer form fields are ALWAYS better than more form fields!’

For each of the above, I have seen winning A/B tests that ‘prove’ it and winning A/B tests that ‘disprove’ it (across various market segments and audiences).

Here’s an example from WhichTestWon where leading Australian home loan and mortgage broker brand, Aussie, undertook an A/B test with the variation including a video (in addition to some other changes).

I’ve often heard people talk in very general terms about web video as if it is a certainty that having a video will always make your page perform better. Contrary to that, the version without the video actually generated 64% more mortgage application leads.

If you’re thinking about A/B testing in a simplistic ‘rote’ way, it’s very unlikely that you’re going to have an A/B test that produces a 100% or greater improvement in conversion rate or have a greater than average percentage of winning tests.

Holding on to these dogmatic ideas suggests that you’re thinking about A/B testing in a way that will not produce optimal results.

Human beings (who, after all, are the ones we’re attempting to influence with our experiments) are too complicated to be reliably persuaded by such changes, even when taken in reasonable sample sizes. We’re also all quite different, with unique dreams, hopes, aspirations, goals, needs, wants, personalities, problems and psychograms.

“Shotgun” Testing

If you review the past test results of many organizations heavily involved and invested in A/B testing, you’ll often come across a phenomenon that I like to call ‘Shotgun Testing’.

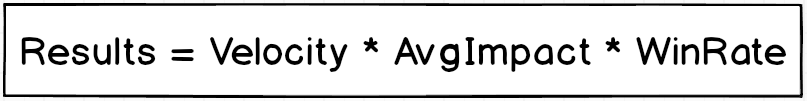

To maximize your A/B testing results, you should work on improving your test velocity, average impact per test and win rate. ‘Shotgun’ testing is when you have the velocity, but a low average impact per test and a poor win rate.

‘Shotgun Testing’ is when an optimization team has a very high velocity rate in their A/B testing approach but the win rate and average impact are terrible. They’re testing everything under the sun and the output is a very high volume and velocity of A/B tests. But when you review the results of each, you notice they’re basically throwing a bunch of stuff against a wall and hoping something will stick.
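One rough way to see why velocity alone is not enough is to fold the three levers into a single back-of-the-envelope number. The sketch below assumes that every winning test delivers the same average relative lift and that wins compound. That is a simplification of ours, not a formula from any testing tool, and the function name and the example figures for the two teams are hypothetical.

```python
def expected_annual_lift(tests_per_year: int, win_rate: float,
                         avg_lift_per_win: float) -> float:
    """Rough compounded relative lift after one year of testing.

    Assumes each winning test gives the same relative lift and that
    lifts compound multiplicatively. Both are simplifying assumptions.
    """
    expected_wins = tests_per_year * win_rate
    return (1 + avg_lift_per_win) ** expected_wins - 1


# Hypothetical teams: the figures are illustrative, not measured.
shotgun = expected_annual_lift(tests_per_year=100, win_rate=0.05,
                               avg_lift_per_win=0.01)
focused = expected_annual_lift(tests_per_year=24, win_rate=0.25,
                               avg_lift_per_win=0.08)

print(f"shotgun team: {shotgun:.1%}")
print(f"focused team: {focused:.1%}")
```

With these made-up inputs, the team running a quarter as many tests ends the year far ahead, because both its win rate and its average impact per win are higher.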

Occasionally, you’ll hear statements like ‘well, at least we’re learning’ (which is true) — but the value of learning without impacting your business’ key metrics is difficult to quantify.

In organizations where shotgun testing is prevalent, little thought is put into attempting to determine which test ideas are likely to have high impact or low impact. Most will not have heard of WhichTestWon and it’s almost a certainty that no specific methodology is followed for test ideation (there’s a reason why we came up with our conversion methodology at Web Marketing ROI).

The Dreaded Button Color Test

It’s not difficult to come up with test ideas. It is, however, a challenge to come up with (and implement) split testing ideas with high impact potential for your target audience.

When you read ideas like a 77% jump in CTR just by changing the wording on a button, or that Google split tested 41 shades of blue to find the one that performs best, you may be inclined to think that these kinds of tests involving minutiae are simple to implement and always bring about big results.

But nothing could be further from the truth.

In order to produce large winners, it helps to think big. You shouldn’t be settling for simple changes. If your A/B test can be implemented in a couple lines of jQuery — it’s probably too small.

Think about your customers. What are they really after in this market? Is this what you think they’re after, or do you know for a fact? If you’re not sure, run a survey poll with Hotjar or Qualaroo on the thank-you page and ask them “What’s one thing that nearly stopped you from purchasing/registering today?”

What is your value proposition? Is it communicated clearly in your copy? What would the ideal value proposition in your market be?

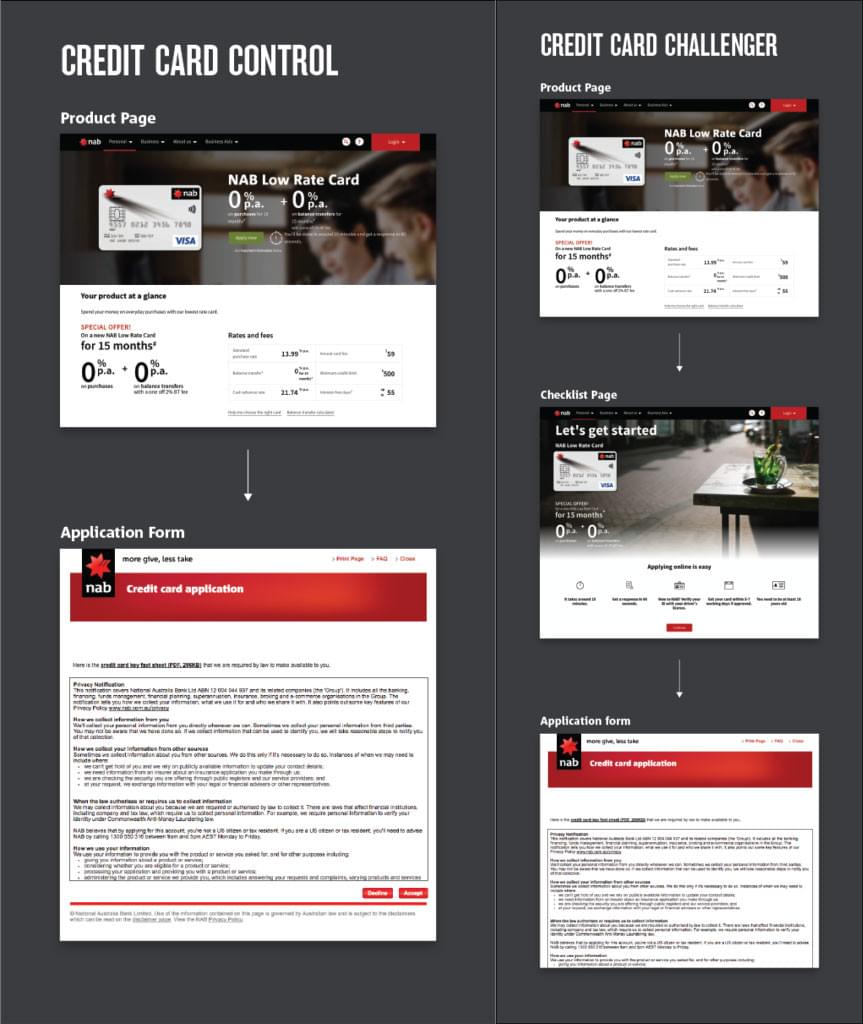

Look at this example, from WhichTestWon, showing two sign-up flows for National Australia Bank (NAB).

So, which sign-up flow won? The second. About 40% of those surveyed on WhichTestWon incorrectly guessed the ‘Credit Card Control’ (Version A). The results were that form conversion rate increased 23.6% and total applications per visit increased by 9.5% (with 99% confidence). This is a great illustration of the fact that predicting how users will behave isn’t always easy.

Swing for the Fences

It’s really tempting to look up the “best split tests” to get an idea of what changes have worked for others. It’s also a recipe for disaster.

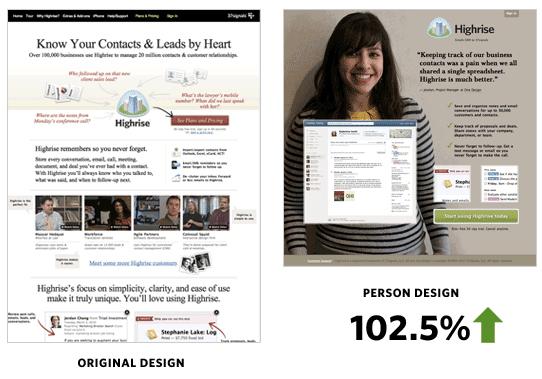

When 37signals attempted to impact conversion rate for Highrise, they completely redesigned the home-page, as per below:

The result was a 102.50% improvement in conversion rate. They didn’t just change one call-to-action or headline, they completely redesigned the home-page (with some expert educated guesses of what was likely to perform) and changed:

- Headline

- Copy

- Background image

- Entire visual design

- Call to action text

- Call to action color

- The way the value proposition was communicated

And it paid off. Big. They doubled their sign-ups on the same level of traffic. It also could have tanked, but if you don’t swing for the fences, it’s almost a certainty you’ll never achieve big wins. The other advantage is that you get to statistical significance much quicker, because a larger difference between variations needs fewer visitors to detect.
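To make the link between the size of a change and the time to significance concrete, here is a minimal sketch using the standard normal-approximation sample size formula for comparing two proportions. The 3% baseline conversion rate, the 5% significance level and the 80% power are assumptions chosen for illustration, and `sample_size_per_variant` is a hypothetical helper, not part of any testing tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variant (two-sided two-proportion z-test)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical 3% baseline conversion rate.
for lift in (0.05, 0.20, 1.00):
    needed = sample_size_per_variant(0.03, lift)
    print(f"{lift:.0%} relative lift: {needed:,} visitors per variant")
```

Under these assumptions, a variation that doubles the conversion rate needs far fewer visitors per variant than one that nudges it up by 5%, which is why bold tests tend to conclude sooner.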

Failing the Statistics Part

Up until now, we’ve mostly dealt with the psychology of split testing — how to make sure you’re attracting the right audience and making your offer compelling. But what about the tests themselves? By far, the biggest mistakes you can make are:

- Calling a test too early, and/or

- Not using a large enough sample size

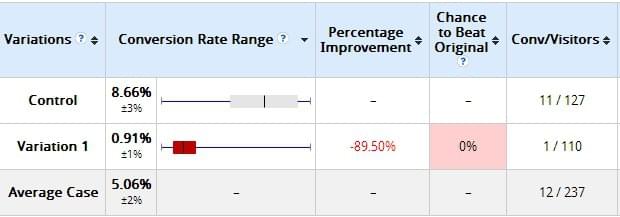

A common scenario that you will have come across if you’ve done a substantial amount of testing is a situation where you launch a new A/B test and in the first couple days, it shows terrible results — indicating that the test is a possible failure, such as below:

Image Source: ConversionXL

In the above test screenshot from VWO, we see the variation is tanking. Badly. It’s losing by nearly 90%.

The temptation when someone new to A/B testing sees this is to scrap the variation and try something else.

The ultimate rule of A/B testing (and statistics in general) is: don’t call your test too early. The same test was left running for an additional week or so (it takes courage and dedication to data accuracy to keep a test running when it appears to be failing) and sure enough, the results showed:

Image Source: ConversionXL

Ten days later, the variation that was being badly beaten in the competition was now running full speed ahead — with a 95% chance to beat the control. This is information you never could have known if you had called the test too early.
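One way to see why early readings mislead is to simulate tests in which both versions are actually identical, then count how often a daily peek declares a “significant” difference anyway. The sketch below is an illustration under assumed figures (200 visitors per arm per day, a 10% conversion rate, a 14-day test), not a reconstruction of the ConversionXL example. The function names are hypothetical, and the pooled z-test is just one common way to judge significance.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return False
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def simulate_aa_tests(runs=500, days=14, daily_visitors=200, rate=0.10, seed=1):
    """Return (false positive rate with daily peeking, rate when judged on the last day only)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        flagged_at_any_peek = False
        significant_today = False
        for _ in range(days):
            n += daily_visitors
            # Both arms share the same true rate, so any "winner" is noise.
            conv_a += sum(rng.random() < rate for _ in range(daily_visitors))
            conv_b += sum(rng.random() < rate for _ in range(daily_visitors))
            significant_today = is_significant(conv_a, n, conv_b, n)
            flagged_at_any_peek = flagged_at_any_peek or significant_today
        peeking_hits += flagged_at_any_peek
        fixed_hits += significant_today  # value from the final day
    return peeking_hits / runs, fixed_hits / runs


peeking_rate, fixed_rate = simulate_aa_tests()
print(f"called at the first significant peek: {peeking_rate:.1%} false positives")
print(f"called only on the final day:        {fixed_rate:.1%} false positives")
```

Any run that is significant on the final day also counts for the peeking strategy, so the peeking false positive rate can never be lower than the fixed-duration one. With this many looks it is typically several times higher.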

We recommend setting your minimum test duration (based upon your traffic estimates) before the test even begins, and then setting it in stone.
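A minimal sketch of that calculation, assuming you already have a required sample size per variant and an estimate of daily traffic to the tested page. Rounding up to whole weeks is our assumption (so that every day of the week is represented equally), and `minimum_test_days` and its parameters are hypothetical names.

```python
from math import ceil


def minimum_test_days(sample_per_variant: int, daily_visitors: int,
                      variants: int = 2, traffic_share: float = 1.0) -> int:
    """Days needed to reach the sample size, rounded up to whole weeks.

    traffic_share is the fraction of page traffic entered into the test.
    """
    per_variant_per_day = daily_visitors * traffic_share / variants
    if per_variant_per_day <= 0:
        raise ValueError("the test must receive some traffic")
    raw_days = ceil(sample_per_variant / per_variant_per_day)
    return ceil(raw_days / 7) * 7  # assumption: always run full weeks


# Hypothetical inputs: 12,000 visitors needed per variant, 1,500 visitors a day.
days = minimum_test_days(sample_per_variant=12_000, daily_visitors=1_500)
print(days)  # 21 (16 days of traffic, rounded up to 3 full weeks)
```

Writing the number down before launch makes it harder to talk yourself into stopping early when the first few days look dramatic in either direction.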

If in doubt (or if a result seems too good to be true), don’t be afraid to re-test the exact same experiment in a new A/B test.

A/B Testing Done Right

Small, incremental changes and changing one factor at a time are great when you’re getting started with A/B testing, but the key to true A/B testing results is to test big, test often, ideate correctly, have a large sample size and avoid calling your test too quickly.

If the results seem too good to be true, don’t be afraid to test and retest. A/A tests are your friend.

By taking the time to better understand your goals and how they align with the action(s) you want your audience to take, as well as what happens after prospects take those actions, you’ll be setting your tests up for success right from the start. And in the process, you’ll be leaving your competition behind.

Frequently Asked Questions (FAQs) about A/B Testing

What is the importance of A/B testing in digital marketing?

A/B testing is a crucial component of digital marketing as it allows marketers to compare two versions of a web page, email, or other marketing asset to determine which one performs better. It’s a way to test changes to your webpage against the current design and determine which one produces positive results. It is a method to validate any new design or change to an element on your webpage.

How does A/B testing work?

A/B testing involves two versions of a webpage: A (the control) and B (the variant). These versions are identical except for one variation that might affect a user’s behavior. Half of the users are shown the original (A) and the other half are shown the modified page (B). The engagement is then measured and analyzed through statistical methods to determine which version performed better.
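As an illustration of the “statistical methods” step, here is a minimal sketch of a pooled two-proportion z-test, one common way to compare two conversion rates. Testing tools may use different methods, so treat this as an example rather than a description of what any particular product does. The visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (relative lift of B over A, two-sided p-value)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return (rate_b - rate_a) / rate_a, p_value


# Hypothetical results: 10,000 visitors per version.
lift, p_value = two_proportion_z_test(conv_a=200, n_a=10_000,
                                      conv_b=260, n_b=10_000)
print(f"relative lift: {lift:.1%}, p-value: {p_value:.4f}")
```

A small p-value says a difference this large would be unlikely if the two versions really converted equally. It is only trustworthy if the sample size and duration were fixed in advance rather than chosen after looking at the results.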

What elements can be tested through A/B testing?

Almost any element of a webpage or marketing asset can be tested through A/B testing. This includes, but is not limited to, headlines, subheadlines, paragraph text, testimonials, call to action text, call to action buttons, links, images, content near the fold, social proof, media mentions, and awards and badges.

What are some best practices for A/B testing?

Some best practices for A/B testing include testing one thing at a time to ensure you know exactly what caused the change, running the test long enough to get statistically significant results, and testing early and often to continually improve your results. It’s also important to have a clear hypothesis and goal before starting the test.

How can I ensure my A/B testing is successful?

To ensure your A/B testing is successful, it’s important to have a clear hypothesis and goal, test one thing at a time, and run the test long enough to get statistically significant results. It’s also crucial to analyze your results thoroughly and apply what you’ve learned to future tests.

What are some common mistakes in A/B testing?

Some common mistakes in A/B testing include not running the test long enough to get statistically significant results, testing too many things at once, and not having a clear hypothesis and goal. It’s also a mistake to not analyze your results thoroughly and apply what you’ve learned to future tests.

How can A/B testing improve my conversion rates?

A/B testing can improve your conversion rates by allowing you to test changes to your webpage or marketing asset and see which version your audience responds to better. By continually testing and optimizing your pages, you can improve your conversion rates and overall marketing performance.

What tools can I use for A/B testing?

There are many tools available for A/B testing, including Google Optimize, Optimizely, VWO, and Unbounce. These tools allow you to easily create and run tests, and analyze the results.

Can A/B testing be used for email marketing?

Yes, A/B testing can be used for email marketing. You can test different subject lines, email content, calls to action, and more to see what your audience responds to best and improve your email marketing performance.

How does A/B testing relate to user experience (UX)?

A/B testing is a key part of improving user experience (UX). By testing different versions of your webpage or marketing asset, you can see what your audience responds to best and make changes to improve their experience.

James Spittal is the founder of Web Marketing ROI, a digital marketing agency obsessed with conversion rate optimisation and A/B testing. They help brands with high-traffic websites optimize their conversion rate using A/B testing and personalization.