This is the editorial for the July 25th edition of the SitePoint PHP newsletter.

Why is a string called a string? Have you ever given this some thought? We never use such a word in contexts other than programming for a set of letters sticking together, and yet – in programming it’s as pervasive as the word “variable”. Why is that, and where does it come from?

To find out, we have to tackle some related terms first. History lesson time!

The word font is derived from the French fonte – something that has been melted; a casting. Given that letters for printing presses were literally made of metal and smelted at type foundries, that makes sense.

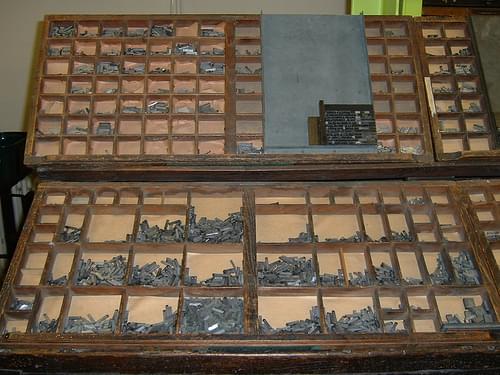

The terms uppercase and lowercase refer to the literal part of the case in which the font was transported. So the printer (person) had a heavy case he lugged around or had set up at a printing press, and in this case were two “levels” – an upper case, and a lower case. The upper case contained only – you guessed it – UPPERCASE letters, while the lower case only contained lowercase ones.

You’ll notice that there were more lowercase letters than uppercase ones. This was to be expected – a letter could only be used once on a single page and after all, a written body of text will have many more lowercase letters than uppercase ones, as there was no such thing as YouTube comments and CAPS LOCK yet.

So how does all this relate to strings?

Well, as printing became more mainstream and printing presses began offering their services to individuals, not just newspapers and publishers, it is said they decided to charge based on the length of the printed material – length in feet. Granted, a lot of this is speculative, but if they strung together the produced, printed material, they could easily estimate the costs and bill customers. So we can conclude with a reasonable degree of certainty that they used the word string in this context as a sequence of characters.

Edit, July 26th 2017: As pointed out in the comments below, it seems that there was an actual string in use to tie the character blocks together as they were transported to the press after being assembled! A Twitter follower even sent me a video demonstrating the process!

Still, how does this relate to the programming field? I mean, you could say a string of anything in regard to anything at all and it would make a degree of sense in the non-programming world. It’s just a word that can be applied quite easily to things in general, even though it usually isn’t.

What if we look across academia for first references?

In 1944’s Recursively enumerable sets of positive integers and their decision problems we have a mention that could vaguely resemble the modern definition:

For working purposes, we introduce the letter b, and consider “strings” of 1’s and b’s such as 11b1bb1.

In this paper, the term refers to a sequence of identical symbols, so a string of 1’s or a string of b’s. Not exactly our definition but it’s a start.

Then, a full 14 years later, in 1958’s A Programming Language for Mechanical Translation, the word is used thusly, and only once:

Each continuous string of letters between punctuation marks or spaces is looked up in the dictionary.

Okay, kiiiind of similar to our notion of strings, but it seems like he’s just describing, well, words. Obviously, that cannot apply – it’s too generic. For some reason, though, it seems to have stuck.

In 1958’s A command language for handling strings of symbols, the word string is used in exactly the same way we use it today, albeit not defined as such.

We find one more reference in 1959, The COMIT system for mechanical translation:

If we want to replace D SIN(F) by COS(F) D (F), where F is unrestricted and may be any arbitrary sequence of constituents, we use the notation $ to stand for this string.

Interesting! Here’s the dollar sign we all know from PHP, and which was (is?) actually the string symbol in BASIC.

Again in 1959 we have a more direct definition in The Share 709 System: Machine Implementation of Symbolic Programming:

The text is a linearly ordered string of bits representing the rest of the information required in the loading and listing processes.

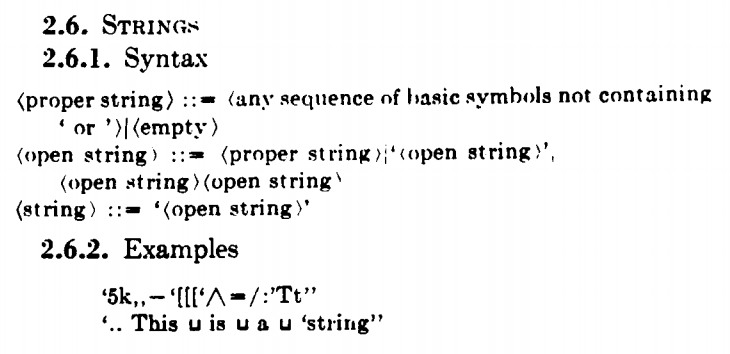

In fact, it was through ALGOL in April of 1960 that string seems to have taken its modern-day shorthand form “string” (up until then people said string of [something]). See this paper’s abstract.

Then finally, in May 1960, the Report on the Algorithmic Language Algol 60 mentions it in a form that hits home.

From there, it just takes off like a modern day meme.

In 1963 METEOR: A LISP Interpreter for String Transformations goes with the rather unspecific “[…] but certain simple transformations of linear lists (strings) are awkward to define in this notation.”

In 1964, On declaring arbitrarily coded alphabets mentions “character strings”.

Searching ACM reveals a bunch of other resources in the 60s and later which all now use the term regularly, so it seems the 60s were a catalyst in the term’s evolution and made it what it is today, slowly, through the needs of the systems it found itself in. Kind of funny that it ended up representing a similar concept as in the printing press days – a set of characters which has a meaning and carries with it some costs (only this time, in memory).

As a side note – consider all those papers from over 60 years ago. 60 years ago they had computer science problems they were solving on punch cards, and writing about in academic papers. And here we are in 2017 with 2017 JavaScript frameworks, fighting about who can have sex with whom in Drupal’s community and trying to redefine the word Facade over and over again. While we’re arguing about the rocket science of “stuff goes into a box, stuff comes out of a box” that is modern web development, those people back then shaped the entire world by translating the analog environments they found themselves in into digital, by essentially tricking a little bit of sand into remembering numbers.

Conclusion

So now we know – or at least think we know – where string comes from. Computer science has always been a dark space of mysteries and slow evolution, and just like we now know that the human eye has had half-stages and semi-eyes in its past, so too have terms in computer science evolved past and around their original meaning, until they gave us what we have today. The 1960s, in various locations all at once, gave birth to the same concept with the same name, until it evolved into one unified term that we all understand and use today and, most importantly, can agree on.

When you think about it, was there a better word we could have used? While string hardly feels natural due to the complete detachment from a similar term in the “real world” (we don’t call words on a book’s page “strings”), I fail to think of any term which would fit this popular data type better. Can you? Let me know.

Frequently Asked Questions (FAQs) about Strings in Computer Programming

What is the historical origin of the term ‘string’ in computer science?

The term ‘string’ in computer science is believed to have originated from the phrase ‘string of characters’. It was first used in this context during the early days of programming, when data was often represented as a sequence or ‘string’ of alphanumeric characters. This term was adopted to describe data types in programming languages that are sequences of characters, and it has stuck around ever since.

How does a string differ from other data types in programming?

A string is a sequence of characters, which can include letters, numbers, and special characters. Unlike other data types such as integers or booleans, which represent numerical or true/false values respectively, strings are used to represent and manipulate text. They are a fundamental data type in virtually all programming languages, and they come with a variety of built-in methods for manipulation and analysis.

Why are strings immutable in some programming languages?

In some programming languages like Java and Python, strings are immutable. This means once a string is created, it cannot be changed. This design choice is primarily for efficiency and security reasons. Since strings are often used extensively in programs, making them immutable allows the system to optimize memory usage and processing speed. It also prevents potential security risks associated with mutable strings.
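As a minimal sketch of what immutability looks like in practice, the Python snippet below tries to overwrite one character of a string and then builds a modified copy instead. The variable names are illustrative only.

```python
greeting = "string"

# Item assignment is rejected because str objects are immutable.
try:
    greeting[0] = "S"
    mutated = True
except TypeError:
    mutated = False

# "Changing" a string really means building a new object;
# the original value is left untouched.
capitalized = "S" + greeting[1:]

print(mutated)      # False
print(greeting)     # string
print(capitalized)  # String
```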

How are strings stored in memory?

Strings are typically stored in memory as a sequence of characters, each occupying a certain number of bytes depending on the character encoding used. For example, in ASCII each character occupies one byte, while in Unicode encodings the size varies: UTF-8 uses one to four bytes per character, and UTF-16 uses two or four. In C, the end of the string is marked with a special null character; many other languages store the length alongside the data instead.
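To make the effect of encoding on size concrete, here is a small Python sketch that encodes the same five-character text in two Unicode encodings and counts the resulting bytes. The sample word is an arbitrary choice for illustration.

```python
# Five characters; "\u00ef" is the single code point for i with diaeresis.
text = "na\u00efve"

utf8_bytes = text.encode("utf-8")
utf16_bytes = text.encode("utf-16-le")  # little-endian, no byte-order mark

length_in_chars = len(text)         # number of code points
length_in_utf8 = len(utf8_bytes)    # ASCII letters take 1 byte, the accented one takes 2
length_in_utf16 = len(utf16_bytes)  # every character here takes 2 bytes

print(length_in_chars, length_in_utf8, length_in_utf16)  # 5 6 10
```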

What are some common operations that can be performed on strings?

There are many operations that can be performed on strings, including concatenation (joining two strings together), substring extraction (getting a part of the string), string comparison (checking if two strings are equal), and string searching (finding a particular character or substring within a string). These operations are typically provided as built-in methods in programming languages.
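The four operations named above might look like this in Python. This is a sketch using built-in operators and `str.find`; other languages expose the same ideas under different names.

```python
first = "character"
second = "string"

joined = first + " " + second     # concatenation
part = joined[0:4]                # substring extraction via slicing
same = (second == "string")       # comparison by value
position = joined.find("string")  # searching: index of the first match
missing = joined.find("font")     # find() returns -1 when nothing matches

print(joined)    # character string
print(part)      # char
print(same)      # True
print(position)  # 10
print(missing)   # -1
```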

How can strings be converted to other data types?

Most programming languages provide functions or methods to convert strings to other data types. For example, in Python, you can use the int() function to convert a numeric string to an integer, or the float() function to convert it to a floating-point number. However, these conversions will fail if the string does not represent a valid number.
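A short Python sketch of these conversions follows, including the failure case. The helper `to_number` is hypothetical, written only for this illustration; `int()` and `float()` are the built-ins mentioned above.

```python
def to_number(text):
    """Hypothetical helper: parse text as an int, then as a float, else return None."""
    for convert in (int, float):
        try:
            return convert(text)
        except ValueError:
            # The string is not a valid literal for this type; try the next one.
            continue
    return None

print(to_number("42"))         # 42
print(to_number("3.5"))        # 3.5
print(to_number("forty-two"))  # None
```

Catching `ValueError` rather than letting it propagate is a design choice for the example; whether invalid input should be an error or a `None` depends on the program.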

What is string interpolation?

String interpolation is a programming technique where variables or expressions are inserted directly into a string. This is often used to format strings in a more readable and convenient way. The syntax for string interpolation varies between programming languages.
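As one example of that syntax, Python (3.6 and later) offers f-strings, where expressions inside braces are evaluated and inserted into the text. The values below are placeholders chosen for the sketch.

```python
term = "string"
year = 1960

# Variables are substituted where the braces appear.
sentence = f"The shorthand '{term}' appears around {year}."

# Arbitrary expressions work too, not just variable names.
expression = f"{year} was {2017 - year} years before 2017."

print(sentence)    # The shorthand 'string' appears around 1960.
print(expression)  # 1960 was 57 years before 2017.
```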

What is the difference between a string and a character array?

A string is a sequence of characters, while a character array is an array where each element is a character. In some programming languages like C, strings are represented as character arrays with a null character at the end. However, in many high-level languages, strings are a separate data type with their own methods and properties.
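Python has no C-style character array, but a list of one-character strings is a rough analogue. The sketch below shows the distinction under that assumption: the list can be edited in place, the string cannot.

```python
word = "string"

chars = list(word)        # ['s', 't', 'r', 'i', 'n', 'g']
chars[0] = "S"            # a list element can be replaced in place
rebuilt = "".join(chars)  # turn the character list back into a string

print(chars)    # ['S', 't', 'r', 'i', 'n', 'g']
print(rebuilt)  # String
print(word)     # string (the original is unchanged)
```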

What is a string literal?

A string literal is a string that is written directly into the source code of a program. It is typically enclosed in quotes, either single or double depending on the programming language. String literals are treated as constant values, and in some languages, they are immutable.

How are special characters represented in strings?

Special characters in strings, such as newline, tab, or quote characters, are typically represented using escape sequences. An escape sequence is a backslash (\) followed by a character or sequence of characters. The exact syntax and available escape sequences vary between programming languages.
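A few common escape sequences, sketched in Python. The raw-string prefix `r` is Python-specific and is included only to show one way a language can switch escapes off.

```python
two_lines = "first\nsecond"   # \n is a single newline character
tabbed = "name\tvalue"        # \t is a single tab character
quoted = "She said \"hi\""    # \" places a double quote inside a double-quoted literal
backslash = "C:\\temp"        # \\ produces one literal backslash
raw = r"C:\temp"              # raw string: the backslash is kept as written

print(len(two_lines))    # 12
print(quoted)            # She said "hi"
print(backslash == raw)  # True
```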

Bruno is a blockchain developer and technical educator at the Web3 Foundation, the foundation that's building the next generation of the free people's internet. He runs two newsletters you should subscribe to if you're interested in Web3.0: Dot Leap covers ecosystem and tech development of Web3, and NFT Review covers the evolution of the non-fungible token (digital collectibles) ecosystem inside this emerging new web. His current passion project is RMRK.app, the most advanced NFT system in the world, which allows NFTs to own other NFTs, NFTs to react to emotion, NFTs to be governed democratically, and NFTs to be multiple things at once.