Which IDEs do Rubyists Use?

Key Takeaways

- The majority of Rubyists prefer not to use an IDE (Integrated Development Environment), with 74% of interviewees opting for text editors over IDEs. Notable figures in the Ruby community, Yukihiro Matsumoto (Matz) and David Heinemeier Hansson (DHH), also prefer text editors, specifically Emacs and TextMate respectively.

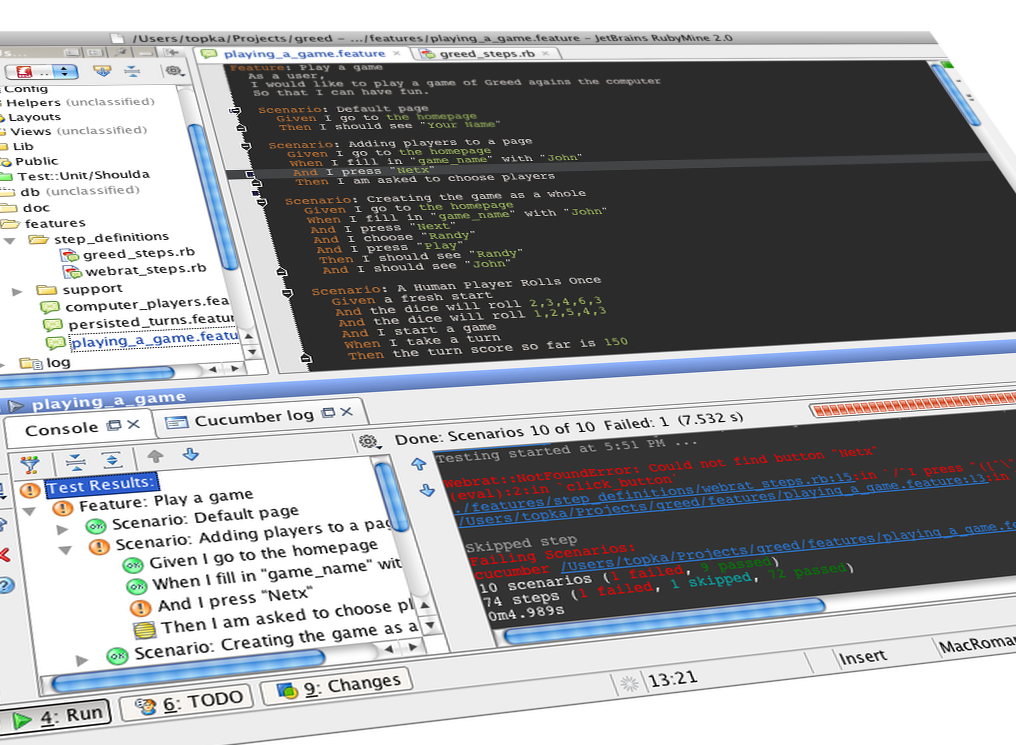

- Among those who do use an IDE, RubyMine is the most popular choice. It offers features such as ‘click and follow’, Git annotate, Git compare, refactoring, and splitting views. These features provide benefits such as easy navigation, tracking of code changes, comparison of different versions of a file, automatic code cleanup and the ability to view multiple sections of code simultaneously.

- Despite the benefits and features offered by IDEs like RubyMine, many Rubyists choose text editors for their simplicity and flexibility. These tools can be customized with plugins and modifications to gain certain IDE-like features, although this requires advanced skills.

In a previous article, I interviewed several well-known Rubyists about their favorite editor. Some of the readers were disappointed that the article focused only on text editors and not IDEs. So, I decided to interview Rubyists about their favorite IDE and report back my findings (we hate having disappointed readers at SitePoint…).

Before getting to the meat of the article, I’ll quickly talk about the differences between text editors and IDEs, as I found that there seems to be some confusion between these two kinds of tools.

Text Editor vs. IDE

In my article about Ruby editors, many commenters and tweeters asked about RubyMine. I replied that RubyMine is an IDE, not a text editor. But, then I got to thinking, is there really a difference between a text editor and an IDE? Or, can they simply be used interchangeably?

According to A Beginner’s Guide to Using an IDE Versus a Text Editor, a text editor is a tool that creates and edits files containing only plain text. It is a simple way to write code. Once the code is written using a text editor, you can compile and run that code using a command-line tool.

Many text editors come with features like syntax highlighting, which makes the code easier to read, and some let the programmer compile and run the program without switching to a command-line tool.

An IDE (Integrated Development Environment), however, is a more powerful tool that provides many features, including the text editor features.

Other features that IDEs provide are:

- automatic code completion, presenting the programmer with a list of methods or variables that complete the currently typed text.

- version control, allowing the programmer to commit and roll back code changes.
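To make the code completion idea concrete, here is a minimal sketch in Ruby. It is not how RubyMine or any other IDE implements completion; the `complete` helper is a hypothetical name invented for this illustration. It simply asks an object for its public methods and keeps those that start with the text typed so far.

```ruby
# Hypothetical helper (not part of any IDE): list the public methods of an
# object whose names begin with the text typed so far, roughly what a
# completion popup would present.
def complete(receiver, typed)
  receiver.public_methods
          .map(&:to_s)
          .select { |name| name.start_with?(typed) }
          .sort
end

# Suggestions for a String after the programmer has typed "up".
puts complete("hello", "up")
```

A real IDE does much more, such as inferring types without running the code, but the basic idea of filtering candidates by prefix is the same.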

In truth, a text editor can be equipped with just about any feature that an IDE offers. The difference is the effort to gain the features. Text editors (like Vim, Emacs) require plugins or other modifications to gain certain IDE-like features. The skills needed to install these features are, often, advanced. IDEs typically come with features like syntax highlighting and version control integration built right in.

To IDE or Not to IDE?

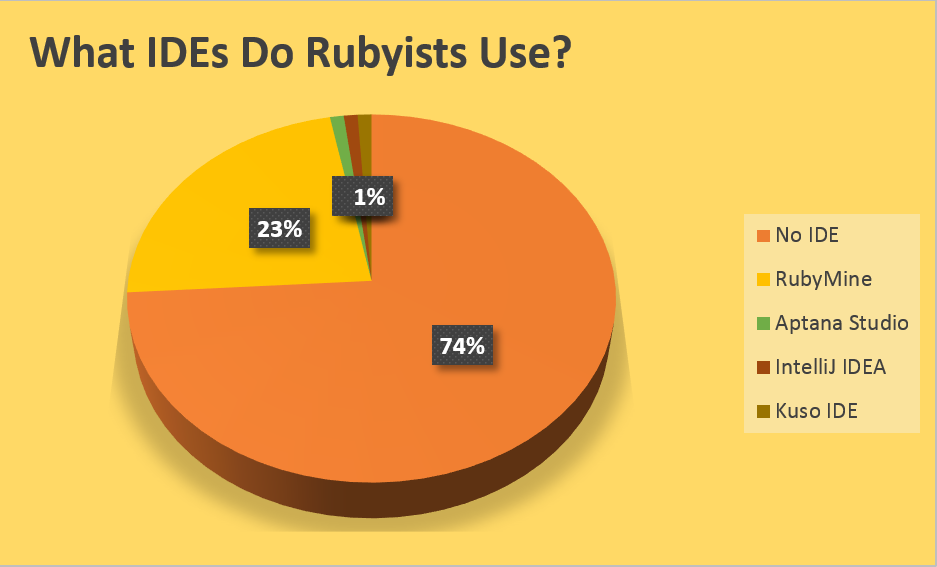

I spoke to 100 Rubyists. Some replied explicitly that they don’t use IDEs, while others mentioned what we call text editors. For those who replied with a text editor preference, I am counting their answer under the No IDE option.

Here are the results of the interviews:

- No IDE (Rubyists not favoring IDEs)

- RubyMine

- Aptana Studio

- IntelliJ IDEA

- Kuso IDE

No IDE was, by far, the most preferred choice, being mentioned by 74% of the interviewees. The charts below tell the tale:

As you can guess, I spoke to two of the most well-known Rubyists: Yukihiro Matsumoto (Matz) and David Heinemeier Hansson (DHH). If you don’t know, Matz is the creator of Ruby as a language, and DHH is responsible for Ruby on Rails. Matz prefers Emacs, while DHH uses the original version of TextMate. I find it very interesting that two pillars of Ruby don’t use IDEs, surely something to consider when choosing your toolset.

In the Ruby editors article, I had a chance to talk a bit about Vim, the editor of choice for Rubyists based on the sample set I interviewed. Here, I find myself a bit embarrassed when it comes to this part of the article, since the preferred IDE for Rubyists is No IDE!

RubyMine

Although No IDE was, by far, the choice of the Rubyists interviewed, I cannot end this article without talking about the features of the most-chosen Ruby IDE, at least for the sample interviewed in this article.

From the charts above, we can see that RubyMine is the IDE of choice for Rubyists. But, what’s so special about RubyMine?

Let’s ask Natasha, who used TextMate and Sublime Text as her editors of choice before starting to use RubyMine. Natasha states that there is no going back, and mentions some features that she loves about RubyMine.

One of those features is click and follow. Here, when you click on a function, RubyMine will take you to the function being called. This feature is very useful if you have multiple functions with the same name, and residing in different files or folders.
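Ruby itself can report where a method was defined, which gives a feel for what "click and follow" has to work out. The sketch below is only an illustration and not RubyMine's implementation; the `Invoice` and `Cart` classes and the `definition_of` helper are hypothetical names. It uses Ruby's built-in `source_location` to tell apart two methods that share a name.

```ruby
# Two hypothetical classes that both define a method named `total`.
class Invoice
  def total
    100
  end
end

class Cart
  def total
    25
  end
end

# Hypothetical helper: return the [file, line] pair where klass#name is
# defined. source_location returns nil for methods implemented in C.
def definition_of(klass, name)
  klass.instance_method(name).source_location
end

[Invoice, Cart].each do |klass|
  file, line = definition_of(klass, :total)
  puts "#{klass}#total is defined at #{file}:#{line}"
end
```

An IDE resolves this without running your program, but the result is the same kind of answer: a file and a line number to jump to.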

Another feature RubyMine provides is Git annotate. This feature is nice when working on team projects. It allows you to see who was the last person on the team to write or change a function, for instance. It makes it very easy to get in touch with that person if you have any questions about why such changes were made.

Another Git feature is Git compare. This wonderful feature allows you to compare the current file with the same file on a different branch. If you want, you can also make the comparison with a previous version of that file on the same branch. That could be very, very useful.

Jorge also used to use TextMate before falling in love with RubyMine. Jorge really loves the Refactoring feature of RubyMine. This feature enables you to automatically execute well-known refactorings, such as rename or extract method/variables, quickly cleaning up the code.
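For readers who have not seen an "extract method" refactoring, here is a small before-and-after sketch. The method names (`receipt_before`, `receipt_after`, `order_total`) are made up for this illustration, and the code is hand-written rather than produced by RubyMine; it only shows the kind of behavior-preserving change the refactoring tools automate.

```ruby
# Before: one method both adds up the prices and formats the result.
def receipt_before(prices)
  total = prices.sum
  "Total: #{format('%.2f', total)}"
end

# After "extract method": the calculation has its own descriptive name
# and can be reused or tested separately.
def order_total(prices)
  prices.sum
end

def receipt_after(prices)
  "Total: #{format('%.2f', order_total(prices))}"
end

prices = [1.5, 2.25]
puts receipt_before(prices)
puts receipt_after(prices)
```

Both versions print the same receipt line, which is the point of a refactoring: the structure changes while the behavior stays the same.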

RubyMine also offers the capability of Splitting views, such that you can split the editor into independent views, while also keeping a set of tabs open in each view. For instance, you can have the code and its test displayed in parallel.

IDEs typically have a ton of integrated tools, and RubyMine is no different. You can run your web server or rake tasks within RubyMine. Run your tests with the built-in test runner, then easily browse to the failing tests. Also, code completion is a huge benefit, where RubyMine almost finishes your code thoughts before you do.

Are you surprised that more Rubyists don’t use an IDE? After reading this article, are you ready to try one out for yourself? Why do you think that most Rubyists prefer to go with editors rather than IDEs? Looking forward to your thoughts on that.

Frequently Asked Questions about Ruby IDEs

What are the key features to look for in a Ruby IDE?

When choosing a Ruby IDE, there are several key features to consider. Firstly, look for an IDE that offers syntax highlighting and code completion. These features can significantly speed up your coding process by providing visual cues and suggestions as you type. Secondly, consider whether the IDE supports debugging and testing. These features can help you identify and fix errors in your code more efficiently. Thirdly, check if the IDE offers version control integration. This can make it easier to manage different versions of your code and collaborate with others. Lastly, consider the IDE’s performance and ease of use. A good IDE should be fast, responsive, and intuitive to use.

How does RubyMine compare to other Ruby IDEs?

RubyMine, developed by JetBrains, is one of the most popular IDEs for Ruby. It offers a wide range of features, including intelligent code completion, on-the-fly error checking, and quick-fixes. It also supports debugging, testing, and version control. Compared to other Ruby IDEs, RubyMine stands out for its robustness, performance, and comprehensive feature set. However, it’s worth noting that RubyMine is a commercial product, so it may not be the best choice for those on a tight budget.

Are there any free IDEs for Ruby?

Yes, there are free options for Ruby, although most of them are better described as extensible text editors than as full IDEs. Atom and Visual Studio Code are free to use, while Sublime Text can be evaluated for free but requires a paid license for continued use. While these tools may not offer as many features out of the box as commercial products like RubyMine, they are still powerful and can meet the needs of many Ruby developers. They also offer the advantage of being customizable, allowing you to add features and functionality through plugins and extensions.

How important is it to use an IDE specifically designed for Ruby?

Using an IDE specifically designed for Ruby can offer several advantages. These IDEs are typically equipped with features that can help you write, debug, and test Ruby code more efficiently. They also offer syntax highlighting and code completion for Ruby, which can speed up your coding process. However, it’s worth noting that many general-purpose IDEs also support Ruby and can be a good choice if you work with multiple programming languages.

Can I use a text editor instead of an IDE for Ruby development?

Yes, you can use a text editor for Ruby development. Text editors like Atom, Sublime Text, and Visual Studio Code are lightweight and fast, making them a good choice for simple projects or for developers who prefer a minimalist setup. However, text editors typically don’t offer as many features as IDEs, so you may need to install additional plugins or extensions to get the functionality you need.

What are the system requirements for running a Ruby IDE?

The system requirements for running a Ruby IDE can vary depending on the specific IDE. However, most IDEs require a modern operating system (Windows, macOS, or Linux), a certain amount of RAM (usually at least 2GB), and enough disk space to store the IDE and your projects. Some IDEs may also require a specific version of Ruby to be installed on your system.

How can I improve the performance of my Ruby IDE?

There are several ways to improve the performance of your Ruby IDE. Firstly, make sure your system meets the IDE’s minimum requirements and consider upgrading your hardware if necessary. Secondly, disable any unnecessary plugins or extensions, as these can slow down the IDE. Lastly, regularly update your IDE to the latest version, as updates often include performance improvements and bug fixes.

Can I use a Ruby IDE for Rails development?

Yes, many Ruby IDEs also support Rails, a popular framework for building web applications with Ruby. These IDEs offer features like Rails-specific code completion, navigation, and refactoring, making them a good choice for Rails development.

How can I learn to use a new Ruby IDE effectively?

When learning to use a new Ruby IDE, start by familiarizing yourself with its interface and basic features. Most IDEs offer documentation and tutorials to help you get started. Practice using the IDE with small projects before moving on to larger ones. Don’t hesitate to use online resources and communities for help and advice.

How can I choose the best Ruby IDE for my needs?

Choosing the best Ruby IDE depends on your specific needs and preferences. Consider factors like the IDE’s feature set, performance, ease of use, and cost. Try out several IDEs to see which one you prefer. Remember that the best IDE for you is the one that helps you code most efficiently and enjoyably.

Doctor and author focussed on leveraging machine/deep learning and image processing in medical image analysis.