Performance, accessibility, and security have been among the most discussed topics of recent months, at least in my opinion. I’m very interested in them, and I try to get my head around each subject by reading about the new techniques and best practices unveiled by experts in these fields. If you’re a front-end developer, you should too, because these are the hottest subjects right now.

In this article I’ll focus on performance by discussing a JavaScript library I’ve developed, called Saveba.js. It tries to improve the performance of a website, and thus the experience of its users, by avoiding the download of some resources based on the user’s connection. I’ll also explain why I developed it, detailing the problems with the current approaches used by developers.

The Problem

When people talk about performance, the discussion always ends up including mobile. It’s certainly true that a website should be optimized for any device and connection, but home and office connections are often faster than mobile ones. Some of the most common techniques to optimize a website today are combining and minifying CSS and JavaScript files, loading JavaScript files asynchronously, providing modern font formats (WOFF and WOFF2), and optimizing for the critical rendering path.

Another important concept to take into account is the optimization of images. According to the latest HTTP Archive report, images account for more than 60% of a page’s total weight on average. To address this issue, many developers use tools like Grunt or Gulp, or services like TinyPNG or JPEGMini, to reduce their weight. Another practice is to employ the new srcset attribute and the new picture element to provide versions of the images optimized for the size of the viewport. But this is not enough.

Back in August, I wrote an article about the Network Information API, where I expressed my concern about the limitations of this approach. In particular I wrote:

> While this approach works well for serving up images of the right size and resolution, it isn’t ideal in all situations, video content being one example. What we really need in these cases is more information about the device’s network connection.

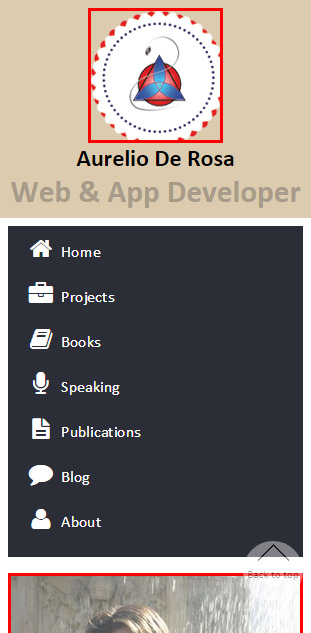

What I wanted to express is that if a user is on a really, really slow connection he/she may not care about some embellishment images or resources in general and want to focus on what really matters. Consider the following images that represents the current version of my website as seen on a Samsung Galaxy S3:

In this screenshot I have marked two images with a red border: a logo and an image of me. Now, the question is: “Would a user with a 2G connection care about those images, even if they are heavily optimized?” Not surprisingly, the answer is “No!” So, even if I can optimize those images for small devices, what I really need is to avoid downloading them at all for users on a given type of connection, or a set of connections like GPRS, EDGE, and UMTS. On the other hand, I do want to show those images to users who visit my website on a small device over a fast connection. My attempt to solve this issue led to the creation of Saveba.js.

Introducing Saveba.js

Saveba.js is a JavaScript library that, relying on the Network Information API, tries to save bandwidth for users with a slow connection by removing unnecessary resources (at the moment, images only). By “removing” I mean that Saveba.js replaces the images with a 1×1 px transparent GIF, so that users won’t see broken images while browsing the website. As for what’s considered unnecessary: if the user’s connection is slow, the library considers any non-cached image unnecessary. If the connection is average, only non-content images (images with an empty alt attribute) that aren’t in the browser’s cache are considered unnecessary. If the user has a fast connection, the library doesn’t perform any operation.
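The rules in the paragraph above boil down to a small decision function. The following is a hypothetical, DOM-free restatement, not Saveba.js’s own code: the function name `isUnnecessary`, the `'slow'`/`'average'`/`'fast'` labels, and the plain `{ alt, cached }` image objects are assumptions made for illustration. An `alt` of `null` stands for a missing attribute, which, like the `img[alt=""]` selector, is not treated as an empty one.

```javascript
// Hypothetical restatement of the rules above; not Saveba.js's own code.
// connectionClass is assumed to be 'slow', 'average', or 'fast'.
// image is a plain object { alt, cached }, where alt === null means the
// alt attribute is missing and cached tells whether it's in the cache.
function isUnnecessary(image, connectionClass) {
  // Cached images cost no extra bandwidth, so they are always kept.
  if (image.cached) {
    return false;
  }
  if (connectionClass === 'slow') {
    // On a slow connection every non-cached image can go.
    return true;
  }
  if (connectionClass === 'average') {
    // On an average connection only non-content images (empty alt) can go.
    return image.alt === '';
  }
  // On a fast connection nothing is removed.
  return false;
}

var images = [
  { alt: '', cached: false },            // decorative, not cached
  { alt: 'Photo of me', cached: false }, // content, not cached
  { alt: '', cached: true }              // decorative, already cached
];

['slow', 'average', 'fast'].forEach(function (connectionClass) {
  var result = images.map(function (image) {
    return isUnnecessary(image, connectionClass);
  });
  console.log(connectionClass, result);
});
// slow [ true, true, false ]
// average [ true, false, false ]
// fast [ false, false, false ]
```

Keeping the decision separate from the DOM like this makes the rules easy to test without a browser.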

For more information about how connections are classified, refer to the README of the library. Note that because Saveba.js is at a very early stage, I strongly suggest that you don’t use it in production. However, you may want to keep an eye on it.

Key Points of Saveba.js

In this section I’ll highlight some parts of the code to show you how I built the library. First, I set up some default values that help classify the connection in use and exclude any resource the developer wants the library to ignore:

```javascript
// Default values.
// Later exposed as saveba.defaults
var defaults = {
  // A NodeList or an Array of elements the library must ignore
  ignoredElements: [],
  // A Number specifying the speed in MB/s below which
  // a connection is considered slow
  slowMax: 0.5,
  // A Number specifying the minimum speed in MB/s at which
  // a connection is considered fast
  fastMin: 2
};
```

The second step is to detect whether the browser in use supports the Network Information API. If the API isn’t implemented, I terminate the execution of the code:

```javascript
// This code runs inside the library's wrapping function,
// which is why `return` can stop the execution early
var connection = window.navigator.connection ||
                 window.navigator.mozConnection ||
                 null;

// API not supported. Can't optimize the website
if (!connection) {
  return false;
}
```

The third step is to classify the connection in use based on the current configuration and on the version of the API supported:

```javascript
// Test whether the API supported is compliant with the old specification
var oldApi = 'metered' in connection;

var slowConnection =
  (oldApi && (connection.metered || connection.bandwidth < defaults.slowMax)) ||
  (!oldApi && (connection.type === 'bluetooth' || connection.type === 'cellular'));

var averageConnection = oldApi &&
  !connection.metered &&
  connection.bandwidth >= defaults.slowMax &&
  connection.bandwidth < defaults.fastMin;
```

Next, I retrieve the resources the library can optimize (at the moment, images only) and filter out those that are in the browser’s cache or that the developer wants to be ignored:

```javascript
var elements;

if (slowConnection) {
  // Select all images (non-content images and content images)
  elements = document.querySelectorAll('img');
} else if (averageConnection) {
  // Select non-content images only
  elements = document.querySelectorAll('img[alt=""]');
} else {
  // Fast connection: nothing to optimize, and `elements` would
  // otherwise be undefined below
  return false;
}

elements = [].slice.call(elements);

if (!Array.isArray(defaults.ignoredElements)) {
  defaults.ignoredElements = [].slice.call(defaults.ignoredElements);
}

// Filter out the resources specified in the ignoredElements property and
// those that are in the browser's cache.
// More info: http://stackoverflow.com/questions/7844982/using-image-complete-to-find-if-image-is-cached-on-chrome
elements = elements.filter(function(element) {
  return defaults.ignoredElements.indexOf(element) === -1 && !element.complete;
});
```

Finally, I replace the remaining resources with the placeholder, keeping a reference to the original source in an attribute called data-saveba:

```javascript
// Replace the targeted resources with a 1x1 px, transparent GIF.
// transparentGif holds the placeholder's URL and is defined elsewhere in the library.
for (var i = 0; i < elements.length; i++) {
  elements[i].dataset.saveba = elements[i].src;
  elements[i].src = transparentGif;
}
```

How to Use It In Your Website

To use Saveba.js, download the JavaScript file contained in the “src” folder and include it in your web page.

```html
<script src="path/to/saveba.js"></script>
```

The library will automatically do its work, so you don’t have to call any method. Saveba.js also exposes a global object called saveba, available as a property of the window object, in case you want to configure it or undo its changes via the destroy() method.
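Because the original source of each replaced image is kept in `data-saveba`, undoing the changes only requires copying that value back into `src`. The following is a hypothetical sketch of how a `destroy()`-style function could do it; it is not the library’s actual implementation, and the name `restoreImages` is invented here. To keep it runnable outside a browser, it accepts any array-like list of objects with a `src` property and a `dataset` object, which is the shape an `HTMLImageElement` has.

```javascript
// Hypothetical sketch of what a destroy()-style method could do; not Saveba.js's code.
// Each element is expected to expose `src` and `dataset`, like an HTMLImageElement.
function restoreImages(elements) {
  var restored = 0;
  for (var i = 0; i < elements.length; i++) {
    var original = elements[i].dataset.saveba;
    // Skip elements that were never replaced.
    if (original === undefined) {
      continue;
    }
    elements[i].src = original;
    // Drop the bookkeeping attribute once the source is back.
    delete elements[i].dataset.saveba;
    restored++;
  }
  return restored;
}

// Stand-ins for two <img> elements, only the first of which was replaced.
var fakeImages = [
  { src: 'placeholder.gif', dataset: { saveba: 'me.jpg' } },
  { src: 'logo.png', dataset: {} }
];

console.log(restoreImages(fakeImages)); // 1
console.log(fakeImages[0].src);         // me.jpg
```

In a page, the same function could be given `document.querySelectorAll('img[data-saveba]')`, since a NodeList is array-like and `delete` on a `dataset` property removes the corresponding `data-*` attribute.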

In the next section we’ll briefly discuss how to use the destroy() method; for the configuration, you can refer to the official documentation (I don’t want to duplicate content).

destroy()

In case you want to remove the changes performed by Saveba.js, you can invoke the destroy() method of the saveba object. For example, let’s say that the page on which the changes have been performed has a button with an ID of show-images-button. You can add a listener for its click event that restores all the resources, as shown below:

```html
<script>
  document.getElementById('show-images-button').addEventListener('click', function(event) {
    saveba.destroy();
  });
</script>
```

Supported Browsers

Saveba.js relies completely on the presence of the Network Information API, so it works in the same browsers that support this API, which are:

- Firefox 30+. Prior to Firefox 31, the browser supports the old version of the API. Starting with Firefox 31, the API is disabled on desktop.

- Chrome 38+, but it’s only available in Chrome for Android, Chrome for iOS, and ChromeOS

- Opera 25+

- Browser for Android 2.2+
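Since browsers expose different versions of the API (Firefox before version 31, for example, implements the old one), a script has to detect which version it is dealing with before it can classify the connection. The sketch below restates the detection and classification logic from the library code shown earlier as a single function that takes a navigator-like object, so it can be tried outside a browser. The name `classifyConnection` and its return values are assumptions for illustration; the thresholds mirror `slowMax` and `fastMin` from the defaults, in MB/s.

```javascript
// Hypothetical restatement of Saveba.js's detection and classification logic.
// nav: a navigator-like object; options: { slowMax, fastMin } in MB/s.
// Returns 'slow', 'average', 'fast', or null when the API is unavailable.
function classifyConnection(nav, options) {
  var connection = nav.connection || nav.mozConnection || null;
  if (!connection) {
    return null; // API not supported: nothing can be decided
  }
  // The old specification exposed `metered` and `bandwidth`.
  if ('metered' in connection) {
    if (connection.metered || connection.bandwidth < options.slowMax) {
      return 'slow';
    }
    if (connection.bandwidth < options.fastMin) {
      return 'average';
    }
    return 'fast';
  }
  // The newer specification is classified by connection type alone.
  if (connection.type === 'bluetooth' || connection.type === 'cellular') {
    return 'slow';
  }
  // Like the library code, there is no 'average' class for the newer API.
  return 'fast';
}

var thresholds = { slowMax: 0.5, fastMin: 2 };

console.log(classifyConnection({}, thresholds)); // null
console.log(classifyConnection({ mozConnection: { metered: false, bandwidth: 1 } }, thresholds)); // average
console.log(classifyConnection({ connection: { type: 'cellular' } }, thresholds)); // slow
console.log(classifyConnection({ connection: { type: 'wifi' } }, thresholds)); // fast
```

In a browser, you would pass `window.navigator` and the thresholds you want to use.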

Demo

To see Saveba.js in action you can take a look at the live demo.

Conclusion

In this article I described some limitations of the current practices for optimizing a website that led me to create Saveba.js, a JavaScript library that, relying on the Network Information API, tries to save bandwidth for users with a slow connection by removing unnecessary resources. After introducing it, I explained how the library works and how you can use it on your website, although at the moment you really shouldn’t use it in production.

Once again, I want to stress that this is a heavily experimental library and the solution used is not bulletproof. Whether you like it or dislike it, I’d really love to know your opinion, so I invite you to comment.

I'm a (full-stack) web and app developer with more than 5 years' experience programming for the web using HTML, CSS, Sass, JavaScript, and PHP. I'm an expert in JavaScript and HTML5 APIs, but my interests include web security, accessibility, performance, and SEO. I'm also a regular writer for several networks, a speaker, and the author of the books jQuery in Action, third edition and Instant jQuery Selectors.