A Designer’s Guide to Fast Websites and Perceived Performance

Key Takeaways

- Faster websites improve SEO, boost conversions, and enhance user experience. This can be achieved through methods such as implementing a Content Delivery Network (CDN), prefetching resources, compressing images, and leveraging browser caching.

- Perceived performance, or how quickly the above-the-fold content renders, can make a website feel faster. This involves displaying vital content first and gradually filling the webpage with content, a method known as using a skeleton screen.

- The concept of occupied time versus unoccupied time plays a crucial role in user perception. Users prefer to be engaged with content rather than waiting for it to load, and every 1-second delay can result in a 7% reduction in conversions.

- Reducing the content required for loading above-the-fold content, prioritizing vital content, and inserting critical CSS in the head of the HTML document can significantly improve perceived performance. Heavy features such as large images, videos, unnecessary JavaScript, and animations should be minimized.

Faster websites do better across a whole variety of metrics. Speeding up a website improves SEO, so faster websites rank better and earn more click-throughs; it also boosts conversions and improves the user experience.

As a web designer you’ll want to do everything you possibly can to make your website faster, including implementing a CDN (Content Delivery Network), prefetching resources, compressing images, leveraging browser caching, and a bunch of other backend optimisations. However, there is also a way to make websites feel faster, even if the website already loads at lightning speed. It’s a technique called improving perceived performance.

Perceived Performance is how fast the above-the-fold content renders—this is the content that the users digest first, while the rest of the content (the below-the-fold content) is loading.

When you optimise a website for better perceived performance, you reap the same benefits as a website that is truly fast: site visitors stay longer, conversions increase, and the user experience improves overall. Applied on top of a site that is already fast, the boost in metrics is even greater. Let’s look at the top strategies to implement when dealing with impatient users. Many of these strategies are based on human psychology and on how users interpret and evaluate content.

Occupied vs. Unoccupied Time

Occupied time is when you’re actually doing something, while unoccupied time is when you’re waiting to do something. For example, if you’re standing at the airport baggage claim waiting for your luggage, the wait is going to feel really long and maybe even torturous. The difference between waiting for baggage and waiting for a website to load is that you have to collect your baggage, whereas website visitors can choose (and will choose) to try another website.

Yes, users are impatient. Every 1-second delay results in a 7% reduction in conversions, according to a Kissmetrics study.

———

Editor’s note:

Interesting ideas about occupied time. See, I become quite stressed when ideas and tasks build up, so I use up unoccupied time almost religiously. If I’m walking down the street, I consciously brainstorm. If I’m waiting for the microwave to beep three times, I’m standing there reorganizing my Wunderlist. I thought I was crazy, but this reaffirms that users simply hate unoccupied time.

———

Skeleton Screens

Progress bars and spinners are useful for letting the user know that something is happening, but only on a smaller scale (like when a file is uploading). On a larger scale, like when an entire screen is loading, a spinner is nothing more than an awkward reminder that you’re making the user wait, and since most browsers display a spinner in the tab anyway, it’s relatively useless.

An effective way to make a website feel faster is to use a skeleton screen. A skeleton screen fills the webpage with content gradually—this shows the user that something is actually happening, and also gives the user something to focus on (something is better than nothing).

Luke Wroblewski, Product Designer at Google, conducted a study that compared user happiness with and without using a loading spinner. Soon after implementing the spinner, the team received complaints that the spinner-version of the app was taking longer to load than the non-spinner version that used the skeleton screen technique (which is the default behaviour of the browser, btw). In reality, both versions took the same amount of time to load, but the perceived loading times were higher when content wasn’t being displayed immediately.

Since new content is displayed on the screen bit-by-bit, it creates a feeling of continuous progress, and the user has content to digest while the rest of it is rendering/downloading. How to implement, you ask? Just don’t use a loading icon/spinner.
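On pages that fetch their content with JavaScript, the same idea can be applied on purpose by showing grey placeholder shapes where the content will land. What follows is only a rough sketch under stated assumptions, not the setup from the study above: the `skeleton-line` class, the `post` id and the one-second `setTimeout` (standing in for a real network request) are all made up for illustration.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Skeleton screen sketch</title>
  <style>
    /* Hypothetical class names, chosen for this example only */
    .skeleton-line {
      height: 1em;
      margin: 0.5em 0;
      border-radius: 4px;
      background: #e0e0e0;
    }
    .skeleton-line.short { width: 60%; }
  </style>
</head>
<body>
  <!-- Placeholder shown immediately; aria-busy tells assistive tech that content is on its way -->
  <article id="post" aria-busy="true">
    <div class="skeleton-line"></div>
    <div class="skeleton-line"></div>
    <div class="skeleton-line short"></div>
  </article>

  <script>
    // Stand-in for a real network request: swap in the content after one second
    setTimeout(function () {
      var post = document.getElementById('post');
      post.innerHTML = '<h1>Article title</h1><p>Article text goes here.</p>';
      post.setAttribute('aria-busy', 'false');
    }, 1000);
  </script>
</body>
</html>
```

The placeholders are plain static blocks on purpose; an animated shimmer is a common addition, but it is also one more thing for the browser to do above the fold.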

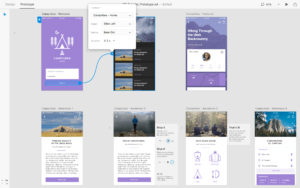

Vital Content Needs Priority Loading

When the most important content on the webpage loads first, the user has something to occupy their time, and it no longer matters if the rest of the webpage is still loading. Suppose the user wants to read an article on a blog, yet non-essential content like ads, background videos and “other content you might enjoy” sections appear first. It’s easy to see why that would give the impression of a slow site: there’s a huge difference between delivering a section of content and delivering the section that the user wants to see.

A Nielsen Norman Group study confirmed that loading vital content first (and quickly) leads to higher user engagement: users who saw what they wanted right away spent 20% of their time with it, whereas users who faced an 8-second delay spent only 1% of their time with it. If the vital content is held up by a loading delay, engagement drops significantly.
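In markup, one way to give vital content priority is to put it first in the source and tell the browser that everything else can wait. Here is a minimal sketch, assuming a blog post with a related-content sidebar; the file names (`related-posts.js`, `related-thumbnail.jpg`, `ad.html`) are hypothetical.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Prioritising the article</title>
  <!-- defer: the script downloads in parallel and runs only after the HTML is parsed -->
  <script src="related-posts.js" defer></script>
</head>
<body>
  <!-- The content the visitor came for is first in the source -->
  <main>
    <h1>Article title</h1>
    <p>Article text goes here.</p>
  </main>

  <!-- Non-essential extras follow; their media is fetched only when the user scrolls near it -->
  <aside>
    <h2>Other content you might enjoy</h2>
    <img src="related-thumbnail.jpg" alt="Related article" width="320" height="180" loading="lazy">
    <iframe src="ad.html" title="Advertisement" width="300" height="250" loading="lazy"></iframe>
  </aside>
</body>
</html>
```

The `defer` and `loading="lazy"` attributes are standard HTML, but support for `loading` on iframes arrived later than for images, so treat it as a progressive enhancement.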

Heavy Features Above-the-Fold

Perceived performance ultimately comes down to reducing how much has to load before your above-the-fold content can render. Here are the worst offenders:

- Animations and fancy transitions

- JavaScript that adds little value/unnecessary functionality

- Heavy media (large images and videos, especially when high-res/uncompressed)

- Abstract bitmap visuals that can easily be accomplished with SVG/CSS

It’s not to say that any of these things are bad, but if you can’t think of a real reason to include them, you probably don’t need them, and in that case they’re probably doing your website harm.
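To illustrate the last item in the list, here is a small sketch of a hero banner whose background and decorative edge are drawn by the browser rather than downloaded as bitmaps. The colours, the `hero` class and the wave shape are arbitrary choices for this example.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>CSS and SVG instead of bitmaps</title>
  <style>
    /* A gradient drawn by the browser instead of a downloaded background image */
    .hero {
      padding: 4em 1em 0;
      color: #fff;
      background: linear-gradient(135deg, #2b5876, #4e4376);
    }
    .hero svg { display: block; }
  </style>
</head>
<body>
  <header class="hero">
    <h1>Page heading</h1>
    <!-- A decorative wave as inline SVG: no extra request, and sharp at any size -->
    <svg viewBox="0 0 100 10" width="100%" height="40" preserveAspectRatio="none" aria-hidden="true">
      <path d="M0 5 Q 25 0 50 5 T 100 5 V 10 H 0 Z" fill="#fff"/>
    </svg>
  </header>
</body>
</html>
```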

Inserting Critical CSS

Warning: dev-speak. Although, since many designers are also developers these days, and since it’s always useful for designers to know a little about the development process, you should read this section too. A little more knowledge never hurt anybody.

So, we already know that heavy features, or at least, too many heavy features above-the-fold don’t exactly do wonders for perceived performance. Really, the priority should be on the (CSS) styles that display the vital content.

But even then, an external CSS file linked in the <head> blocks rendering: the browser has to download and parse it before it paints anything. That’s why it’s useful to place the styles that render vital above-the-fold content between <style> tags in the <head> of the HTML document, where they arrive with the HTML itself and can be applied right away. We call these styles critical CSS.

It would look something like this:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Document</title>
  <!-- critical CSS here -->
  <style>
    html { /* styles here */ }
  </style>
  <!-- below the fold CSS here -->
  <link rel="stylesheet" href="//cdn.example.com/stylesheet.css">
</head>
<body></body>
</html>
```
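One caveat with the markup above: a regular `<link rel="stylesheet">` in the `<head>` still blocks rendering while it downloads, even if it only holds below-the-fold styles. A widely used workaround (not something this article prescribes) is to preload the file and switch it to a stylesheet once it has arrived. The sketch below reuses the placeholder URL from the example above.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Document</title>
  <!-- critical CSS here -->
  <style>
    html { /* styles here */ }
  </style>
  <!-- below the fold CSS: downloaded without blocking rendering,
       then applied as a stylesheet once it has loaded -->
  <link rel="preload" as="style" href="//cdn.example.com/stylesheet.css"
        onload="this.onload=null;this.rel='stylesheet'">
  <!-- Fallback for visitors with JavaScript disabled -->
  <noscript>
    <link rel="stylesheet" href="//cdn.example.com/stylesheet.css">
  </noscript>
</head>
<body></body>
</html>
```

The trade-off is that below-the-fold content may briefly appear unstyled if the user scrolls before the file arrives, so the inline critical CSS needs to cover everything visible on first paint.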

Bottom Line: Why Perceived Performance Matters

Bottom line: faster websites boost conversions. On the internet, where content is easily accessible and users are finicky and impatient, slower sites will lose revenue. Psychology is extremely important in web design, so anything you can do to increase the mere perception of your site speed will contribute to these higher metrics, and combined with the techniques used to actually make websites load faster, you can make your websites stand out from the crowd.

Curious how to measure web performance overall?

Pingdom Tools will tell you how long it takes for your website to load and suggest a number of tweaks to improve the TTFB (Time to First Byte), although you can also use Google’s very own PageSpeed tool. Both measure how long it takes for the browser to receive the very first byte of the response from the server and, unsurprisingly, this is important for SEO. It also explains which images could be compressed further, which resources should be served from a CDN and, as mentioned above, which critical CSS could be inserted in the <head> (also called internal CSS, by the way).
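If you’d like a rough TTFB figure straight from your own browser, the standard Navigation Timing API exposes it. This is a minimal sketch, separate from how Pingdom or PageSpeed take their measurements; it simply logs the numbers to the developer console.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Measuring TTFB</title>
</head>
<body>
  <script>
    // Navigation Timing: one entry describing how the current page was loaded
    var entries = performance.getEntriesByType('navigation');
    if (entries.length > 0) {
      var nav = entries[0];
      // responseStart is when the first byte of the response arrived,
      // in milliseconds since the navigation started
      console.log('TTFB: ' + Math.round(nav.responseStart) + ' ms');
      // Time between sending the request and the first byte,
      // leaving out redirects, DNS lookup and connection setup
      console.log('Request to first byte: ' + Math.round(nav.responseStart - nav.requestStart) + ' ms');
    }
  </script>
</body>
</html>
```

The numbers are only meaningful when the page is served over HTTP(S); opened from a local file, they will not reflect a real server response.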

Frequently Asked Questions on Performance-Driven Web Design

What is the importance of performance-driven web design?

Performance-driven web design is crucial in today’s digital age as it directly impacts user experience and engagement. A fast, responsive, and efficient website not only enhances user satisfaction but also improves search engine rankings. It reduces bounce rates and increases the time spent on the site, leading to higher conversion rates. Moreover, it ensures that your website is accessible to users across various devices and platforms, thereby expanding your reach and visibility.

How does performance-driven web design impact SEO?

Performance-driven web design plays a significant role in SEO. Search engines like Google prioritize websites that load quickly and provide a seamless user experience. A well-optimized, fast-loading website is more likely to rank higher in search engine results, leading to increased visibility and organic traffic. Additionally, performance-driven design also reduces bounce rates, a factor that search engines consider when ranking websites.

What are the key elements of performance-driven web design?

The key elements of performance-driven web design include fast loading times, mobile optimization, user-friendly navigation, and high-quality content. Other important factors include efficient use of multimedia, minimalistic design, and effective use of calls to action. These elements ensure a seamless user experience, leading to higher engagement and conversion rates.

How can I improve the speed of my website?

There are several ways to improve your website’s speed. This includes optimizing images and videos, minimizing HTTP requests, enabling browser caching, reducing server response time, and using a content delivery network (CDN). Additionally, minimizing the use of plugins and scripts, and using efficient coding practices can also significantly improve your website’s loading speed.
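Caching and server response time are configured on the server, but a few of these techniques can be expressed directly in markup. The following sketch assumes a CDN at `https://cdn.example.com`, a likely next page at `/pricing.html`, and three pre-generated sizes of one photo; all of those names are hypothetical.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Resource hints</title>
  <!-- Open the connection to the CDN early, before any file from it is requested -->
  <link rel="preconnect" href="https://cdn.example.com">
  <!-- Fetch a likely next page at low priority while the browser is idle -->
  <link rel="prefetch" href="/pricing.html">
</head>
<body>
  <!-- srcset and sizes let the browser pick the smallest file that still looks sharp;
       width and height reserve the space so the layout does not jump -->
  <img src="photo-800.jpg"
       srcset="photo-400.jpg 400w, photo-800.jpg 800w, photo-1600.jpg 1600w"
       sizes="(max-width: 600px) 100vw, 800px"
       alt="Description of the photo" width="800" height="450">
</body>
</html>
```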

What is the role of mobile optimization in performance-driven web design?

Mobile optimization is a critical aspect of performance-driven web design. With the increasing use of mobile devices to access the internet, it’s essential to ensure that your website is mobile-friendly. A mobile-optimized website not only provides a better user experience but also improves your website’s search engine rankings, as Google prioritizes mobile-friendly websites.

How does user-friendly navigation contribute to performance-driven web design?

User-friendly navigation is a key component of performance-driven web design. It allows users to easily find the information they’re looking for, thereby enhancing user experience and engagement. A well-structured, intuitive navigation system can reduce bounce rates and increase the time spent on your website, leading to higher conversion rates.

How does the use of multimedia affect website performance?

While multimedia elements like images, videos, and animations can enhance the visual appeal of your website, they can also slow down your website if not properly optimized. Therefore, it’s important to use multimedia judiciously and ensure that they are optimized for web use to maintain fast loading times.

What is the importance of minimalistic design in performance-driven web design?

Minimalistic design is a popular trend in performance-driven web design. It focuses on simplicity and functionality, eliminating unnecessary elements that can clutter the website and slow down loading times. A clean, simple design can improve user experience, increase website speed, and enhance overall website performance.

How can effective use of calls to action (CTAs) improve website performance?

Effective use of CTAs can significantly improve website performance by guiding users towards desired actions, such as making a purchase, signing up for a newsletter, or downloading a resource. This can increase engagement and conversion rates, thereby enhancing the overall performance of your website.

What are some common mistakes to avoid in performance-driven web design?

Some common mistakes to avoid in performance-driven web design include not optimizing for mobile, using too many plugins or scripts, not optimizing images and videos, having a complex navigation system, and not using CTAs effectively. Avoiding these mistakes can significantly improve your website’s performance and user experience.

Marc is a copywriter who runs the MSC Agency, a copywriting and marketing agency. An expert in business and marketing, he helps businesses of all sizes get the most bang for their ad bucks.
