Distributed App Deployment with Kubernetes & MongoDB Atlas

Key Takeaways

- Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications. Used with MongoDB Atlas, it can help reduce the amount of work your team will have to do when deploying your application.

- MongoDB Atlas, when used with Kubernetes, provides a persistent data-store for your application data without the need to manage the actual database software, replication, upgrades, or monitoring.

- The combination of Kubernetes and MongoDB Atlas allows for quick production and deployment of applications without much concern over infrastructure management.

- The Google Kubernetes Engine, built into the Google Cloud Platform, can be used to quickly deploy containerized applications.

- Atlas is available across most regions on GCP, meaning that no matter where your application lives, you can keep your data close by or distributed across the cloud.

This article was originally published on MongoDB. Thank you for supporting the partners who make SitePoint possible.

Storytelling is one of the parts of being a Developer Advocate that I enjoy. Sometimes the stories are about the special moments when the team comes together to keep a system running or build it faster. But there are also less-than-glorious tales to be told about the software deployments I’ve been involved in. And in situations where we needed to deploy several times a day, those tales turn into nightmares.

For some time, I worked at a company that believed that deploying to production several times a day was ideal for project velocity. Our team was working to ensure that advertising software across our media platform was always being updated and released. One of the issues was a lack of real automation in the process of applying new code to our application servers.

What both ops and development teams had in common was a desire for improved ease and agility around application and configuration deployments. In this article, I’ll present some of my experiences and cover how MongoDB Atlas and Kubernetes can be leveraged together to simplify the process of deploying and managing applications and their underlying dependencies.

Let’s talk about how a typical software deployment unfolded:

- The developer would send in a ticket asking for the deployment

- The developer and I would agree upon a time to deploy the latest software revision

- We would modify an existing bash script with the appropriate git repository version info

- We’d need to manually back up the old deployment

- We’d need to manually create a backup of our current database

- We’d watch the bash script perform this “Deploy” on about six servers in parallel

- Wave a dead chicken over my keyboard

Some of these deployments would fail, requiring a return to the previous version of the application code. Rolling back to a prior version meant manually reverting the repository to the older version, performing manual database restores, and finally confirming with the team that used this system that all was working properly. It was a real mess and I really wasn’t in a position to change it.

I eventually moved into a position which gave me greater visibility into what other teams of developers, specifically those in the open source space, were doing for software deployments. I noticed that — surprise! — people were no longer interested in doing the same work over and over again.

Developers and their supporting ops teams have been given keys to a whole new world in the last few years by utilizing containers and automation platforms. Rather than doing manual work required to produce the environment that your app will live in, you can deploy applications quickly thanks to tools like Kubernetes.

What’s Kubernetes?

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications. Kubernetes can help reduce the amount of work your team will have to do when deploying your application. Along with MongoDB Atlas, you can build scalable and resilient applications that stand up to high traffic or can easily be scaled down to reduce costs. Kubernetes runs just about anywhere and can use almost any infrastructure. If you’re using a public cloud, a hybrid cloud or even a bare metal solution, you can leverage Kubernetes to quickly deploy and scale your applications.

The Google Kubernetes Engine is built into the Google Cloud Platform and helps you quickly deploy your containerized applications.

For the purposes of this tutorial, I will upload our image to GCP and then deploy to a Kubernetes cluster so I can quickly scale up or down our application as needed. When I create new versions of our app or make incremental changes, I can simply create a new image and deploy again with Kubernetes.

Why Atlas with Kubernetes?

By using these tools together for your MongoDB Application, you can quickly produce and deploy applications without worrying much about infrastructure management. Atlas provides you with a persistent data-store for your application data without the need to manage the actual database software, replication, upgrades, or monitoring. All of these features are delivered out of the box, allowing you to build and then deploy quickly.

In this tutorial, I will build a MongoDB Atlas cluster where our data will live for a simple Node.js application. I will then turn the app and configuration data for Atlas into a container-ready image with Docker.

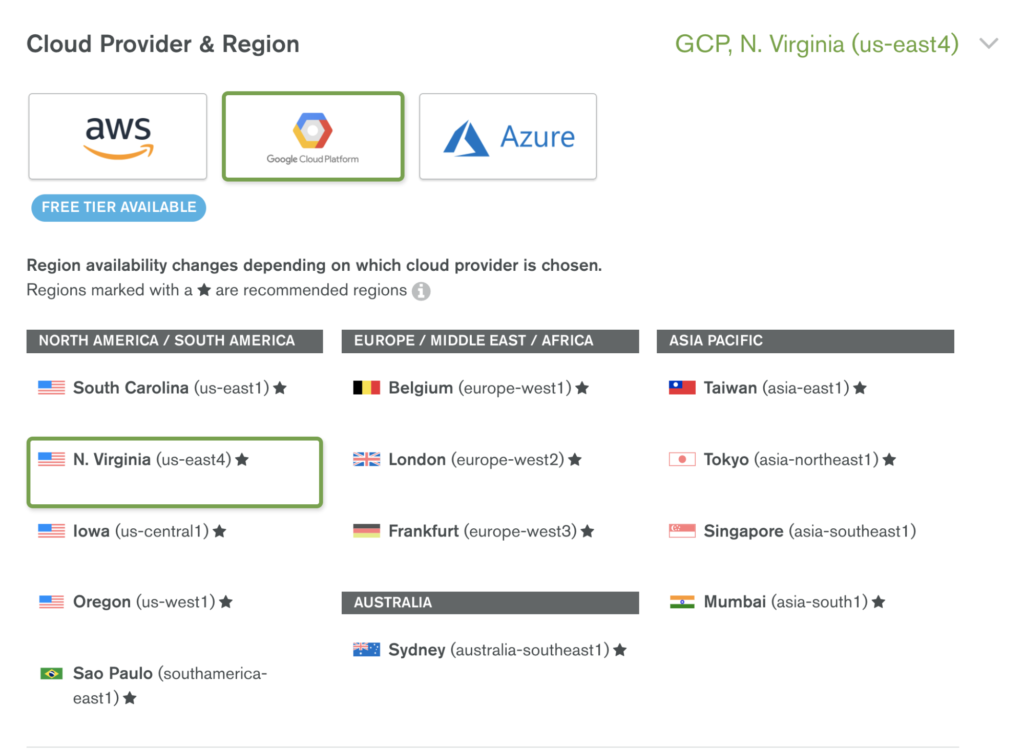

MongoDB Atlas is available across most regions on GCP so no matter where your application lives, you can keep your data close by (or distributed) across the cloud.

Requirements

To follow along with this tutorial, you will need the following:

- Google Cloud Platform Account (billing enabled or credits)

- MongoDB Atlas Account (M10+ dedicated cluster)

- Docker

- Node.js

- Kubernetes

- Git

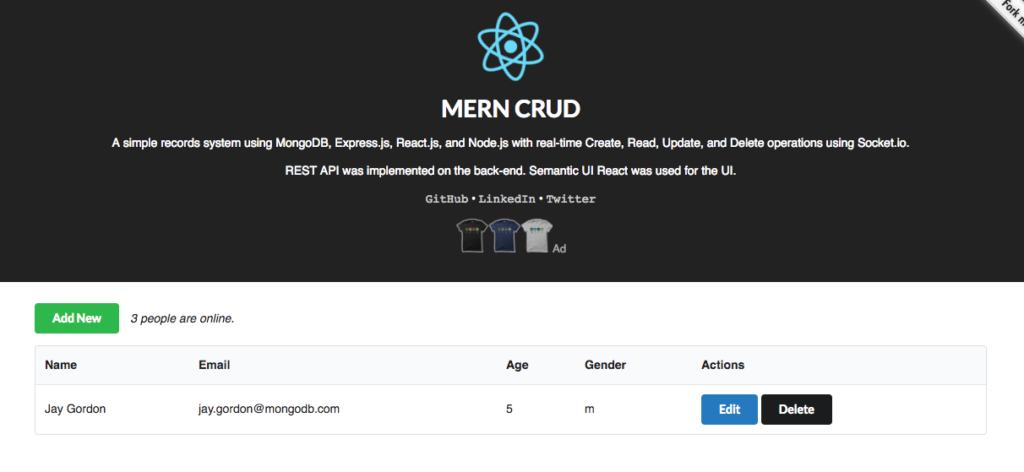

First, I will download the repository for the code I will use. In this case, it’s a basic record keeping app using MongoDB, Express, React, and Node (MERN).

bash-3.2$ git clone git@github.com:cefjoeii/mern-crud.git

Cloning into 'mern-crud'...

remote: Counting objects: 326, done.

remote: Total 326 (delta 0), reused 0 (delta 0), pack-reused 326

Receiving objects: 100% (326/326), 3.26 MiB | 2.40 MiB/s, done.

Resolving deltas: 100% (137/137), done.

cd mern-crud

Next, I will run npm install to get all the required npm packages for working with our app:

bash-3.2$ npm install

> uws@9.14.0 install /Users/jaygordon/work/mern-crud/node_modules/uws

> node-gyp rebuild > build_log.txt 2>&1 || exit 0

Selecting Your GCP Region for Atlas

Each GCP region includes a set number of independent zones. Each zone has power, cooling, networking, and control planes that are isolated from other zones. For regions that have at least three zones (3Z), Atlas deploys clusters across three zones. For regions that only have two zones (2Z), Atlas deploys clusters across two zones.

The Atlas Add New Cluster form marks regions that support 3Z clusters as Recommended, as they provide higher availability. If your preferred region only has two zones, consider enabling cross-region replication and placing a replica set member in another region to increase the likelihood that your cluster will be available during partial region outages.

The number of zones in a region has no effect on the number of MongoDB nodes Atlas can deploy. MongoDB Atlas clusters are always made of replica sets with a minimum of three MongoDB nodes.

For general information on GCP regions and zones, see the Google documentation on regions and zones.

Create Cluster and Add a User

In the provided image below you can see I have selected the Cloud Provider “Google Cloud Platform.” Next, I selected an instance size, in this case an M10. Deployments using M10 instances are ideal for development. If I were to take this application to production immediately, I may want to consider using an M30 deployment. Since this is a demo, an M10 is sufficient for our application. For a full view of all of the cluster sizes, check out the Atlas pricing page. Once I’ve completed these steps, I can click the “Confirm & Deploy” button. Atlas will spin up my deployment automatically in a few minutes.

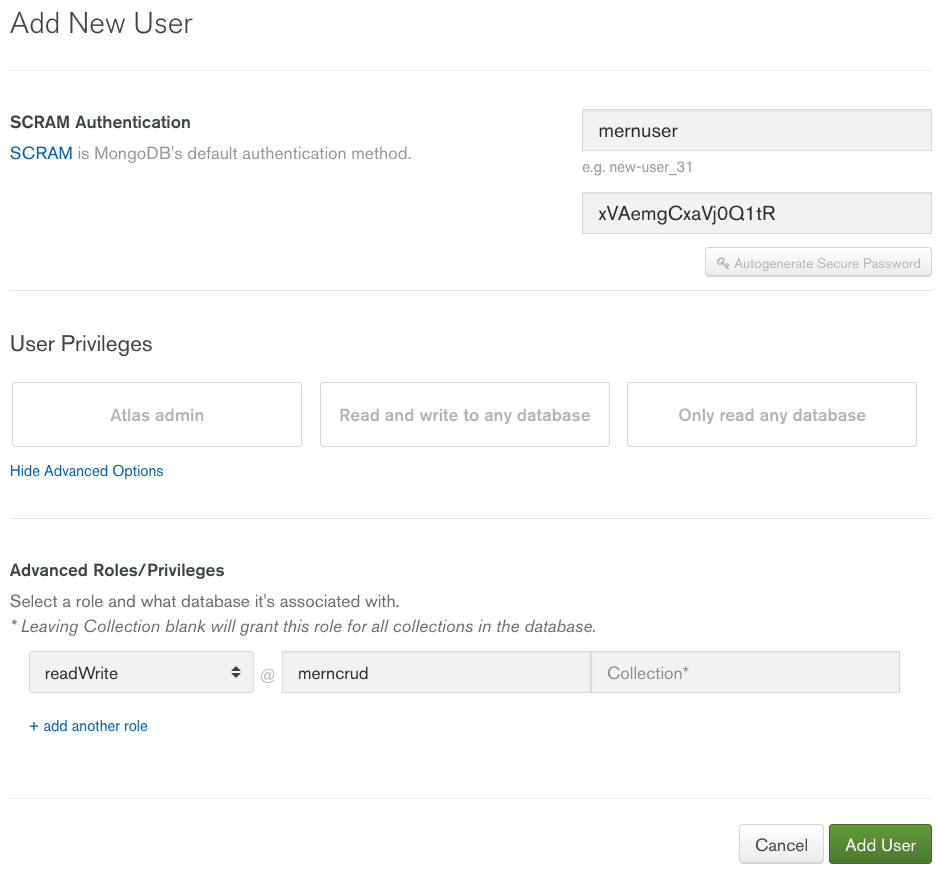

Let’s create a username and password for our database that our Kubernetes deployed application will use to access MongoDB.

- Click “Security” at the top of the page.

- Click “MongoDB Users”

- Click “Add New User”

- Click “Show Advanced Options”

- We’ll then add a user “mernuser” for our mern-crud app that only has access to a database named “mern-crud” and give it a complex password. We’ll specify readWrite privileges for this user.

- Click “Add User”

Your database is now created and your user is added. You still need your connection string, and you need to whitelist network access to the cluster.

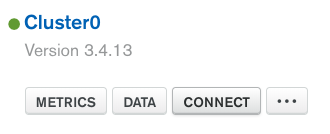

Connection String

Get your connection string by clicking “Clusters” and then clicking “CONNECT” next to your cluster details in your Atlas admin panel. After selecting connect, you are provided several options to use to connect to your cluster. Click “connect your application.”

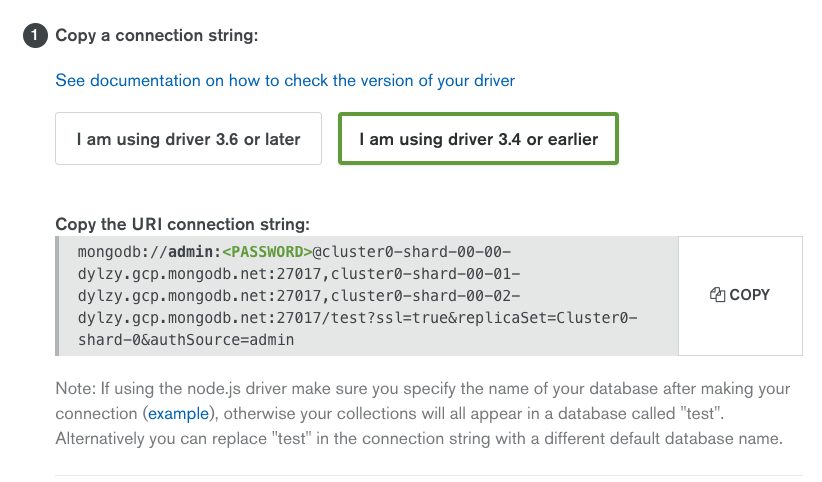

Options for the 3.6 or the 3.4 versions of the MongoDB driver are given. I built mine using the 3.4 driver, so I will just select the connection string for this version.

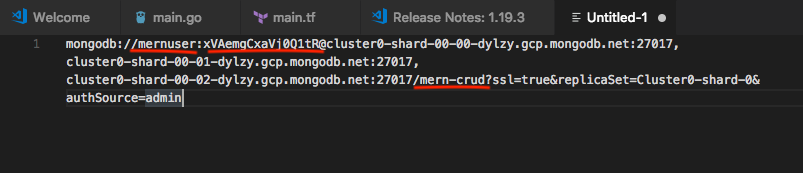

I will typically paste this into an editor and then modify the info to match my application credentials and my database name.

I will now add this to the app’s database configuration file and save it.
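As a rough illustration of that editing step, the sketch below assembles a standard (non-SRV) connection string from its parts and percent-encodes the credentials, which matters when a complex password contains characters such as "@" or ":". The function name `buildMongoUri`, the password, the host names, and the option values are all hypothetical placeholders; use the string Atlas gives you for your own cluster.

```javascript
// Build a standard (non-SRV) MongoDB connection string from its parts.
// Every value passed in below is a hypothetical placeholder, not a real cluster.
function buildMongoUri({ user, password, hosts, database, options = {} }) {
  // Percent-encode credentials so characters like "@", ":" or "/" in a
  // complex password do not break URI parsing.
  const credentials = `${encodeURIComponent(user)}:${encodeURIComponent(password)}`;
  const query = Object.entries(options)
    .map(([key, value]) => `${encodeURIComponent(key)}=${encodeURIComponent(value)}`)
    .join("&");
  const base = `mongodb://${credentials}@${hosts.join(",")}/${encodeURIComponent(database)}`;
  return query ? `${base}?${query}` : base;
}

const uri = buildMongoUri({
  user: "mernuser",
  password: "p@ss:word/1", // placeholder password with characters that need encoding
  hosts: ["host-00.example.net:27017", "host-01.example.net:27017"], // placeholder hosts
  database: "mern-crud",
  options: { ssl: "true", authSource: "admin" },
});

console.log(uri);
```

Keeping the password out of source control (for example, by reading it from an environment variable before calling the function) is a common choice, though the original app simply stores the string in its configuration file.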

Next, I will package this up into an image with Docker and ship it to Google Kubernetes Engine!

Docker and Google Kubernetes Engine

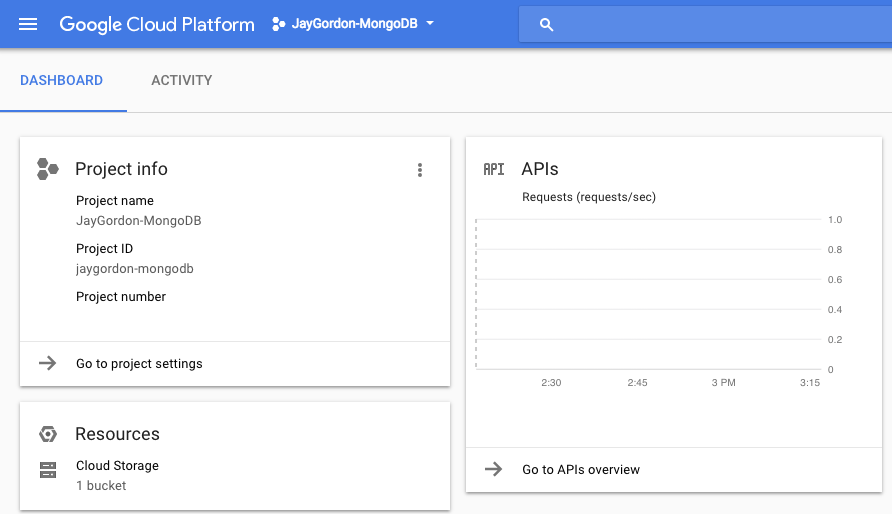

Get started by creating an account at Google Cloud, then follow the quickstart to create a Google Kubernetes Project.

Once your project is created, you can find it within the Google Cloud Platform control panel.

It’s time to create a container image on your local workstation.

Set the PROJECT_ID environment variable in your shell to your project ID (mine is jaygordon-mongodb, so substitute your own), then point gcloud at that project and a default compute zone:

export PROJECT_ID="jaygordon-mongodb"

gcloud config set project $PROJECT_ID

gcloud config set compute/zone us-central1-b
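The image name used in the following steps is derived from PROJECT_ID, so an unset variable silently produces a malformed reference such as gcr.io//mern-crud:v1. The small sketch below shows one way to guard against that; the helper name `imageReference` and the "my-project" fallback are assumptions made for illustration only.

```javascript
// Build a Container Registry image reference: gcr.io/<project>/<name>:<tag>.
// Rejects empty parts so an unset PROJECT_ID is caught before any build or push.
function imageReference(projectId, name, tag) {
  for (const [label, value] of Object.entries({ projectId, name, tag })) {
    if (typeof value !== "string" || value.trim() === "") {
      throw new Error(`${label} must be a non-empty string`);
    }
  }
  return `gcr.io/${projectId}/${name}:${tag}`;
}

// Falls back to a placeholder so the sketch runs even without PROJECT_ID set.
const projectId = process.env.PROJECT_ID || "my-project";
console.log(imageReference(projectId, "mern-crud", "v1"));
```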

Next, place a Dockerfile in the root of your repository with the following:

FROM node:boron

RUN mkdir -p /usr/src/app

WORKDIR /usr/src/app

COPY . /usr/src/app

EXPOSE 3000

CMD ["npm", "start"]

To build the container image of this application and tag it for uploading, run the following command:

bash-3.2$ docker build -t gcr.io/${PROJECT_ID}/mern-crud:v1 .

Sending build context to Docker daemon 40.66MB

Successfully built b8c5be5def8f

Successfully tagged gcr.io/jaygordon-mongodb/mern-crud:v1

Upload the container image to the Container Registry so we can deploy it:

bash-3.2$ gcloud docker -- push gcr.io/${PROJECT_ID}/mern-crud:v1

The push refers to repository [gcr.io/jaygordon-mongodb/mern-crud]

Next, I will test it locally on my workstation to make sure the app loads:

docker run --rm -p 3000:3000 gcr.io/${PROJECT_ID}/mern-crud:v1

> mern-crud@0.1.0 start /usr/src/app

> node server

Listening on port 3000

Great — pointing my browser to http://localhost:3000 brings me to the site. Now it’s time to create a Kubernetes cluster and deploy our application to it.

Build Your Cluster With Google Kubernetes Engine

I will be using the Google Cloud Shell within the Google Cloud control panel to manage my deployment. The Cloud Shell comes with all the required applications and tools installed, so I can deploy the Docker image I uploaded to the image registry without installing any additional software on my local workstation.

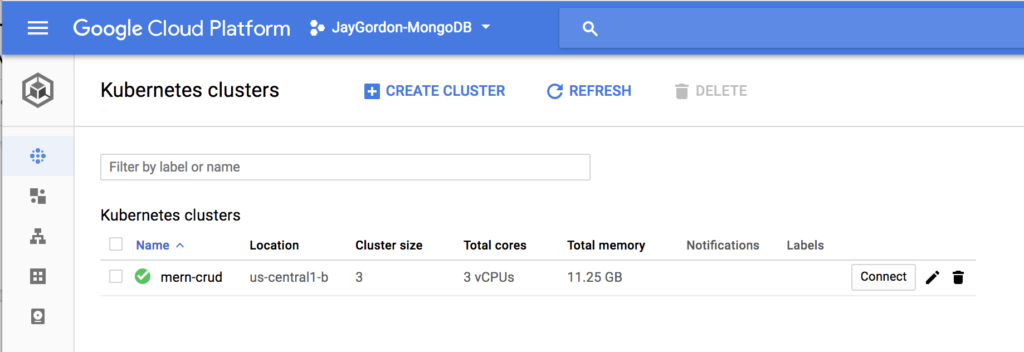

Now I will create the Kubernetes cluster that the image will be deployed to, which brings our application closer to production. I will include three nodes to help ensure uptime of our app.

Set up our environment first:

export PROJECT_ID="jaygordon-mongodb"

gcloud config set project $PROJECT_ID

gcloud config set compute/zone us-central1-b

Launch the cluster:

gcloud container clusters create mern-crud --num-nodes=3

After a few minutes, the console will respond with the following output, and a three-node Kubernetes cluster will be visible in your control panel:

Creating cluster mern-crud...done.

Created [https://container.googleapis.com/v1/projects/jaygordon-mongodb/zones/us-central1-b/clusters/mern-crud].

To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/us-central1-b/mern-crud?project=jaygordon-mongodb

kubeconfig entry generated for mern-crud.

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

mern-crud us-central1-b 1.8.7-gke.1 35.225.138.208 n1-standard-1 1.8.7-gke.1 3 RUNNING

Just a few more steps left. Now we’ll deploy our app with kubectl to our cluster from the Google Cloud Shell:

kubectl run mern-crud --image=gcr.io/${PROJECT_ID}/mern-crud:v1 --port 3000

The output when completed should be:

jay_gordon@jaygordon-mongodb:~$ kubectl run mern-crud --image=gcr.io/${PROJECT_ID}/mern-crud:v1 --port 3000

deployment "mern-crud" created
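For a more declarative alternative to kubectl run, the same deployment can be described as a manifest. Newer kubectl releases create a single Pod from kubectl run rather than a Deployment, so a manifest may also be the more portable route. The sketch below generates one as JSON, which kubectl accepts alongside YAML. The helper name `buildDeployment`, the `app` label, and the "my-project" fallback are assumptions, not something the original setup uses.

```javascript
// Build a minimal apps/v1 Deployment manifest as a plain object.
// The "app" label is an assumed convention; any label works as long as the
// selector and the pod template agree.
function buildDeployment({ name, image, port, replicas = 1 }) {
  const labels = { app: name };
  return {
    apiVersion: "apps/v1",
    kind: "Deployment",
    metadata: { name, labels },
    spec: {
      replicas,
      selector: { matchLabels: labels },
      template: {
        metadata: { labels },
        spec: {
          containers: [{ name, image, ports: [{ containerPort: port }] }],
        },
      },
    },
  };
}

// Placeholder project ID so the sketch runs without PROJECT_ID set.
const projectId = process.env.PROJECT_ID || "my-project";
const deployment = buildDeployment({
  name: "mern-crud",
  image: `gcr.io/${projectId}/mern-crud:v1`,
  port: 3000,
});

console.log(JSON.stringify(deployment, null, 2));
```

Saving the output to a file (say, deployment.json, a hypothetical name) and running kubectl apply -f deployment.json should produce an equivalent deployment, and the file can be kept in version control next to the app.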

Now review the application deployment status:

jay_gordon@jaygordon-mongodb:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

mern-crud-6b96b59dfd-4kqrr 1/1 Running 0 1m

jay_gordon@jaygordon-mongodb:~$

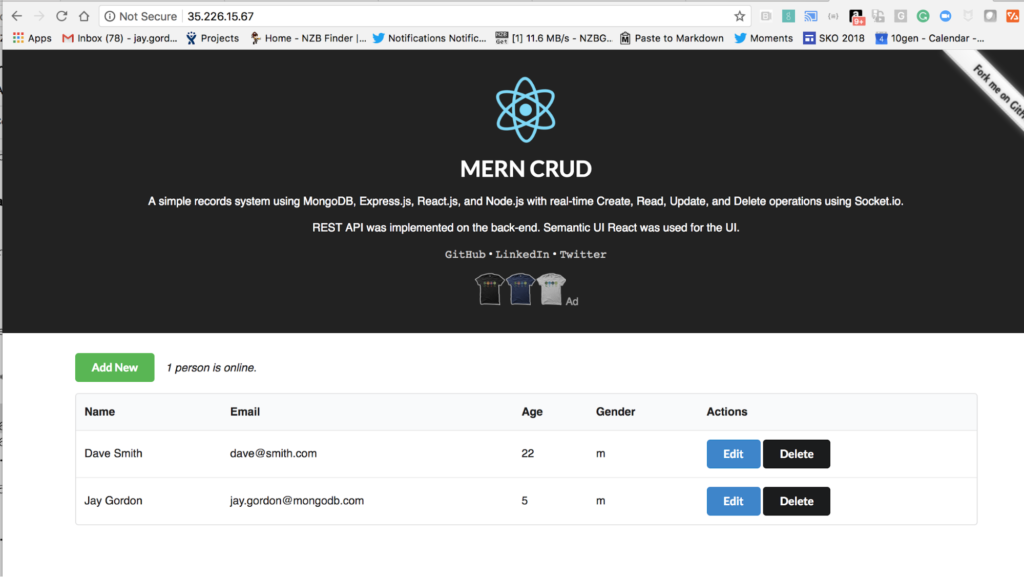

We’ll create a load balancer in front of the deployment so our application can be reached from the web:

jay_gordon@jaygordon-mongodb:~$ kubectl expose deployment mern-crud --type=LoadBalancer --port 80 --target-port 3000

service "mern-crud" exposed
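The kubectl expose command above creates a Service object behind the scenes. As a sketch of what that object roughly looks like, the snippet below builds a comparable manifest as JSON. The helper name `buildService` and the selector label passed to it are assumptions; the selector has to match whatever labels your pods actually carry (kubectl get pods --show-labels will list them).

```javascript
// Build a minimal v1 Service manifest of type LoadBalancer.
// "selector" must match the labels on the pods that should receive traffic.
function buildService({ name, selector, port, targetPort }) {
  return {
    apiVersion: "v1",
    kind: "Service",
    metadata: { name },
    spec: {
      type: "LoadBalancer",
      selector,
      // Traffic arriving on "port" is forwarded to "targetPort" on the pods.
      ports: [{ protocol: "TCP", port, targetPort }],
    },
  };
}

const service = buildService({
  name: "mern-crud",
  selector: { app: "mern-crud" }, // assumed label; adjust to your pods
  port: 80,
  targetPort: 3000,
});

console.log(JSON.stringify(service, null, 2));
```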

Now get the IP of the load balancer so that, if needed, it can be bound to a DNS name and you can go live!

jay_gordon@jaygordon-mongodb:~$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.27.240.1 <none> 443/TCP 11m

mern-crud LoadBalancer 10.27.243.208 35.226.15.67 80:30684/TCP 2m

A quick curl test shows me that my app is online!

bash-3.2$ curl -v 35.226.15.67

* Rebuilt URL to: 35.226.15.67/

* Trying 35.226.15.67...

* TCP_NODELAY set

* Connected to 35.226.15.67 (35.226.15.67) port 80 (#0)

> GET / HTTP/1.1

> Host: 35.226.15.67

> User-Agent: curl/7.54.0

> Accept: */*

>

< HTTP/1.1 200 OK

< X-Powered-By: Express

I have added some test data and, as we can see, it shows up in my application, which is deployed via Kubernetes to GCP and stores its persistent data in MongoDB Atlas.

When I am done working with the Kubernetes cluster, I can destroy it easily:

gcloud container clusters delete mern-crud

What’s Next?

You’ve now got all the tools in front of you to build something HUGE with MongoDB Atlas and Kubernetes.

Check out the rest of the Google Kubernetes Engine’s tutorials for more information on how to build applications with Kubernetes. For more information on MongoDB Atlas, see the MongoDB Atlas documentation.

Have more questions? Join the MongoDB Community Slack!

Continue to learn via high quality, technical talks, workshops, and hands-on tutorials. Join us at MongoDB World.

Frequently Asked Questions (FAQs) on Modern Distributed App Deployment with Kubernetes & MongoDB Atlas

How does Kubernetes work with MongoDB Atlas for app deployment?

Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. When used with MongoDB Atlas, a fully managed cloud database service, it provides a powerful, scalable solution for deploying modern distributed applications. Kubernetes orchestrates and manages the application containers, while MongoDB Atlas runs the database outside the cluster as a managed service that the containers reach through a connection string. This combination allows developers to focus on writing code without worrying about the underlying infrastructure.

What are the benefits of using Kubernetes with MongoDB Atlas?

Using Kubernetes with MongoDB Atlas offers several benefits. Atlas simplifies the management of MongoDB instances, allowing for easy scaling and replication. Kubernetes provides automated rollouts and rollbacks, service discovery and load balancing, secret and configuration management, and storage orchestration. Moreover, MongoDB Atlas offers built-in security features, automated patching, and backups, making the pair a robust solution for deploying applications.

How do I deploy a MongoDB instance on Kubernetes?

Deploying a MongoDB instance on Kubernetes involves creating a Kubernetes Deployment configuration. This configuration specifies the Docker image to use, the number of replicas, and other settings. Once the configuration is created, you can use the Kubernetes command-line interface, kubectl, to create the Deployment on your Kubernetes cluster.

How can I scale my MongoDB deployment on Kubernetes?

Kubernetes allows you to scale your MongoDB deployment easily. You can adjust the number of replicas in your Deployment configuration and Kubernetes will automatically create or remove Pods to match the desired state. This makes it easy to scale your application to meet demand.
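As a small illustration of changing the desired state, the sketch below builds a merge patch that sets the replica count and prints a kubectl patch command for it. It targets the mern-crud application deployment from this article; an Atlas cluster itself is scaled from Atlas rather than through Kubernetes. The helper name `replicaPatch` is hypothetical.

```javascript
// Build a merge patch that sets the desired number of replicas on a Deployment.
function replicaPatch(replicas) {
  if (!Number.isInteger(replicas) || replicas < 0) {
    throw new RangeError("replicas must be a non-negative integer");
  }
  return { spec: { replicas } };
}

const patch = JSON.stringify(replicaPatch(3));

// Print the command rather than running it, so the sketch has no side effects.
console.log(`kubectl patch deployment mern-crud --type merge -p '${patch}'`);
```

For a one-off change, kubectl scale deployment mern-crud --replicas=3 does the same thing with less typing.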

How does MongoDB Atlas ensure data security in Kubernetes deployments?

MongoDB Atlas provides several features to ensure data security in Kubernetes deployments. It offers built-in encryption at rest and in transit, role-based access control, IP whitelisting, and more. Additionally, MongoDB Atlas is compliant with several industry standards, including GDPR, HIPAA, and ISO 27001, providing further assurance of its security measures.

Can I use Kubernetes and MongoDB Atlas for stateful applications?

Yes, you can use Kubernetes and MongoDB Atlas for stateful applications. Kubernetes provides StatefulSets, a feature that manages the deployment and scaling of a set of Pods and provides guarantees about the ordering and uniqueness of these Pods. Combined with the persistent storage options of MongoDB Atlas, this makes it possible to deploy stateful applications.

How does MongoDB Atlas handle backups in a Kubernetes environment?

MongoDB Atlas provides automated backups that can be configured to run on a schedule. These backups are stored in a separate cloud storage system, ensuring that they are safe even if your Kubernetes cluster encounters issues. You can also restore these backups to any point in time, providing a robust disaster recovery solution.

What is the role of a MongoDB Kubernetes Operator?

A MongoDB Kubernetes Operator extends the Kubernetes API to create, configure, and manage instances of complex stateful applications on behalf of a Kubernetes user. It builds upon the basic Kubernetes resource and controller concepts, but also includes domain or application-specific knowledge to automate common tasks.

How can I monitor my MongoDB deployment on Kubernetes?

MongoDB Atlas provides built-in monitoring tools that allow you to track the performance of your MongoDB instances. You can view metrics such as CPU usage, memory usage, and operation execution times. Additionally, Kubernetes provides monitoring tools that can track the performance and health of your Pods and Nodes.

Can I migrate my existing MongoDB databases to Kubernetes?

Yes, you can migrate your existing MongoDB databases to Kubernetes. This typically involves creating a backup of your existing database, creating a MongoDB instance on Kubernetes, and then restoring the backup to the new instance. MongoDB Atlas provides tools to simplify this process, including live migration features for moving data from on-premises deployments to the cloud.

Jay Gordon is a developer advocate at MongoDB.