This article is part of a web dev series from Microsoft. Thank you for supporting the partners who make SitePoint possible.

Before the Web Audio API, HTML5 gave us the audio element. It might seem hard to remember now, but prior to the audio element, our best option for sound in a browser was a plugin! The audio element was, indeed, exciting but it had a pretty singular focus. It was essentially a video player without the video, good for long audio like music or a podcast, but ill-suited for the demands of gaming. We put up with (or found workarounds for) looping issues, concurrent sound limits, glitches and a total lack of access to the sound data itself.

Fortunately, our patience has paid off. Where the audio element may have been lacking, the Web Audio API delivers. It gives us unprecedented control over sound and it’s perfect for everything from gaming to sophisticated sound editing. All this with a tidy API that’s really fun to use and well supported.

Let’s be a little more specific: Web Audio gives you access to the raw waveform data of a sound and lets you manipulate, analyze, distort or otherwise modify it. It is to audio what the canvas API is to pixels. You have deep and mostly unfettered access to the sound data. It’s really powerful!

This tutorial is the second in a series on Flight Arcade – built to demonstrate what’s possible on the web platform and in the new Microsoft Edge browser and EdgeHTML rendering engine. Interactive code and examples for this article are also located at: http://www.flightarcade.com/learn/

Flight Sounds

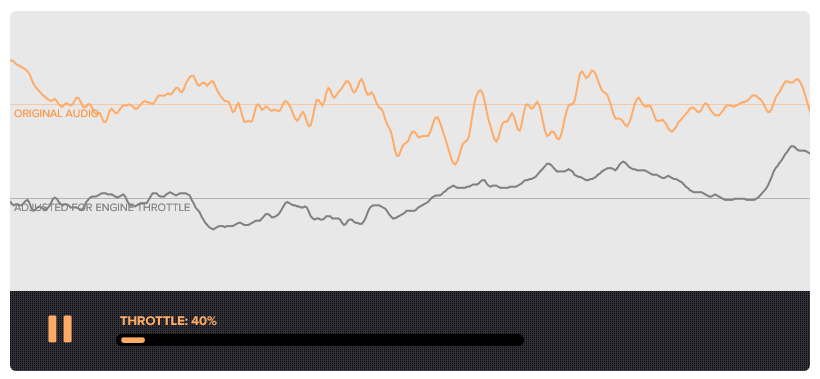

Even the earliest versions of Flight Simulator made efforts to recreate the feeling of flight using sound. One of the most important sounds is the engine, whose pitch changes with the throttle. We knew that, as we reimagined the game for the web, a static engine noise would seem flat, so the dynamic pitch of the engine noise was an obvious candidate for Web Audio.

You can try it out interactively here.

Less obvious (but possibly more interesting) was the voice of our flight instructor. In early iterations of Flight Arcade, we played the instructor’s voice just as it had been recorded and it sounded like it was coming out of a sound booth! We noticed that we started referring to the voice as the “narrator” instead of the “instructor.” Somehow that pristine sound broke the illusion of the game. It didn’t seem right to have such perfect audio coming over the noisy sounds of the cockpit. So, in this case, we used Web Audio to apply some simple distortions to the voice instruction and enhance the realism of learning to fly!

There’s a sample of the instructor audio at the end of the article. In the sections below, we’ll give you a pretty detailed view of how we used the Web Audio API to create these sounds.

Using the API: AudioContext and Audio Sources

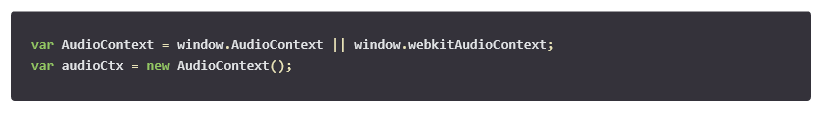

The first step in any Web Audio project is to create an AudioContext object. Some browsers (including Chrome) still require this API to be prefixed, so the code looks like this:
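The original listing is not reproduced here, so the following is a minimal sketch of the prefixed fallback described above. The helper name `createAudioContext` is hypothetical; it takes the global object as a parameter so that it can be exercised outside a browser.

```javascript
// Returns a new AudioContext, falling back to the WebKit-prefixed
// constructor when the unprefixed one is missing.
// `globalObject` is normally `window` in a browser.
function createAudioContext(globalObject) {
  const AudioContextClass =
    globalObject.AudioContext || globalObject.webkitAudioContext;
  if (!AudioContextClass) {
    throw new Error("The Web Audio API is not available in this environment");
  }
  return new AudioContextClass();
}
```

In a page you would typically call `createAudioContext(window)` once and reuse the resulting context for every sound.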

Then you need a sound. You can actually generate sounds from scratch with the Web Audio API, but for our purposes we wanted to load a prerecorded audio source. If you already have an HTML audio element, you can use that, but a lot of the time you won’t. After all, who needs an audio element if you’ve got Web Audio? Most commonly, you’ll just download the audio directly into a buffer with an HTTP request:
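A sketch of that download step might look like the following. It assumes the callback form of `decodeAudioData`; the function name `loadAudioBuffer`, the callbacks and any URL you pass in are placeholders rather than the code Flight Arcade shipped.

```javascript
// Downloads an audio file and decodes it into an AudioBuffer.
// `onLoaded` receives the decoded buffer; `onError` receives any failure.
function loadAudioBuffer(audioContext, url, onLoaded, onError) {
  const request = new XMLHttpRequest();
  request.open("GET", url, true);
  request.responseType = "arraybuffer"; // raw bytes rather than text

  request.onload = function () {
    if (request.status < 200 || request.status >= 300) {
      onError(new Error("Request failed with status " + request.status));
      return;
    }
    // Turn the (usually compressed) file into raw sample data.
    audioContext.decodeAudioData(request.response, onLoaded, onError);
  };
  request.onerror = onError;
  request.send();
}
```

The decoded buffer can be kept around and reused by any number of source nodes.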

Now we have an AudioContext and some audio data. Next step is to get these things working together. For that, we need…

AudioNodes

Just about everything you do with Web Audio happens via some kind of AudioNode and they come in many different flavors: some nodes are used as audio sources, some as audio outputs and some as audio processors or analyzers. You can chain them together to do interesting things.

You might think of the AudioContext as a sort of sound stage. The various instruments, amplifiers and speakers that it contains would all be different types of AudioNodes. Working with the Web Audio API is a lot like plugging all these things together (instruments into, say, effects pedals, the pedals into amplifiers, the amplifiers into speakers, and so on).

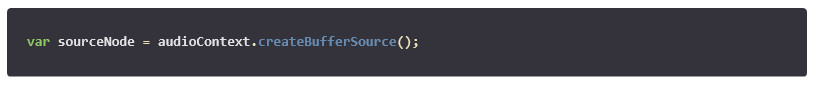

In order to do anything interesting with our newly acquired AudioContext and audio data, we first need to encapsulate the audio data as a source AudioNode.
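Wrapping a decoded buffer in a source node takes two lines. This sketch assumes `audioBuffer` came from `decodeAudioData`; the helper name `createSourceNode` is made up for illustration.

```javascript
// Wraps decoded audio data in an AudioBufferSourceNode.
function createSourceNode(audioContext, audioBuffer) {
  const source = audioContext.createBufferSource();
  source.buffer = audioBuffer; // the node only references the data
  return source;
}
```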

Playback

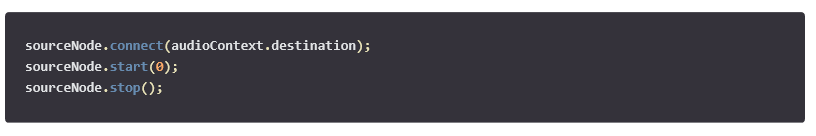

That’s it. We have a source. But before we can play it, we need to connect it to a destination node. For convenience, the AudioContext exposes a default destination node (usually your headphones or speakers). Once connected, it’s just a matter of calling start and stop.
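As a rough sketch, connecting and playing could be written like this. The helper names `startPlayback` and `stopPlayback` are hypothetical, and `source` is assumed to be an AudioBufferSourceNode that has not been started yet.

```javascript
// Routes a source node to the default output and starts it immediately.
function startPlayback(audioContext, source) {
  source.connect(audioContext.destination);
  source.start(0); // 0 means "as soon as possible"
}

// Stops a playing source; the node cannot be restarted afterwards.
function stopPlayback(source) {
  source.stop(0);
}
```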

It’s worth noting that you can only call start() once on each source node. That means “pause” isn’t directly supported. Once a source is stopped, it’s expired. Fortunately, source nodes are inexpensive objects, designed to be created easily (the audio data itself, remember, is in a separate buffer). So, if you want to resume a paused sound you can simply create a new source node and call start() with timing parameters (when to start and the offset into the buffer to resume from). AudioContext has an internal clock that you can use to manage timestamps.
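One way to emulate pause and resume along these lines is sketched below. It is an illustration only: `createPausablePlayer` is a hypothetical helper, and it relies on the second argument of `start()`, which is the offset (in seconds) into the buffer.

```javascript
// Emulates pause/resume by creating a fresh source node on every play().
function createPausablePlayer(audioContext, audioBuffer) {
  let source = null;
  let startedAt = 0; // context time at which offset 0 would have played
  let offset = 0;    // seconds into the buffer where playback was paused

  function play() {
    source = audioContext.createBufferSource();
    source.buffer = audioBuffer;
    source.connect(audioContext.destination);
    startedAt = audioContext.currentTime - offset;
    source.start(0, offset); // start now, from the remembered offset
  }

  function pause() {
    if (!source) return;
    offset = audioContext.currentTime - startedAt;
    source.stop(0);
    source = null;
  }

  return { play: play, pause: pause };
}
```

The sketch ignores looping and the end of the buffer; a fuller version would reset the offset when the sound finishes.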

The Engine Sound

That’s it for the basics, but everything we’ve done so far (simple audio playback) could have been done with the old audio element. For Flight Arcade, we needed to do something more dynamic. We wanted the pitch to change with the speed of the engine.

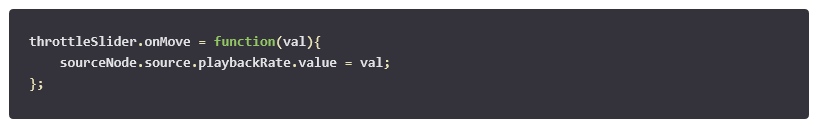

That’s actually pretty simple with Web Audio (and would have been nearly impossible without it)! The source node has a playbackRate property which affects the speed of playback. To increase the pitch we just increase the playback rate:
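For instance, a throttle value could be mapped onto the playback rate as in the sketch below. The function name `setEnginePitch`, the 0 to 1 throttle range and the rate limits of 0.5 and 2.0 are all illustrative assumptions, not the values used in Flight Arcade.

```javascript
// Maps a throttle position in [0, 1] to a playback rate and applies it.
// minRate and maxRate are arbitrary illustrative limits.
function setEnginePitch(source, throttle) {
  const minRate = 0.5;
  const maxRate = 2.0;
  const clamped = Math.min(1, Math.max(0, throttle));
  // playbackRate is an AudioParam, so we set its .value
  source.playbackRate.value = minRate + clamped * (maxRate - minRate);
  return source.playbackRate.value;
}
```

Because `playbackRate` can be changed while the sound is playing, calling this on every throttle change is enough to make the engine respond.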

The engine sound also needs to loop. That’s also very easy (there’s a property for it too):
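A minimal sketch, assuming `source` is an AudioBufferSourceNode that has not been started yet (the helper name `enableLooping` is hypothetical):

```javascript
// Makes a buffer source repeat until stop() is called.
function enableLooping(source) {
  source.loop = true;
  return source;
}
```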

But there’s a catch. Many audio formats (especially compressed audio) store the audio data in fixed size frames and, more often than not, the audio data itself won’t “fill” the final frame. This can leave a tiny gap at the end of the audio file and result in clicks or glitches when those audio files get looped. Standard HTML audio elements don’t offer any kind of control over this gap and it can be a big challenge for web games that rely on looping audio.

Fortunately, gapless audio playback with the Web Audio API is really straightforward. It’s just a matter of setting a timestamp for the beginning and end of the looping portion of the audio (note that these values are relative to the audio source itself and not the AudioContext clock).
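The loop boundaries are set with the `loopStart` and `loopEnd` properties, both in seconds. The sketch below trims a small amount from the end of the buffer; `loopWithoutGap` and `trimSeconds` are hypothetical names, and the right amount to trim depends on the file in question.

```javascript
// Loops a source over its buffer, skipping `trimSeconds` of trailing
// padding at the end of the decoded audio.
function loopWithoutGap(source, trimSeconds) {
  source.loop = true;
  source.loopStart = 0;
  // Times are relative to the buffer, not the AudioContext clock.
  source.loopEnd = source.buffer.duration - trimSeconds;
  return source;
}
```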

The Instructor’s Voice

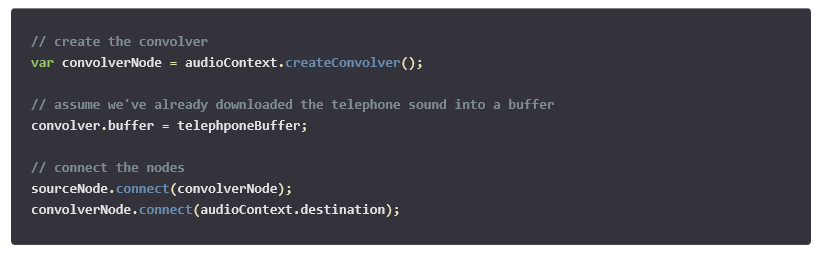

So far, everything we’ve done has been with a source node (our audio file) and an output node (the sound destination we set earlier, probably your speakers), but AudioNodes can be used for a lot more, including sound manipulation or analysis. In Flight Arcade, we used two node types (a ConvolverNode and a WaveShaperNode) to make the instructor’s voice sound like it’s coming through a speaker.

Convolution

From the W3C spec:

Convolution is a mathematical process which can be applied to an audio signal to achieve many interesting high-quality linear effects. Very often, the effect is used to simulate an acoustic space such as a concert hall, cathedral, or outdoor amphitheater. It can also be used for complex filter effects, like a muffled sound coming from inside a closet, sound underwater, sound coming through a telephone, or playing through a vintage speaker cabinet. This technique is very commonly used in major motion picture and music production and is considered to be extremely versatile and of high quality.

Convolution basically combines two sounds: a sound to be processed (the instructor’s voice) and a sound called an impulse response. The impulse response is, indeed, a sound file but it’s really only useful for this kind of convolution process. You can think of it as an audio filter of sorts, designed to produce a specific effect when convolved with another sound. The result is typically far more realistic than simple mathematical manipulation of the audio.

To use it, we create a convolver node, load the audio containing the impulse response and then connect the nodes.
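Those steps can be sketched as follows, assuming the impulse response has already been downloaded and decoded into an AudioBuffer. The helper name `connectThroughConvolver` is made up for this example.

```javascript
// Inserts a ConvolverNode between a source and the default output.
function connectThroughConvolver(audioContext, source, impulseResponseBuffer) {
  const convolver = audioContext.createConvolver();
  convolver.buffer = impulseResponseBuffer; // the impulse response
  source.connect(convolver);
  convolver.connect(audioContext.destination);
  return convolver;
}
```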

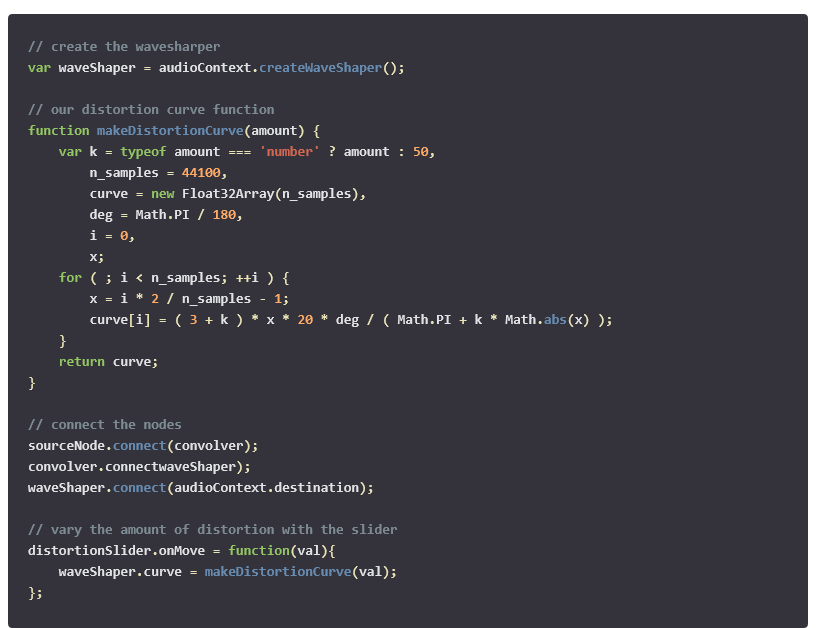

Wave Shaping

To increase the distortion, we also used a WaveShaper node. This type of node lets you apply mathematical distortion to the audio signal to achieve some really dramatic effects. The distortion is defined as a curve function. Those functions can require some complex math. For the example below, we borrowed a good one from our friends at MDN.
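The listing itself is missing from this copy of the article. The sketch below is modeled on the distortion curve function published in MDN's WaveShaperNode documentation; whether Flight Arcade used these exact constants is an assumption, and `createDistortion` is a hypothetical wrapper.

```javascript
// Builds a distortion curve; larger `amount` values distort more.
function makeDistortionCurve(amount) {
  const k = typeof amount === "number" ? amount : 50;
  const sampleCount = 44100;
  const curve = new Float32Array(sampleCount);
  const deg = Math.PI / 180;
  for (let i = 0; i < sampleCount; i++) {
    const x = (i * 2) / sampleCount - 1; // input level from -1 to just under 1
    curve[i] = ((3 + k) * x * 20 * deg) / (Math.PI + k * Math.abs(x));
  }
  return curve;
}

// Creates a WaveShaperNode that applies the curve above.
function createDistortion(audioContext, amount) {
  const shaper = audioContext.createWaveShaper();
  shaper.curve = makeDistortionCurve(amount);
  shaper.oversample = "4x"; // reduces aliasing at some CPU cost
  return shaper;
}
```

The shaper is connected like any other node, for example between the convolver and the destination.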

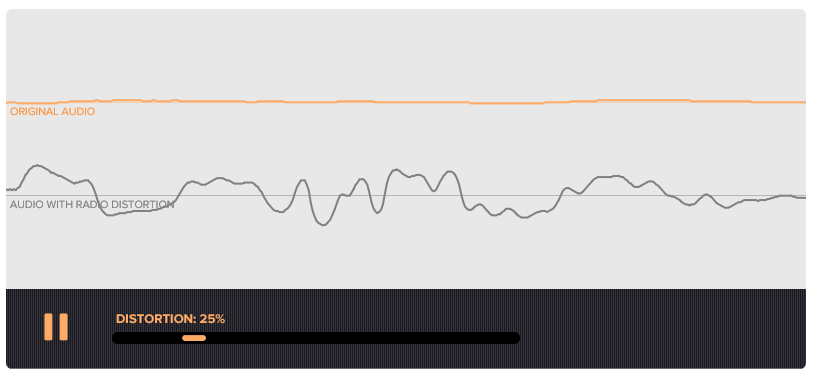

Notice the stark difference between the original waveform and the waveform with the WaveShaper applied to it.

You can try it out interactively here.

The example above is a dramatic representation of just how much you can do with the Web Audio API. Not only are we making some pretty dramatic changes to the sound right from the browser, but we’re also analyzing the waveform and rendering it into a canvas element! The Web Audio API is incredibly powerful and versatile and, frankly, a lot of fun!

More hands-on with JavaScript

Microsoft has a bunch of free learning resources on many open source JavaScript topics and we’re on a mission to create a lot more with Microsoft Edge. Here are some to check out:

- Microsoft Edge Web Summit 2015 (a complete series of what to expect with the new browser, new web platform features, and guest speakers from the community)

- Best of //BUILD/ and Windows 10 (including the new JavaScript engine for sites and apps)

- Advancing JavaScript without Breaking the Web (Christian Heilmann’s recent keynote)

- Hosted Web Apps and Web Platform Innovations (a deep-dive on topics like manifold.JS)

- Practical Performance Tips to Make your HTML/JavaScript Faster (a 7-part series from responsive design to casual games to performance optimization)

- The Modern Web Platform JumpStart (the fundamentals of HTML, CSS, and JS)

And some free tools to get started: Visual Studio Code, Azure Trial, and cross-browser testing tools – all available for Mac, Linux, or Windows.

This article is part of the web dev tech series from Microsoft. We’re excited to share Microsoft Edge and the new EdgeHTML rendering engine with you. Get free virtual machines or test remotely on your Mac, iOS, Android, or Windows device at modern.IE.

Frequently Asked Questions (FAQs) about Dynamic Sound with the Web Audio API

How can I start using the Web Audio API for my web applications?

To start using the Web Audio API, you first need to create an instance of the AudioContext interface. This is the primary ‘container’ for your audio project and is usually created upon page load, although many current browsers keep a new context suspended until the user interacts with the page. Once you have an instance of AudioContext, you can create nodes in this context, connect them together to form an audio routing graph, and then manipulate the audio data. Remember to check for browser compatibility as not all browsers fully support the Web Audio API.

What are the different types of audio nodes available in the Web Audio API?

The Web Audio API provides several types of audio nodes, each with a specific purpose. Some of the most commonly used nodes include: GainNode for controlling volume, OscillatorNode for generating sound, BiquadFilterNode for applying audio effects, and AudioBufferSourceNode for playing sound samples. Each node can be connected to other nodes to form an audio routing graph.

How can I control the volume of audio using the Web Audio API?

You can control the volume of audio using the GainNode. This node is used to control the loudness of the audio. You can create a GainNode using the createGain() method of the AudioContext. Once created, you can adjust the gain (volume) by setting the value of the gain attribute.
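A small sketch of a volume control, with a hypothetical helper name `createVolumeControl` and a `volume` where 1 means unchanged and 0 means silent:

```javascript
// Routes a source through a GainNode so its volume can be adjusted.
function createVolumeControl(audioContext, source, volume) {
  const gainNode = audioContext.createGain();
  gainNode.gain.value = volume; // gain is an AudioParam
  source.connect(gainNode);
  gainNode.connect(audioContext.destination);
  return gainNode;
}
```

Changing `gainNode.gain.value` later adjusts the volume while the sound plays.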

How can I generate sound using the Web Audio API?

You can generate sound using the OscillatorNode. This node generates a periodic waveform. You can create an OscillatorNode using the createOscillator() method of the AudioContext. Once created, you can set the type of waveform to generate (sine, square, sawtooth, or triangle) and the frequency.
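As an illustration, the following sketch plays a sine tone for a fixed duration. The name `playTone` and its parameters are hypothetical.

```javascript
// Plays a sine wave at `frequency` Hz for `durationSeconds`.
function playTone(audioContext, frequency, durationSeconds) {
  const oscillator = audioContext.createOscillator();
  oscillator.type = "sine";
  oscillator.frequency.value = frequency;
  oscillator.connect(audioContext.destination);

  const now = audioContext.currentTime;
  oscillator.start(now);
  oscillator.stop(now + durationSeconds); // schedule the stop in advance
  return oscillator;
}
```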

How can I apply audio effects using the Web Audio API?

You can apply audio effects using the BiquadFilterNode. This node represents a second order filter that can be used to create various effects such as tone control. You can create a BiquadFilterNode using the createBiquadFilter() method of the AudioContext. Once created, you can set the type of filter (lowpass, highpass, bandpass, etc.) and the frequency, Q, and gain.
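For example, a low-pass filter that muffles high frequencies could be set up as in this sketch. The helper name `createLowpass` and the Q value of 1 are illustrative choices.

```javascript
// Creates a low-pass filter that attenuates frequencies above `cutoffHz`.
function createLowpass(audioContext, cutoffHz) {
  const filter = audioContext.createBiquadFilter();
  filter.type = "lowpass";
  filter.frequency.value = cutoffHz;
  filter.Q.value = 1; // resonance around the cutoff; 1 is an arbitrary pick
  return filter;
}
```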

How can I play sound samples using the Web Audio API?

You can play sound samples using the AudioBufferSourceNode. This node is used to play audio data directly from an AudioBuffer. You can create an AudioBufferSourceNode using the createBufferSource() method of the AudioContext. Once created, you can set the buffer to the audio data you want to play and then start the playback using the start() method.

How can I connect audio nodes together in the Web Audio API?

You can connect audio nodes together using the connect() method. This method is used to form an audio routing graph. You can connect one node to another, or one node to multiple nodes. The audio data flows from the source node to the destination node(s).
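When a graph has several stages, a small helper can connect them in order. This is only a convenience sketch; `connectChain` is a hypothetical name.

```javascript
// Connects each node in the array to the next one, in order.
function connectChain(nodes) {
  for (let i = 0; i < nodes.length - 1; i++) {
    nodes[i].connect(nodes[i + 1]);
  }
  return nodes[nodes.length - 1]; // the last node in the chain
}
```

A call such as `connectChain([source, filter, gainNode, audioContext.destination])` would wire a source through a filter and a gain stage to the output.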

How can I manipulate audio data in the Web Audio API?

You can manipulate audio data using the various methods and attributes provided by the audio nodes. For example, you can change the frequency of an OscillatorNode, adjust the gain of a GainNode, or change the filter type of a BiquadFilterNode. You can also use the AnalyserNode to capture real-time frequency and time-domain data.
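Reading waveform data from an AnalyserNode might look like the sketch below. The helper names and the `fftSize` of 2048 are illustrative; the byte values returned are centered on 128, which represents silence.

```javascript
// Taps a source with an AnalyserNode (the source can still be
// connected elsewhere, for example to the destination).
function attachAnalyser(audioContext, source) {
  const analyser = audioContext.createAnalyser();
  analyser.fftSize = 2048;
  source.connect(analyser);
  return analyser;
}

// Returns the most recent time-domain samples as bytes (0 to 255).
function readWaveform(analyser) {
  const samples = new Uint8Array(analyser.fftSize);
  analyser.getByteTimeDomainData(samples);
  return samples;
}
```

Calling `readWaveform` once per animation frame gives you data you can draw into a canvas element.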

What is the role of the AudioContext in the Web Audio API?

The AudioContext is the primary ‘container’ for your audio project. It is used to create the audio nodes, manage the audio routing graph, and control the playback. You can create an instance of the AudioContext upon page load, and then use this instance to create and manipulate the audio nodes.

What is the browser compatibility of the Web Audio API?

The Web Audio API is widely supported in modern browsers, including Chrome, Firefox, Safari, and Edge. However, not all features are fully supported in all browsers. It is recommended to check the specific feature support before using it in your web application.

Robby Ingebretsen is the founder and creative director of PixelLab.