As designers and developers, we all make design decisions every day. We scribble thumbnail layouts, we research heatmaps, we crunch designs onscreen, we try to think like the user, and we ask colleagues and friends for their opinions and insights on a complex range of issues:

- Which keywords should I use for my main navigation?

- How should I arrange the layout to keep people from dropping off?

- Where are my ads most effective?

- Can people find my search box easily enough?

In short, we all do our best to weigh up all the variables, choose the best combination, build it and get it online.

But can you prove it’s the best option?

With the official release of Google’s “I Told You So” Tool (otherwise known as the Website Optimizer Tool), perhaps now you can.

We were able to run a tidy little test last night that gave us some great results. I’ll run you through our trial.

The Test: To Underline Or Not?

If you’ve visited sitepoint.com in the last few days, you would have found it difficult to avoid seeing our founders (Mark and Matt) in a chicken suit and moose hat respectively (perhaps courtesy of some “Photoshop persuasion” from yours truly).

As we have never really discounted any of our kits before, my design brief was to make sure everyone who visited the site knew about it. I’m generally loath to fiddle with our site header banner area, but made this a temporary exception.

The sticking point was the “Our founders have gone crazy!” text. Luke, our Managing Director, was understandably concerned that users may well notice the image, but not understand that it was clickable. He was keen to have the text underlined to reinforce the idea that it was a link.

My personal view was that the image and its positioning were radical enough to motivate users to interact with it, and that the underlining would visually detract from the site without getting many more clicks.

My task was to use Google Website Optimizer to prove it either way via a simple A/B split test. (Note: The service is free, but you will need a Google AdWords or Google Analytics account to run your own tests. However, your testing doesn’t need to involve AdWords.)

The Process

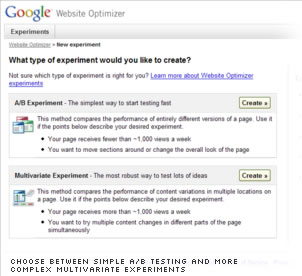

First up, create a new experiment: Once you’ve activated the Website Optimizer on your account, you will be asked if you want to create a new experiment.

Multivariate experiments require more setup time and more traffic data to be effective, so I’d recommend sticking to the simpler A/B Experiment option.

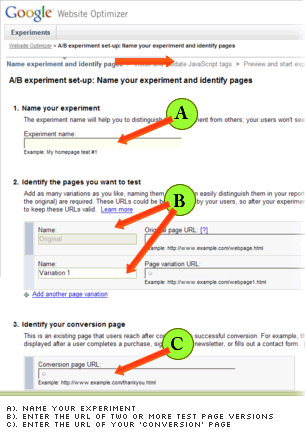

Next, set up your test pages: Once you’ve created your experiment, you need two standalone versions of the page you want to test — perhaps index.html and index2.html. This can create other issues, but we’ll talk about them later.

You also need to identify a conversion page — the destination page inside your site to which you’re trying to move users. This could be anything from a blog post or product page, to a checkout page or navigation tab. You’ll need to put code on this page, so it will probably need to be a page on your site, rather than an external destination.

Next, you’ll be asked to name your experiment and tell the Website Optimizer where your three pages (the original, the alternate and the conversion page) live. You’ll then be asked if it’s you who will be adding the JavaScript tags to your test pages, or if a separate developer team will be doing the deed.

I’m assuming that if you’re reading this article, you’re probably a D.I.Y type.

Either way, the person adding these scripts doesn’t really need any JavaScript know-how; they just have to know their HTML well enough to be able to accurately copy and paste code snippets into their pages.

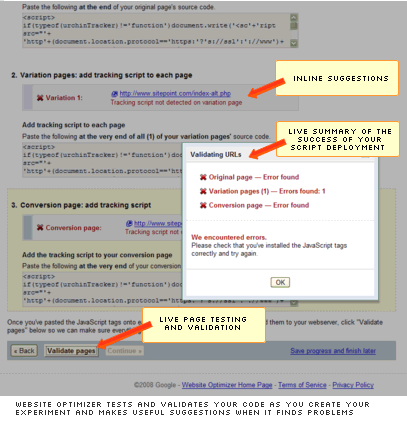

Add the “JavaScript tags” to your pages. Although this is the most technical part of the procedure, Google does a nice job of making it easy.

You’ll have four JavaScript snippets to add to your pages (plus one more for each additional page variation):

- one at the top of your original page

- one at the bottom of your original page

- one at the bottom of your alternate page

- one at the bottom of your conversion page

Now, to validate your JavaScript. This is one of the nicer aspects of the service. The biggest problem with most services like this is that it’s easy to make a small cut-and-paste error at this stage, and I’m sure many people get lost and give up when they can’t figure out where the problem is.

Thankfully, Google have implemented a nifty inline checker, which allows you to add your code, upload your pages and test them without leaving the Website Optimizer tool. And if it does find problems, it points the issues out and offers reasonably helpful advice.

When Google gives you green ticks for all your scripts, the only thing left to do is wait for the users to roll in. The tool will automatically divvy up your incoming traffic between your test pages and track the behaviour of users as they exit the page.
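Google doesn’t spell out how it divides the traffic, so the following is only a minimal sketch of one common way to do it, not the Website Optimizer’s actual method. It assumes each visitor has some stable identifier (a cookie value, say) and hashes it so the same person always lands on the same version. The function names and the `visitor-N` IDs are made up for illustration; the page names are the ones from the example above.

```python
import hashlib

# The two standalone versions of the page under test.
VARIATIONS = ["index.html", "index2.html"]


def assign_variation(visitor_id: str, variations=VARIATIONS) -> str:
    """Map a visitor ID to one variation, consistently across visits."""
    # Hash the ID so that assignment looks random but is repeatable.
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer and pick a bucket from it.
    bucket = int.from_bytes(digest[:8], "big") % len(variations)
    return variations[bucket]


def split_counts(n_visitors: int) -> dict:
    """Count how many of n hypothetical visitors land on each variation."""
    counts = {page: 0 for page in VARIATIONS}
    for i in range(n_visitors):
        counts[assign_variation(f"visitor-{i}")] += 1
    return counts


if __name__ == "__main__":
    # The same visitor is always sent to the same page.
    print(assign_variation("visitor-42"))
    # Over many visitors the split should come out close to even.
    print(split_counts(4000))
```

Hashing rather than picking at random on every request means a returning visitor doesn’t flip between the two designs, which would muddy the results.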

The Results

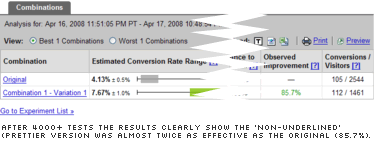

SitePoint gets sufficient traffic to give us usable data in around twelve hours. After setting up the test pages on Thursday night, we had a clear cut result by Friday morning.

My main motivation in setting up the test was simply to prove that the underlined text version wouldn’t perform significantly better than the clean version. If they performed roughly the same, picking the prettier version was a no-brainer.

In the end, even I was surprised by the actual results. After 4,000 tests, the pretty version was proving almost twice as effective as the uglier underlined version (an 85.7% improvement). That is a remarkable difference for a seemingly small change!
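If you’d like to sanity-check a figure like that yourself, here is a rough sketch of the arithmetic. The click counts below are hypothetical; I’ve picked them only because they reproduce an 85.7% improvement over 4,000 visitors, and they are not our real numbers. The significance check is a standard two-proportion z-test, which may or may not match what the Website Optimizer uses internally.

```python
import math


def relative_improvement(rate_a: float, rate_b: float) -> float:
    """How much better rate_b is than rate_a, as a fraction of rate_a."""
    return (rate_b - rate_a) / rate_a


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """z statistic for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / std_err


def two_sided_p_value(z: float) -> float:
    """Chance of seeing a gap at least this large if there were no real difference."""
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    # HYPOTHETICAL counts, for illustration only.
    underlined_clicks, underlined_visitors = 70, 2000
    clean_clicks, clean_visitors = 130, 2000

    lift = relative_improvement(
        underlined_clicks / underlined_visitors,
        clean_clicks / clean_visitors,
    )
    z = two_proportion_z(
        underlined_clicks, underlined_visitors, clean_clicks, clean_visitors
    )
    print(f"improvement: {lift:.1%}")
    print(f"z = {z:.2f}, p = {two_sided_p_value(z):.6f}")
```

With counts in this range the p-value comes out far below 0.05, which is the sort of result that lets you call a test after a single night.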

It also makes you wonder what other things you could be testing…

The Downsides

There are a couple of downsides to this technique.

Firstly, the script snippets break HTML validation. Even more infuriating, when I tried to rewrite them so they would validate (i.e. I changed <script> to <script type="text/javascript">), Google couldn’t use them anymore.

It may be possible to work around this issue, but it’s a sloppy piece of coding from Google.

Secondly, creating two versions of a page does mean that the traffic to the original page will be split. This means your web stats will show a dramatic drop-off for that original page for the period of the experiment.

It’s hard to prove either way, but there may be some effect on the PageRank of your original page if you left the experiment running for a long time.

For me, the slightly disappointing thing is that currently neither Google Analytics nor PageRank appears to compensate or adjust its behaviour for Google’s own tool. Hopefully this will be rectified in the future.

Of course, the idea is to determine which page works best as quickly as possible, and make that one the only page. How long this takes is obviously heavily dependent on your traffic levels. We were able to get more than enough data to make a decision in 12 hours, but it would be no good making an important decision based on the behaviour of 10 or 20 users.
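To put some numbers on why 10 or 20 users won’t cut it, here is a small sketch that works out a 95% confidence interval for a conversion rate using the Wilson score interval. This is a standard statistical formula rather than anything specific to the Website Optimizer, and the visitor and click counts are invented for illustration.

```python
import math


def wilson_interval(conversions: int, visitors: int, z: float = 1.96):
    """Wilson score interval for a conversion rate (z=1.96 gives ~95%)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    p = conversions / visitors
    denom = 1 + z * z / visitors
    centre = (p + z * z / (2 * visitors)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / visitors + z * z / (4 * visitors * visitors))
    ) / denom
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    # HYPOTHETICAL counts: both samples show a 5% click rate.
    for clicks, visitors in [(1, 20), (100, 2000)]:
        low, high = wilson_interval(clicks, visitors)
        print(f"{clicks}/{visitors}: {low:.1%} to {high:.1%}")
```

Both samples show the same 5% click rate, but with 20 visitors the plausible range runs from roughly 1% to 24%, while with 2,000 it narrows to roughly 4% to 6%. Two versions of a page would have to be wildly different before a 20-visitor test could tell them apart.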

Despite a few niggling issues, I’d have to say this is a remarkable service that should be a huge benefit to almost anyone coding pages.

No matter how much you think you know, this tool will very likely teach you something new.

Alex Walker

Alex has been doing cruel and unusual things to CSS since 2001. He is the lead front-end designer and developer for SitePoint and was formerly SitePoint's Design and UX editor, with over 150 newsletters written. He is co-author of The Principles of Beautiful Web Design. Alex is now involved in the planning, development, production, and marketing of a huge range of printed and online products and references. He has designed over 60 of SitePoint's book covers.