If you’ve been following along with my previous articles about Github’s API, you know that Github’s developers are doing their best to ease the pain of interacting with Github data. In this article, we’re going to take a look at the Github public events API and we will build a small demo along the way.

What are Github Public Events?

Github events are interactions made by users, like pushing, merging, creating repositories, etc. It’s like storing the history of Github. However, we are limited to the last 300 events, and that’s a problem if you want to search through the data of the whole history.

Ilya Grigorik wanted to keep track of his open source project, but due to the limitation of the Github public events API, he decided to create the GithubArchive website where he queries the Github API and stores the results. You can query the archive with links like http://data.githubarchive.org/2015-01-01-15.json.gz and you’ll get an archive containing the JSON payload. This solution is not really efficient and doesn’t help with querying the history for data, so, when Google BigQuery was released, Grigorik moved all of his data to the new storage and made it public.

Why BigQuery?

Google BigQuery was created to solve the queries latency problem. When you’re dealing with big data, you need to have the right hardware and infrastructure to handle the load properly. Now, you have the opportunity to move your append-only database to Google’s infrastructure and see millions of data rows processed in seconds.

If you’re not familiar with Google APIs, be sure to check this guide about starting a new Google API project. After that, be sure to enable the BigQuery API on your Google developers console.

Setting Up the Environment

I will be using Laravel as my framework of choice, and Homestead Improved for my VM. We will grab our Google credentials from the config/google.php file, and to follow Laravel’s security conventions I will store those credentials inside the .env file.

// config/google.php

return [

'app_name' => env('app_name'),

'client_id' => env('client_id'),

'client_secret' => env('client_secret'),

'api_key' => env('api_key')

];// .env

APP_DEBUG=true

app_name='Optional app name'

client_id='CHANGE IT'

client_secret='CHANGE IT'

api_key='CHANGE IT'I will also create a new Service Provider to hold our Google services bindings.

// app/Providers/BigQueryServiceProvider.php

class BigQueryServiceProvider extends ServiceProvider

{

public function register()

{

$this->app->bind('GoogleClient', function () {

$googleClient = new \Google_Client();

$googleClient->setAccessToken(\Session::get("token"));

return $googleClient;

});

$this->app->bind('bigquery', function () {

$googleClient = \App::make('GoogleClient');

$bigquery = new \Google_Service_Bigquery($googleClient);

return $bigquery;

});

}

}// config/app.php

// ...

'providers' => [

// ...

'App\Providers\BigQueryServiceProvider',

]Since our application will require the user authorization to query the API, I tried to make the process easier by creating a Middleware for guarding our routes.

// app/Http/Middleware/GoogleLogin.php

class GoogleLogin

{

public function handle($request, Closure $next)

{

$ga = \App::make('\App\Services\GoogleLogin');

if (!$ga->isLoggedIn()) {

return redirect('login');

}

return $next($request);

}

}// app/Kernel.php

protected $routeMiddleware = [

// ...

'google_login' => '\App\Http\Middleware\GoogleLogin'

];Google BigQuery Query Console

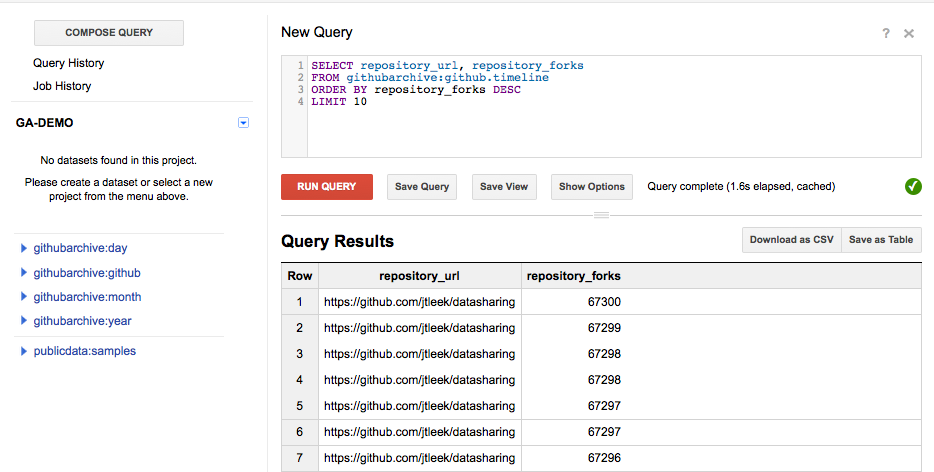

Before we start working with BigQuery using the PHP library, lets start exploring the BigQuery Query console. The console has a Compose Query button to let you write your queries. Underneath, we have a Query History link which shows our queries history. Since our queries will take a while to respond, we can execute a query as a job and ask for its stats after a while – the Job History link holds the list of the current jobs.

If you want to take a look at the table schema and explore the available fields, you can navigate to the left panel and select the Github timeline table.

Google BigQuery API and Github

As an example, let’s try to retrieve the top ten popular projects on Github. I will define popularity as the number of forks. The Google_Service_Bigquery class is responsible for all types of interactions with the Google BigQuery API. I previously introduced the term jobs, which means that we can execute a query in the background and we can fetch its status over time to see if it completed or not.

// app/Http/routes.php

Route::any('/topTen', ['middleware' => 'google_login', 'as' => 'topTen', 'uses' => 'BigQueryAPIController@topTen']);

// app/Http/Controllers/BigQueryAPIController.php

class BigQueryAPIController extends Controller

{

public function __construct()

{

$this->bigqueryService = \App::make("bigquery");

}

public function topTen()

{

$projectID = 'modular-robot-22';

$query_str = "SELECT repository_url, MAX(repository_forks) as max_forks" .

" FROM githubarchive:github.timeline" .

" GROUP EACH BY repository_url" .

" ORDER BY max_forks DESC" .

" LIMIT 10";

$query = new \Google_Service_Bigquery_QueryRequest();

$query->setQuery($query_str);

$result = $this->bigqueryService->jobs->query($projectID, $query);

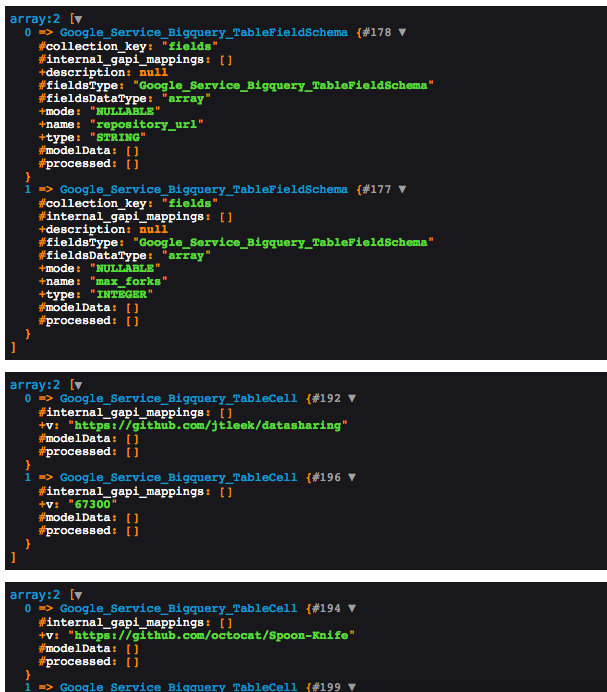

$fields = $result->getSchema()->getFields();

$rows = $result->getRows();

dump($fields);

foreach ($rows as $row) {

dump($row->getF());

}

}

}The project ID can be found on the Google Developers Console. The query string is fetching the respository_url, and MAX(repository_forks). The getSchema method returns a Google_Service_Bigquery_TableSchema instance which contains the selected column names from the response, and the getRows method returns an iterable list of Google_Service_Bigquery_TableRow. The output looks like the following.

The githubarchive:github.timeline dataset has been deprecated and won’t be updated anymore. The new way to work with data is through a specific time range (year dataset, month dataset, day dataset); you can visit githubarchive to learn more about this. Now we must update our query above to work with last year’s data.

// app/Http/Controllers/BigQueryAPIController.php

public function topTen()

{

$projectID = 'modular-robot-22';

$query_str = "SELECT repository_url, MAX(repository_forks) as max_forks" .

" FROM githubarchive:year.2014" .

" GROUP EACH BY repository_url" .

" ORDER BY max_forks DESC" .

" LIMIT 10";

//...

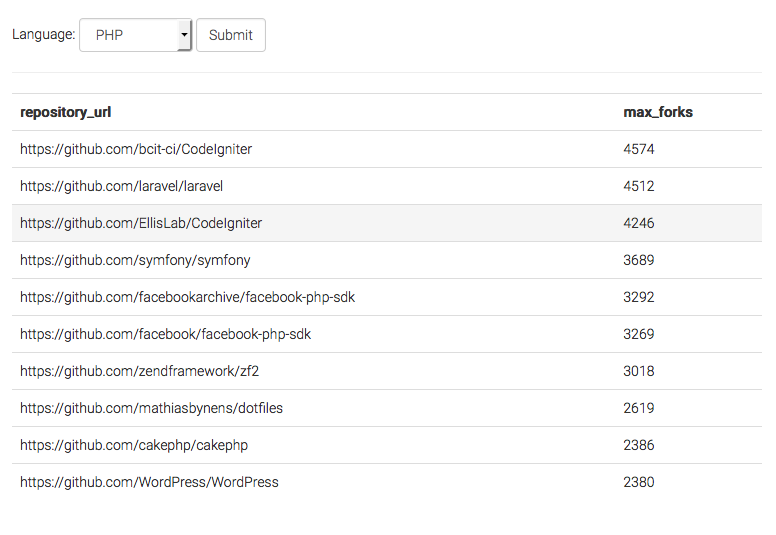

}Top Ten Repositories by Language

We will adapt the previous query to pull repositories of a specific language. Our view markup will contain a form and a set of language options.

// resources/views/home.blade.php

<form action="/" method="POST" class="form-inline">

<label for="language">Language: </label>

<select name="language" id="language" class="form-control">

<option value="PHP">PHP</option>

<option value="JavaScript">JavaScript</option>

<option value="CSS">CSS</option>

<option value="Python">Python</option>

</select>

<input type="submit" class="form-control" value="Submit"/>

</form>When the user submits the form, we retrieve the selected language and fill the condition in our SQL query. Because we will print our query result as a table, we will split the table header part from the table body.

// app/Http/Controllers/BigQueryAPIController.php

public function topTen()

{

if (!\Input::has('language')) {

return \View::make('home', ['repos' => null]);

}

$language = \Input::get('language', 'PHP');

$projectID = 'modular-robot-647';

$query_str = "SELECT repository_url, MAX(repository_forks) as max_forks" .

" FROM githubarchive:year.2014" .

" WHERE repository_language='$language'" .

" GROUP EACH BY repository_url" .

" ORDER BY max_forks DESC" .

" LIMIT 10";

$query = new \Google_Service_Bigquery_QueryRequest();

$query->setQuery($query_str);

$result = $this->bigqueryService->jobs->query($projectID, $query);

// getting the table header

$fields = $result->getSchema()->getFields();

// query response rows

$repos = $result->getRows();

return \View::make('home', ['repos' => $repos, 'tableHeader' => $fields]);

}The only thing changed from the previous query is the where condition, which filters languages by user input. When printing the result table I tried to keep things generic for most queries.

// resources/views/home.blade.php

@if($repos)

<table class="table table-hover">

<tr>

@foreach($tableHeader as $item)

<th>{{$item->getName()}}</th>

@endforeach

</tr>

@foreach($repos as $repo)

<tr>

@foreach($repo->getF() as $item)

<td>{{$item->getV()}}</td>

@endforeach

</tr>

@endforeach

</table>

@else

<h2>Nothing to show</h2>

@endifThe getF() method returns the row content, while the getV() method returns the cell content. You can inspect result values by dumping the class names and going through properties.

It looks like CodeIgniter was on top when I tested this, but this is not the case at the time of writing – Laravel is the most popular PHP framework on Github. Make sure to check the Github trending repositories page to see almost realtime data.

If you chose to work with data added post 01/01/2015, the scheme will also contain nested records plus a JSON encoded payload field. This applies to working with the day datasets as well – you can use the Google BigQuery console to inspect the dataset schema. BigQuery has some useful JSON functions to work with JSON encoded data, be sure to check them out.

Conclusion

In this article, we introduced the Github Public Events and how you can use them through BigQuery. The final demo includes a basic usage example, but you may want to experiment with the API and get yourself ready for the annual Github data challenge with some cool Github data facts. What do you think about the Github Events API? Do you have any cool ideas for the data challenge? Let us know!

Frequently Asked Questions (FAQs) about GitHub’s Public Events with PHP and Google BigQuery

How can I use PHP to interact with Google BigQuery?

PHP can interact with Google BigQuery through the Google Cloud PHP library. This library provides an interface for making requests and handling responses from BigQuery. To use it, you need to install it via Composer, a dependency management tool for PHP. Once installed, you can use the BigQuery client in your PHP script to create, manage, and query datasets and tables.

What are GitHub’s public events?

GitHub’s public events are activities that occur on public repositories on GitHub. These events include actions such as pushing code, creating issues, and commenting on pull requests. GitHub provides an API that allows developers to retrieve these events and use them in their applications.

How can I use Google BigQuery to analyze GitHub’s public events?

Google BigQuery is a powerful tool for analyzing large datasets. To analyze GitHub’s public events, you first need to import the data into BigQuery. This can be done by downloading the data from GitHub’s API and then uploading it to BigQuery. Once the data is in BigQuery, you can use SQL-like queries to analyze it.

How can I authenticate my PHP application with Google BigQuery?

To authenticate your PHP application with Google BigQuery, you need to create a service account in the Google Cloud Console. This service account will have the necessary permissions to access BigQuery. Once the service account is created, you can download a JSON key file that contains the credentials for the service account. You can then use these credentials in your PHP application to authenticate with BigQuery.

How can I handle errors when using the Google Cloud PHP library?

The Google Cloud PHP library uses exceptions to handle errors. When an error occurs, the library will throw an exception that you can catch and handle in your PHP code. The exception will contain information about the error, such as the error message and the HTTP status code.

How can I optimize my queries in Google BigQuery?

There are several ways to optimize your queries in Google BigQuery. One way is to use partitioned tables, which can reduce the amount of data that needs to be scanned by your queries. Another way is to use clustered tables, which can improve the performance of your queries by grouping related data together.

How can I use PHP to retrieve data from Google BigQuery?

To retrieve data from Google BigQuery with PHP, you can use the runQuery method of the BigQuery client. This method takes a SQL query as a parameter and returns a result set that you can iterate over to retrieve the rows of data.

How can I use PHP to insert data into Google BigQuery?

To insert data into Google BigQuery with PHP, you can use the insert method of the Table object. This method takes an array of rows as a parameter. Each row is an associative array where the keys are the column names and the values are the column values.

How can I use PHP to update data in Google BigQuery?

To update data in Google BigQuery with PHP, you can use the update method of the Table object. This method takes a row as a parameter. The row is an associative array where the keys are the column names and the values are the new column values.

How can I use PHP to delete data from Google BigQuery?

To delete data from Google BigQuery with PHP, you can use the delete method of the Table object. This method takes a row as a parameter. The row is an associative array where the keys are the column names and the values are the column values.

Younes Rafie

Younes RafieYounes is a freelance web developer, technical writer and a blogger from Morocco. He's worked with JAVA, J2EE, JavaScript, etc., but his language of choice is PHP. You can learn more about him on his website.