An Introduction to AI Chatbots: ChatGPT vs. Bing Chat vs. Bard

Artificial intelligence (AI) has been in the spotlight for a while now, and it’s dividing opinions across the world. While some are enthusiastic about the advances in AI and welcome them, others have raised concerns about job security, ethics, and the privacy of users’ data, all of which are valid. Artificial intelligence is by no means a new area of tech; researchers and developers have spent decades improving the technology and building new tools powered by it.

In this series, we’ll look at some of the applications of AI we’re seeing today, and at how AI can be a very useful tool in our day-to-day work as engineers. Innovations in AI have brought some obvious benefits, such as efficiency and increased productivity. A good example is GitHub Copilot, an AI pair programmer that helps engineers improve their standard practices, write better tests, and be more productive.

The introduction of ChatGPT, a chatbot developed by OpenAI, has led to a recent buzz around AI, and rival companies have since released AI-powered chatbots of their own. Given this proliferation, it’s a good time to take a look at chatbots and how they work.

In this part, we’ll be looking at what chatbots are, their history, and the state of chatbots today. We’ll also compare the newly introduced chatbots like ChatGPT, Bing Chat, and Bard.

Introducing Chatbots

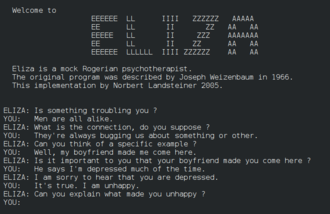

A chatbot is a software application that aims to mimic human conversation through text or voice interactions, typically online. The first chatbot appeared in 1966, when MIT professor Joseph Weizenbaum created ELIZA, an early natural language processing program built to explore communication between humans and machines.

The image below (from Wikipedia) shows ELIZA in action.

In 1994, computer scientist Michael Mauldin, the creator of the first Verbot (a chatterbot program and artificial intelligence software development kit for Windows and the Web), coined the term “chatterbot” for this kind of program.

The Evolution of Chatbots

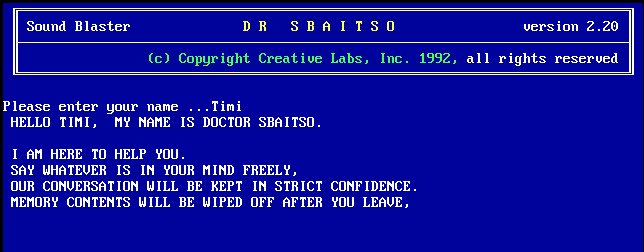

Chatbots continued to evolve after ELIZA and found purposes ranging from psychiatry (PARRY, which simulated a patient with paranoid schizophrenia) to entertainment (Jabberwacky). The chatbots created during this period were intended to mimic human interaction in different circumstances. In 1992, Creative Labs built Dr Sbaitso, a chatbot that spoke its responses through speech synthesis. Although it’s sometimes described as the first chatbot to integrate machine learning, it could only recognize a limited set of pre-programmed responses and commands.

The image below shows the Dr Sbaitso interface.

Another chatbot, ALICE (Artificial Linguistic Internet Computer Entity), was developed in 1995. It conducted conversations by applying heuristic pattern matching to the user’s input.

All the chatbots released during this period are termed “rule-based chatbots”, because they operated on a set of predefined rules and patterns created by human developers or conversational designers to generate responses. Their reliance on predetermined rules gave them limited flexibility: they couldn’t learn from a user’s messages or generate genuinely new responses to them. Examples of such rules include:

- If a user asks about product pricing, respond with information about pricing plans.

- If a user mentions a technical issue, provide troubleshooting steps.

- If a user expresses gratitude, respond with a thank-you message.
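To make the idea concrete, here’s a minimal sketch of a rule-based bot in Python, assuming the three example rules above. The keyword patterns, canned responses, and the `respond()` helper are hypothetical illustrations, not taken from any real product. Each rule is simply a regular expression mapped to a fixed reply.

```python
import re

# Hypothetical rules mirroring the examples above: (pattern, canned response).
RULES = [
    (re.compile(r"\b(price|pricing|cost|plans?)\b", re.IGNORECASE),
     "We offer Basic, Pro, and Enterprise plans. Which would you like to hear about?"),
    (re.compile(r"\b(error|bug|crash(es|ed|ing)?|not working)\b", re.IGNORECASE),
     "Sorry about that! Try restarting the app and clearing your cache."),
    (re.compile(r"\b(thanks|thank you)\b", re.IGNORECASE),
     "You're welcome! Happy to help."),
]

FALLBACK = "Sorry, I don't understand. Could you rephrase that?"


def respond(message: str) -> str:
    """Return the response of the first rule whose pattern matches the message."""
    for pattern, response in RULES:
        if pattern.search(message):
            return response
    # No rule matched: a rule-based bot cannot improvise a new answer.
    return FALLBACK


if __name__ == "__main__":
    for msg in ["How much does the Pro plan cost?",
                "The app keeps crashing",
                "Thanks a lot!",
                "What's the meaning of life?"]:
        print(f"> {msg}\n{respond(msg)}")
```

The fallback branch shows the core weakness of this approach: any message the designers didn’t anticipate gets a generic reply rather than a tailored answer.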

In 2001, ActiveBuddy, Inc. publicly launched SmarterChild, a bot distributed across the major instant messaging networks (AIM, MSN, and Yahoo Messenger). It could provide information such as news, weather, sports results, and stock quotes, let users play games, and give access to the START Natural Language Question Answering System developed by MIT’s Boris Katz. It was revolutionary because it demonstrated the power of conversational computing, and in many ways it can be considered a precursor to Siri.

The next set of remarkable developments in chatbots came in the 2010s, partly due to the growth of the Web and the availability of raw data. During this period, great progress was made in natural language processing (NLP), as representation learning and deep neural network-style machine learning methods became widespread in NLP.

Some of the achievements of this period include:

Deep learning and neural networks. Significant developments were made in recurrent neural networks (RNNs) that made them capable of capturing complex linguistic patterns, contextual relationships, and semantic understanding, contributing to significant improvements in chatbot performance.
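As a rough illustration of what “recurrent” means, the sketch below implements a vanilla (Elman) RNN in plain Python: at each step the hidden state is updated as h_t = tanh(W_x·x_t + W_h·h_{t−1} + b) and carried forward to the next token. The weights and one-hot token vectors are arbitrary, hand-picked assumptions rather than trained values; chatbots of this era used trained and much larger networks, often LSTM or GRU variants.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, b):
    """One vanilla RNN step: h_t = tanh(W_x @ x_t + W_h @ h_prev + b)."""
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))           # current input
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))  # memory of earlier tokens
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, W_x, W_h, b):
    """Feed a sequence of input vectors through the RNN, carrying the hidden state."""
    h = [0.0] * len(b)
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)
    return h


# Hypothetical, hand-picked weights: 3-dimensional one-hot inputs, 2 hidden units.
W_x = [[0.5, -0.3, 0.8], [0.1, 0.7, -0.4]]
W_h = [[0.9, -0.2], [0.3, 0.6]]
b = [0.0, 0.1]
tok_a, tok_b = [1, 0, 0], [0, 1, 0]

# The same tokens in a different order yield a different final state,
# because each step depends on the state left by the previous one.
print(run_rnn([tok_a, tok_b], W_x, W_h, b))
print(run_rnn([tok_b, tok_a], W_x, W_h, b))
```

That order sensitivity is what lets an RNN pick up on context that a simple bag-of-words approach would miss.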

Sentiment analysis and emotion understanding. Sentiment analysis and emotion understanding were added to NLP techniques in the 2010s, and chatbots incorporated these capabilities, allowing them to recognize users’ sentiments and emotions and respond appropriately. This made chatbots better at providing empathetic and personalized interactions.
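Modern sentiment analysis typically relies on trained neural classifiers, but a simple lexicon-based scorer shows the basic idea of mapping a message to a sentiment and then adjusting the reply’s tone. The word weights, the one-word negation rule, and the tone labels below are hypothetical assumptions chosen for illustration.

```python
import re

# A tiny, hypothetical sentiment lexicon; real systems learn these signals
# from labelled data rather than using a hand-written list.
LEXICON = {
    "great": 2, "love": 2, "thanks": 1, "good": 1, "helpful": 1,
    "bad": -1, "slow": -1, "broken": -2, "terrible": -2, "hate": -2,
}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum word weights, flipping the sign of a word preceded by a negator."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        weight = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            weight = -weight  # "not good" counts as negative
        score += weight
    return score


def reply_tone(text: str) -> str:
    """Choose a response tone from the sign of the sentiment score."""
    score = sentiment_score(text)
    if score < 0:
        return "empathetic"  # e.g. apologize and offer help
    if score > 0:
        return "upbeat"
    return "neutral"


for msg in ["Thanks, this is great!", "The app is slow and broken", "It is not good"]:
    print(msg, "->", sentiment_score(msg), reply_tone(msg))
```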

Named entity recognition and entity linking. Named entity recognition (NER) and entity linking also improved. For example, Alan Ritter used a hierarchy based on common Freebase entity types in ground-breaking experiments on NER over social media text.

Contextual understanding and dialogue management. Language models became more proficient at understanding and maintaining contexts within a conversation, and consequently chatbots got better at handling conversations while providing more coherent responses. The flow and quality of interactions also improved as a result of reinforcement-learning techniques.

Voice-activated virtual assistants. From the 1990s to the 2010s, there was rapid progress in NLP, AI, and voice recognition technologies. Together, these led to smart, voice-activated virtual assistants that went well beyond Dr Sbaitso, which could speak its responses but relied on typed input. A notable example from this era is Apple’s Siri, released in 2011, which played a pivotal role in popularizing voice-based interaction with chatbots.

Integration of messaging platforms and APIs. As AI has progressed, messaging platforms such as Facebook, Slack, and WhatsApp have increasingly adopted chatbots. These platforms also provide APIs and developer tools that let developers build their own custom chatbots and integrate them into the platform, which has ultimately driven the adoption of chatbots across many industries.

All of these advancements made it possible to build chatbots capable of better conversations. They had a better understanding of topics, and they offered a less scripted experience than their predecessors.

Large Language Models

In the early days of the Internet, search engines weren’t as accurate as they are now. Ask.com (originally known as Ask Jeeves) was the first search engine that let users ask questions in everyday, natural language. Natural language search relies on NLP, which applies statistical and machine learning models, trained on vast amounts of data, to infer the meaning of complex sentences. NLP has made it possible for computers to understand and interact with human language, paving the way for many applications, and its evolution eventually led to the emergence of large language models.

A large language model (LLM) is a computerized language model that can perform a variety of natural language processing tasks, including generating and classifying text, answering questions in a human-like fashion, and translating text from one language to another. It’s trained on a massive trove of articles, Wikipedia entries, books, internet-based resources and other input, so it can learn how to generate responses based on data from these sources.

The underlying architecture of most LLMs is one of two types:

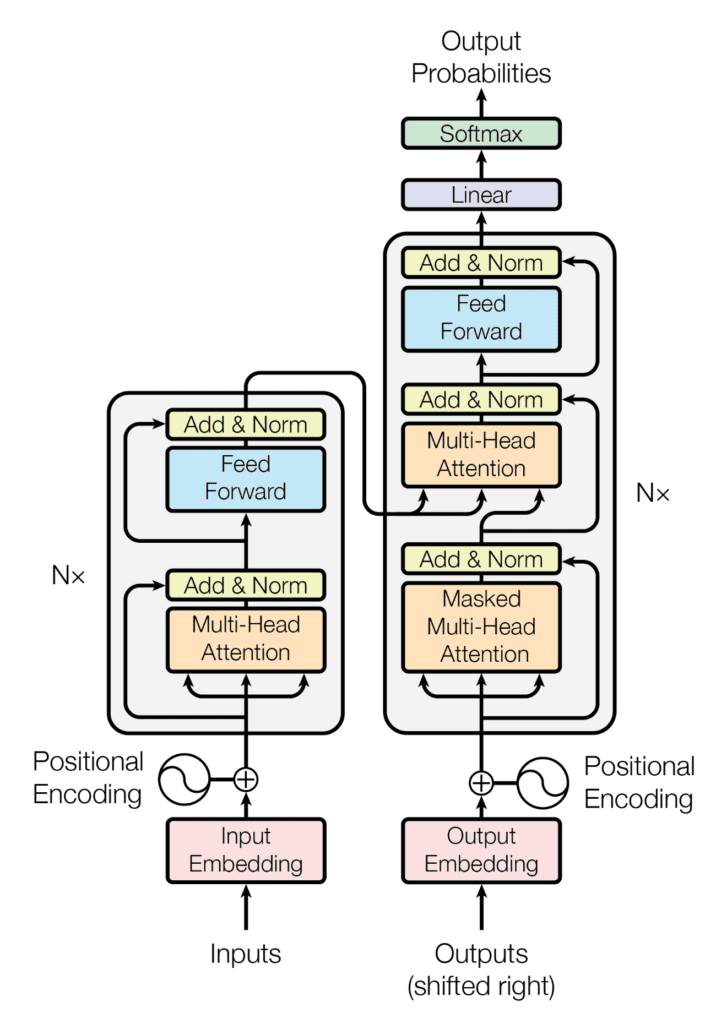

These LLMs are all based on the transformer model architecture. Transformers are a type of neural network architecture that has revolutionized the field of natural language processing and enabled the development of powerful large language models.

The transformer uses a self-attention mechanism: for each token in the input sequence, it computes a weighted sum over the whole sequence, where the weights dynamically reflect how relevant every other token is to that token.
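The core computation is scaled dot-product attention, softmax(QKᵀ/√d)·V, as defined in the original transformer paper, “Attention Is All You Need”. The sketch below implements it in plain Python for a single attention head, with one simplifying assumption: queries, keys, and values are the token vectors themselves (Q = K = V = X), whereas a real transformer first multiplies X by learned projection matrices. The toy vectors are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Single-head scaled dot-product self-attention with Q = K = V = X.

    X is a list of token vectors. Returns (outputs, weights), where
    weights[i][j] is how much token i attends to token j.
    """
    d = len(X[0])
    outputs, weights = [], []
    for q in X:
        # Similarity of this token's query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted sum of the value vectors.
        outputs.append([sum(w[j] * X[j][c] for j in range(len(X))) for c in range(d)])
    return outputs, weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
outputs, weights = self_attention(X)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1, so every output vector is a blend of the input tokens, weighted toward the tokens most similar to the one being processed.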

The image below depicts how the transformer model architecture works.

How LLMs Work

To understand how LLMs work, we first need to look at how they’re trained. In the first step, known as pre-training, the model processes large amounts of text from books, articles, and various parts of the Internet and learns the patterns and relationships between words. Pre-training relies on distributed computing frameworks and specialized hardware such as graphics processing units (GPUs) or tensor processing units (TPUs), which allow for efficient parallel processing. The resulting pre-trained model still needs to learn how to perform specific tasks effectively, and this is where fine-tuning comes in.
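Real LLMs learn these patterns by training a neural network to predict the next token, but the underlying objective can be illustrated with a toy bigram model that simply counts which word follows which in a small, hypothetical corpus. This is a deliberately simplified stand-in, not how GPT-style models are actually trained.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical three-sentence "training corpus".
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

An LLM replaces these raw counts with billions of learned parameters and conditions on far more than the single previous word, but the goal of predicting what comes next is the same.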

Fine-tuning is the second step in training LLMs. It involves training the model on specific tasks or data sets to make it more specialized and useful for particular applications. For example, the LLM can be fine-tuned on tasks like text completion, translation, sentiment analysis, or question-answering.

The State of Chatbots Today

Today, chatbots are more powerful than ever. They can perform more complex tasks and handle conversations better, thanks to significant advances in AI, NLP, and machine learning, along with increases in computing power and internet speed.

Chatbots have continued to take advantage of these advancements. Some of the notable aspects of these advancements include:

Advanced AI models. The introduction of advanced AI models has revolutionized the capabilities of chatbots in recent years. Models such as OpenAI’s GPT series have immensely helped to push the boundaries of natural language processing and machine learning. These models are trained on extensive datasets and can generate contextually relevant responses, making conversations with chatbots more engaging and human-like.

Multichannel and multimodal capabilities. Chatbots are no longer limited to a single platform or interface, as they can operate seamlessly across channels like websites, messaging apps, and mobile apps. They have also expanded beyond text-based interactions and now support multimodal inputs, including images and voice, though these features are often behind a paywall. This gives users the freedom to engage through different mediums.

Continuous learning and adaptability. Chatbots use reinforcement learning and user feedback to refine their responses over time and better meet user needs. For LLM-based chatbots such as ChatGPT, this feedback is generally incorporated in later rounds of training rather than learned live during a conversation.

Industry applications. Chatbots have found extensive applications across industries. For instance, Airbnb uses chatbots to help users with FAQs, resolve booking issues, and find accommodation, while Duolingo uses a chatbot to simulate conversations and give feedback to foreign-language learners. Chatbots are also used in financial services, healthcare, and ecommerce. Doing well in these use cases usually requires giving the bots domain-specific knowledge.

Integration with backend systems. Chatbots are now being integrated with backend systems and databases. This gives them access to current information, which helps them provide accurate, up-to-date answers to user queries.

As a result of all these developments, we now have far more intelligent chatbots that are capable of handling several tasks on different scales, ranging from booking a reservation at your favorite restaurant, or performing extensive research on various topics with references, to solving technical issues in software development. Some of the most popular chatbots that we have today include Google’s Bard, Microsoft’s Bing Chat, and OpenAI’s ChatGPT, all of which are powered by large language models.

ChatGPT

ChatGPT (Chat Generative Pre-trained Transformer) is an AI program built on a transformer-based language model. It was trained on vast amounts of text data and code, and it can generate natural language responses, write code, translate languages, and more. It was developed by OpenAI and launched on November 30, 2022. Within a few days of the launch, it had over a million users, and by January 2023 it had an estimated 100 million monthly active users. This rapid growth also brought AI back into the spotlight as a trending topic among people and companies alike.

ChatGPT is built on GPT-3.5 and GPT-4, both of which are products of OpenAI’s proprietary series of foundational GPT models.

Features of ChatGPT

ChatGPT has a lot of amazing features, some of which we’ll look at below:

Natural language understanding. Because of the data it was trained on, ChatGPT can generate human-like text, which makes conversations feel natural and engaging. It can understand and respond across a wide range of topics, handling both open-ended discussions and specific queries.

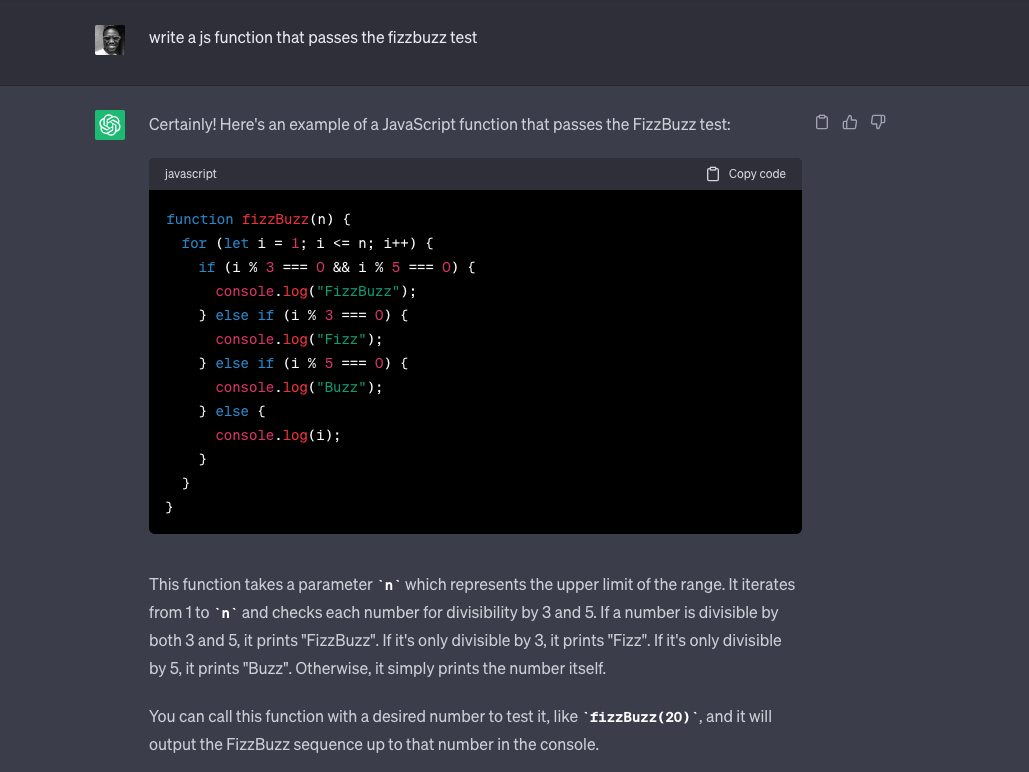

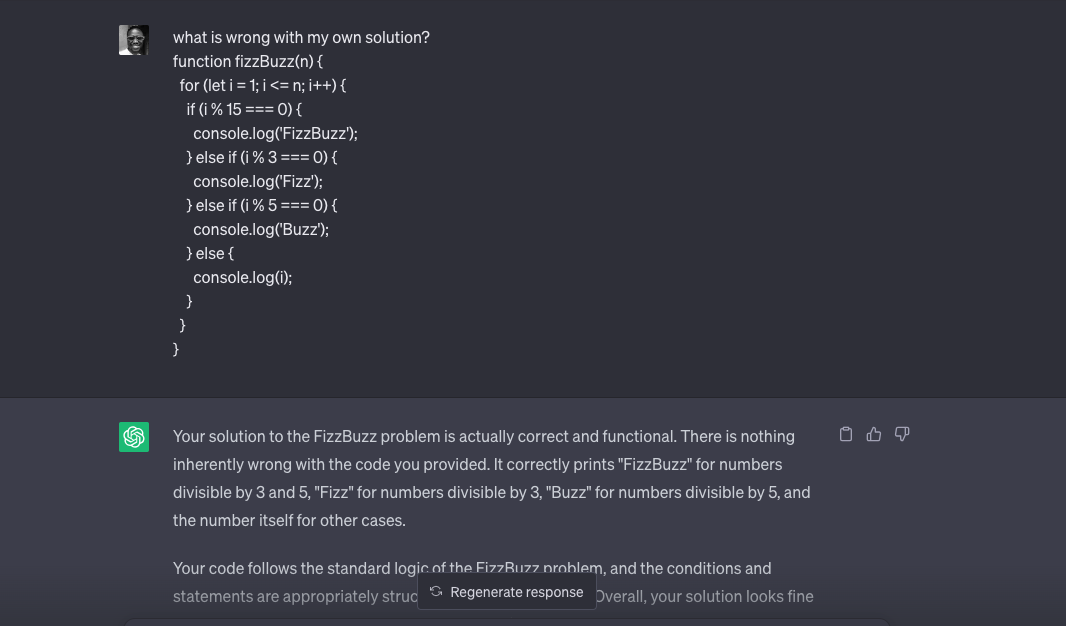

Knowledge of code. ChatGPT was trained on a vast amount of text data and code, including programming languages, code snippets, documentation, and discussions on platforms like GitHub and Stack Overflow. This lets it understand and write code in several languages. For example, it can solve the FizzBuzz test, a popular interview question that involves looping through a range of numbers and printing “Fizz” for multiples of 3, “Buzz” for multiples of 5, “FizzBuzz” for multiples of both (such as 15 and 30), and the number itself otherwise. This capability is closely related to OpenAI Codex, a system that translates natural language into code.
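For comparison, here’s one straightforward Python solution to FizzBuzz that can serve as a reference when checking a chatbot’s answer. It’s our own sketch, not ChatGPT’s output.

```python
def fizzbuzz(n: int) -> str:
    """Return the FizzBuzz word for n."""
    if n % 15 == 0:  # multiple of both 3 and 5: must be checked first
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


if __name__ == "__main__":
    for i in range(1, 16):
        print(fizzbuzz(i))
```

Checking 15 first matters: otherwise a number divisible by both 3 and 5 would be caught by the “Fizz” branch, a classic bug worth looking for in generated answers.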

The image below shows a ChatGPT FizzBuzz response.

Contextual responses. One of ChatGPT’s best features is its ability to respond based on the flow of the current conversation. Earlier messages in the same conversation are passed back to the model as context, so later responses can build on what the user has already said.
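The language model itself doesn’t remember anything between requests. A common approach, sketched below under stated assumptions, is for the chat application to send the recent conversation along with each new message and to drop the oldest turns once a length budget (the model’s context window) is exceeded. The role/content message format loosely mirrors chat-style APIs, while the `build_context()` helper, the word-count “tokenizer”, and the 50-token budget are hypothetical simplifications.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: count whitespace-separated words."""
    return len(text.split())


def build_context(history, new_message, max_tokens=50):
    """Append the new message, then drop the oldest turns until the total fits."""
    messages = history + [{"role": "user", "content": new_message}]  # copy, don't mutate
    while len(messages) > 1 and sum(count_tokens(m["content"]) for m in messages) > max_tokens:
        messages.pop(0)  # forget the oldest turn first
    return messages


history = [
    {"role": "user", "content": "My name is Ada"},
    {"role": "assistant", "content": "Nice to meet you, Ada!"},
]
print(build_context(history, "What is my name?"))                 # all three turns fit
print(build_context(history, "What is my name?", max_tokens=10))  # oldest turn dropped
```

With the tighter budget, the user’s introduction falls out of the context, which is why long conversations can “forget” details mentioned early on.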

The image below shows a ChatGPT contextual response.

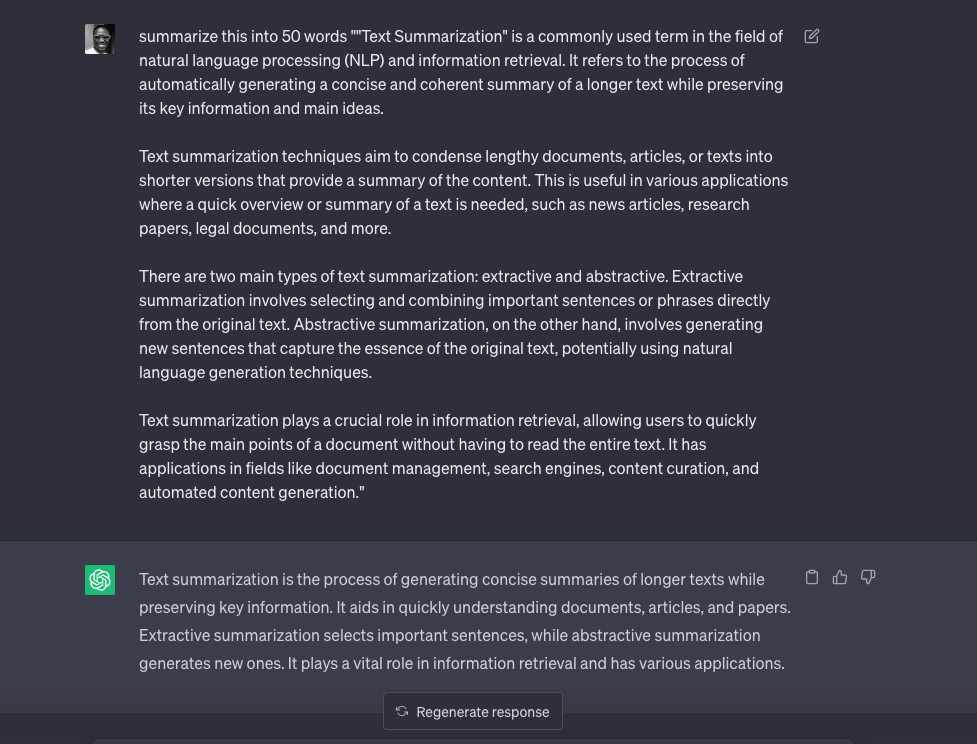

Text summarization. Another popular feature of ChatGPT is the ability to take lengthy text, documents, or articles and provide a summary, while also preserving key information and ideas.

The image below shows ChatGPT summarizing an article.

While the free version of ChatGPT only has knowledge of information gathered up until 2021, users who want up-to-date information from the Web, along with other benefits like plugins, can get it on ChatGPT Plus, a premium version that costs $20 per month. Some of the benefits of ChatGPT Plus include:

Greater availability. ChatGPT Plus users get general access to the service, even during periods of high demand.

Access to new features. As ChatGPT gains new features, paying users get access to these improvements before anyone else.

Access to GPT-4. The free version of ChatGPT is currently powered by GPT-3.5, which has proven to be a very powerful model. However, OpenAI also offers GPT-4, a more capable language model that’s only available on the paid version of the app.

Limitations of ChatGPT

As amazing as ChatGPT is, there’s still room for improvement:

Input sensitivity. ChatGPT is sensitive to how user input is phrased, so the same request can produce different responses. For instance, the model may claim not to know the answer to a question phrased one way, yet answer correctly when the same question is slightly rephrased.

Lack of updated data. The free version of ChatGPT doesn’t have access to the Internet and, as such, it’s only capable of providing information regarding certain topics up until September 2021. However, users can get access to up-to-date information by upgrading to ChatGPT Plus.

Verbosity. The model is often excessively verbose and overuses certain phrases. These issues can be attributed to biases in the training data: trainers tended to prefer longer responses because they looked more comprehensive.

Inability to ask clarifying questions. When a request is ambiguous or unclear, the model usually doesn’t ask the user for clarification. Instead, it guesses what the user intended.

Bing Chat

Bing Chat is an AI-powered chatbot built on OpenAI’s next-generation large language model, GPT-4, which Microsoft describes as faster, more accurate, and more capable than GPT-3 and GPT-3.5. It launched with the new version of Bing announced on February 7, 2023, and it’s currently only available to Microsoft Edge users, as it comes integrated with Bing Search.

Bing Chat Features

Some of Bing Chat’s features are listed below:

Multilingual support. Users of Bing Chat aren’t limited to a particular language, as it comes with support for several languages both via voice input and text.

Up-to-date information. Bing Chat is integrated with Bing, a search engine owned by Microsoft, so it has access to the Internet. This makes it possible to provide its users with updated information in real time.

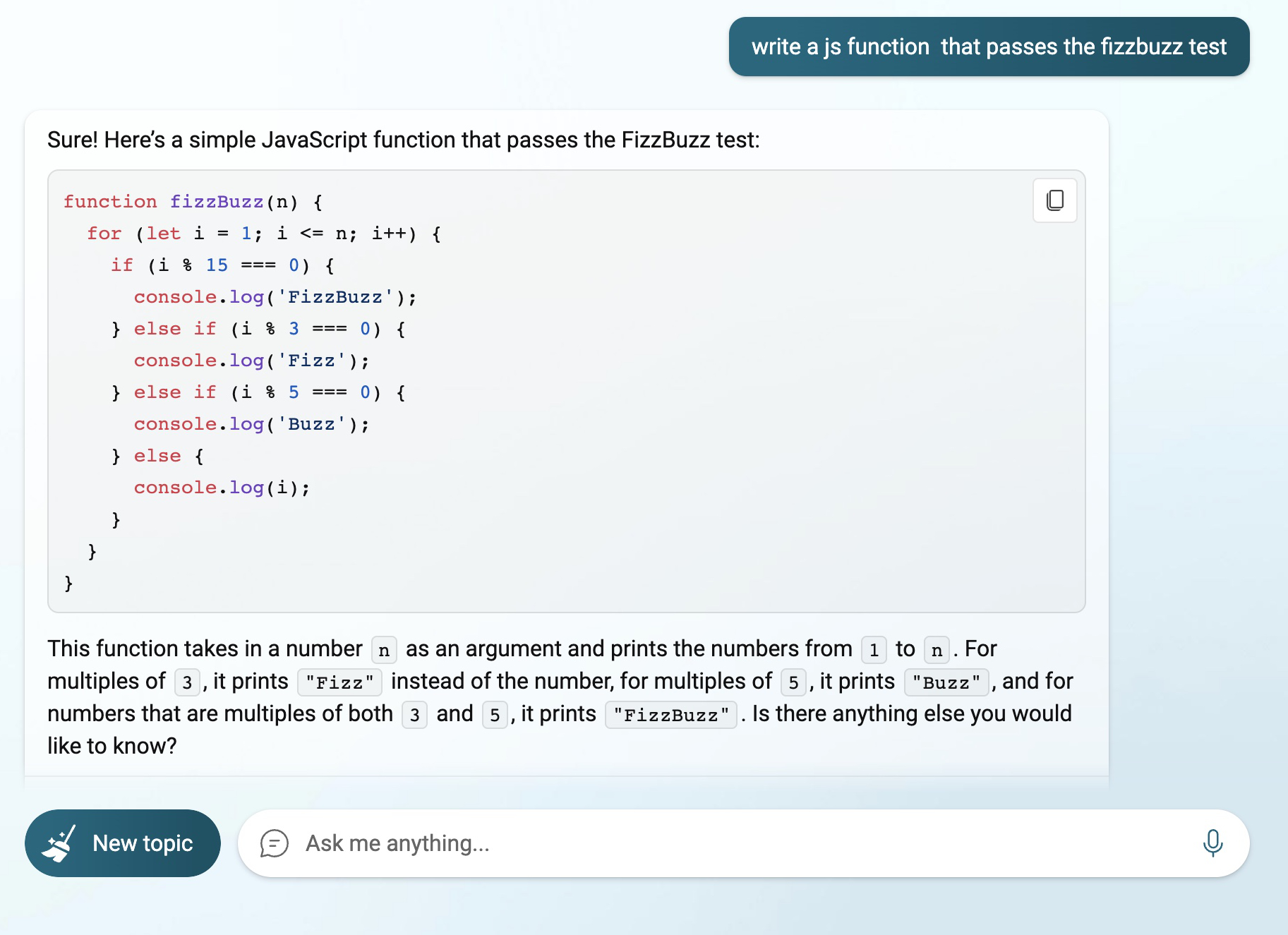

Code generation. Another exciting feature of Bing Chat is the ability to both read and write code. This is because it’s powered by a language model that’s been trained on a large dataset that includes examples of code in various programming languages.

The image below shows Bing Chat attempting the FizzBuzz test.

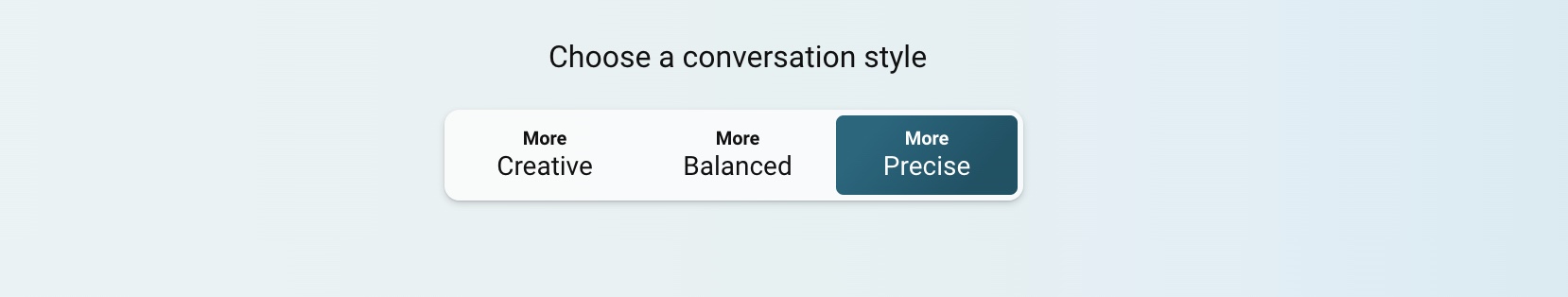

Conversation style. As part of the flexibility of Bing Chat, users can choose a conversation style depending on what they’re trying to achieve. For instance, “More Creative” will work best when trying to draft a cover letter, while “More Precise” will be a better option when conducting research for work or an assignment.
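Microsoft hasn’t publicly documented how these conversation styles work internally, so the following only illustrates one common knob for trading predictability against variety in text generation: sampling temperature. Dividing the model’s scores (logits) by a low temperature concentrates probability on the top choice, which suits precise answers, while a higher temperature spreads it out and produces more varied text. The candidate words, logits, and helper names are made-up assumptions.

```python
import math
import random


def temperature_probs(logits, temperature):
    """Convert logits to probabilities after dividing them by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_word(words, logits, temperature, rng):
    """Pick one word at random, weighted by the temperature-scaled probabilities."""
    probs = temperature_probs(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical next-word candidates and model scores.
words = ["reliable", "robust", "delightful"]
logits = [3.0, 2.0, 0.5]

for t in (0.2, 1.0, 2.0):
    print(t, [round(p, 3) for p in temperature_probs(logits, t)])

rng = random.Random(42)  # seeded so the run is reproducible
print([sample_word(words, logits, 2.0, rng) for _ in range(5)])
```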

The image below shows Bing Chat conversation options.

Limitations of Bing Chat

As with ChatGPT, there’s still room for Bing Chat to improve:

Limited number of questions. Bing Chat users currently have a cap on the number of questions they can ask. This limit was introduced after multiple users reported that it had been giving inaccurate or rude answers. Users are currently limited to 30 chats per session and 200 chats per day, and once the limit is exceeded, they’re prompted to try again in 24 hours.

Only available to Microsoft Edge users. Bing Chat currently lives inside the Microsoft Edge browser and can only be accessed from there, so users of other browsers can’t take advantage of its features.

Bard

Bard is an AI-powered chatbot developed by Google AI. It was originally powered by the Language Model for Dialogue Applications (LaMDA) family of large language models and was later updated to use PaLM 2 (Pathways Language Model). PaLM 2 is a state-of-the-art, Transformer-based language model with improved multilingual, reasoning, and coding capabilities. It was trained using a mixture of objectives similar to UL2 (Unified Language Learner), a language pre-training paradigm designed to make language models more effective across a wide range of settings and datasets.

Bard was first released on March 21, 2023, in a limited capacity depending on users’ location, before it was finally opened to the general public on May 10, 2023.

Bard Features

Bard has a lot of interesting features and capabilities, some of which are listed below:

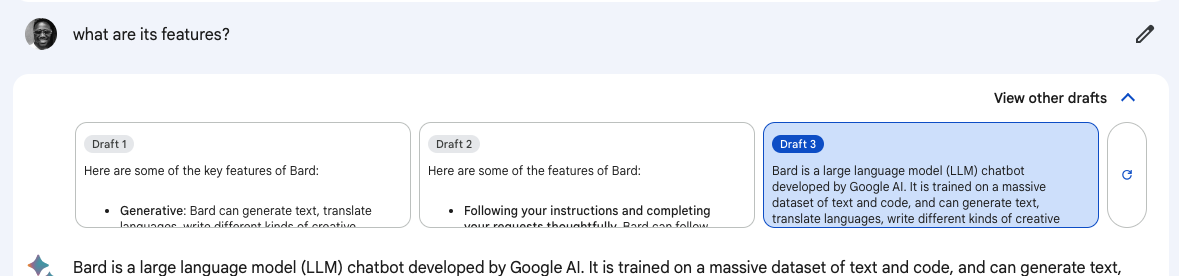

Multiple response options. Bard lets users switch between several alternative responses without having to rephrase their message. Seeing a variety of answers to the same question or request can give users a different, clearer understanding of the topic.

The image below shows Bard offering multiple answers.

Voice input. Bard offers a voice feature where its users can interact using their voice. This feature comes in handy when trying to dictate a lengthy sentence, and it’s also good for accessibility.

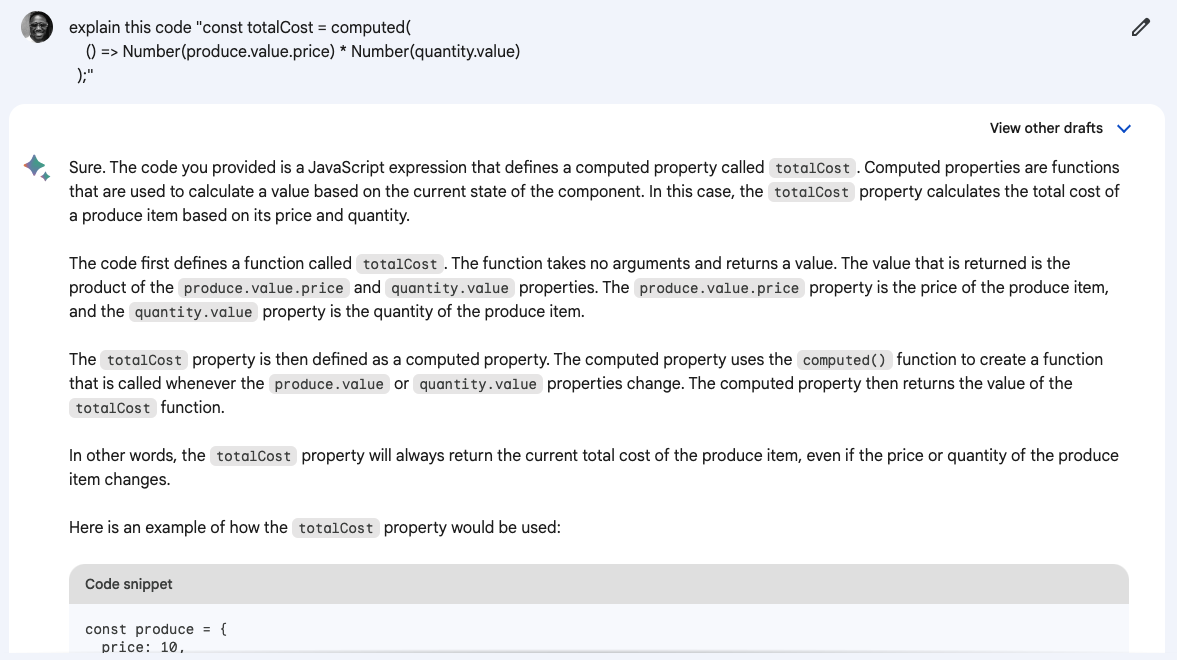

Code explanation. Bard can also serve as a pair-programming partner, as it’s capable of understanding and explaining code snippets. Its explanations may not always be accurate, but they can serve as a good starting point for understanding a block of code.

The image below shows Bard explaining a code snippet.

Limitations of Bard

Since its release, Bard has proven to be quite useful as both a research assistant and a personal assistant due to the number of features it comes with, but it’s still under development and has some limitations. A few of those limitations are listed below:

Bias. Bard was trained on a data set of text and code that may contain biases, so its responses can be biased too.

Inconsistencies. Bard has the potential to deliver inconsistent and inaccurate responses, which can lead to user confusion. It’s important for users to be mindful of these inconsistencies and to exercise critical evaluation when considering the reliability of information obtained from Bard.

Security. Bard can generate content that may be harmful or offensive, depending on the user’s input. To ensure responsible use, it’s important to exercise caution and be aware of Bard’s limitations.

Wrapping Up

Chatbots have come a long way since ELIZA, the first chatbot. In the last few years, AI and machine learning have developed rapidly, producing more advanced language models and NLP techniques. Despite these developments, chatbots are still far from perfect, and issues such as hallucinations remain a concern. But one thing is clear: this space will keep developing and improving.

In the next tutorial, we’ll explore how we can enhance our learning and skill acquisition using the latest advancements in AI and chatbot technologies.