What is Deno?

Section 1: Getting Familiar with Deno

In this section, you will get to know what Deno is, why it was created, and how it was created. This section will help you set up the environment and get familiar with the ecosystem and available tooling.

This section contains the following chapters:

- Chapter 1, What Is Deno?

- Chapter 2, The Toolchain

- Chapter 3, The Runtime and Standard Library

Deno is a secure runtime for JavaScript and TypeScript. I'd guess you're feeling the excitement that comes with experimenting with a new tool. You have worked with JavaScript or TypeScript and have at least heard of Node.js. Deno should feel like it has the right amount of novelty for you while, at the same time, offering things that will sound familiar to anyone working in this ecosystem.

Before we start getting our hands dirty, we'll look at how Deno was created and what motivated it. Doing that will help us learn and understand it better.

We'll be focusing on practical examples throughout this book. We'll be writing code and then rationalizing and explaining the underlying decisions we've made. If you come from a Node.js background, some of the concepts might sound familiar to you. We will also explain Deno and compare it with its ancestor, Node.js.

Once the fundamentals are in place, we'll dive into Deno and explore its runtime features by building small utilities and real-world applications.

Without Node, there would be no Deno. To understand the latter well, we can't ignore its 10+ year-old ancestor, which is what we'll look at in this chapter. We'll explain the reasons for its creation back in 2009 and the pain points that were detected after a decade of usage.

After that, we'll present Deno and the fundamental differences and challenges it proposes to solve. We'll have a look at its architecture, some principles and influences of the runtime, and the use cases where it shines.

After understanding how Deno came to life, we will explore its ecosystem, its standard library, and some use cases where Deno is instrumental.

Once you've read this chapter, you'll be aware of what Deno is and what it is not, why it is not the next version of Node.js, and what to think about when you're considering Deno for your next project.

In this chapter, we'll cover the following topics:

- A little history

- Why Deno?

- Architecture and technologies that support Deno

- Grasping Deno's limitations

- Exploring Deno's use cases

Let's get started!

A little history

Deno's first stable version, v1.0.0, was launched on May 13, 2020.

Ryan Dahl, the creator of Node.js, first mentioned Deno in his famous talk, 10 Things I Regret About Node.js (https://youtu.be/M3BM9TB-8yA). Besides presenting a very early alpha version of Deno, the talk is worth watching as a lesson in how software ages. It is an excellent reflection on how decisions evolve, even when they're made by some of the smartest people in the open source community, and how they can end up somewhere different from what was initially planned.

Since its launch in May 2020, Deno has been getting lots of attention, thanks to its historical background, its core team, and its appeal to the JavaScript community. That's probably how you've heard about it, be it via blog posts, tweets, or conference talks.

This enthusiasm is having positive consequences for the runtime, with lots of people wanting to contribute to it and use it. The community's growth is visible in its Discord channel (https://discord.gg/deno) and in the number of pull requests on Deno's repositories (https://github.com/denoland). Deno is currently evolving at a cadence of one minor version per month, with lots of bug fixes and improvements being shipped. The roadmap shows a vision for a future that is no less exciting than the present. With a well-defined path and set of principles, Deno has everything it takes to become more significant by the day.

Let's rewind a little and go back to 2009 and the creation of Node.js.

At the time, Ryan started by questioning how most backend languages and frameworks dealt with I/O (input/output). Most tools treated I/O as a synchronous operation, blocking the process until it was done and only then continuing to execute the code.

Fundamentally, it was this synchronous blocking operation that Ryan questioned.

Handling I/O

When you are writing servers that must deal with thousands of requests per second, resource consumption and speed are two significant factors.

For such resource-critical projects, it is important that the base tools (the primitives) have an architecture that accounts for this. When the time comes to scale, it helps if the fundamental decisions you made at the beginning support it.

Web servers are one of those cases. The web is a significant platform in today's world. It never stops growing, with more devices and new tools accessing the internet daily, making it accessible to more people. The web is the common, democratized, decentralized ground for people around the world. With this in mind, the servers behind those applications and websites need to handle giant loads. Web applications such as Twitter, Facebook, and Reddit, among many others, deal with thousands of requests per minute. So, scale is essential.

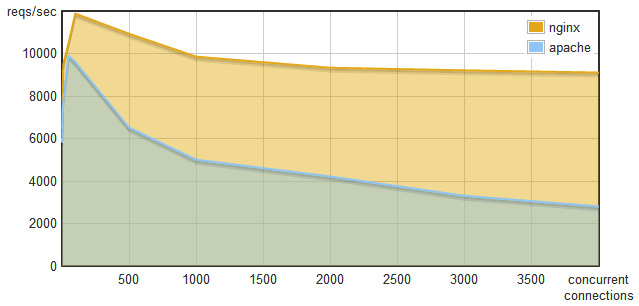

To kickstart a conversation about performance and resource efficiency, let's look at the following graph, which is comparing two of the most used open-source web servers: Apache and Nginx:

Figure 1.1 – Requests per second versus concurrent connections – Nginx versus Apache

At first glance, this tells us that Nginx comes out on top pretty much every time. We can also understand that, as the number of concurrent connections increases, Apache's number of requests per second decreases. Comparatively, Nginx keeps the number of requests per second pretty stable, despite also showing an expected drop in requests per second as the number of connections grows. After reaching a thousand concurrent connections, Nginx gets close to double the number of Apache's requests per second.

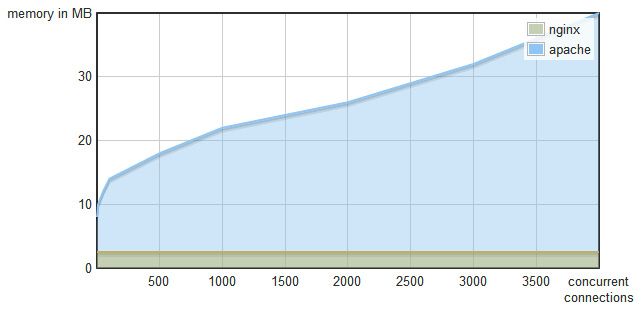

Let's look at a comparison of memory (RAM) consumption:

Figure 1.2 – Memory consumption versus concurrent connections – Nginx versus Apache

Apache's memory consumption grows linearly with the number of concurrent connections, while Nginx's memory footprint is constant.

You might already be wondering why this happens.

This happens because Apache and Nginx have very different ways of dealing with concurrent connections. Apache spawns a new thread per request, while Nginx uses an event loop.

In a thread-per-request architecture, the server creates a thread every time a new request comes in. That thread is responsible for handling the request until it finishes. If another request arrives while the previous one is still being handled, a new thread is created.

On top of this, handling networking in threaded environments is not particularly easy. You can run into file and resource locking, thread communication issues, and common problems such as deadlocks. Beyond the difficulties for the developer, threads do not come for free: each one carries its own resource overhead.

In contrast, in an event loop architecture, everything happens on a single thread. This decision dramatically simplifies the lives of developers. You do not have to account for the factors mentioned previously, which means more time to deal with your users' problems.

With this pattern, the web server just sends events to the event loop, an asynchronous queue that executes operations when resources are available and returns to the code asynchronously when those operations finish. For this to work, all operations need to be non-blocking: they must not wait for completion; instead, they send an event and handle the response later.
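To make the idea concrete, here is a deliberately simplified, single-threaded event loop in TypeScript. It is a sketch for illustration only: the `ToyEventLoop` class and its `readFile` method are hypothetical, and the simulated "I/O" simply completes as a queued callback, whereas a real runtime hands the work to the operating system:

```typescript
type Task = () => void;

// A toy, single-threaded event loop: one queue, one task at a time,
// each task running to completion before the next one starts.
class ToyEventLoop {
  private queue: Task[] = [];

  // Schedule a callback to run on a later turn of the loop.
  enqueue(task: Task): void {
    this.queue.push(task);
  }

  // Simulated non-blocking I/O: register the callback and return at once;
  // the "result" is delivered later as a new event on the queue.
  readFile(path: string, onDone: (contents: string) => void): void {
    this.enqueue(() => onDone(`<contents of ${path}>`));
  }

  // Drain the queue; tasks may schedule further tasks while it runs.
  run(): void {
    let task = this.queue.shift();
    while (task !== undefined) {
      task();
      task = this.queue.shift();
    }
  }
}

const loop = new ToyEventLoop();
const log: string[] = [];

// Two "requests" arrive; each starts a read without waiting for it.
for (const id of [1, 2]) {
  loop.enqueue(() => {
    log.push(`request ${id}: start read`);
    loop.readFile(`./file-${id}.txt`, (contents) => {
      log.push(`request ${id}: got ${contents}`);
    });
  });
}

loop.run();
console.log(log.join("\n"));
```

Both reads start before either one completes, even though only one thread ever runs. That interleaving is what lets an event-loop server keep many requests in flight without creating a thread per request.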

Blocking versus non-blocking

Take, for instance, reading a file. In a blocking environment, you would read the file and have the process waiting for it to finish until you execute the next line of code. While the operating system is reading the file's contents, the program is in an idle state, wasting valuable CPU cycles:

```javascript
// Blocking: nothing else runs until the file has been read
const result = readFile('./README.md');
// Use result
```

The program will wait for the file to be read, and only then will it continue executing the code.

The same operation using an event loop would be to trigger the "read the file" event and execute other tasks (for instance, handling other requests). When the file reading operation finishes, the event loop will call the callback function with the result. This time, the runtime uses the CPU cycles to handle other requests while the OS retrieves the file's contents, making better use of the resources:

```javascript
// Non-blocking: the call returns immediately; the callback runs later
readFileAsync('./README.md', function (result) {
  // Use result
});
```

In this example, the task gets a callback assigned to it. When the job is complete (this might take seconds or milliseconds), it calls the function back with the result. When this function is called, the code inside it runs linearly.
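The callback pattern can be tried out without touching the disk. In this minimal TypeScript sketch, `readFileAsync` and the in-memory `fakeDisk` are hypothetical stand-ins (there is no such built-in function), and `setTimeout` simulates the time the operating system needs to read the file:

```typescript
// Hypothetical in-memory "disk" standing in for the real filesystem.
const fakeDisk = new Map<string, string>([["./README.md", "# Hello, Deno"]]);

// Hypothetical non-blocking reader: it returns immediately and invokes the
// callback later; setTimeout simulates the time the OS needs for the read.
function readFileAsync(
  path: string,
  callback: (result: string) => void,
): void {
  setTimeout(() => callback(fakeDisk.get(path) ?? ""), 10);
}

const events: string[] = [];

readFileAsync("./README.md", function (result) {
  // Runs on a later turn of the event loop, once the "read" has completed.
  events.push(`read finished: ${result}`);
});

// Runs straight away: the call above did not block the thread.
events.push("handled another request");
```

Right after these lines run, `events` contains only "handled another request"; the read result is appended roughly 10 milliseconds later, when the event loop invokes the callback.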

Why aren't event loops used more often?

Now that we understand the advantages of event loops, this is a very plausible question. One of the reasons event loops are not used more, even though there are some implementations in Python and Ruby, is that they require all the infrastructure and code to be non-blocking. Being non-blocking means being prepared not to execute the code synchronously. It means triggering events and dealing with the result later, at some point in time.

On top of all that, many commonly used languages and libraries do not provide asynchronous APIs. Callbacks are not present in many languages, and anonymous functions do not exist in programming languages such as C. Crucial pieces of today's software, such as libmysqlclient, do not support asynchronous operations, even though parts of their internals might use asynchronous task execution. Asynchronous DNS resolution is another example of something that is not standard in many systems. The manual pages of operating systems are yet another example: most of them don't even tell us whether a particular function does I/O. All of this is evidence that the ability to perform asynchronous I/O is missing from many of today's fundamental pieces of software.

Even the existing tools that provide these features require developers to have a deep understanding of asynchronous I/O patterns to use event loops. It's a difficult job to wire up these existing solutions to get something to work while going around technical limitations, such as the ones shown in the libmysqlclient example.

JavaScript to the rescue

JavaScript is a language created by Brendan Eich in 1995 while he was working at Netscape. It initially ran only in browsers and allowed developers to add interactive features to web pages. It is composed of elements that turned out to be a perfect fit for the event loop:

- It has anonymous functions and closures.

- It only executes one callback at a time.

- I/O in the DOM is done via callbacks (for example, addEventListener).

Combining these three fundamental aspects of the language made the event loop something natural to anyone used to JavaScript in the browser.

The language's features ended up gearing its developers toward event-driven programming.

Node.js enters the scene

After all these thoughts and questions about I/O and how it should be handled, Ryan Dahl came up with Node.js in 2009: a JavaScript runtime, built on Google's V8 JavaScript engine, that brought JavaScript to the server.

Node.js is asynchronous and single-threaded by design. It has an event loop at its core and presents itself as a scalable way to develop backend applications that handle thousands of concurrent requests.

Event loops provide a clean way to deal with concurrency, a topic where Node.js contrasts with tools such as PHP or Ruby, which use the thread-per-request model. This single-threaded environment grants Node.js users the simplicity of not having to worry about thread-safety problems. Node.js very much succeeds in abstracting the event loop, and all the issues with synchronous tools, away from the user, requiring little to no knowledge of the event loop itself. It does this by leveraging callbacks and, more recently, promises.
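As a sketch of that shift from callbacks to promises, the following TypeScript wraps a hypothetical callback-based `readFileAsync` (written in the error-first callback style common in Node.js) in a Promise so that it can be consumed with async/await. Node.js ships a helper for this, `util.promisify`; the hand-written wrapper here only shows what such a helper does:

```typescript
// Hypothetical callback-based API using the error-first convention:
// callback(error) on failure, callback(null, result) on success.
function readFileAsync(
  path: string,
  callback: (error: Error | null, result?: string) => void,
): void {
  // setTimeout simulates the delay of real, non-blocking I/O.
  setTimeout(() => {
    if (path === "./README.md") callback(null, "# Hello, Deno");
    else callback(new Error(`not found: ${path}`));
  }, 10);
}

// Wrap the callback API in a Promise: success resolves, failure rejects.
function readFilePromise(path: string): Promise<string> {
  return new Promise<string>((resolve, reject) => {
    readFileAsync(path, (error, result) => {
      if (error) reject(error);
      else resolve(result ?? "");
    });
  });
}

// With async/await the code reads top to bottom, yet the thread is never
// blocked while waiting for the file.
async function main(): Promise<string> {
  const contents = await readFilePromise("./README.md");
  return contents.toUpperCase();
}

main().then((text) => console.log(text));
```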

Node.js positioned itself as a way to provide a low-level, purely evented, non-blocking infrastructure for users to program their applications.

Node.js' rise

Telling companies and developers that they could leverage their JavaScript knowledge to write servers rapidly made Node.js' popularity rise quickly.

Soon after its release, Node.js was evolving fast and being used in production by companies of all sizes.

Just 2 years after its creation, in 2011, Uber and LinkedIn were already running JavaScript on the server. In 2012, Ryan Dahl resigned from the Node.js community's day-to-day operations to dedicate himself to research and other projects.

Estimates say that, in 2017, there were more than 8.8 million instances of Node.js running (source: https://blog.risingstack.com/history-of-node-js/). Today, more than 103 billion packages have been downloaded from Node Package Manager (npm), and there are around 1,467,527 packages published.

Node.js is a great platform; there's no question about that. Pretty much anyone who has used it has experienced many of its advantages. Popularity and community play a significant role in this. Having a lot of people of very different experience levels and backgrounds working with a piece of technology can only push it forward. That's what happened, and still happens, with Node.js.

Node.js enabled developers to use JavaScript for lots of varying use cases that weren't possible previously. This ranged from robotics, to cryptocurrencies, to code bundlers, APIs, and more. It is a stable environment where developers feel productive and fast. It will continue its job, supporting companies and businesses of different sizes for many years to come.

But you've bought this book, so you must believe that Deno has something worth exploring, and I can guarantee that it does.

You might be wondering, why bring a new solution to the table when the previous one is more than satisfactory? That's what we'll discover next.

Why Deno?

Many things have changed since Node.js was created. More than a decade has passed, and both JavaScript and the software infrastructure community have evolved. Languages such as Rust and golang were born and were very important developments in the software community. These languages made it much easier to produce native machine code while providing a strict and reliable environment for developers to work in.

However, this strictness comes at the cost of productivity. Not that developers don't feel productive writing those languages, because they do, but you can easily argue that productivity is a subject where dynamic languages clearly shine.

The ease and speed of developing in dynamic languages make them very strong contenders when it comes to scripting and prototyping. And when it comes to dynamic languages, JavaScript comes to mind directly.

JavaScript is the most used dynamic language and runs on every device with a web browser. Due to its heavy usage and giant community, a lot of effort has gone into optimizing it. Standards organizations such as Ecma International have ensured that the language evolves stably and carefully.

As we saw in the previous section, Node.js played a very successful role in bringing JavaScript to the server, opening the door to a huge number of different use cases. It's currently used for many different tasks, including web development tooling, creating web servers, and scripting, among many others. At the time of its creation, and to enable such use cases, Node.js had to invent concepts for JavaScript that didn't exist before. Later, these concepts were discussed by the standards organizations and added to the language differently, making parts of Node.js incompatible with its mother language, ECMAScript. A decade has passed, and ECMAScript has evolved, as well as the ecosystem around it.

CommonJS modules are no longer the standard; JavaScript has ES modules now. TypedArrays are now a thing, and JavaScript can finally handle binary data directly. Promises and async/await are the go-to way to handle asynchronous operations.
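A quick illustration of what TypedArrays make possible: this minimal TypeScript sketch turns text into UTF-8 bytes and writes 16-bit integers into an `ArrayBuffer` with an explicit byte order. `Uint8Array`, `ArrayBuffer`, and `DataView` are part of ECMAScript; `TextEncoder` is a web API that Deno (and current Node.js versions) expose as a global:

```typescript
// Text to raw UTF-8 bytes: TextEncoder.encode returns a Uint8Array.
const bytes: Uint8Array = new TextEncoder().encode("Deno");
const textBytes = Array.from(bytes); // [68, 101, 110, 111]

// A DataView reads and writes numbers of a given width and byte order
// inside an ArrayBuffer, which is how binary formats are typically parsed.
const scratch = new ArrayBuffer(4);
const view = new DataView(scratch);
view.setUint16(0, 0x1234); // big-endian (the default): 0x12, 0x34
view.setUint16(2, 0x1234, true); // little-endian: 0x34, 0x12

const raw = Array.from(new Uint8Array(scratch)); // [0x12, 0x34, 0x34, 0x12]

// Reading back with the matching byte order recovers the original number.
const roundTrip = view.getUint16(2, true); // 0x1234
console.log(textBytes, raw, roundTrip);
```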

These features are available on Node.js, but they must coexist with the non-standard features that were created back in 2009 that still need to be maintained. These features, and the large number of users that Node.js has, made it difficult and slow to evolve the system.

To solve some of these problems, and to keep up with the evolution of the JavaScript language, many community projects were created. These projects made it possible for us to use the latest features of the language but added things such as a build system to many Node.js projects, heavily complicating them. Quoting Dahl, it "took away from the fun of dynamic language scripting."

More than 10 years of heavy usage also made it clear that some of the runtime's fundamental constructs needed improvement. The lack of a security sandbox was one of the major issues. At the time Node.js was created, it made it possible for JavaScript to access the "outside world" by creating bindings in V8, the JavaScript engine behind it. Even though these bindings enabled I/O features such as reading from the filesystem and accessing the network, they also broke the purpose of the JavaScript sandbox. This decision made it really hard to let developers control what a Node.js script has access to. In its current state, for instance, there's nothing preventing a third-party package in a Node.js script from reading all the files the user has access to, among other nefarious actions.

A decade later, Ryan Dahl and the team behind Deno were missing a fun and productive scripting environment that could be used for a wide range of tasks. The team also felt that the JavaScript landscape had changed enough that it was worth simplifying, and so they decided to create Deno.

Presenting Deno

"Deno is a simple, modern, and secure runtime for JavaScript and TypeScript that uses V8 and is built in Rust." – https://deno.land/

Deno's name was constructed by inverting the syllables of its ancestor's name, no-de, de-no. With a lot of lessons learned from its ancestor, Deno presents the following as its main features:

- Secure by default

- First-class TypeScript support

- A single executable file

- Provides fundamental tools to write applications

- Complete and audited standard library

- Compatibility with ECMAScript and browser environments

Deno is secure by default, and it was created like that by design. It ultimately leverages the V8 sandbox and provides a strict permission model that enables developers to finely control what the code has access to.
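One way to picture the permission model is as an allow-list checked before every sensitive operation. The TypeScript below is a toy model of path-scoped read access, loosely mirroring the idea behind a flag such as `--allow-read=/app/data`. The `ReadPermissions` class is hypothetical and is not how Deno implements permissions internally:

```typescript
// Toy model of path-scoped read permissions, loosely mirroring the idea of
// `--allow-read=<path>`. Hypothetical; not Deno's internal implementation.
class ReadPermissions {
  // Granted directories, normalized to have no trailing slash.
  private readonly granted: string[];

  constructor(granted: string[]) {
    this.granted = granted.map((dir) => dir.replace(/\/+$/, ""));
  }

  // Readable if the path is a granted directory or lies inside one.
  // Checking `dir + "/"` stops "/app/database" from matching a grant for
  // "/app/data". Assumes absolute, already-normalized paths: a real
  // implementation must resolve ".." segments and symlinks first.
  canRead(path: string): boolean {
    return this.granted.some(
      (dir) => path === dir || path.startsWith(`${dir}/`),
    );
  }
}

const perms = new ReadPermissions(["/app/data/"]);
console.log(perms.canRead("/app/data/users.json")); // true
console.log(perms.canRead("/etc/passwd")); // false
```

In Deno itself, a script started without any `--allow-*` flags is granted none of these permissions; the toy model's equivalent is `new ReadPermissions([])`, for which `canRead` always returns false.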

TypeScript also has first-class support, meaning developers can choose to use TypeScript without any extra configuration. All the Deno APIs are also written in TypeScript and thus have correct and precise types and documentation. The same is true for the standard library.

Deno ships a single executable with all the fundamental tools needed to write applications; it will always be that way. The team makes an effort to keep the executable small (~15 MB) so that we can use it in various situations and environments, from simple scripts to full-fledged applications.

More than just executing code, the Deno binary provides a complete set of developer utilities, namely a linter, a formatter, and a test runner.

Golang's carefully polished standard library inspired Deno's standard library. It is deliberately bigger and more complete than Node.js'. This decision was made to address the enormous dependency trees that used to occur in some Node.js projects. Deno's core team believes that providing a stable and complete standard library can help address this problem: by covering common use cases out of the box, it aims to reduce the need for loads of third-party packages.

To keep compatibility with ES6 and browsers, Deno made efforts to mimic browser APIs. Things such as performing HTTP requests, dealing with URLs, or encoding text, among others, can be done by using the same APIs you'd use in a browser. A deliberate effort was made by the Deno team to keep these APIs in sync with the browser.
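As a small sketch of that overlap, the following TypeScript uses only web-standard classes (`URL`, `URLSearchParams`, `TextEncoder`, and `TextDecoder`), so the same lines should run unchanged in a browser console or in Deno. The URL is a placeholder. `fetch` follows the same idea but needs network access (in Deno, granted with `--allow-net`), so it is left out here:

```typescript
// Parse a URL and work with its query string using the URL API.
const url = new URL("https://example.com/search?q=deno&page=2");
const page = Number(url.searchParams.get("page")); // 2
url.searchParams.set("page", String(page + 1));
// url.href is now "https://example.com/search?q=deno&page=3"

// Encode a string to UTF-8 bytes and decode it back.
const encoded = new TextEncoder().encode("olá");
const decoded = new TextDecoder().decode(encoded);
// "á" takes two bytes in UTF-8, so encoded.length is 4.

console.log(url.hostname, url.href, encoded.length, decoded);
```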

Aiming to offer the best of three worlds, Deno provides the ease of prototyping and developer experience of JavaScript, the type safety and security offered by TypeScript, and Rust's performance and simplicity.

Ideally, as Dahl mentioned in one of his talks, code would take the following path from prototype to production: developers start by writing JavaScript, migrate to TypeScript, and end up with Rust code.

At the time of writing, it is only possible to run JavaScript and TypeScript. Rust is only available via a (still unstable) plugin API that might become stable in the not-so-distant future.

A web browser for command-line scripts

As time passed, the Node.js module system evolved into something that is now overly complex and painful to maintain. It takes into consideration edge cases such as importing folders, searching for dependencies, importing relative files, searching for index.js, third-party packages, and reading the package.json file, among others.

It also got heavily coupled with npm, the Node Package Manager, which was initially part of Node.js itself but separated in 2014.

Having a centralized package manager is not very webby, to use Dahl's words. The fact that millions of applications depend on a single registry to survive is a liability.

Deno solves this problem by using URLs. It takes an approach that's very similar to a browser's, only requiring an absolute URL to a file to execute or import code. This absolute URL can be local, remote, or HTTP-based, and it must include the file extension:

```typescript
import { serve } from 'https://deno.land/std@0.83.0/http/server.ts';
```

The preceding code happens to be the same code you would write in a browser, inside a `<script type="module">` tag, to import an ES module.
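Because specifiers are plain URLs, a relative import inside a remote module resolves against that module's own URL, the same way a browser resolves it. The standard `URL` constructor is enough to sketch that resolution step. The `resolveSpecifier` helper and the `./utils.ts` and `../io/helpers.ts` specifiers are hypothetical examples, not files claimed to exist in the standard library:

```typescript
// Hypothetical helper: resolve an import specifier against the URL of the
// module that contains the import statement.
function resolveSpecifier(specifier: string, importer: string): string {
  // In Deno (as in browsers), relative specifiers are written with a
  // leading "./" or "../"; this sketch does not validate that. Absolute
  // URLs come back unchanged from the URL constructor.
  return new URL(specifier, importer).href;
}

const serverModule = "https://deno.land/std@0.83.0/http/server.ts";

console.log(resolveSpecifier("./utils.ts", serverModule));
// https://deno.land/std@0.83.0/http/utils.ts
console.log(resolveSpecifier("../io/helpers.ts", serverModule));
// https://deno.land/std@0.83.0/io/helpers.ts
console.log(resolveSpecifier("https://example.com/mod.ts", serverModule));
// https://example.com/mod.ts
```

This covers only the resolution step; Deno then downloads and caches each resolved URL.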

In regard to installation and offline usage, Deno uses a local cache so that users don't have to worry about either. When the program runs, it downloads and caches all the required dependencies, removing the need for a separate installation step. We'll dive into this in more depth in Chapter 2, The Toolchain.
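Conceptually, the cache behaves like a map from module URL to source code that is filled on first use. The TypeScript below only models that behavior: `ModuleCache` and the injected `download` function are hypothetical, and everything is kept in memory, whereas Deno persists its cache on disk (running `deno info` prints where):

```typescript
// Hypothetical stand-in for an HTTP download of a module's source.
type Download = (url: string) => string;

// Model of a module cache: each URL is downloaded once, and every later
// import of the same URL is served from the local copy.
class ModuleCache {
  private readonly store = new Map<string, string>();
  private readonly download: Download;

  constructor(download: Download) {
    this.download = download;
  }

  load(url: string): string {
    const cached = this.store.get(url);
    if (cached !== undefined) return cached; // cache hit: no network needed
    const source = this.download(url); // cache miss: fetch and remember
    this.store.set(url, source);
    return source;
  }
}

let downloads = 0;
const cache = new ModuleCache((url) => {
  downloads++;
  return `// source of ${url}`;
});

const serverUrl = "https://deno.land/std@0.83.0/http/server.ts";
cache.load(serverUrl); // first run: downloads the module
cache.load(serverUrl); // later runs: served from the cache
console.log(downloads); // 1
```

A real cache also has to survive restarts and be refreshable on demand; Deno's `--reload` flag forces modules to be downloaded again.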

Now that we are comfortable with what Deno is and the problems it solves, we're in good shape to go beyond the surface. By knowing what is happening behind the scenes, we can get a better comprehension of Deno itself.

In the next section, we'll explore technologies that support Deno and how they connect.

Architecture and technologies that support Deno

Architecture-wise, Deno took various concerns into consideration, such as security. Deno put much thought into establishing a clean and performant way of communicating with the underlying OS without leaking details to the JavaScript side. To enable that, Deno uses message passing to communicate from inside V8 to the Deno backend. The backend is the component written in Rust that interacts with the event loop and thus with the OS.

Deno has been made possible by four pieces of technology:

- V8

- TypeScript

- Tokio (event loop)

- Rust

It is the connection of all four parts that makes it possible to provide developers with a great experience and development speed while keeping the code safe and sandboxed. If you are not familiar with these pieces of technology, here is a short definition of each:

V8 is a JavaScript engine developed by Google. It is written in C++ and runs across all major operating systems. It is also the engine behind Chrome, Node.js, and others.

TypeScript is a superset of JavaScript developed by Microsoft that adds optional static typing to the language and transpiles it to JavaScript.

Tokio is an asynchronous runtime for Rust that provides utilities to write network applications of any scale.

Rust is a systems programming language, originally developed at Mozilla, that focuses on performance and safety.

Using Rust, a fast-growing language, to write Deno's core made it more approachable for developers than Node.js. Node.js' core was written in C++, which is not known for being exceptionally easy to deal with. With many pitfalls and with a not-so-good developer experience, C++ revealed itself as a small obstacle in the evolution of Node.js core.

deno_core is shipped as a Rust crate (package). This connection with Rust is not a coincidence. Rust provides many features that facilitate the connection with JavaScript and add capabilities to Deno itself. Asynchronous operations in Rust typically use Futures, which map very well to JavaScript Promises. Rust is also an embeddable language, which gives Deno direct embedding capabilities. These factors, together with Rust being one of the first languages to ship a compiler targeting WebAssembly, led the Deno team to choose it for its core.

Inspiration from POSIX systems

POSIX systems were of great inspiration to Deno. In one of his talks, Dahl even states that Deno handles some of its tasks "as an operating system".

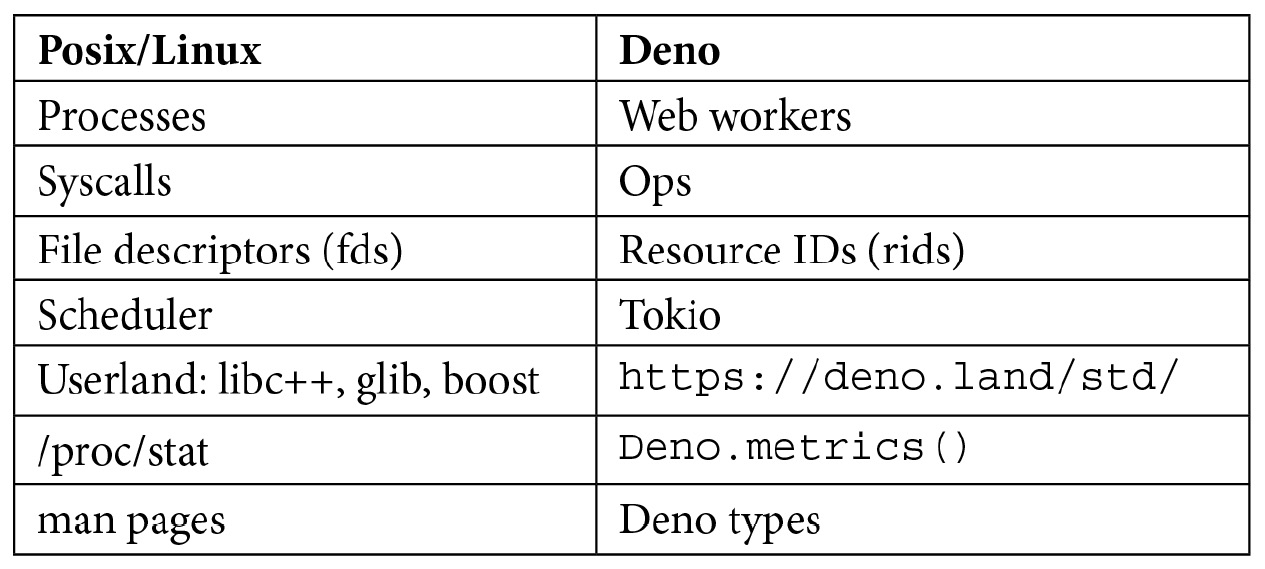

The following table shows some of the standard terms from POSIX/Linux systems and how they map to Deno concepts:

Some of the concepts from the Linux world might be familiar to you. Take processes, for instance. A process represents an instance of a running program that might execute using one or multiple threads. Deno uses Web Workers to do the same job inside the runtime.

In the second row, we have syscalls. If you aren't familiar with them, they are the way programs make requests to the kernel. In Deno, these requests do not go directly to the kernel; instead, they go from the Rust core to the underlying operating system, but they work similarly. We'll have the opportunity to see this in the upcoming architecture diagram.

These are just a couple of examples you might recognize if you are familiar with Linux/POSIX systems.

We'll explain and use most of the aforementioned Deno concepts throughout the rest of this book.

Architecture

Deno's core was initially written in golang, but it later changed to Rust. This decision was made to get away from golang as it is a garbage-collected language. Its combination with V8's garbage collector could lead to problems in the future.

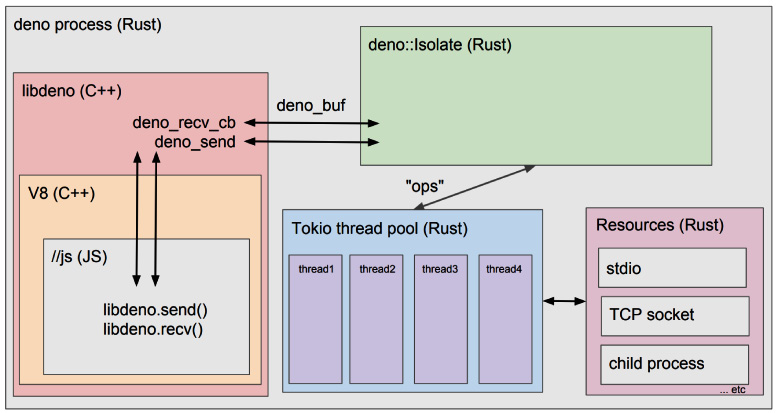

To understand how the underlying technologies interact with each other to form the Deno core, let's look at the following architecture diagram for it:

Figure 1.3 – Deno architecture

Deno uses message passing to communicate with the Rust backend. As a decision in regard to privilege isolation, Deno never exposes JavaScript object handles to Rust. All communication in and out of V8 uses Uint8Array instances.
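The TypeScript sketch below illustrates the principle that only bytes cross the boundary: an op request is serialized into a `Uint8Array` on one side and rebuilt on the other, so no JavaScript object reference ever leaves V8. The JSON encoding, the `OpMessage` shape, and the `op_read_file` name are assumptions for illustration; Deno's real op encoding differs and has changed between versions:

```typescript
// Shape of an op request; both the shape and the JSON encoding are
// assumptions made for this sketch.
interface OpMessage {
  op: string;
  args: Record<string, unknown>;
}

const encoder = new TextEncoder();
const decoder = new TextDecoder();

// JavaScript side: serialize the request into bytes before it leaves V8.
function encodeOp(message: OpMessage): Uint8Array {
  return encoder.encode(JSON.stringify(message));
}

// Backend side: it only ever receives bytes, never a JavaScript object.
function decodeOp(bytes: Uint8Array): OpMessage {
  return JSON.parse(decoder.decode(bytes)) as OpMessage;
}

// "op_read_file" is a hypothetical op name used only for illustration.
const wire = encodeOp({ op: "op_read_file", args: { path: "./README.md" } });
const received = decodeOp(wire);
console.log(wire.byteLength, received.op);
```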

For the event loop, Deno uses Tokio, an asynchronous Rust runtime built around a thread pool. Tokio is responsible for handling I/O work and calling back the Rust backend, making it possible to handle all operations asynchronously. Operations (ops) is the name given to the messages that are passed back and forth between Rust and the event loop.

All the asynchronous messages dispatched from Deno's code into its core (written in Rust) return Promises back to Deno. To be more precise, asynchronous operations in Rust usually return Futures, which Deno maps to JavaScript Promises. Whenever these Futures are resolved, the JavaScript Promises are also resolved.
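One way to picture that mapping is a table of pending Promises keyed by an op id: dispatching an op creates a Promise and stores its `resolve` function, and when the backend reports the id as finished (in Deno, when the Rust Future completes), the matching Promise is resolved. The `OpDispatcher` class below is a hypothetical, single-file TypeScript model of that idea, not Deno's actual code:

```typescript
type Resolver = (result: string) => void;

// Hypothetical model: each dispatched op gets an id and a pending Promise;
// the "backend" later completes ops by id, in any order.
class OpDispatcher {
  private nextId = 1;
  private readonly pending = new Map<number, Resolver>();

  // JavaScript side: register a pending op and hand back a Promise for it.
  dispatch(): { id: number; promise: Promise<string> } {
    const id = this.nextId++;
    const promise = new Promise<string>((resolve) => {
      this.pending.set(id, resolve); // the executor runs synchronously
    });
    return { id, promise };
  }

  // Backend side: called when the work for `id` is done, standing in for
  // a Rust Future completing; resolves the matching JavaScript Promise.
  complete(id: number, result: string): void {
    const resolve = this.pending.get(id);
    if (resolve === undefined) throw new Error(`unknown op id ${id}`);
    this.pending.delete(id);
    resolve(result);
  }

  get inFlight(): number {
    return this.pending.size;
  }
}

const dispatcher = new OpDispatcher();
const first = dispatcher.dispatch();
const second = dispatcher.dispatch();

// Completions may arrive out of order; each resolves only its own Promise.
dispatcher.complete(second.id, "second done");
dispatcher.complete(first.id, "first done");

Promise.all([first.promise, second.promise]).then((results) => {
  console.log(results);
});
```

Because each result is matched by id, completions can arrive in any order, which is what happens when several I/O operations run concurrently on the event loop.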

To enable communication from V8 to the Rust backend, Deno uses rusty_v8, a Rust crate created by the Deno team that provides V8 bindings to Rust.

Deno also includes the TypeScript compiler right inside V8. It uses V8 snapshots to optimize startup time. Snapshots save the JavaScript heap at a specific point in execution so that it can be restored when needed.

Since it was first presented, Deno was subject to an iterative, evolutionary process. If you are curious about how much it changed, you can look at one of the initial roadmap documents written back in 2018 by Ryan Dahl (https://github.com/ry/deno/blob/a836c493f30323e7b40e988140ed2603f0e3d10f/Roadmap.md).

Now, not only do we know what Deno is, but we also know what's happening behind the scenes. This knowledge will help us in the future when we're running and debugging our applications. The creators of Deno made many technological and architectural decisions to bring Deno to the state it is today. These decisions pushed the runtime forward and made sure Deno excels in several situations, some of which we'll later explore. However, to make it work well for some use cases, some trade-offs had to be made. Those trade-offs resulted in the limitations we'll examine next.

Grasping Deno's limitations

As with everything, choosing solutions is a matter of dealing with trade-offs. The ones that best fit the projects and applications we're writing are what we end up using. Currently, Deno has some limitations, some due to its young age and others because of design decisions. As with most solutions, Deno is not a one-size-fits-all tool. In the next few pages, we'll explore some of Deno's current limitations and the motivations behind them.

Not as stable as Node.js

In its current state, Deno can't be compared to Node.js regarding stability for obvious reasons. Node.js has more than 10 years of development, while Deno is only nearing its second year.

Even though most of the core features presented in this book are already considered stable and correctly versioned, some features are still subject to change and live behind the --unstable flag.

Node.js's years of experience made sure it is battle-tested and that it works in the most diversified environments. That's something we're hopeful Deno will get, but time and adoption are essential factors.

Better HTTP latency but worse throughput

Deno has kept performance in mind from the beginning. However, as seen on the benchmarks page (https://deno.land/benchmarks), there are areas where it is still not at Node.js' level.

Its ancestor leverages direct C++ bindings in its HTTP server to boost this performance score. Since Deno resisted adding native HTTP bindings and builds on top of native TCP sockets, it still suffers a performance penalty. The team plans to tackle this after optimizing TCP socket communication.

The Deno HTTP server handles about 25k requests per second with a max latency of 1.3 milliseconds, while Node.js handles 34k requests per second but has a latency that varies between 2 and 300 milliseconds.

We can't say 25k requests per second is not enough, especially since we're using JavaScript. If your app/website needs more than that, probably JavaScript, and thus Deno, is not the correct tool for the job.

Compatibility with Node.js

Due to many of the changes that have been introduced, Deno doesn't provide compatibility with existing JavaScript packages and tooling. A compatibility layer is being created in the standard library, but it is still far from finished.

As Node.js and Deno are two very similar systems with shared goals, we expect Deno to execute more and more Node.js programs out of the box as time goes on. However, although some Node.js code already runs, most of it does not yet.

TypeScript compiler speed

As we mentioned previously, Deno uses the TypeScript compiler. It has proven to be one of the slowest parts of the runtime, especially compared to the time V8 takes to interpret JavaScript. Snapshots do help, but they are not enough. Deno's core team believes that they will have to migrate the TypeScript compiler to Rust to fix this.

Because of the extensive work this requires, it is unlikely to happen anytime soon, even though it is expected to make startup times orders of magnitude faster.

Lack of plugins/extensions

Even though Deno has a plugin system for custom operations, it is not finished and is considered unstable. This means that extending native functionality beyond what Deno provides is virtually impossible.

At this point, we should understand Deno's current limitations and why they exist. Some of them might be resolved soon, as Deno matures and evolves. Others are the result of design decisions or roadmap priorities. Understanding these limitations is fundamental when it comes to deciding if you should use Deno in a project. In the next section, we will have a look at the use cases we believe Deno is the perfect fit for.

Exploring use cases

As you are probably aware by now, Deno has many use cases in common with Node.js. Most of its changes were made to keep the runtime safer and more straightforward. However, it relies on most of the same technology, shares the same engine, and has many of the same goals, so its use cases can't differ by much.

However, even though the differences are small, there are nuances that can make one a slightly better fit than the other in specific situations. In this section, we will explore some use cases for Deno.

A flexible scripting language

Scripting is one of those features where interpreted languages always shine. JavaScript is perfect when we want to prototype something fast. This can be renaming files, migrating data, consuming something from an API, and so on. It just feels like the right tool for these use cases.

Deno gave scripting careful consideration. The runtime makes it very easy to write scripts and offers several benefits for this use case, especially compared to Node.js. You can execute code from just a URL, you don't have to manage dependencies, and you can turn a script into an executable.
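As an illustration of the kind of throwaway script this makes easy, here is a minimal sketch that adds a prefix to every file in a directory. It relies only on built-in `Deno` APIs (`Deno.readDir` and `Deno.rename`), so there is nothing to install. The function names `planRenames` and `renameWithPrefix`, and the `archived-` prefix used in the example, are our own.

```typescript
// Pure planning step: decide the new name for each file, skipping files
// that already carry the prefix so the script can be re-run safely.
function planRenames(names: string[], prefix: string): Array<[string, string]> {
  return names
    .filter((name) => !name.startsWith(prefix))
    .map((name): [string, string] => [name, `${prefix}${name}`]);
}

// Side-effecting step: list regular files in `dir` and apply the plan.
// Run with permissions scoped to the current directory, for example:
//   deno run --allow-read=. --allow-write=. rename.ts
async function renameWithPrefix(dir: string, prefix: string): Promise<void> {
  const names: string[] = [];
  for await (const entry of Deno.readDir(dir)) {
    if (entry.isFile) names.push(entry.name);
  }
  for (const [from, to] of planRenames(names, prefix)) {
    await Deno.rename(`${dir}/${from}`, `${dir}/${to}`);
    console.log(`${from} -> ${to}`);
  }
}
```

Adding a top-level `await renameWithPrefix(".", "archived-");` turns this into a runnable script. Because read and write access are limited to the current directory, the script cannot touch files anywhere else, even if it contains a bug.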

On top of all of this, the fact that you can now import remote code while controlling which permissions it uses is a significant step in terms of trust and security.
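Permissions can also be inspected at runtime. The sketch below uses `Deno.permissions.query` to find out which hosts a script may reach before it tries to contact them. The `deniedHosts` helper and its injectable `query` parameter are hypothetical. We added the parameter only so the logic can be exercised with a fake instead of the real API.

```typescript
// Permission states as reported by Deno's permission API.
type NetPermissionState = "granted" | "denied" | "prompt";
// Hypothetical abstraction over Deno.permissions.query for network access,
// so the logic below can be tested with a fake implementation.
type NetQuery = (host: string) => Promise<NetPermissionState>;

// Default implementation backed by Deno. query() only reports the current
// state; unlike request(), it never prompts the user.
const denoNetQuery: NetQuery = async (host) =>
  (await Deno.permissions.query({ name: "net", host })).state;

// Return the hosts this process is not (yet) allowed to reach.
async function deniedHosts(
  hosts: string[],
  query: NetQuery = denoNetQuery,
): Promise<string[]> {
  const denied: string[] = [];
  for (const host of hosts) {
    if ((await query(host)) !== "granted") denied.push(host);
  }
  return denied;
}
```

Run with `--allow-net=api.example.com`, a call such as `deniedHosts(["api.example.com", "cdn.example.org"])` would report only the second host, because only the first was explicitly granted.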

Deno's Read Eval Print Loop (REPL) is a great place for experimentation. Add the small binary size and the fact that it includes all the needed tools, and you have the cherry on top.

Safer desktop applications

The plugin system is not stable yet, and the packages that let developers create desktop applications depend heavily on it. Even so, this use case is very promising.

Over the last few years, we've seen the rise of desktop web applications. The Electron framework (https://www.electronjs.org/) made applications such as VS Code and Slack possible, and these are now part of many people's daily lives. They are web pages running inside a WebView with access to native features.

However, to install these applications, users must trust them blindly. Previously, we discussed security and how JavaScript code used to have access to the entire system it ran on. Deno is fundamentally different here. Its sandbox and security features make such applications much safer, and the potential this unlocks is enormous.

We'll be looking at lots of advances in using JavaScript to build desktop applications in Deno throughout this book.

A quick and complete environment to write tools

Deno's features make it a very complete, simple, and fast environment for writing tools. By tools, we don't only mean tooling for JavaScript or TypeScript projects. The single binary contains everything needed to develop an application, so Deno can also be used in ecosystems outside the JavaScript world.

Its clarity, automatic documentation via TypeScript, ease of running, and the popularity of JavaScript make Deno the right cocktail for writing tools such as code generators, automation scripts, or any other developer tools.
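As a small taste of such tooling, here is a hypothetical code generator that emits a TypeScript interface from a sample JSON object. It is a sketch under simplifying assumptions. It only distinguishes primitives, `null`, arrays (typed loosely as `unknown[]`), and nested objects, and it assumes every key is a valid identifier. The names `typeOf` and `generateInterface` are our own.

```typescript
// Map a sample JSON value to a TypeScript type expression.
function typeOf(value: unknown): string {
  if (value === null) return "null";
  if (Array.isArray(value)) return "unknown[]";
  switch (typeof value) {
    case "string":
    case "number":
    case "boolean":
      return typeof value;
    case "object": {
      // Nested objects become inline object types.
      const fields = Object.entries(value as Record<string, unknown>)
        .map(([key, v]) => `${key}: ${typeOf(v)};`)
        .join(" ");
      return `{ ${fields} }`;
    }
    default:
      return "unknown";
  }
}

// Generate an exported interface declaration from a sample object.
function generateInterface(name: string, sample: Record<string, unknown>): string {
  const lines = Object.entries(sample).map(([key, v]) => `  ${key}: ${typeOf(v)};`);
  return `export interface ${name} {\n${lines.join("\n")}\n}\n`;
}
```

In a Deno script, this could be fed with `JSON.parse(await Deno.readTextFile("user.json"))` and run with `--allow-read`, with the result printed or written next to the project's sources.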

Running on embedded devices

By using Rust and distributing the core as a Rust crate, Deno opens the door to embedded devices, from IoT devices to wearables and ARM devices. Again, the fact that it is small and includes all the tools in a single binary could be a big win.

Because the crate is available standalone, Deno can be embedded in other software. For instance, if we were writing a database in Rust and wanted to add Map-Reduce logic, we could use JavaScript and Deno to do so.
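The Rust side of such an embedding would use the Deno crate, whose API is beyond the scope of this chapter. The JavaScript side, though, could be as simple as the hypothetical `map` and `reduce` functions below, which a host database might call for each record and each group of values. This sketch counts words. The local `runLocally` driver stands in for the Rust host and is also hypothetical.

```typescript
// Hypothetical Map-Reduce hooks a Rust host could call through embedded Deno.
type Pair = [key: string, value: number];

// map: emit (word, 1) for every word in a document.
function map(doc: string): Pair[] {
  return doc
    .toLowerCase()
    .split(/\W+/)
    .filter((word) => word.length > 0)
    .map((word): Pair => [word, 1]);
}

// reduce: combine all values emitted for the same key.
function reduce(_key: string, values: number[]): number {
  return values.reduce((sum, v) => sum + v, 0);
}

// Local driver playing the host's role: group map output by key, then reduce.
function runLocally(docs: string[]): Map<string, number> {
  const groups = new Map<string, number[]>();
  for (const doc of docs) {
    for (const [key, value] of map(doc)) {
      const bucket = groups.get(key) ?? [];
      bucket.push(value);
      groups.set(key, bucket);
    }
  }
  const result = new Map<string, number>();
  for (const [key, values] of groups) result.set(key, reduce(key, values));
  return result;
}
```

Keeping `map` and `reduce` as pure functions means the host decides how to distribute and group the work, while the JavaScript only describes the computation.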

Generating browser-compatible code

If you haven't looked at Deno before, this probably comes as a surprise. Aren't we talking about a server-side runtime? We are. But this same runtime has made an effort to keep its APIs browser-compatible. Its toolchain provides features that let code written in Deno run in the browser, as we'll explore in Chapter 7, HTTPS, Extracting Configuration, and Deno in the Browser.

The Deno team takes care of all of this by keeping its APIs browser-compatible and by generating browser code, which opens up a new set of possibilities yet to be discovered. We will rely on browser compatibility later in this book, in Chapter 7, HTTPS, Extracting Configuration, and Deno in the Browser, to build a complete application, both client and server, inside Deno.
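Part of what makes this possible is that Deno implements web platform APIs such as `fetch`, `URL`, and `URLSearchParams` rather than runtime-specific alternatives. Code that sticks to them, like the hypothetical helpers below, can run unchanged in Deno and in a browser. The `/search` path and the example base URL are placeholders.

```typescript
// Build a search URL using only web-standard APIs (available in browsers and Deno).
// Note: the absolute path "/search" replaces any path already present in `base`.
function buildSearchUrl(base: string, query: string, page = 1): string {
  const url = new URL("/search", base);
  url.searchParams.set("q", query);
  url.searchParams.set("page", String(page));
  return url.toString();
}

// Fetch and parse JSON. Works the same in a browser or in Deno (with --allow-net).
async function search(base: string, query: string): Promise<unknown> {
  const response = await fetch(buildSearchUrl(base, query));
  if (!response.ok) throw new Error(`Request failed: ${response.status}`);
  return await response.json();
}
```

For example, `buildSearchUrl("https://api.example.com", "deno land")` yields `https://api.example.com/search?q=deno+land&page=1`, because `URLSearchParams` encodes spaces as `+`.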

Full-fledged APIs

Deno, like Node.js, puts a lot of effort into HTTP servers. With a complete standard library that provides solid primitives for frameworks to build on, APIs are clearly among Deno's strongest use cases. TypeScript is a great addition here in terms of documentation, code generation, and static type checking, helping mature code bases scale.

We'll be focusing more on this specific use case throughout the rest of this book as we believe it to be one of the most important ones – one where Deno shines.
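As a starting point, here is a minimal JSON API sketch. It assumes a Deno version that ships `Deno.serve`. Older releases relied on the standard library's HTTP module instead. The handler is a plain function that takes a web-standard `Request` and returns a `Response`, which makes it easy to test without starting a server. The `/health` and `/greet` routes and the `json` helper are illustrative only.

```typescript
// Small helper to build JSON responses with the right content type.
function json(data: unknown, status = 200): Response {
  return new Response(JSON.stringify(data), {
    status,
    headers: { "content-type": "application/json; charset=utf-8" },
  });
}

// Route requests using only web-standard Request/Response objects.
function handler(req: Request): Response {
  const url = new URL(req.url);
  if (req.method === "GET" && url.pathname === "/health") {
    return json({ status: "ok" });
  }
  if (req.method === "GET" && url.pathname === "/greet") {
    const name = url.searchParams.get("name") ?? "world";
    return json({ message: `Hello, ${name}!` });
  }
  return json({ error: "Not Found" }, 404);
}

// Start listening; requires --allow-net and a Deno version with Deno.serve.
function start(port = 8000): void {
  Deno.serve({ port }, handler);
}
```

Calling `start()` and requesting `http://localhost:8000/greet?name=Ada` would return `{"message":"Hello, Ada!"}`. Because the handler is decoupled from the server, frameworks can layer routing and middleware on top of the same `Request`/`Response` contract.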

These are just a few examples of use cases where we believe Deno is a great fit. As with Node.js, we expect many more uses to be discovered. We're excited to follow this adventure and see what Deno still has to unveil.

Summary

In this chapter, we traveled back in time to 2009 to understand the creation of Node.js. We then saw why and when to use an event-driven approach instead of a threaded model, and the advantages it brings. We came to understand what evented, asynchronous code is and how JavaScript helped Node.js and Deno make the most of the server's resources.

After that, we fast-forwarded through Node.js's 10+ year story, its evolution, and how its adoption began. We observed how the runtime grew together with its base language, JavaScript, while helping millions of businesses deliver great products to their clients.

Then, we looked at Node.js through today's eyes. What changed in the ecosystem and the language? What are some of the developers' pain points? We dived into these pain points and explored why it was difficult and slow to change Node.js to solve them.

As this chapter progressed, Deno's motivations became more and more evident. After looking at the past of JavaScript on the server, it made sense for something new to appear – something that would solve the pain experienced previously while keeping the things developers love.

Finally, we got to know Deno, which will be our companion throughout this book. We learned about its vision, its principles, and how it proposes to solve certain problems. After a sneak peek at the architecture and the components that made Deno possible, we couldn't finish without talking about some of its trade-offs and current limitations.

We concluded this chapter by listing use cases where Deno is an excellent fit. We will come back to these use cases later in this book, when we start coding. From this chapter on, our approach will be more concrete and practical, always moving toward code and examples you can run and explore.

Now that we understand what Deno is, we have all it takes to start using it. In the next chapter, we will set up our environment and write a Hello World application, among many other exciting things.

That's how exciting adventures start, right? Let's go!