A recent report by Martin Goodson of Qubit (a conversion optimization startup) asserted that Most Winning A/B Test Results Are Illusory, largely because such tests are badly executed. According to the author, this can lead not only to the ‘needless modification of websites’ but also, in some cases, to damage to a company’s profitability.

So why is this the case? And how can designers and businesses ensure that if A/B testing (also known as split testing) is carried out, it’s done properly and effectively?

What is A/B Testing?

To start with a quick primer, A/B testing is a way to compare two versions of a web page (a landing page, for example) to see which of the two performs better. To carry out a test, visitors are split at random into two groups, each group sees a different version, and results are measured by how each group interacts with its page.

For example, a page that contains a strong call-to-action (CTA) in a certain area of the page may be pitted against another that is similar, but has the CTA in a different place and might use different wording or color.

Other aspects of pages that are commonly used in A/B testing include:

- Headlines and product descriptions

- Forms

- Page layouts

- Special offers

- Images

- Text (long form, short form)

- Buttons

However, according to Goodson, it’s often the case that the tests carried out on these pages return results that are false and the expected ‘uplift’ (increase in conversions) is never realized.

The Math of A/B Testing

Simply put, an A/B test comes down to conversions versus non-conversions: you count the visits to each version and calculate what percentage of them converted. In his report, Goodson points to two statistical concepts that testers often get wrong: statistical power and multiple testing.

On the former, Goodson explains:

Statistical power is simply the probability that a statistical test will detect a difference between two values when there truly is an underlying difference. It is normally expressed as a percentage.

However, power depends heavily on the size of the sample: if too few people take part, you are unlikely to get reliable results. Reliable results also depend on how long the test runs.

To work out how long a test needs to run, calculate the required sample size before you implement and run it. Get this wrong and the test is likely to return false results, so the uplift in sales it promised is unlikely to materialize.
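As a rough illustration of that calculation, here is a minimal sketch using the standard sample-size formula for comparing two conversion rates. The function names, the 5% baseline rate, the 10% relative uplift and the 5,000 daily visitors are all assumptions made for the example; they don't come from Goodson's report or from any particular testing tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_uplift, alpha=0.05, power=0.8):
    """Visitors needed in EACH group for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)      # rate we hope the variant reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # significance threshold
    z_power = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def days_to_run(n_per_variant, daily_visitors, variants=2):
    """Days needed if all daily traffic is split evenly between the variants."""
    return ceil(n_per_variant * variants / daily_visitors)


# Illustrative numbers only: 5% baseline conversion, hoping for a 10% relative uplift.
n = sample_size_per_variant(0.05, 0.10)
print("visitors per variant:", n)
print("days at 5,000 visitors/day:", days_to_run(n, 5000))
```

With these inputs the formula asks for roughly 31,000 visitors per variant, which is why small uplifts on low-traffic sites take so long to confirm. Halving the uplift you want to detect roughly quadruples the sample you need.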

Multiple testing means running many tests, or checking the same test many times, and treating each result on its own. A/B testing software such as Optimizely often relies on classical p-values to test statistical significance, so every extra test is another chance for a difference to look significant when it isn’t.
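To make the idea of a classical p-value concrete, here is a minimal sketch of a two-proportion z-test, one common way to get a p-value from conversion counts. It is not necessarily the calculation any particular tool performs, and the function name and visitor numbers are invented for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, visits_a, conv_b, visits_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    # Pooled rate: the best estimate of conversion if A and B are really the same.
    pooled = (conv_a + conv_b) / (visits_a + visits_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions): nothing to compare
    z = (rate_b - rate_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical test: 100 of 2,000 visitors convert on A, 130 of 2,000 on B.
p = two_proportion_p_value(100, 2000, 130, 2000)
print("p-value:", round(p, 3))
print("significant at 5%:", p < 0.05)
```

A p-value below 0.05 only says that a gap this large would be unusual if the two pages performed the same. It says nothing about how many tests were run or when the test was stopped, which is where the trouble starts.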

Dangers of Using P-Values

P-values often produce false results when they are misused, and two kinds of misuse are well known:

- Carrying out many tests

- Stopping a test when positive results are seen

Bearing this in mind, research the software you plan to use: check how it tests variables and how intuitive it is.

Optimizely, for example, recommends that you set up variations before running tests using its software. If you don’t, you’re essentially just running an A/A test, because the variation is identical to the original page. The company also points out that if the variations aren’t properly set up, you’re likely to get winning results that are false.

Common Mistakes in A/B Testing

First, A/B testing that returns true results needs a large enough sample. According to Goodson, statistical power increases with larger samples; you may still see the odd random fluctuation with a large sample, but this is unavoidable and won’t necessarily return false results.

However, not all sites have a large amount of traffic, so to some extent the sample size will be beyond your control. Be aware of this, because if you have few visitors, random fluctuations are more likely to drown out any real difference and testing could be a waste of your time.

The second important aspect of successful A/B testing is the length of time that the test runs for. If you cut the test short, you reduce its statistical power and you’re likely to receive false positives which, while appearing to generate uplift, result in no change when it comes to the bottom line: revenue.

If you cut short a test when you think you’re seeing winning results, Goodson says:

“Almost two-thirds of winning tests will be completely bogus.”

In other words, it’s vital that you let the test run long enough, even if you’re seeing a good number of conversions, in order to build statistical power and gain real results. You could start out with a solid testing model and a good sample size, yet let impatience lead you to false results.
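The effect of stopping early can be seen in a small simulation. The sketch below runs A/A tests, where both pages convert at the same rate, so any "winner" is false by construction. It compares stopping at the first significant result with waiting until the planned end. All the numbers (400 experiments, 10 checks, 200 visitors per group between checks, a 5% conversion rate) are assumptions chosen to keep the example quick; this is not Goodson's own simulation.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(experiments=400, peeks=10, batch=200,
                      rate=0.05, alpha=0.05, seed=1):
    """Return (false-positive rate with peeking, false-positive rate at the end)."""
    rng = random.Random(seed)
    stopped_early = 0       # tests that looked significant at ANY check
    significant_at_end = 0  # tests that were significant at the FINAL check
    for _experiment in range(experiments):
        conv_a = conv_b = visitors = 0
        seen_winner = False
        significant = False
        for _peek in range(peeks):
            # Both groups share the same true rate: there is no real difference.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            visitors += batch
            significant = p_value(conv_a, visitors, conv_b, visitors) < alpha
            seen_winner = seen_winner or significant
        stopped_early += seen_winner
        significant_at_end += significant
    return stopped_early / experiments, significant_at_end / experiments


peeking_rate, final_rate = simulate_aa_tests()
print("false positives when stopping at first 'win':", peeking_rate)
print("false positives when waiting until the end:  ", final_rate)
```

Waiting until the end should keep false positives near the nominal 5%, while acting on the first significant check typically pushes the rate far higher, even though nothing about the pages differs.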

Running Simultaneous Tests

Another recent and damaging trend has been to run a lot of tests at the same time. This is a bad idea because, at a 5% significance level, each test has a 5% chance of producing a winner purely by chance. Run 20 tests and on average you’ll see one false winner; run 40 and you’ll see two. Goodson says:

“Rather than a scattergun approach, it’s best to perform a small number of focused and well-grounded tests, all of which will have adequate statistical power.”
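The arithmetic behind the 20-test example can be sketched in a few lines. It assumes the tests are independent and that none of the variations is really better than the original; the function names are made up for the illustration.

```python
def expected_false_winners(num_tests, alpha=0.05):
    """Average number of tests that 'win' purely by chance."""
    return num_tests * alpha


def chance_of_any_false_winner(num_tests, alpha=0.05):
    """Probability that at least one of the tests 'wins' purely by chance."""
    return 1 - (1 - alpha) ** num_tests


for num_tests in (1, 20, 40):
    print(num_tests,
          expected_false_winners(num_tests),
          round(chance_of_any_false_winner(num_tests), 2))
```

With 20 tests there is about a 64% chance of at least one false winner, and with 40 tests about 87%, so a scattergun approach almost guarantees that something will appear to work.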

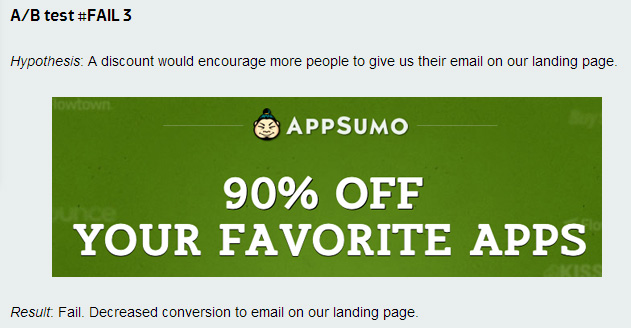

According to AppSumo, in their own testing for their product:

“Only 1 out of 8 A/B tests have driven significant change.”

AppSumo has around 5,000 visitors per day and while they maintain that they have seen some excellent results, such as email conversions increasing by more than five times and doubling purchase rates, the site has also seen some “harsh realities” when it comes to the testing process.

Even tests that they were sure would work simply failed for various reasons, which included:

- People not reading the text

- Using a % as an incentive rather than $

- Pop-up/light boxes irritating the visitor

In order to actually get away with the above, it’s necessary to have a very strong brand that people trust, and that’s still the Holy Grail for many of us.

In the example above, the folks at AppSumo believe that the test failed due to the need to enter an email address — something that is a precious commodity to all sites. However, it’s equally precious to the “sophisticated” user and they don’t part with their email address lightly, which is why it’s a better idea to offer a cash incentive, rather than a percentage.

Running Successful A/B Testing

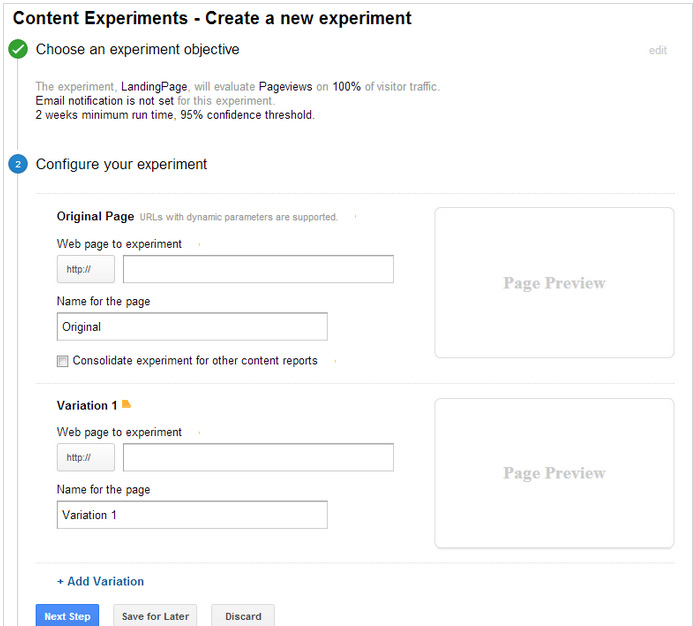

Before implementing a test, plan it well and determine how the test is likely to improve conversions. You should of course set up goals in Google Analytics for measurable results and use appropriate software. You can carry out testing manually using your own calculations, or use a template such as the one prepared by Visual Website Optimizer. Alternatively (or additionally), there’s a free online A/B test significance tool that you can use, created by the same people.

Note: You can also use Google Analytics Content Experiments to perform testing.

You should also realize that there’s no rush and you will have to exercise patience if you want to gain winning results — it’s likely that the tests will take weeks or even months to complete.

Additionally, you should:

- Test only one page at a time, or even one element on a page.

- Select pages that have a high bounce/exit rate.

- Expect a bare minimum of 1000 visitors before seeing any results.

- Be prepared for failure; very few tests are successful the first time around.

- Understand that A/B testing has a learning curve.

- Be patient.

- Understand your customer.

Says the Miva Merchant blog:

“Going into it knowing that 7 out of 8 of your tests will produce insignificant improvements will likely prevent you from un-real expectations. Stick with it, and don’t give up after multiple insignificant results.”

If your testing is going to be a success, it’s important to know your audience, too. Creating a buyer persona is something that should always be carried out before the design and development phase, but many businesses fail to understand the importance of this. If you don’t know who you’re addressing, then how can you possibly even attempt to give them what they want?

It’s all in the planning, as it is for pretty much every aspect of business. In order to gain conversions, it’s always necessary to do your research, no exceptions.

Final Thoughts

Martin Goodson recommends that if you perform A/B tests and don’t see any real uplift, or the uplift isn’t maintained, you carry out the test again to check whether it was carried out effectively in the first instance. He also points out that the uplift estimated from a winning test is often overstated (the ‘winner’s curse’), and that this is especially true for tests with a small sample size.
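The winner’s curse can be illustrated with another small simulation. In the sketch below the variant really is better, by a 10% relative uplift, but each test is small. Only tests that reach significance are kept as "winners", and their measured uplift is compared with the true one. The sample size, rates and function name are assumptions for the example and are not taken from Goodson’s report.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def simulate_winners_curse(experiments=1000, visitors=1000, base_rate=0.05,
                           true_uplift=0.10, alpha=0.05, seed=7):
    """Return the observed relative uplift of every test that declared B the winner."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    variant_rate = base_rate * (1 + true_uplift)
    winning_uplifts = []
    for _experiment in range(experiments):
        conv_a = sum(rng.random() < base_rate for _ in range(visitors))
        conv_b = sum(rng.random() < variant_rate for _ in range(visitors))
        pooled = (conv_a + conv_b) / (2 * visitors)
        se = sqrt(pooled * (1 - pooled) * 2 / visitors)
        if se == 0 or conv_a == 0:
            continue  # cannot compute a z-score or a relative uplift
        z = (conv_b - conv_a) / visitors / se
        if z > z_crit:  # B is a statistically significant winner
            # Equal group sizes, so the ratio of conversions is the ratio of rates.
            winning_uplifts.append(conv_b / conv_a - 1)
    return winning_uplifts


uplifts = simulate_winners_curse()
print("winning tests:", len(uplifts), "of 1000")
if uplifts:
    print("true uplift: 0.10, average uplift reported by winners:",
          round(mean(uplifts), 2))
```

Because a small test can only reach significance when luck exaggerates the difference, the winners that survive report an uplift several times larger than the real one. That is why an uplift measured this way tends to shrink once the change goes live.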

If you do have a small sample, then ask yourself if it’s worth carrying out testing at this stage, as the results you’ll gain may not be very accurate at all. If this is the case, then you run the risk of making changes that will alienate future visitors.

A/B testing does have value, but if it isn’t carried out correctly, it can return false results. Even if it is done right, there’s no guarantee that it will be successful, so be prepared for this before you begin. Do your research, understand your goals, set up the test properly and exercise patience throughout, and you will get there.

Kerry is a prolific technology writer, covering a range of subjects from design & development, SEO & social, to corporate tech & gadgets. Co-author of SitePoint’s Jump Start HTML5, Kerry also heads up digital content agency markITwrite and is an all-round geek.