In 2008, Google’s Susan Moskwa published an article on the Google Webmaster Central Blog titled Demystifying the “duplicate content penalty”. One year later, many webmasters still talk about “duplicate content” without really understanding what it is and what it does.

An older article on the same site, Deftly dealing with duplicate content, clarifies most of the issues related to this topic. Although that article dates from 2006, little has changed in the “duplicate content” camp. Google still wants you to optimize your sites and block all on-site duplicate content appropriately. It still wants you to keep your internal linking consistent, to handle country-specific content with appropriate TLDs, and to use the preferred domain feature in Webmaster Tools. The rules on boilerplate repetition haven’t changed either, and Google still doesn’t like sites that publish stubs (many sites still get away with such practices, but not for long).

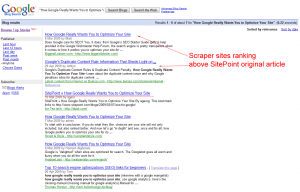

It’s still astonishing that Google advises webmasters not to worry about scrapers, since scraped copies often rank above the original content in its SERPs (see the image).

As a general rule, scraper sites don’t hurt as much as on-site duplicate content. And did you know that your website template counts in the duplicate content calculation too? Behind what the eye sees there is the HTML code, which uses its own “words” to generate the visible layout, and that code is repeated on every page that shares the template. If those words in the code outnumber the actual text of an article by 70% you might have duplicate content issues. So, where you can, take the time to write longer texts.
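To get a feel for how much of a page is template rather than article, you can measure it yourself. The sketch below is only a rough approximation: it counts characters instead of “words”, it treats everything that is not visible text as markup, and the names `VisibleTextExtractor` and `markup_share` are made up for this example. How Google actually weighs template code is not public, so treat the result as a hint, not a verdict.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects the text a reader would see, skipping <script> and <style> bodies."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def markup_share(html: str) -> float:
    """Return the fraction of the page's characters that are NOT visible text.

    Assumption for this sketch: share = 1 - visible_chars / total_chars,
    with runs of whitespace in the visible text collapsed to single spaces.
    """
    if not html:
        return 0.0
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    visible = " ".join("".join(parser.chunks).split())
    return 1.0 - len(visible) / len(html)


if __name__ == "__main__":
    page = "<html><body><div class='sidebar'>Menu</div><p>Short post.</p></body></html>"
    print(f"markup share: {markup_share(page):.0%}")
```

Run it on the saved HTML of a short post and of a long one from the same site: the template stays the same size, so the share of markup falls as the article text grows.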

Last but not least, you should know that on-site duplicate content can also influence your PageRank. You probably thought that when you publish a new site its PageRank is zero. Wrong. Every time you publish a new page it will have a PageRank greater than zero. Google assigns PageRank to pages just for existing: when they first appear, their PageRank is based on the internal linking structure and the content, not on external links. What influences the PageRank in such a situation is the content of the site and the number of non-duplicate pages (the more the merrier). I hope this gives at least one answer to those who saw their new sites start with, say, a PR4 and then were puzzled when their PR dropped. PageRank drops because Google’s generosity doesn’t last long. If new pages get a PR greater than usual, take advantage of the opportunity and try to get as many external links as possible to support them, or else Google’s next PR update will make you wonder WTF!
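The “greater than zero” part is easy to see in the originally published PageRank formula, where every page receives a small baseline share regardless of who links to it. The sketch below runs that textbook formula over a made-up internal link graph. It is not Google’s production algorithm, the scores are probabilities and not the 0 to 10 toolbar values, and the function name `pagerank`, the sample pages, and the damping factor of 0.85 are assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank by power iteration.

    links maps each page to the list of pages it links to.
    Pages without outgoing links spread their score evenly over all pages.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Baseline share: every page gets this much just for existing.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical brand-new site: internal links only, no external links.
    site = {
        "home": ["about", "post"],
        "about": ["home"],
        "post": ["home"],
        "orphan": [],
    }
    for page, score in sorted(pagerank(site).items(), key=lambda item: -item[1]):
        print(f"{page:8s} {score:.3f}")
```

Even the page nobody links to ends up with a score above zero, and the pages that the internal linking favours score highest, all without a single external link.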