Increase Search Traffic by Getting Your Site Recrawled More Often

Key Takeaways

- Increasing the frequency of Google’s site recrawls can improve search rankings and allow new pages to appear more quickly. This can be achieved through several strategies, such as improving site load times, reducing server errors, and regularly updating content.

- Reviewing your site’s crawl stats through Google Webmaster Tools can provide insight into how often your site is crawled. Factors such as site size and the number of inbound links can influence the crawl frequency.

- Creating more inbound links can increase your crawl rate, but this should be done carefully to avoid being flagged as spam. Submitting a sitemap to Google can also make your site easier to crawl, although its impact on recrawl rate is minor.

- The ‘Fetch as Google’ option in Webmaster Tools allows you to directly request a crawl by submitting a URL, yielding almost instant results. However, Google limits this to 10 index submissions per month, per account.

Search engine rankings are vital to the success of your website.

And precisely because they’re so important, it’s frustrating when you work hard to improve and optimize your site, only to find that your changes don’t show up in Google for days or even weeks.

This lag is due to the way Google indexes sites on the Internet. It deploys legions of “spiders” that work their way across the entire Internet, guided by sophisticated algorithms, examining sites and indexing pages based on keywords.

However, there is no fixed schedule for when Google’s spiders, or “bots,” crawl your site. In fact, it can vary considerably from site to site.

But you’re not helpless. By taking a few basic steps, you can encourage Google to crawl your site more often–improving your rankings and getting your new pages to show up more quickly.

Reviewing your site’s crawl stats

Google’s crawl frequency varies from site to site, so to get an idea of how often your site is crawled you first need to look in your Webmaster Tools (since renamed Google Search Console).

When you first log in to Webmaster Tools, it will guide you through the setup process for your site.

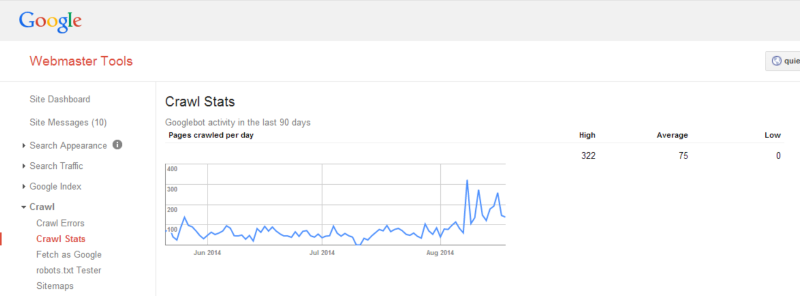

Once you have your site up and running in Webmaster Tools, click Crawl Stats in the left-hand menu. Here’s what the stats look like for a bed retail site that I run:

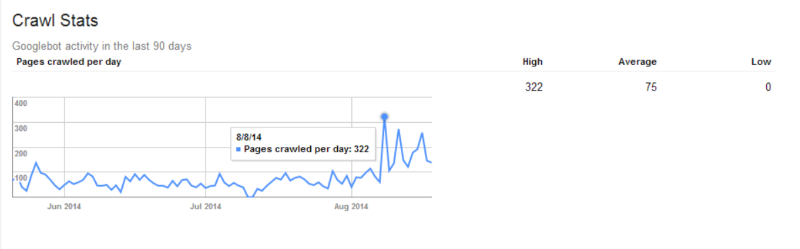

Google only displays crawl data for the last 90 days. On the right, Google tells me that it crawled my bed site an average of 75 times a day, with a high of 322 crawls on August 8.

As you can see, the crawl frequency can vary widely from day to day. This particular site was crawled fewer than 100 times a day between two and three months ago, with 0 crawls on a couple of days in June and July. Since then it has been crawled much more frequently, with an upward trend over the last month.
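
If you have access to your server’s access logs, you can cross-check these numbers yourself. What follows is a minimal Python sketch, not a tool Google provides. It assumes your logs use the common “combined” format, and the function names and sample dates are made up for illustration. It counts requests per day whose user agent contains “Googlebot”, then reports the average and the busiest day.

```python
import re
from collections import Counter

# Matches the date inside the [timestamp] and the final quoted field
# (the user agent) of a combined-format access log line.
LOG_PATTERN = re.compile(r'\[(\d{2}/\w{3}/\d{4}):[^\]]*\].*"([^"]*)"\s*$')


def googlebot_hits_per_day(lines):
    """Count requests per day whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match and "Googlebot" in match.group(2):
            hits[match.group(1)] += 1
    return hits


def summarize(hits):
    """Return (average hits per logged day, (busiest day, its hit count))."""
    if not hits:
        return 0.0, None
    # Days with no Googlebot requests never enter the counter,
    # so this average only covers days with at least one hit.
    average = sum(hits.values()) / len(hits)
    return average, hits.most_common(1)[0]


# Hypothetical sample lines; replace with lines read from your own log file.
sample = [
    '66.249.66.1 - - [08/Aug/2024:10:00:00 +0000] "GET /beds/oak HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '66.249.66.1 - - [08/Aug/2024:10:05:00 +0000] "GET /beds/pine HTTP/1.1" 200 498 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '203.0.113.9 - - [09/Aug/2024:09:00:00 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/128.0"',
    '66.249.66.1 - - [09/Aug/2024:11:00:00 +0000] "GET /beds/oak HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
]
average, peak = summarize(googlebot_hits_per_day(sample))
print(f"average per day: {average}, busiest day: {peak}")
```

Keep in mind that user agents can be spoofed, so log-based counts are only a rough companion to the Crawl Stats report and may not match it exactly.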

There are many reasons for this variance. Site size is one factor. This site is large–it lists thousands of beds and features a product inventory that gets updated regularly. Google tends to recrawl big, frequently updated sites multiple times per day.

The low frequency in June and July reflects a period when Google applied manual actions to my site. Since August, those actions have been removed by Google. I’ve also redoubled my marketing efforts, resulting in more inbound links pointing to my site–and more crawls from Google.

That increased crawl rate has been critical in getting my site back up in the rankings after June’s manual actions. During this period I implemented site-wide canonical tags to eliminate duplicate content in an effort to get more of my products organically listed in Google’s search. With over a thousand listed products, getting hundreds of crawls a day helped my SEO efforts pay off.
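
For readers who haven’t used canonical tags, here is a rough Python sketch of how one might be generated for a product page. It is not the code used on the bed site. It assumes that query strings and fragments on your URLs only carry sorting or tracking information and never identify a different product, which won’t hold for every site. The function name and the example URL are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url):
    """Build a <link rel="canonical"> tag that drops the query string and fragment."""
    parts = urlsplit(url)
    # Keep scheme, host and path; discard the query string and the fragment.
    clean = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(clean, quote=True)}">'


# Hypothetical product URL with sorting and tracking parameters attached.
print(canonical_link_tag("https://example.com/beds/oak-frame?sort=price&ref=email#reviews"))
```

The resulting tag goes in the page’s head section, so that every variant of the URL points Google at a single preferred address.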

Getting Google to crawl more often

This is what most people really want to know when they ask how often the big G crawls their site.

The fact is, while some sites get crawled multiple times a day, others won’t get crawled for a month at a time. This can be incredibly frustrating as you continue to add to your site but don’t see the changes reflected in Google’s search results for weeks.

There are multiple ways to encourage Google to crawl your site more frequently.

1. Improve site load times and reduce server errors

Google’s algorithms are more likely to crawl your site if it loads quickly and doesn’t serve up a lot of errors and bad pages.

If your site has connectivity issues, Google will be less likely to crawl your site often. This is why having a good host with reliable servers is crucial.

Page load times also have a bearing on how often Google crawls your site. There are multiple ways to optimise page load times, but they are beyond the scope of this article. Crawl errors, on the other hand, are easy to check for yourself, so you can see where the problem is.

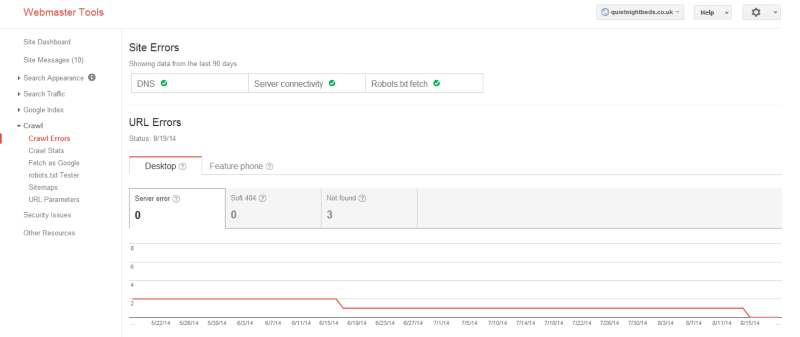

The Crawl Errors report is located in the left menu under the Crawl section of your Webmaster Tools. As you can see, my site looks pretty healthy as far as connectivity is concerned (indicated under the Site Errors heading). From the URL Errors graph, you can see there were two server errors in May and one error in June, and the site is now returning zero errors. This also corresponds with the increased crawl frequency in August, once my server errors had been reduced to zero.
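
Your own server logs can show which pages are producing those errors. The Python sketch below is an illustration only: it assumes combined-format access logs, treats any line mentioning “Googlebot” as a Googlebot request, and uses a hypothetical function name and sample data. It tallies 5xx responses per requested path.

```python
import re
from collections import Counter

# Captures the requested path and the status code from a combined-format log line.
REQUEST_PATTERN = re.compile(r'"(?:GET|HEAD|POST) (\S+) [^"]*" (\d{3}) ')


def server_errors_for_googlebot(lines):
    """Count 5xx responses per path for requests that identify as Googlebot."""
    errors = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = REQUEST_PATTERN.search(line)
        if match and match.group(2).startswith("5"):
            errors[match.group(1)] += 1
    return errors


# Hypothetical sample lines; replace with lines read from your own log file.
error_sample = [
    '66.249.66.1 - - [12/May/2024:10:00:00 +0000] "GET /beds/oak HTTP/1.1" 503 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '66.249.66.1 - - [12/May/2024:10:01:00 +0000] "GET /beds/pine HTTP/1.1" 200 498 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '203.0.113.9 - - [12/May/2024:10:02:00 +0000] "GET /beds/oak HTTP/1.1" 500 0 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/128.0"',
]
print(server_errors_for_googlebot(error_sample))
```

A path that shows up repeatedly here is a good first candidate for investigation.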

2. Update your site frequently

Another way to increase how often Google crawls your site is by updating it frequently.

Google loves regularly updated sites and will reward your efforts by coming back often, making sure the site is fully indexed in its search.

This is straightforward for news sites, but can be tricky for retail sites, such as mine in the above example. Products rarely change–certainly not as often as the news–and it’s difficult for businesses to get around this.

That’s why having a blog can be so important. Blog content can be updated as often as you want. Regularly adding new material will make Google come back and recrawl your site.

3. Create more inbound links

This is one way of increasing your crawl rate that can get you into a lot of trouble if done wrong.

Google frowns on what it considers “inorganic” inbound links to your site, which it treats as spam.

If you try to game Google by buying links, or if you have too many low quality links pointing to your site (such as links from adult or gambling websites), you may find that Google’s spiders run from your site and never return.

However, it’s undeniable that the more authoritative links you have from other websites pointing to your site, the more likely Google is to recrawl it. With newer and fresher links, Google will increase its crawl frequency.

4. Publish a sitemap

There’s a debate as to how effective this strategy is in terms of how often your site is crawled.

Google considers a whole range of factors when determining when to re-crawl your site, and the sitemap is a minor factor in the equation. Site update frequency, inbound links and page load times are much more important.

But by submitting a sitemap to Google, you give its spiders the full layout of your site, making the site much easier to crawl.

What’s more, some sitemap generators let you specify how frequently you plan on changing or adding to the content on your site.

Although this alone won’t influence the recrawl rate, if you actually update the site as often as you say you intend to, that will have an impact on how often it’s crawled.

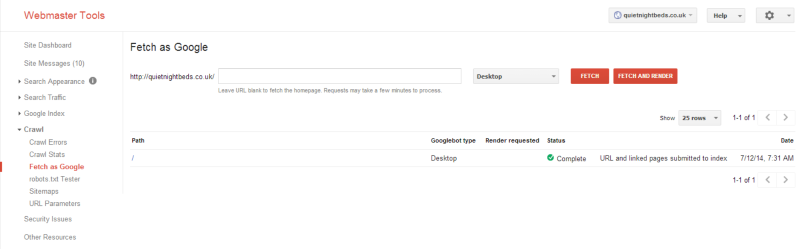
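
If you’d rather build the file yourself than use a generator, the sketch below shows one way to do it in Python with the standard library. It follows the sitemaps.org XML format, where the optional changefreq element holds the update frequency mentioned above. The function name, URLs and dates are placeholders, and the sketch leaves out parts of the format (such as priority and the 50,000-URL limit per file) that a real generator would handle.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Values the sitemap protocol accepts for <changefreq>.
ALLOWED_CHANGEFREQ = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod, changefreq) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, changefreq in pages:
        if changefreq not in ALLOWED_CHANGEFREQ:
            raise ValueError(f"unsupported changefreq: {changefreq}")
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        ET.SubElement(url, "changefreq").text = changefreq
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages: a blog that changes daily and a product page that rarely does.
print(build_sitemap([
    ("https://example.com/blog/", "2024-08-08", "daily"),
    ("https://example.com/beds/oak-frame", "2024-06-01", "monthly"),
]))
```

Save the output as sitemap.xml at the root of your site and submit its URL in Webmaster Tools.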

5. Fetch as Google

A final way to get Google to recrawl your site is to directly request a crawl by submitting a URL in your Webmaster Tools. Under the Crawl menu on the left you’ll see a link for Fetch as Google.

This option allows you to force Google to recrawl your site by entering the full URL to any page in the space provided. This yields almost instant results and is one of the most surefire ways of letting Google know you’ve updated your site.

Google limits you to 10 index submissions per month, per account. If you host a number of client sites in the same Webmaster Tools account, make sure to use your submissions sparingly.

Start by understanding your Crawl Stats

There are clearly many factors that influence how often Google crawls your site.

Trying to understand how these factors influence your rankings starts with getting a clear picture using the Crawl Stats in your Webmaster Tools.

These reports will give you an idea of how you can improve your crawl rate, whether that’s upgrading your servers, submitting a sitemap or increasing your site’s number of inbound links.

Frequently Asked Questions (FAQs) about Increasing Search Traffic and Site Recrawling

How often does Google recrawl websites?

Google’s recrawling frequency varies depending on the website. High-traffic websites with frequently updated content may be recrawled several times a day, while smaller, less active sites might only be recrawled every few weeks. However, you can request Google to recrawl your site more frequently by using Google Search Console’s URL Inspection tool.

How can I get Google to recrawl my site more often?

There are several strategies to encourage Google to recrawl your site more frequently. These include regularly updating your content, improving your site’s loading speed, and ensuring your site is mobile-friendly. You can also use the URL Inspection tool in Google Search Console to request a recrawl of specific pages.

What is the importance of XML sitemaps in site recrawling?

XML sitemaps are crucial for site recrawling as they provide search engines with a roadmap of your website, making it easier for them to find and index your content. You can submit your sitemap to Google via the Search Console to ensure all your pages are crawled and indexed.

How does site speed affect Google’s recrawling rate?

Site speed is a significant factor in Google’s recrawling rate. If your site loads quickly, Google can crawl more pages within its allocated crawl budget, potentially leading to more frequent recrawling. Therefore, optimizing your site’s speed can help increase your search traffic.

How does mobile-friendliness impact site recrawling?

With Google’s mobile-first indexing, the search engine primarily uses the mobile version of your site for indexing and ranking. If your site isn’t mobile-friendly, it may not be crawled as frequently or rank as highly in search results, potentially reducing your search traffic.

Can broken links affect my site’s recrawling rate?

Yes, broken links can negatively impact your site’s recrawling rate. They can lead to crawl errors, causing Google to spend its crawl budget on non-existent pages instead of your actual content. Regularly checking and fixing broken links can help improve your site’s recrawling rate and search traffic.

How can I check if Google has recrawled my site?

You can check if Google has recrawled your site by using the URL Inspection tool in Google Search Console. This tool provides information about the last crawl date, any crawl errors, and the current index status of your page.

Does social media activity influence site recrawling?

While Google has stated that social signals aren’t a direct ranking factor, a strong social media presence can indirectly influence site recrawling. Regularly shared content can attract more traffic, potentially signaling to Google that your site is worth recrawling more frequently.

Can I control which pages Google recrawls on my site?

Yes, you can use the robots.txt file to control which pages Google crawls on your site. However, it’s important to use this tool carefully, as blocking important pages can negatively impact your site’s visibility in search results.
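
Before publishing a robots.txt change, it can help to test which URLs it blocks. The sketch below uses Python’s standard urllib.robotparser module. The rules and URLs are hypothetical, and Python’s parser may not interpret every rule (wildcards in particular) exactly the way Google’s crawler does, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def allowed_for_googlebot(robots_txt, url):
    """Report whether the given robots.txt text lets Googlebot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical rules: keep crawlers out of the cart and internal search pages.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

print(allowed_for_googlebot(SAMPLE_ROBOTS, "https://example.com/beds/oak-frame"))
print(allowed_for_googlebot(SAMPLE_ROBOTS, "https://example.com/cart/checkout"))
```

With these sample rules, the product page is allowed and the cart page is blocked.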

How does site structure affect site recrawling?

A well-structured site makes it easier for Google to crawl and index your content. Using clear navigation, logical URL structure, and internal linking can help guide Google’s crawlers through your site, potentially leading to more frequent recrawling and increased search traffic.

Jai Paul is a programmer and tech writer. Besides his freelance writing, he spends his time running several online ventures. He consults on all things related to web development.

Published in AI, Computing, Content Marketing, Email Marketing, Entrepreneur, Low Code, Marketing, SEO & SEM · October 6, 2021